Feasibility Analysis: Replacing ELK with ClickHouse


Introduction

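The common open-source log solution on the market is:

- filebeat->kafka->logstash->elasticsearch->kibana

However, because Elasticsearch's storage footprint is very large, the following alternative is also available:

- filebeat->kafka->gohangout->clickhouse->redash+grafana

Both pipelines grew out of the ecosystems of their respective storage engines, so the comparison focuses on storage.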

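Test Environment Deployment

The test environment consists of 4 hosts:

One host (10.210.36.7) runs filebeat, ZooKeeper, Kafka, Logstash and gohangout.

The remaining three hosts (10.210.36.23, 10.210.36.28 and 10.210.36.40) each run both Elasticsearch and ClickHouse.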

Kafka and ZooKeeper

$ wget 10.210.36.6:6789/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

$ yum install -y docker-ce-19.03.8

$ systemctl start docker

$ docker run -d --name zookeeper -p 2181:2181 -t wurstmeister/zookeeper

$ docker run -d --name kafka --network host --env KAFKA_ZOOKEEPER_CONNECT=10.210.36.7:2181 --env KAFKA_ADVERTISED_HOST_NAME=10.210.36.7 --env KAFKA_ADVERTISED_PORT=9092 wurstmeister/kafka:latest

# Kafka broker setting that needs to be configured:

message.max.bytes = 1000000000
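The size limits along the pipeline have to agree with each other. The check below is a minimal Python sketch using the three values configured in this document (filebeat `max_message_bytes`, the broker's `message.max.bytes` and gohangout's `max.partition.fetch.bytes`). The two rules it encodes, that the producer limit should not exceed the broker limit and that the consumer fetch size should not be smaller than the largest message, are a conservative rule of thumb assumed here, not a complete description of Kafka's behaviour.

```python
# Size limits taken from the configs in this document (bytes).
FILEBEAT_MAX_MESSAGE_BYTES = 10_000_000        # filebeat output.kafka.max_message_bytes
KAFKA_MESSAGE_MAX_BYTES = 1_000_000_000        # broker message.max.bytes
GOHANGOUT_MAX_PARTITION_FETCH = 10_485_760     # gohangout max.partition.fetch.bytes


def check_limits(producer_max: int, broker_max: int, consumer_fetch: int) -> list:
    """Return a list of problems; an empty list means the limits line up.

    Rule of thumb assumed here, not Kafka's exact semantics.
    """
    problems = []
    if producer_max > broker_max:
        problems.append("producer may send messages the broker rejects")
    if consumer_fetch < producer_max:
        problems.append("consumer fetch size is smaller than the largest message")
    return problems


print(check_limits(FILEBEAT_MAX_MESSAGE_BYTES, KAFKA_MESSAGE_MAX_BYTES,
                   GOHANGOUT_MAX_PARTITION_FETCH))
```

With the values used in this test the function returns an empty list.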

filebeat

# Install

$ wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.15.2-linux-x86_64.tar.gz

$ tar xzf filebeat-7.15.2-linux-x86_64.tar.gz && cd filebeat-7.15.2-linux-x86_64

# Configure

$ vi log.yml

filebeat.inputs:
- type: log
  encoding: utf-8
  enabled: true
  paths:
    - /root/riderordermsg/access.2021-11-*
  fields:
    project_topic: rider-order-msg
    zone: hq
  fields_under_root: true
  # Lines that do not start with rider-order-msg are appended to the previous line
  multiline:
    pattern: '^rider-order-msg'
    negate: true
    match: after

output.kafka:
  hosts: ["10.210.36.7:9092"]
  # fields_under_root puts project_topic at the top level of the event
  topic: "%{[project_topic]}"
  partition.round_robin:
    reachable_only: false
  max_message_bytes: 10000000
  # codec.format:
  #   string: '%{[message]}'

# Start

$ ./filebeat -c log.yml
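With `negate: true` and `match: after`, filebeat appends every line that does not match `^rider-order-msg` to the preceding line that does, so a multi-line entry such as a stack trace stays in one Kafka message. The Python sketch below only illustrates that grouping rule on an in-memory list; it is not filebeat's implementation, the sample lines are made up, and the handling of a leading unmatched line (it simply starts its own event here) is an assumption.

```python
import re

# Same pattern as multiline.pattern in log.yml.
START = re.compile(r"^rider-order-msg")


def group_multiline(lines):
    """Group lines like negate: true + match: after.

    A line matching START begins a new event; any other line is appended,
    newline-joined, to the event currently being built.
    """
    events = []
    for line in lines:
        if START.match(line) or not events:
            # Assumption: an unmatched line with no preceding event starts its own event.
            events.append(line)
        else:
            events[-1] += "\n" + line
    return events


# Made-up sample input.
sample = [
    "rider-order-msg|a",
    "java.lang.Exception: boom",
    "\tat Foo.bar",
    "rider-order-msg|b",
]
events = group_multiline(sample)
```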

ClickHouse

# Install

export LATEST_VERSION="21.10.2.15"

curl -O https://repo.clickhouse.com/tgz/stable/clickhouse-common-static-$LATEST_VERSION.tgz

curl -O https://repo.clickhouse.com/tgz/stable/clickhouse-common-static-dbg-$LATEST_VERSION.tgz

curl -O https://repo.clickhouse.com/tgz/stable/clickhouse-server-$LATEST_VERSION.tgz

curl -O https://repo.clickhouse.com/tgz/stable/clickhouse-client-$LATEST_VERSION.tgz

tar -xzvf clickhouse-common-static-$LATEST_VERSION.tgz

sudo clickhouse-common-static-$LATEST_VERSION/install/doinst.sh

tar -xzvf clickhouse-common-static-dbg-$LATEST_VERSION.tgz

sudo clickhouse-common-static-dbg-$LATEST_VERSION/install/doinst.sh

tar -xzvf clickhouse-server-$LATEST_VERSION.tgz

# This step prompts for the default user's password, among other things

sudo clickhouse-server-$LATEST_VERSION/install/doinst.sh

tar -xzvf clickhouse-client-$LATEST_VERSION.tgz

sudo clickhouse-client-$LATEST_VERSION/install/doinst.sh

# Configure

chmod 644 /etc/clickhouse-server/config.xml

vi /etc/clickhouse-server/config.xml

<listen_host>0.0.0.0</listen_host>

<zookeeper>
    <node>
        <host>10.210.36.7</host>
        <port>2181</port>
    </node>
</zookeeper>

<!-- Inside the existing <remote_servers> section: three shards, one replica each -->
<shard3replica1>
    <shard>
        <replica>
            <host>10.210.36.23</host>
            <port>9000</port>
        </replica>
    </shard>
    <shard>
        <replica>
            <host>10.210.36.28</host>
            <port>9000</port>
        </replica>
    </shard>
    <shard>
        <replica>
            <host>10.210.36.40</host>
            <port>9000</port>
        </replica>
    </shard>
</shard3replica1>

# Start

systemctl start clickhouse-server.service

# Create tables

clickhouse-client -h 0.0.0.0

# Check that ZooKeeper is reachable

SELECT * FROM system.zookeeper where path = '/'

# Distributed table: reads and writes are routed to rider_order_msg_local on every shard

CREATE TABLE rider_order_msg_all ON CLUSTER shard3replica1 ( AppName String, EventTime DateTime, LogId String, XB3TraceId String, Host String, Method String, Url String, ResponseTime Int64, Status Int64, Bytes Int64, Parame String, Uid String, LocalIp String, Cost String, Header String, Body String ) ENGINE = Distributed(shard3replica1, default, rider_order_msg_local, rand())

# Local MergeTree table, created on every node of the cluster via distributed DDL (ON CLUSTER)

CREATE TABLE rider_order_msg_local ON CLUSTER shard3replica1 ( AppName String, EventTime DateTime, LogId String, XB3TraceId String, Host String, Method String, Url String, ResponseTime Int64, Status Int64, Bytes Int64, Parame String, Uid String, LocalIp String, Cost String, Header String, Body String) ENGINE = MergeTree() ORDER BY EventTime
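The Distributed table above uses `rand()` as its sharding key. According to the ClickHouse documentation for the Distributed engine, a row's shard is chosen by taking the sharding expression modulo the total shard weight and mapping the remainder onto the shards' weight intervals. The Python sketch below simulates that rule for three shards with weight 1 each; the shard names are hypothetical and this is an illustration, not ClickHouse code.

```python
import random

# Hypothetical names for the three shards of shard3replica1, each with weight 1.
SHARDS = [("shard1", 1), ("shard2", 1), ("shard3", 1)]


def pick_shard(key: int, shards) -> str:
    """Choose a shard: remainder of key / total weight, mapped onto weight intervals."""
    total = sum(weight for _, weight in shards)
    remainder = key % total
    upper = 0
    for name, weight in shards:
        upper += weight
        if remainder < upper:
            return name
    raise RuntimeError("unreachable: remainder is always below the total weight")


def simulate_inserts(n_rows: int, shards, seed: int = 0) -> dict:
    """Count rows per shard when the sharding key is rand() (a random UInt32)."""
    rng = random.Random(seed)
    counts = {name: 0 for name, _ in shards}
    for _ in range(n_rows):
        counts[pick_shard(rng.getrandbits(32), shards)] += 1
    return counts
```

Because `rand()` ignores row content, rows spread evenly across the shards but related rows (for example those sharing a LogId) are scattered; a deterministic key such as a hash of a column would keep them together at the cost of possible skew.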

Elasticsearch

# Install

useradd es

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.15.2-linux-x86_64.tar.gz

chown es:es elasticsearch-7.15.2-linux-x86_64.tar.gz

mv elasticsearch-7.15.2-linux-x86_64.tar.gz /home/es/

echo "vm.max_map_count=262144" >> /etc/sysctl.conf

sysctl -p

su - es

tar xf elasticsearch-7.15.2-linux-x86_64.tar.gz

# Configure

vi /home/es/elasticsearch-7.15.2/config/jvm.options

-Xms16g

-Xmx16g

vi /home/es/elasticsearch-7.15.2/config/elasticsearch.yml

cluster.name: sfjswl-log
# node.name must be unique on each of the three nodes
node.name: log-es-01
node.master: true
node.data: true
node.ingest: false
node.ml: false
cluster.remote.connect: true
path.data: /home/es/elasticsearch-7.15.2/data/
path.logs: /home/es/elasticsearch-7.15.2/logs/
# Requires an unlimited memlock limit (ulimit -l) for the es user, otherwise the bootstrap check fails
bootstrap.memory_lock: true
http.cors.allow-origin: "*"
http.cors.allow-methods: OPTIONS, HEAD, GET, POST, PUT, DELETE
http.cors.allow-headers: X-Requested-With, X-Auth-Token, Content-Type, Content-Length, Authorization
network.host: 0.0.0.0
http.cors.enabled: true
http.port: 9200
discovery.seed_hosts: ["10.210.36.23", "10.210.36.28", "10.210.36.40"]
cluster.initial_master_nodes: ["log-es-01"]
cluster.routing.allocation.same_shard.host: true
xpack.security.enabled: false
xpack.security.transport.ssl.enabled: false
xpack.monitoring.exporters.my_local:
  type: local
  use_ingest: false

cd /home/es/elasticsearch-7.15.2/

# Start

./bin/elasticsearch

curl 'http://127.0.0.1:9200/_cluster/health?pretty'

# Index template

PUT _index_template/rider-order-msg
{
  "index_patterns": ["rider-order-msg*"],
  "template": {
    "settings": {
      "number_of_shards": 3,
      "number_of_replicas": 1
    },
    "mappings": {
      "properties": {
        "ResponseTime": { "type": "integer" },
        "Status": { "type": "integer" },
        "Bytes": { "type": "integer" }
      }
    }
  }
}
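Each daily index created from this template gets 3 primary shards plus 1 replica of each, so every document is stored twice and each index adds 6 shard copies to the cluster, about 2 per node on the three data nodes. The arithmetic is spelled out in the Python sketch below; the 30-day retention is a hypothetical figure, and even allocation across nodes is assumed.

```python
PRIMARIES = 3          # number_of_shards in the template
REPLICAS = 1           # number_of_replicas in the template
DATA_NODES = 3         # the three Elasticsearch hosts
RETENTION_DAYS = 30    # hypothetical: one rider-order-msg-YYYY.MM.dd index per day


def shard_copies(primaries: int, replicas: int) -> int:
    """Primary shards plus all of their replica copies."""
    return primaries * (1 + replicas)


def copies_per_node(primaries: int, replicas: int, nodes: int) -> float:
    """Average shard copies per node, assuming Elasticsearch balances them evenly."""
    return shard_copies(primaries, replicas) / nodes


per_index = shard_copies(PRIMARIES, REPLICAS)
total = per_index * RETENTION_DAYS   # shard copies held for the whole retention window
```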

gohangout

# Install

$ wget https://github.com/childe/gohangout/releases/download/v1.7.8/gohangout-linux-x64-a4fcf52

$ chmod +x gohangout-linux-x64-a4fcf52

# Configure

$ vi gohangout.yml

inputs:
  - Kafka:
      decorate_events: false
      topic:
        "rider-order-msg1": 1
      codec: json
      consumer_settings:
        bootstrap.servers: "10.210.36.7:9092"
        group.id: clickhouse
        max.partition.fetch.bytes: '10485760'
        auto.commit.interval.ms: '5000'
        from.beginning: 'true'
      messages_queue_length: 10

filters:
  # Split message on "|" into the 16 named fields
  - Split:
      src: message
      sep: "|"
      fields: ['AppName','EventTime','LogId','XB3TraceId','Host','Method','Url','ResponseTime','Status','Bytes','Parame','Uid','LocalIp','Cost','Header','Body']
      ignore_blank: true
      overwrite: true
  # Convert the numeric columns, falling back to 0 on failure
  - Convert:
      fields:
        ResponseTime:
          to: int
          setto_if_fail: 0
        Status:
          to: int
          setto_if_fail: 0
        Bytes:
          to: int
          setto_if_fail: 0

outputs:
  - Clickhouse:
      table: 'default.rider_order_msg_all'
      conn_max_life_time: 1800
      username: default
      hosts:
        - 'tcp://10.210.36.23:9000'
        - 'tcp://10.210.36.28:9000'
        - 'tcp://10.210.36.40:9000'
      fields: ['AppName','EventTime','LogId','XB3TraceId','Host','Method','Url','ResponseTime','Status','Bytes','Parame','Uid','LocalIp','Cost','Header','Body']
      bulk_actions: 1000
      flush_interval: 30
      concurrent: 1

# Start

$ ./gohangout-linux-x64-a4fcf52 --config gohangout.yml
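The Split and Convert filters turn one pipe-delimited `message` into the 16 ClickHouse columns and coerce three of them to integers, falling back to 0 (`setto_if_fail: 0`). The Python function below approximates that data flow to make it concrete; it is not gohangout code, the sample line is made up, and treating a value count that differs from the field count as a failed parse is an assumption. One consequence worth checking against real data: a `Header` or `Body` value that itself contains `|` yields more values than fields.

```python
FIELDS = ['AppName', 'EventTime', 'LogId', 'XB3TraceId', 'Host', 'Method', 'Url',
          'ResponseTime', 'Status', 'Bytes', 'Parame', 'Uid', 'LocalIp', 'Cost',
          'Header', 'Body']
INT_FIELDS = ('ResponseTime', 'Status', 'Bytes')   # the Convert targets


def split_and_convert(message):
    """Approximate Split (sep "|") followed by Convert (to: int, setto_if_fail: 0)."""
    values = message.split("|")
    if len(values) != len(FIELDS):
        return None  # assumption: a value/field count mismatch counts as a failed split
    event = dict(zip(FIELDS, values))
    for name in INT_FIELDS:
        try:
            event[name] = int(event[name])
        except ValueError:
            event[name] = 0  # setto_if_fail: 0
    return event


# Made-up sample line with 16 values.
line = ("rider-order-msg|2021-11-01 10:00:00|id1|trace1|host1|GET|/api/order|"
        "35|200|512|p|u1|10.0.0.1|35ms|h|b")
event = split_and_convert(line)
```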

logstash

# Install

$ wget https://artifacts.elastic.co/downloads/logstash/logstash-7.15.2-linux-x86_64.tar.gz

$ tar xzf logstash-7.15.2-linux-x86_64.tar.gz && cd logstash-7.15.2

# Configure

$ vi config/pipelines.yml

- pipeline.id: whytest
  path.config: "/root/logstash/pipelines/whytest.yml"
  pipeline.workers: 1
  pipeline.batch.size: 2500
  pipeline.batch.delay: 100

vi pipelines/whytest.yml

input {
  kafka {
    consumer_threads => 1
    group_id => "elk"
    topics => ["rider-order-msg"]
    bootstrap_servers => "10.210.36.7:9092"
  }
}

filter {
  # Split message on "|" and copy the pieces into the named fields
  ruby {
    init => "@kname = ['AppName','EventTime','LogId','XB3TraceId','Host','Method','Url','ResponseTime','Status','Bytes','Parame','Uid','LocalIp','Cost','Header','Body']"
    code => "
      new_event = LogStash::Event.new(Hash[@kname.zip(event.get('message').split('|'))])
      event.append(new_event)
    "
  }
  # Use EventTime as the event's @timestamp
  date {
    match => ["EventTime", "yyyy-MM-dd HH:mm:ss.SSS", "ISO8601"]
    target => "@timestamp"
  }
  mutate {
    remove_field => ["message"]
  }
}

output {
  elasticsearch {
    action => "index"
    hosts => ["10.210.36.23","10.210.36.28","10.210.36.40"]
    # One index per day, dated from @timestamp
    index => "rider-order-msg-%{+YYYY.MM.dd}"
    retry_max_interval => 2
    timeout => 2
  }
}

# Start

$ ./bin/logstash
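The `date` filter writes the parsed `EventTime` into `@timestamp`, and `%{+YYYY.MM.dd}` in the index name is formatted from `@timestamp` in UTC. When `EventTime` is local time and the date filter has no `timezone` option, Logstash interprets it in the host's default timezone, so events logged shortly after local midnight land in the previous day's index. The Python sketch below reproduces that calculation; the UTC+8 host timezone is an assumption and the timestamps are made up.

```python
from datetime import datetime, timedelta, timezone

# Assumption: the Logstash host's default timezone is UTC+8 and the date filter
# has no explicit timezone option, so EventTime is read as UTC+8 local time.
HOST_TZ = timezone(timedelta(hours=8))


def daily_index(event_time: str) -> str:
    """Index name that rider-order-msg-%{+YYYY.MM.dd} would produce for an EventTime."""
    # Joda "yyyy-MM-dd HH:mm:ss.SSS" corresponds to this strptime format.
    local = datetime.strptime(event_time, "%Y-%m-%d %H:%M:%S.%f").replace(tzinfo=HOST_TZ)
    # @timestamp is stored in UTC, and the index date is taken from it.
    utc = local.astimezone(timezone.utc)
    return "rider-order-msg-" + utc.strftime("%Y.%m.%d")
```

Searches in Kibana filter on `@timestamp`, so they stay correct either way; the offset matters mainly when deleting or archiving indices by day.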

Comparison

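| Feature \ Project | Elasticsearch | ClickHouse |
|---|---|---|
| Implementation language | Java | C++ |
| Storage model | Document store | Columnar database |
| Distributed support | Sharding and replication | Sharding and replication |
| Scalability | High | Low |
| Write speed | Slow | Fast |
| CPU/memory usage | High | Low |
| Storage used (54 GB of logs imported) | High: 94 GB (174%) | Low: 23 GB (42.6%) |
| Exact-match query speed | Moderate | Fast |
| Fuzzy-match query speed | Fast | Slow |
| Access control | Supported | Supported |
| Query difficulty | Low | High |
| Visualization support | High | Low |
| Known users | Many | Ctrip |
| Maintenance difficulty | Low | High |
| Community activity | High | Moderate |
| Documentation | Complete | Fair |
| GitHub stars | 57.3K | 20.5K |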
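The percentages in the storage row are the on-disk size divided by the 54 GB of raw logs. The two setups in this test are not symmetric, though: the Elasticsearch template sets `number_of_replicas: 1`, while the ClickHouse cluster `shard3replica1` keeps a single replica per shard. The document does not say whether 94 GB includes the Elasticsearch replica copy; the Python sketch below reproduces the table's figures and, under the labelled assumption that it does, the single-copy figure for comparison.

```python
RAW_GB = 54   # log data imported into both systems
ES_GB = 94    # Elasticsearch disk usage after the import
CH_GB = 23    # ClickHouse disk usage after the import


def pct_of_raw(stored_gb: float, raw_gb: float = RAW_GB) -> float:
    """Stored size as a percentage of the raw log size, rounded to one decimal place."""
    return round(stored_gb / raw_gb * 100, 1)


es_pct = pct_of_raw(ES_GB)   # 174.1, shown as 174% in the table
ch_pct = pct_of_raw(CH_GB)   # 42.6
# Hypothetical: if ES_GB counts both the primary and the replica copy
# (number_of_replicas: 1), a single copy is roughly half of it.
es_single_copy_pct = pct_of_raw(ES_GB / 2)
```

Even under that assumption ClickHouse stays at roughly half of Elasticsearch's single-copy footprint, so the conclusion below holds; the assumption only narrows the gap.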

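In terms of storage footprint ClickHouse clearly comes out ahead, but the following problems must be solved before it can replace ELK:

- Table maintenance: every new table must specify the nodes that hold its replicas, so we have to maintain the mapping between tables and storage ourselves.

- Cluster expansion: rebalancing existing data is complicated, because the data has to be copied and attached to tables; the simpler option is to let old data expire.

- Querying: queries use a SQL dialect with its own set of functions, and fuzzy-match performance is poor, so developers have to filter on other fields to reduce the amount of data scanned.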
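The last point follows from how MergeTree reads data. A fuzzy condition such as `Body LIKE '%keyword%'` cannot use the primary index, so every granule is read; adding a condition on `EventTime`, the `ORDER BY` key of `rider_order_msg_local`, lets ClickHouse skip granules outside the time range. The Python sketch below is a simplified model (sorted integer timestamps, first and last value per granule, 8192 rows per granule as in ClickHouse's default `index_granularity`, hypothetical data), not ClickHouse's actual index code.

```python
def build_granules(sorted_times, granule_size=8192):
    """Cut sorted timestamps into granules, keeping each granule's first/last value and size.

    Simplified stand-in for MergeTree's sparse primary index on ORDER BY EventTime.
    """
    granules = []
    for start in range(0, len(sorted_times), granule_size):
        chunk = sorted_times[start:start + granule_size]
        granules.append((chunk[0], chunk[-1], len(chunk)))
    return granules


def rows_scanned(granules, lo=None, hi=None):
    """Rows read for lo <= EventTime <= hi; with no time filter every granule is read."""
    scanned = 0
    for first, last, size in granules:
        if lo is not None and last < lo:
            continue  # granule lies entirely before the range, skip it
        if hi is not None and first > hi:
            continue  # granule lies entirely after the range, skip it
        scanned += size
    return scanned


# Hypothetical data: 100,000 rows, one per second.
times = list(range(100_000))
granules = build_granules(times)
```

A substring search alone reads all 100,000 rows in this model, while the same search restricted to a 1,000-second window reads a single granule of 8,192 rows.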

ClickHouse Visualization Tools Compared

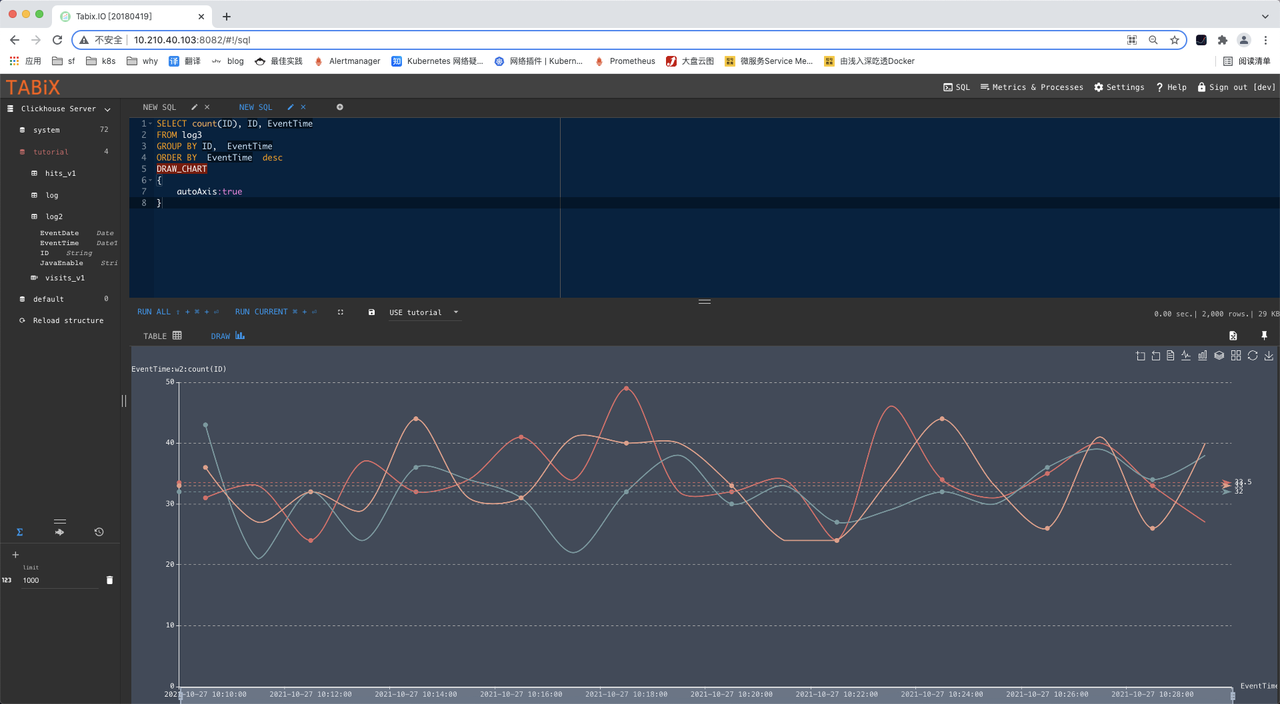

tabix

Installation

docker run -d -p 8082:80 spoonest/clickhouse-tabix-web-client

Its charting syntax takes considerable effort to learn, for example:

SELECT count(ID), ID, EventTime
FROM log3
GROUP BY ID, EventTime
ORDER BY EventTime desc
DRAW_CHART
{
    autoAxis:true
}

lighthouse

Offers only basic query functionality.

HouseOps

Must be compiled from source, and the build failed.

DBeaver

DBM

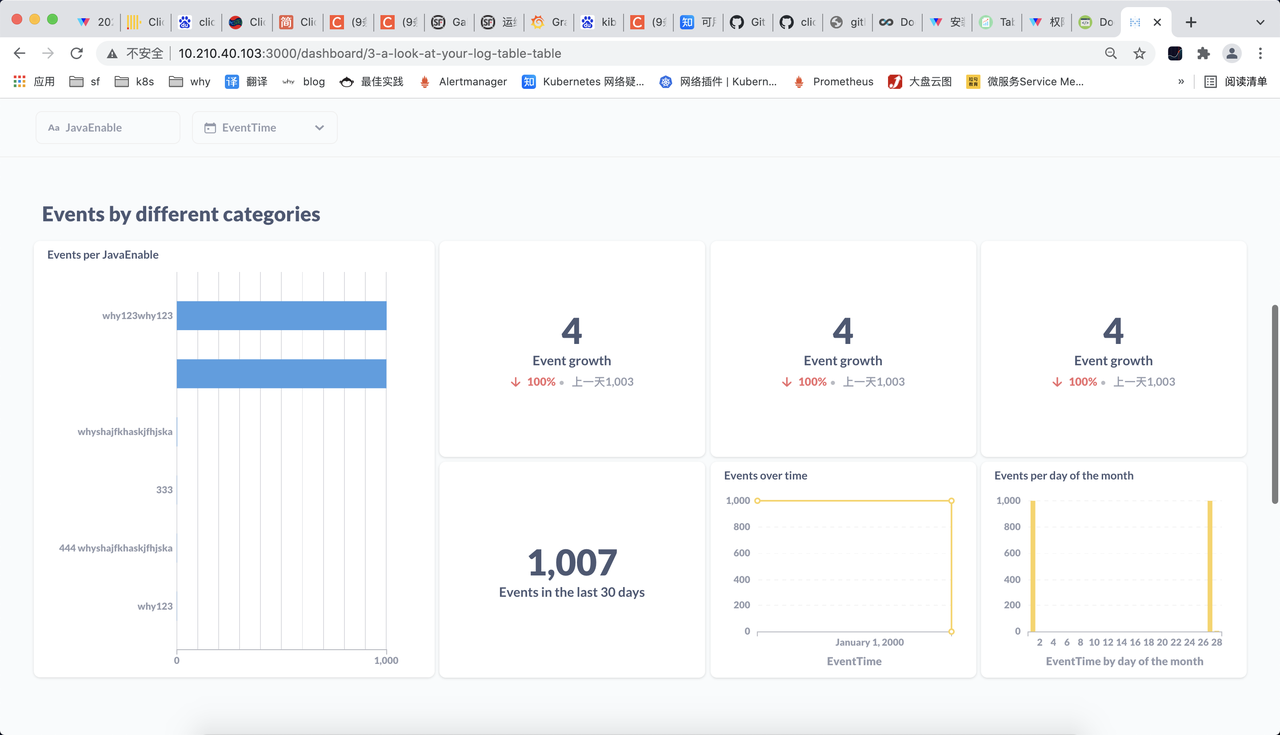

metabase

docker run -d -p 3000:3000 --name metabase metabase/metabase

docker exec -it metabase sh

cd /plugins

wget https://github.com/enqueue/metabase-clickhouse-driver/releases/download/0.7.3/clickhouse.metabase-driver.jar

exit

docker restart metabase

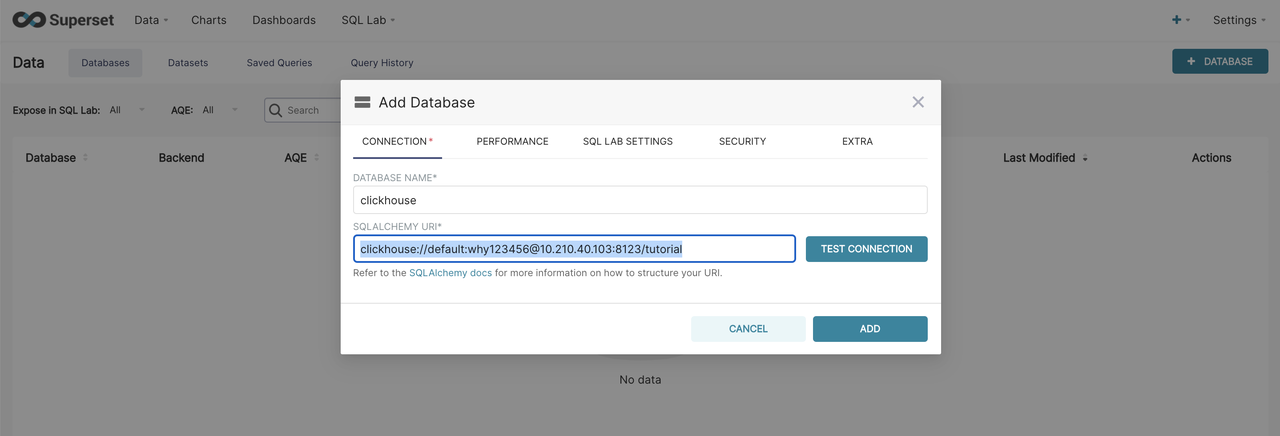

Superset

Installation

sudo curl -L "https://github.com/docker/compose/releases/download/1.24.1/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo chmod +x /usr/local/bin/docker-compose

sudo ln -s /usr/local/bin/docker-compose /usr/bin/docker-compose

docker-compose --version

git clone https://github.com/gangtao/clickhouse-client.git

cd clickhouse-client/superset/

docker-compose up

However, Superset could not connect to the ClickHouse database.

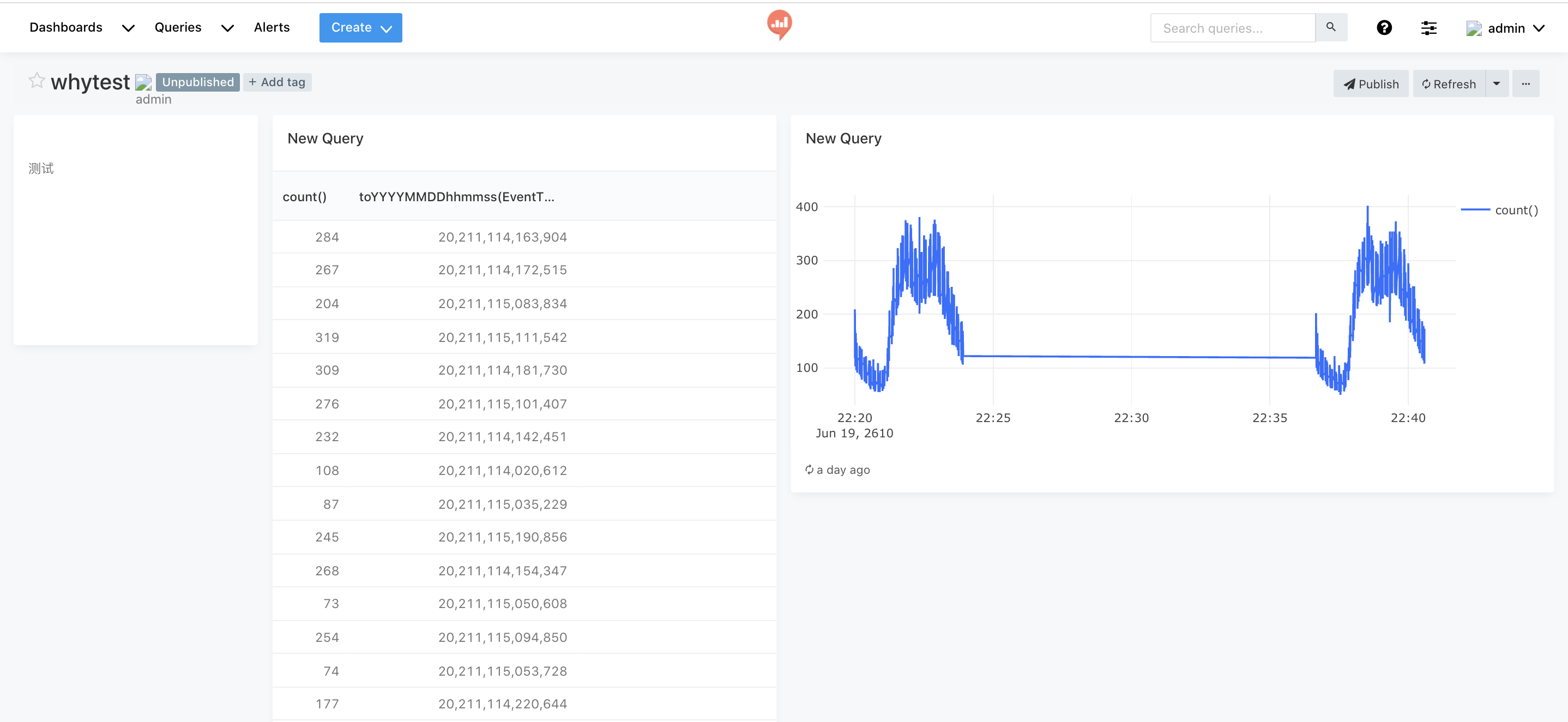

Redash

git clone https://github.com/getredash/setup.git

# Only run the following steps of setup.sh:

# create_directories

# create_config

sh setup.sh

docker-compose run --rm server create_db

docker-compose up -d

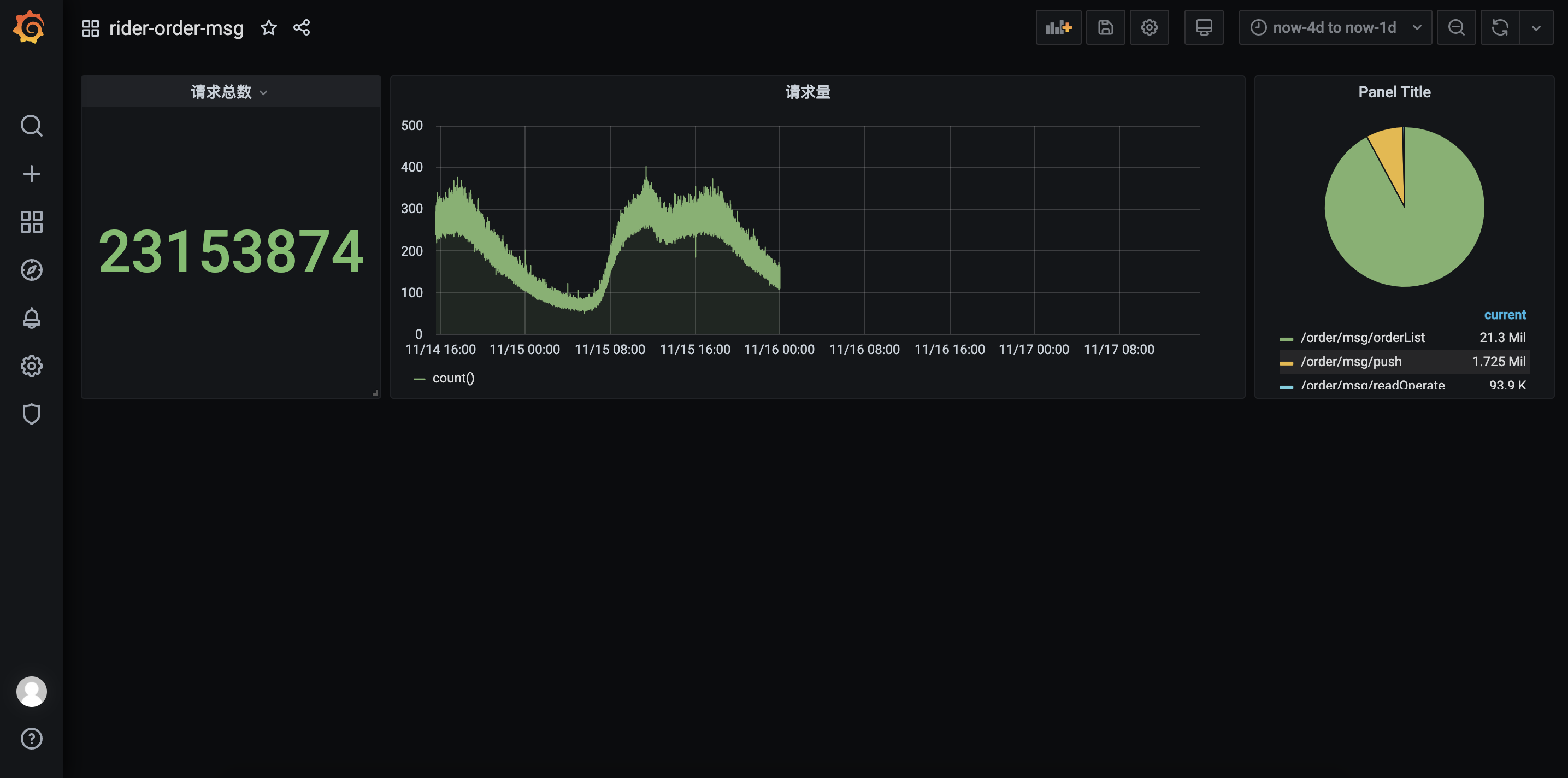

grafana

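# Port 3000 is also used by the metabase container above; if both run on the same host, change one mapping
docker run -d -p 3000:3000 --name grafana grafana/grafana:7.3.1

# Run the plugin commands below inside the container (docker exec -it grafana sh),
# then restart it with docker restart grafana

# ClickHouse plugin
# https://grafana.com/grafana/plugins/vertamedia-clickhouse-datasource/
grafana-cli plugins install vertamedia-clickhouse-datasource

# Pie chart panel
# https://grafana.com/grafana/plugins/grafana-piechart-panel/
wget -nv https://grafana.com/api/plugins/grafana-piechart-panel/versions/latest/download -O /tmp/grafana-piechart-panel.zip

unzip -q /tmp/grafana-piechart-panel.zip -d /tmp

mv /tmp/grafana-piechart-panel-* /var/lib/grafana/plugins/grafana-piechart-panel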

ls /var/lib/grafana/plugins/
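Redash and the Grafana ClickHouse plugin installed above typically reach ClickHouse through its HTTP interface, which listens on port 8123 by default and is exposed by the `listen_host` 0.0.0.0 setting in config.xml. Before configuring a data source, a plain HTTP query is a quick way to confirm that a node answers. The Python sketch below builds such a URL and can send it; the host is one of this document's ClickHouse nodes, port 8123 assumes the default `http_port` was not changed, and no credentials are sent (add them if the `default` user was given a password during installation).

```python
from urllib.parse import urlencode
from urllib.request import urlopen

CLICKHOUSE_HOST = "10.210.36.23"   # one of the ClickHouse nodes in this test
HTTP_PORT = 8123                   # assumption: default http_port


def query_url(sql: str, host: str = CLICKHOUSE_HOST, port: int = HTTP_PORT) -> str:
    """GET URL for ClickHouse's HTTP interface; the SQL goes in the 'query' parameter."""
    return f"http://{host}:{port}/?{urlencode({'query': sql})}"


def run_query(sql: str) -> str:
    """Send the query and return the raw text result (needs network access to the node)."""
    with urlopen(query_url(sql), timeout=10) as resp:
        return resp.read().decode("utf-8")
```

For example, `run_query("SELECT count() FROM default.rider_order_msg_all")` should return the total row count across all shards as plain text; the same host and port are what a Redash or Grafana data source would point at.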