cgroup实现方式

目录:

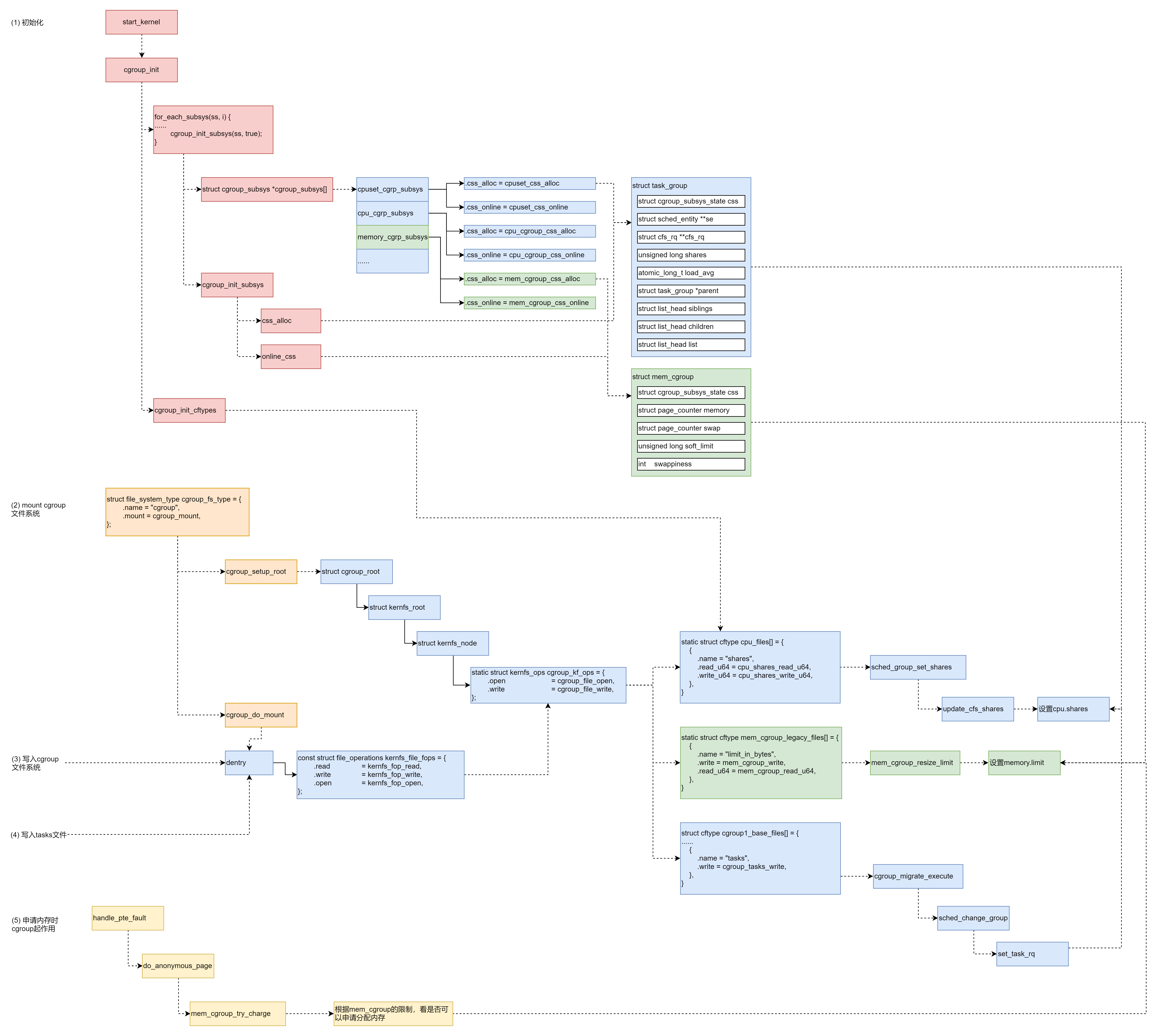

Cgroup的实现

摘自趣谈Linux操作系统的cgroup技术

在系统初始化的时候,cgroup也会进行初始化,在start_kernel中,cgroup_init_early和cgroup_init都会初始化

asmlinkage __visible void __init start_kernel(void)

{

......

cgroup_init_early();

......

cgroup_init();

......

}

在cgroup_init_early和cgroup_init中会有以下循环

for_each_subsys(ss, i) {

ss->id = i;

ss->name = cgroup_subsys_name[i];

......

cgroup_init_subsys(ss, true);

}

#define for_each_subsys(ss, ssid) \

for ((ssid) = 0; (ssid) < CGROUP_SUBSYS_COUNT && \

(((ss) = cgroup_subsys[ssid]) || true); (ssid)++)

for_each_subsys会循环cgroup_subsys数组

cgroup_subsys数组在宏SUBSYS中定义

#define SUBSYS(_x) [_x ## _cgrp_id] = &_x ## _cgrp_subsys,

struct cgroup_subsys *cgroup_subsys[] = {

#include <linux/cgroup_subsys.h>

};

#undef SUBSYS

数组中的项在cgroup_subsys.h中

//cgroup_subsys.h

#if IS_ENABLED(CONFIG_CPUSETS)

SUBSYS(cpuset)

#endif

#if IS_ENABLED(CONFIG_CGROUP_SCHED)

SUBSYS(cpu)

#endif

#if IS_ENABLED(CONFIG_CGROUP_CPUACCT)

SUBSYS(cpuacct)

#endif

#if IS_ENABLED(CONFIG_MEMCG)

SUBSYS(memory)

#endif

以上代码例如SUBSYS(cpu)其实是[cpu_cgrp_id] = &cpu_cgrp_subsys

cpuset_cgrp_subsys

struct cgroup_subsys cpuset_cgrp_subsys = {

.css_alloc = cpuset_css_alloc,

.css_online = cpuset_css_online,

.css_offline = cpuset_css_offline,

.css_free = cpuset_css_free,

.can_attach = cpuset_can_attach,

.cancel_attach = cpuset_cancel_attach,

.attach = cpuset_attach,

.post_attach = cpuset_post_attach,

.bind = cpuset_bind,

.fork = cpuset_fork,

.legacy_cftypes = files,

.early_init = true,

};

cpu_cgrp_subsys

struct cgroup_subsys cpu_cgrp_subsys = {

.css_alloc = cpu_cgroup_css_alloc,

.css_online = cpu_cgroup_css_online,

.css_released = cpu_cgroup_css_released,

.css_free = cpu_cgroup_css_free,

.fork = cpu_cgroup_fork,

.can_attach = cpu_cgroup_can_attach,

.attach = cpu_cgroup_attach,

.legacy_cftypes = cpu_files,

.early_init = true,

};

memory_cgrp_subsys

struct cgroup_subsys memory_cgrp_subsys = {

.css_alloc = mem_cgroup_css_alloc,

.css_online = mem_cgroup_css_online,

.css_offline = mem_cgroup_css_offline,

.css_released = mem_cgroup_css_released,

.css_free = mem_cgroup_css_free,

.css_reset = mem_cgroup_css_reset,

.can_attach = mem_cgroup_can_attach,

.cancel_attach = mem_cgroup_cancel_attach,

.post_attach = mem_cgroup_move_task,

.bind = mem_cgroup_bind,

.dfl_cftypes = memory_files,

.legacy_cftypes = mem_cgroup_legacy_files,

.early_init = 0,

};

所以在for_each_subsys的循环中,cgroup_subsys[]数组中的每个cgroup_subsys都会调用cgroup_init_subsys完成对cgroup_subsys的初始化

static void __init cgroup_init_subsys(struct cgroup_subsys *ss, bool early)

{

struct cgroup_subsys_state *css;

......

idr_init(&ss->css_idr);

INIT_LIST_HEAD(&ss->cfts);

/* Create the root cgroup state for this subsystem */

ss->root = &cgrp_dfl_root;

css = ss->css_alloc(cgroup_css(&cgrp_dfl_root.cgrp, ss));

......

init_and_link_css(css, ss, &cgrp_dfl_root.cgrp);

......

css->id = cgroup_idr_alloc(&ss->css_idr, css, 1, 2, GFP_KERNEL);

init_css_set.subsys[ss->id] = css;

......

BUG_ON(online_css(css));

......

}

这个初始化主要做两个事情

- 调用

cgroup_subsys的css_alloc函数创建一个cgroup_subsys_state - 调用

online_css,也就是css_online来激活cgroup

css_alloc对于cpu来说就是cpu_cgroup_css_alloc,会调用sched_create_group创建一个task_group,这个struct的第一项就是cgroup_subsys_state,所以是其的扩展

struct task_group {

struct cgroup_subsys_state css;

#ifdef CONFIG_FAIR_GROUP_SCHED

/* schedulable entities of this group on each cpu */

struct sched_entity **se;

/* runqueue "owned" by this group on each cpu */

struct cfs_rq **cfs_rq;

unsigned long shares;

#ifdef CONFIG_SMP

atomic_long_t load_avg ____cacheline_aligned;

#endif

#endif

struct rcu_head rcu;

struct list_head list;

struct task_group *parent;

struct list_head siblings;

struct list_head children;

struct cfs_bandwidth cfs_bandwidth;

};

这里还有sched_entity,用于调度的实体,所以task_group也是一个调度的实体

然后是online_css调用的cpu_cgroup_css_online,会调用sched_online_group->online_fair_sched_group

void online_fair_sched_group(struct task_group *tg)

{

struct sched_entity *se;

struct rq *rq;

int i;

for_each_possible_cpu(i) {

rq = cpu_rq(i);

se = tg->se[i];

update_rq_clock(rq);

attach_entity_cfs_rq(se);

sync_throttle(tg, i);

}

}

对每一个CPU,取出每个CPU的队列rq,也会取出task_group的sched_entity,然后通过attach_entity_cfs_rq将sched_entity添加到运行队列

对于内存,css_alloc就是mem_cgroup_css_alloc,会调用mem_cgroup_alloc创建mem_cgroup,其第一项也是cgroup_subsys_state

struct mem_cgroup {

struct cgroup_subsys_state css;

/* Private memcg ID. Used to ID objects that outlive the cgroup */

struct mem_cgroup_id id;

/* Accounted resources */

struct page_counter memory;

struct page_counter swap;

/* Legacy consumer-oriented counters */

struct page_counter memsw;

struct page_counter kmem;

struct page_counter tcpmem;

/* Normal memory consumption range */

unsigned long low;

unsigned long high;

/* Range enforcement for interrupt charges */

struct work_struct high_work;

unsigned long soft_limit;

......

int swappiness;

......

/*

* percpu counter.

*/

struct mem_cgroup_stat_cpu __percpu *stat;

int last_scanned_node;

/* List of events which userspace want to receive */

struct list_head event_list;

spinlock_t event_list_lock;

struct mem_cgroup_per_node *nodeinfo[0];

/* WARNING: nodeinfo must be the last member here */

};

在cgroup_init函数中,会调用cgroup_init_cftypes(NULL, cgroup1_base_files)来初始化cgroup文件类型cftype的操作函数,也就是将struct kernfs_ops *kf_ops设置为cgroup_kf_ops

struct cftype cgroup1_base_files[] = {

......

{

.name = "tasks",

.seq_start = cgroup_pidlist_start,

.seq_next = cgroup_pidlist_next,

.seq_stop = cgroup_pidlist_stop,

.seq_show = cgroup_pidlist_show,

.private = CGROUP_FILE_TASKS,

.write = cgroup_tasks_write,

},

}

static struct kernfs_ops cgroup_kf_ops = {

.atomic_write_len = PAGE_SIZE,

.open = cgroup_file_open,

.release = cgroup_file_release,

.write = cgroup_file_write,

.seq_start = cgroup_seqfile_start,

.seq_next = cgroup_seqfile_next,

.seq_stop = cgroup_seqfile_stop,

.seq_show = cgroup_seqfile_show,

};

然后创建cgroup文件系统,用于操作和配置cgroup

struct file_system_type cgroup_fs_type = {

.name = "cgroup",

.mount = cgroup_mount,

.kill_sb = cgroup_kill_sb,

.fs_flags = FS_USERNS_MOUNT,

};

当mount这个cgroup的时候会调用cgroup_mount->cgroup1_mount

struct dentry *cgroup1_mount(struct file_system_type *fs_type, int flags,

void *data, unsigned long magic,

struct cgroup_namespace *ns)

{

struct super_block *pinned_sb = NULL;

struct cgroup_sb_opts opts;

struct cgroup_root *root;

struct cgroup_subsys *ss;

struct dentry *dentry;

int i, ret;

bool new_root = false;

......

root = kzalloc(sizeof(*root), GFP_KERNEL);

new_root = true;

init_cgroup_root(root, &opts);

ret = cgroup_setup_root(root, opts.subsys_mask, PERCPU_REF_INIT_DEAD);

......

dentry = cgroup_do_mount(&cgroup_fs_type, flags, root,

CGROUP_SUPER_MAGIC, ns);

......

return dentry;

}

将cgroup组织为树形结构,是因为有cgroup_root,在init_cgroup_root会初始化这个cgroup_root

int cgroup_setup_root(struct cgroup_root *root, u16 ss_mask, int ref_flags)

{

LIST_HEAD(tmp_links);

struct cgroup *root_cgrp = &root->cgrp;

struct kernfs_syscall_ops *kf_sops;

struct css_set *cset;

int i, ret;

root->kf_root = kernfs_create_root(kf_sops,

KERNFS_ROOT_CREATE_DEACTIVATED,

root_cgrp);

root_cgrp->kn = root->kf_root->kn;

ret = css_populate_dir(&root_cgrp->self);

ret = rebind_subsystems(root, ss_mask);

......

list_add(&root->root_list, &cgroup_roots);

cgroup_root_count++;

......

kernfs_activate(root_cgrp->kn);

......

}

每个文件对应一个inode,在cgroup还对应着一个kernfs_node

css_populate_dir会调用cgroup_addrm_files->cgroup_add_file->cgroup_add_file来创建整颗文件树,并为每个文件创建对应的kernfs_node结构,并将这个文件的操作函数设置为kf_ops,也指向cgroup_kf_ops

static int cgroup_add_file(struct cgroup_subsys_state *css, struct cgroup *cgrp,

struct cftype *cft)

{

char name[CGROUP_FILE_NAME_MAX];

struct kernfs_node *kn;

......

kn = __kernfs_create_file(cgrp->kn, cgroup_file_name(cgrp, cft, name),

cgroup_file_mode(cft), 0, cft->kf_ops, cft,

NULL, key);

......

}

struct kernfs_node *__kernfs_create_file(struct kernfs_node *parent,

const char *name,

umode_t mode, loff_t size,

const struct kernfs_ops *ops,

void *priv, const void *ns,

struct lock_class_key *key)

{

struct kernfs_node *kn;

unsigned flags;

int rc;

flags = KERNFS_FILE;

kn = kernfs_new_node(parent, name, (mode & S_IALLUGO) | S_IFREG, flags);

kn->attr.ops = ops;

kn->attr.size = size;

kn->ns = ns;

kn->priv = priv;

......

rc = kernfs_add_one(kn);

......

return kn;

}

在cgroup_setup_root返回后,cgroup1_mount要做cgroup_do_mount,调用kernfs_mount去mount文件系统,返回一个普通文件系统识别的dentry,特殊文件系统的操作函数为kernfs_file_fops

const struct file_operations kernfs_file_fops = {

.read = kernfs_fop_read,

.write = kernfs_fop_write,

.llseek = generic_file_llseek,

.mmap = kernfs_fop_mmap,

.open = kernfs_fop_open,

.release = kernfs_fop_release,

.poll = kernfs_fop_poll,

.fsync = noop_fsync,

};

当写入一个Cgroup文件来设置参数的时候,根据文件系统的操作,kernfs_fop_write会被调用,这里调用的kernfs_ops的write函数,根据上边定义的为cgroup_file_write,在这里会调用cftypw的write函数,对于CPU和内存的write有不同的定义

static struct cftype cpu_files[] = {

#ifdef CONFIG_FAIR_GROUP_SCHED

{

.name = "shares",

.read_u64 = cpu_shares_read_u64,

.write_u64 = cpu_shares_write_u64,

},

#endif

#ifdef CONFIG_CFS_BANDWIDTH

{

.name = "cfs_quota_us",

.read_s64 = cpu_cfs_quota_read_s64,

.write_s64 = cpu_cfs_quota_write_s64,

},

{

.name = "cfs_period_us",

.read_u64 = cpu_cfs_period_read_u64,

.write_u64 = cpu_cfs_period_write_u64,

},

}

static struct cftype mem_cgroup_legacy_files[] = {

{

.name = "usage_in_bytes",

.private = MEMFILE_PRIVATE(_MEM, RES_USAGE),

.read_u64 = mem_cgroup_read_u64,

},

{

.name = "max_usage_in_bytes",

.private = MEMFILE_PRIVATE(_MEM, RES_MAX_USAGE),

.write = mem_cgroup_reset,

.read_u64 = mem_cgroup_read_u64,

},

{

.name = "limit_in_bytes",

.private = MEMFILE_PRIVATE(_MEM, RES_LIMIT),

.write = mem_cgroup_write,

.read_u64 = mem_cgroup_read_u64,

},

{

.name = "soft_limit_in_bytes",

.private = MEMFILE_PRIVATE(_MEM, RES_SOFT_LIMIT),

.write = mem_cgroup_write,

.read_u64 = mem_cgroup_read_u64,

},

}

如果是设置的cpu.shares,就会调用cpu_shares_write_u64,更新了task_group的shares变量,就会触发更新CPU队列上的调度实体

int sched_group_set_shares(struct task_group *tg, unsigned long shares)

{

int i;

shares = clamp(shares, scale_load(MIN_SHARES), scale_load(MAX_SHARES));

tg->shares = shares;

for_each_possible_cpu(i) {

struct rq *rq = cpu_rq(i);

struct sched_entity *se = tg->se[i];

struct rq_flags rf;

update_rq_clock(rq);

for_each_sched_entity(se) {

update_load_avg(se, UPDATE_TG);

update_cfs_shares(se);

}

}

......

}

tasks文件写入进程号,按照cgroup1_base_files来定义,我们应该调用cgroup_tasks_write

整体的调用链为cgroup_tasks_write->__cgroup_procs_write->cgroup_attach_task-> cgroup_migrate->cgroup_migrate_execute,将进程和cgroup关联起来,也就是进程迁移到Cgroup下

static int cgroup_migrate_execute(struct cgroup_mgctx *mgctx)

{

struct cgroup_taskset *tset = &mgctx->tset;

struct cgroup_subsys *ss;

struct task_struct *task, *tmp_task;

struct css_set *cset, *tmp_cset;

......

if (tset->nr_tasks) {

do_each_subsys_mask(ss, ssid, mgctx->ss_mask) {

if (ss->attach) {

tset->ssid = ssid;

ss->attach(tset);

}

} while_each_subsys_mask();

}

......

}

每个cgroup子系统会调用对应的attach函数,CPU调用的是cpu_cgroup_attach-> sched_move_task-> sched_change_group

static void sched_change_group(struct task_struct *tsk, int type)

{

struct task_group *tg;

tg = container_of(task_css_check(tsk, cpu_cgrp_id, true),

struct task_group, css);

tg = autogroup_task_group(tsk, tg);

tsk->sched_task_group = tg;

#ifdef CONFIG_FAIR_GROUP_SCHED

if (tsk->sched_class->task_change_group)

tsk->sched_class->task_change_group(tsk, type);

else

#endif

set_task_rq(tsk, task_cpu(tsk));

}

在sched_change_group中设置这个进程以这个task_group的方式参与调度,从而使cpu.shares生效

对于内存来讲就是使用mem_cgroup_write->mem_cgroup_resize_limit来设置struct mem_cgroup的memory.limit

在进程执行过程中,申请内存的时候,会调用handle_pte_fault->do_anonymous_page()->mem_cgroup_try_charge()

int mem_cgroup_try_charge(struct page *page, struct mm_struct *mm,

gfp_t gfp_mask, struct mem_cgroup **memcgp,

bool compound)

{

struct mem_cgroup *memcg = NULL;

......

if (!memcg)

memcg = get_mem_cgroup_from_mm(mm);

ret = try_charge(memcg, gfp_mask, nr_pages);

......

}

在mem_cgroup_try_charge中先调用get_mem_cgroup_from_mm获取进程对应的mem_cgroup,然后在try_charge中根据mem_cgroup的限制看是否可以分配内存

总结一下

流程图

流程

- 系统初始化的时候,初始化cgroup的各个子系统的操作函数,分配各个子系统的数据结构

- mount cgroup文件系统,创建文件系统的树形结构,以及操作函数

- 写入cgroup文件,设置cpu或者memory的相关参数,这个时候文件系统的操作函数会调用到cgroup子系统的操作函数,从而将参数设置到cgroup子系统的数据结构中

- 写入tasks文件,将进程交给某个cgroup进行管理,因为tasks文件也是一个cgroup文件,统一会调用文件系统的操作函数进而调用cgroup子系统的操作函数,将cgroup子系统的数据结构和进程关联起来

- 对于CPU来讲,会修改scheduled entity,放入相应的队列里面去,从而下次调度的时候就起作用了。对于内存的cgroup设定,只有在申请内存的时候才起作用