<Component Usage> Configuring HA for a Production HDFS Cluster with a Single NameNode


Test environment

NA1: NameNode

NA2: ResourceManager (will also host the standby NameNode)

DT1: DataNode, NodeManager, ZooKeeper

DT2: DataNode, NodeManager, ZooKeeper

DT3: DataNode, NodeManager, ZooKeeper

Prerequisites

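ZooKeeper must be running on three or more hosts in the cluster. If an HBase cluster depends on this cluster, do this work while HBase is shut down. ZooKeeper is stopped and restarted during the NameNode HA configuration.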
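Why three or more? A ZooKeeper ensemble keeps working only while a strict majority of its members is up. The same holds for the JournalNode quorum configured below. The following Python sketch just does that arithmetic. It is an illustration, not part of any Hadoop or ZooKeeper tool.

```python
def quorum_size(n: int) -> int:
    """Smallest strict majority of an ensemble of n servers."""
    if n < 1:
        raise ValueError("an ensemble needs at least one server")
    return n // 2 + 1


def tolerated_failures(n: int) -> int:
    """How many servers may fail while a majority is still available."""
    return n - quorum_size(n)


for n in (1, 2, 3, 4, 5):
    print(f"{n} servers: quorum={quorum_size(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Going from three to four servers raises the quorum without tolerating more failures. This is why odd ensemble sizes are the usual choice.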

NameNode configuration changes

core-site.xml

<!-- Set the HDFS nameservice to redoop -->
<property>
<name>fs.defaultFS</name>
<value>hdfs://redoop</value>
</property>
<!-- ZooKeeper quorum used for automatic failover -->
<property>
<name>ha.zookeeper.quorum</name>
<value>DT1:2181,DT2:2181,DT3:2181</value>
</property>
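With HA enabled, fs.defaultFS names the logical nameservice rather than a host:port. Clients then resolve that nameservice through hdfs-site.xml.

The Python sketch below reads the two properties above from a core-site.xml string and checks exactly that. Assumptions:
- The helper names are hypothetical.
- The embedded XML is a trimmed copy of the settings shown here.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Trimmed copy of the core-site.xml properties shown above.
CORE_SITE = """<configuration>
  <property><name>fs.defaultFS</name><value>hdfs://redoop</value></property>
  <property><name>ha.zookeeper.quorum</name><value>DT1:2181,DT2:2181,DT3:2181</value></property>
</configuration>"""


def load_props(xml_text):
    """Return {name: value} for every <property> in a Hadoop *-site.xml document."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def nameservice_from_default_fs(default_fs):
    """Extract the logical nameservice from an HA-style fs.defaultFS value."""
    uri = urlparse(default_fs)
    if uri.scheme != "hdfs":
        raise ValueError(f"expected an hdfs:// URI, got {default_fs!r}")
    if not uri.netloc or ":" in uri.netloc:
        # host:port pins clients to one fixed NameNode, which defeats HA
        raise ValueError(f"fs.defaultFS should name a nameservice, not host:port: {default_fs!r}")
    return uri.netloc  # netloc keeps the original case, unlike .hostname


def parse_zk_quorum(value, default_port=2181):
    """Split ha.zookeeper.quorum into (host, port) pairs."""
    servers = []
    for entry in value.split(","):
        host, sep, port = entry.strip().rpartition(":")
        if not sep:  # no explicit port given
            host, port = port, str(default_port)
        servers.append((host, int(port)))
    return servers


props = load_props(CORE_SITE)
print(nameservice_from_default_fs(props["fs.defaultFS"]))
print(parse_zk_quorum(props["ha.zookeeper.quorum"]))
```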

hdfs-site.xml

<!-- Set the HDFS nameservice to redoop; it must match fs.defaultFS in core-site.xml -->
<property>
<name>dfs.nameservices</name>
<value>redoop</value>
</property>
<!-- The redoop nameservice has two NameNodes: nn1 and nn2 -->
<property>
<name>dfs.ha.namenodes.redoop</name>
<value>nn1,nn2</value>
</property>

<!-- RPC address of nn1 -->
<property>
<name>dfs.namenode.rpc-address.redoop.nn1</name>
<value>NA1:8020</value>
</property>
<!-- RPC address of nn2 -->
<property>
<name>dfs.namenode.rpc-address.redoop.nn2</name>
<value>NA2:8020</value>
</property>
<!-- HTTP (web UI) address of nn1 -->
<property>
<name>dfs.namenode.http-address.redoop.nn1</name>
<value>NA1:50070</value>
</property>
<!-- HTTP (web UI) address of nn2 -->
<property>
<name>dfs.namenode.http-address.redoop.nn2</name>
<value>NA2:50070</value>
</property>

<!-- Enable automatic NameNode failover (performed by the ZKFC processes) -->
<property>
<name>dfs.ha.automatic-failover.enabled</name>
<value>true</value>
</property>
<!-- Class that HDFS clients use to locate the active NameNode -->
<property>
<name>dfs.client.failover.proxy.provider.redoop</name>
<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>

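<!-- Fencing methods; put each method on its own line. shell(/bin/true) always succeeds and relies on the JournalNode quorum to stop the old active NameNode from writing -->
<property>
<name>dfs.ha.fencing.methods</name>
<value>shell(/bin/true)</value>
</property>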

<!-- Where the NameNode metadata (edit log) is stored on the JournalNodes; the journal ID reuses the nameservice name -->
<property>
<name>dfs.namenode.shared.edits.dir</name>
<value>qjournal://DT1:8485;DT2:8485;DT3:8485/redoop</value>
</property>
<!-- Local directory where each JournalNode stores its data -->
<property>
<name>dfs.journalnode.edits.dir</name>
<value>/hadoop/hdfs/journalnode</value>
</property>
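A key or NameNode ID that does not line up across these properties is easy to miss. Note that the property Hadoop reads is dfs.nameservices (plural).

The Python sketch below cross-checks the settings above. It uses hypothetical helper names and verifies that:
- the nameservice is listed in dfs.nameservices;
- every NameNode ID has an RPC and an HTTP address;
- a client failover proxy provider is set;
- the qjournal URI names an odd number (at least three) of JournalNodes plus a journal ID.

The embedded XML is a trimmed copy of this configuration, with the journal ID assumed to be redoop.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the hdfs-site.xml above; the journal ID "redoop" is an assumption.
HDFS_SITE = """<configuration>
  <property><name>dfs.nameservices</name><value>redoop</value></property>
  <property><name>dfs.ha.namenodes.redoop</name><value>nn1,nn2</value></property>
  <property><name>dfs.namenode.rpc-address.redoop.nn1</name><value>NA1:8020</value></property>
  <property><name>dfs.namenode.rpc-address.redoop.nn2</name><value>NA2:8020</value></property>
  <property><name>dfs.namenode.http-address.redoop.nn1</name><value>NA1:50070</value></property>
  <property><name>dfs.namenode.http-address.redoop.nn2</name><value>NA2:50070</value></property>
  <property><name>dfs.client.failover.proxy.provider.redoop</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>
  <property><name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://DT1:8485;DT2:8485;DT3:8485/redoop</value></property>
</configuration>"""


def load_props(xml_text):
    """Return {name: value} for every <property> in a Hadoop *-site.xml document."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


def split_list(value):
    """Split a comma-separated Hadoop list value, dropping blanks."""
    return [item.strip() for item in (value or "").split(",") if item.strip()]


def check_ha_config(props, ns):
    """Return a list of problems found in the HA settings for nameservice `ns`."""
    problems = []
    if ns not in split_list(props.get("dfs.nameservices")):
        problems.append(f"dfs.nameservices does not list {ns}")
    nn_ids = split_list(props.get(f"dfs.ha.namenodes.{ns}"))
    if len(nn_ids) < 2:
        problems.append(f"dfs.ha.namenodes.{ns} should list at least two NameNode IDs")
    for nn in nn_ids:
        for kind in ("rpc-address", "http-address"):
            key = f"dfs.namenode.{kind}.{ns}.{nn}"
            if key not in props:
                problems.append(f"missing {key}")
    if f"dfs.client.failover.proxy.provider.{ns}" not in props:
        problems.append(f"missing dfs.client.failover.proxy.provider.{ns}")
    shared = props.get("dfs.namenode.shared.edits.dir") or ""
    if not shared.startswith("qjournal://"):
        problems.append("dfs.namenode.shared.edits.dir is not a qjournal:// URI")
    else:
        hosts, _, journal_id = shared[len("qjournal://"):].partition("/")
        journal_nodes = [h for h in hosts.split(";") if h]
        if len(journal_nodes) < 3 or len(journal_nodes) % 2 == 0:
            problems.append("use an odd number (at least 3) of JournalNodes")
        if not journal_id:
            problems.append("qjournal URI has no journal ID after the host list")
    return problems


print(check_ha_config(load_props(HDFS_SITE), "redoop"))
```

An empty list means no inconsistency was found. Misspelling dfs.nameservices as dfs.nameservice, for example, is reported as "dfs.nameservices does not list redoop".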

Put the NameNode into safe mode

[root@NA1 /]# sudo su -l hdfs -c 'hdfs dfsadmin -safemode enter'

Safe mode is ON

While it is in safe mode, HDFS rejects writes, so the namespace (and therefore the fsimage) stays unchanged.
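`hdfs dfsadmin -saveNamespace` only succeeds while the NameNode is in safe mode. A script can therefore confirm safe mode first with `hdfs dfsadmin -safemode get`, which prints lines such as "Safe mode is ON".

The Python sketch below parses those lines. Assumptions:
- The `hdfs` command is on PATH for the calling user.
- Once HA is enabled, a line may also name the NameNode after " in ". The exact suffix format is assumed here.

```python
import subprocess


def parse_safemode(output):
    """Map each NameNode named in `hdfs dfsadmin -safemode get` output to True (ON) / False (OFF).

    A line without an address (single NameNode) is stored under the key "default".
    """
    states = {}
    prefix = "Safe mode is "
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith(prefix):
            continue  # ignore warnings or other log noise
        state, _, where = line[len(prefix):].partition(" in ")
        states[where or "default"] = state == "ON"
    return states


def safemode_is_on():
    """Run the real CLI; assumes `hdfs` is on PATH and we run as the hdfs user."""
    result = subprocess.run(
        ["hdfs", "dfsadmin", "-safemode", "get"],
        capture_output=True, text=True, check=True,
    )
    states = parse_safemode(result.stdout)
    return bool(states) and all(states.values())
```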

Create a checkpoint

[root@NA1 /]# sudo su -l hdfs -c 'hdfs dfsadmin -saveNamespace'

Save namespace successful

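This saves the in-memory namespace to disk and merges the edits into a new fsimage. That checkpoint is the prerequisite for syncing the NameNode metadata to the JournalNodes.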
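On disk, a checkpoint is a file named fsimage_<txid> (zero-padded). Finalized edit segments are named edits_<first>-<last>. The initializeSharedEdits output below, for example, shows fsimage_0000000000000000999 followed by a segment starting at transaction 1000.

The Python sketch below finds the newest checkpoint and the finalized segments written after it. It assumes you already have a listing of the NameNode's current/ directory.

```python
import re

FSIMAGE = re.compile(r"^fsimage_(\d+)$")    # skips *.md5 and fsimage.ckpt_* files
EDITS = re.compile(r"^edits_(\d+)-(\d+)$")  # finalized segments only, not edits_inprogress_*


def checkpoint_summary(filenames):
    """Return (txid of newest fsimage, sorted finalized edit segments that start after it)."""
    image_txids = []
    segments = []
    for name in filenames:
        m = FSIMAGE.match(name)
        if m:
            image_txids.append(int(m.group(1)))
            continue
        m = EDITS.match(name)
        if m:
            segments.append((int(m.group(1)), int(m.group(2))))
    if not image_txids:
        raise ValueError("no fsimage_<txid> file found")
    newest = max(image_txids)
    # Segments starting after the checkpoint are what a restart would still replay.
    pending = sorted(seg for seg in segments if seg[0] > newest)
    return newest, pending


# File names taken from the initializeSharedEdits log below (plus VERSION / seen_txid).
listing = [
    "VERSION",
    "seen_txid",
    "fsimage_0000000000000000999",
    "edits_0000000000000001000-0000000000000001000",
]
print(checkpoint_summary(listing))
```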

Stop HDFS and ZooKeeper

Start a JournalNode on each ZooKeeper host

[root@DT1 /]# sudo su -l hdfs -c 'hadoop-daemon.sh start journalnode'

[root@DT2 /]# sudo su -l hdfs -c 'hadoop-daemon.sh start journalnode'

[root@DT3 /]# sudo su -l hdfs -c 'hadoop-daemon.sh start journalnode'
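Before initializing the JournalNodes, it is worth confirming that all three accept connections on port 8485, the port used in dfs.namenode.shared.edits.dir. A plain TCP check in Python is enough for that.

This sketch only proves the port is open, not that the JournalNode is healthy. The host names are the ones from this cluster.

```python
import socket


def port_open(host, port, timeout=2.0):
    """True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, timed out, or name not resolvable
        return False


def check_journalnodes(hosts, port=8485):
    """Map each JournalNode host to whether its RPC port answers."""
    return {host: port_open(host, port) for host in hosts}


# Example (run from a host that can resolve DT1..DT3):
#   check_journalnodes(["DT1", "DT2", "DT3"])
```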

Initialize the JournalNodes (run on NA1)

[root@NA1 /]# sudo su -l hdfs -c 'hdfs namenode -initializeSharedEdits'

16/07/25 15:55:36 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = NA1/192.168.0.217

STARTUP_MSG: args = [-initializeSharedEdits]

STARTUP_MSG: version = 2.7.1.2.3.4.0-3485

STARTUP_MSG: classpath = /usr/hdp/2.3.4.0-3485/hadoop/conf:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-logging-1.1.3.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jettison-1.3.4.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-yarn-server-web-proxy-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/tez/conf

STARTUP_MSG: build = git@github.com:hortonworks/hadoop.git -r ef0582ca14b8177a3cbb6376807545272677d730; compiled by 'jenkins' on 2015-12-16T03:01Z

STARTUP_MSG: java = 1.7.0_67

************************************************************/

16/07/25 15:55:36 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

16/07/25 15:55:36 INFO namenode.NameNode: createNameNode [-initializeSharedEdits]

16/07/25 15:55:37 WARN common.Util: Path /namenode_dir/hadoop/hdfs/namenode should be specified as a URI in configuration files. Please update hdfs configuration.

16/07/25 15:55:37 WARN common.Util: Path /namenode_dir/hadoop/hdfs/namenode should be specified as a URI in configuration files. Please update hdfs configuration.

16/07/25 15:55:37 WARN namenode.FSNamesystem: Only one image storage directory (dfs.namenode.name.dir) configured. Beware of data loss due to lack of redundant storage directories!

16/07/25 15:55:37 WARN namenode.FSNamesystem: Only one namespace edits storage directory (dfs.namenode.edits.dir) configured. Beware of data loss due to lack of redundant storage directories!

16/07/25 15:55:37 WARN common.Util: Path /namenode_dir/hadoop/hdfs/namenode should be specified as a URI in configuration files. Please update hdfs configuration.

16/07/25 15:55:37 WARN common.Storage: set restore failed storage to true

16/07/25 15:55:37 INFO namenode.FSNamesystem: No KeyProvider found.

16/07/25 15:55:37 INFO namenode.FSNamesystem: Enabling async auditlog

16/07/25 15:55:37 INFO namenode.FSNamesystem: fsLock is fair:false

16/07/25 15:55:37 INFO blockmanagement.HeartbeatManager: Setting heartbeat recheck interval to 30000 since dfs.namenode.stale.datanode.interval is less than dfs.namenode.heartbeat.recheck-interval

16/07/25 15:55:37 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

16/07/25 15:55:37 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

16/07/25 15:55:37 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:01:00:00.000

16/07/25 15:55:37 INFO blockmanagement.BlockManager: The block deletion will start around 2016 Jul 25 16:55:37

16/07/25 15:55:37 INFO util.GSet: Computing capacity for map BlocksMap

16/07/25 15:55:37 INFO util.GSet: VM type = 64-bit

16/07/25 15:55:37 INFO util.GSet: 2.0% max memory 1011.3 MB = 20.2 MB

16/07/25 15:55:37 INFO util.GSet: capacity = 2^21 = 2097152 entries

16/07/25 15:55:37 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=true

16/07/25 15:55:37 INFO blockmanagement.BlockManager: dfs.block.access.key.update.interval=600 min(s), dfs.block.access.token.lifetime=600 min(s), dfs.encrypt.data.transfer.algorithm=null

16/07/25 15:55:37 INFO blockmanagement.BlockManager: defaultReplication = 3

16/07/25 15:55:37 INFO blockmanagement.BlockManager: maxReplication = 50

16/07/25 15:55:37 INFO blockmanagement.BlockManager: minReplication = 1

16/07/25 15:55:37 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

16/07/25 15:55:37 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

16/07/25 15:55:37 INFO blockmanagement.BlockManager: encryptDataTransfer = false

16/07/25 15:55:37 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

16/07/25 15:55:37 INFO namenode.FSNamesystem: fsOwner = hdfs (auth:SIMPLE)

16/07/25 15:55:37 INFO namenode.FSNamesystem: supergroup = hdfs

16/07/25 15:55:37 INFO namenode.FSNamesystem: isPermissionEnabled = true

16/07/25 15:55:37 INFO namenode.FSNamesystem: Determined nameservice ID: H3CStandby

16/07/25 15:55:37 INFO namenode.FSNamesystem: HA Enabled: true

16/07/25 15:55:37 INFO namenode.FSNamesystem: Append Enabled: true

16/07/25 15:55:37 INFO util.GSet: Computing capacity for map INodeMap

16/07/25 15:55:37 INFO util.GSet: VM type = 64-bit

16/07/25 15:55:37 INFO util.GSet: 1.0% max memory 1011.3 MB = 10.1 MB

16/07/25 15:55:37 INFO util.GSet: capacity = 2^20 = 1048576 entries

16/07/25 15:55:37 INFO namenode.FSDirectory: ACLs enabled? false

16/07/25 15:55:37 INFO namenode.FSDirectory: XAttrs enabled? true

16/07/25 15:55:37 INFO namenode.FSDirectory: Maximum size of an xattr: 16384

16/07/25 15:55:37 INFO namenode.NameNode: Caching file names occuring more than 10 times

16/07/25 15:55:37 INFO util.GSet: Computing capacity for map cachedBlocks

16/07/25 15:55:37 INFO util.GSet: VM type = 64-bit

16/07/25 15:55:37 INFO util.GSet: 0.25% max memory 1011.3 MB = 2.5 MB

16/07/25 15:55:37 INFO util.GSet: capacity = 2^18 = 262144 entries

16/07/25 15:55:37 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9900000095367432

16/07/25 15:55:37 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

16/07/25 15:55:37 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

16/07/25 15:55:37 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

16/07/25 15:55:37 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

16/07/25 15:55:37 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

16/07/25 15:55:37 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

16/07/25 15:55:37 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

16/07/25 15:55:37 INFO util.GSet: Computing capacity for map NameNodeRetryCache

16/07/25 15:55:37 INFO util.GSet: VM type = 64-bit

16/07/25 15:55:37 INFO util.GSet: 0.029999999329447746% max memory 1011.3 MB = 310.7 KB

16/07/25 15:55:37 INFO util.GSet: capacity = 2^15 = 32768 entries

16/07/25 15:55:37 INFO common.Storage: Lock on /namenode_dir/hadoop/hdfs/namenode/in_use.lock acquired by nodename 14148@NA1

16/07/25 15:55:37 INFO namenode.FSImage: No edit log streams selected.

16/07/25 15:55:37 INFO namenode.FSImageFormatPBINode: Loading 160 INodes.

16/07/25 15:55:37 INFO namenode.FSImageFormatProtobuf: Loaded FSImage in 0 seconds.

16/07/25 15:55:37 INFO namenode.FSImage: Loaded image for txid 999 from /namenode_dir/hadoop/hdfs/namenode/current/fsimage_0000000000000000999

16/07/25 15:55:37 INFO namenode.FSNamesystem: Need to save fs image? false (staleImage=false, haEnabled=true, isRollingUpgrade=false)

16/07/25 15:55:37 INFO namenode.NameCache: initialized with 0 entries 0 lookups

16/07/25 15:55:37 INFO namenode.FSNamesystem: Finished loading FSImage in 238 msecs

16/07/25 15:55:37 WARN common.Storage: set restore failed storage to true

16/07/25 15:55:38 INFO namenode.FileJournalManager: Recovering unfinalized segments in /namenode_dir/hadoop/hdfs/namenode/current

16/07/25 15:55:38 INFO namenode.FileJournalManager: Finalizing edits file /namenode_dir/hadoop/hdfs/namenode/current/edits_inprogress_0000000000000001000 -> /namenode_dir/hadoop/hdfs/namenode/current/edits_0000000000000001000-0000000000000001000

16/07/25 15:55:38 INFO client.QuorumJournalManager: Starting recovery process for unclosed journal segments...

16/07/25 15:55:38 INFO client.QuorumJournalManager: Successfully started new epoch 1

16/07/25 15:55:38 INFO namenode.EditLogInputStream: Fast-forwarding stream '/namenode_dir/hadoop/hdfs/namenode/current/edits_0000000000000001000-0000000000000001000' to transaction ID 1000

16/07/25 15:55:38 INFO namenode.FSEditLog: Starting log segment at 1000

16/07/25 15:55:38 INFO namenode.FSEditLog: Ending log segment 1000

16/07/25 15:55:38 INFO namenode.FSEditLog: Number of transactions: 1 Total time for transactions(ms): 0 Number of transactions batched in Syncs: 0 Number of syncs: 1 SyncTimes(ms): 18

16/07/25 15:55:38 INFO util.ExitUtil: Exiting with status 0

16/07/25 15:55:38 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at NA1/192.168.0.217

************************************************************/

Start the NameNode and ZooKeeper

Initialize the HA state in ZooKeeper (run on NA1)

[root@NA1 /]# sudo su -l hdfs -c 'hdfs zkfc -formatZK'

16/07/25 16:03:59 INFO tools.DFSZKFailoverController: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting DFSZKFailoverController

STARTUP_MSG: host = NA1/192.168.0.217

STARTUP_MSG: args = [-formatZK]

STARTUP_MSG: version = 2.7.1.2.3.4.0-3485

STARTUP_MSG: classpath = /usr/hdp/2.3.4.0-3485/hadoop/conf:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-logging-1.1.3.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jettison-1.3.4.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-yarn-server-web-proxy-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/tez/conf

STARTUP_MSG: build = git@github.com:hortonworks/hadoop.git -r ef0582ca14b8177a3cbb6376807545272677d730; compiled by 'jenkins' on 2015-12-16T03:01Z

STARTUP_MSG: java = 1.7.0_67

************************************************************/

16/07/25 16:03:59 INFO tools.DFSZKFailoverController: registered UNIX signal handlers for [TERM, HUP, INT]

16/07/25 16:04:00 INFO tools.DFSZKFailoverController: Failover controller configured for NameNode NameNode at NA1/192.168.0.217:8020

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.6-3485--1, built on 12/16/2015 02:35 GMT

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:host.name=NA1

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:java.version=1.7.0_67

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:java.home=/usr/jdk64/jdk1.7.0_67/jre

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:java.class.path=/usr/hdp/2.3.4.0-3485/hadoop/conf:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-logging-1.1.3.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/commons-math3-3.1.1.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/usr/hdp/2.3.4.0-3485/hadoop/lib/log4j-1.2.17.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-mapreduce-client-core-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jetty-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/tez/lib/slf4j-api-1.7.5.jar:/usr/hdp/2.3.4.0-3485/tez/lib/commons-cli-1.2.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jettison-1.3.4.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-yarn-server-web-proxy-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/tez/conf

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:java.library.path=:/usr/hdp/2.3.4.0-3485/hadoop/lib/native/Linux-amd64-64:/usr/hdp/2.3.4.0-3485/hadoop/lib/native

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:os.version=2.6.32-431.el6.x86_64

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:user.name=hdfs

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:user.home=/home/hdfs

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Client environment:user.dir=/home/hdfs

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=dn3:2181,dn1:2181,dn2:2181 sessionTimeout=5000 watcher=org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef@2a677c0a

16/07/25 16:04:00 INFO zookeeper.ClientCnxn: Opening socket connection to server DN3/192.168.174.90:2181. Will not attempt to authenticate using SASL (unknown error)

16/07/25 16:04:00 INFO zookeeper.ClientCnxn: Socket connection established to DN3/192.168.174.90:2181, initiating session

16/07/25 16:04:00 INFO zookeeper.ClientCnxn: Session establishment complete on server DN3/192.168.174.90:2181, sessionid = 0x35621117faf0000, negotiated timeout = 5000

16/07/25 16:04:00 INFO ha.ActiveStandbyElector: Session connected.

16/07/25 16:04:00 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/H3CStandby in ZK.

16/07/25 16:04:00 INFO ha.ActiveStandbyElector: Terminating ZK connection for elector id=1963617384 appData=null cb=Elector callbacks for NameNode at NA1/192.168.0.217:8020

16/07/25 16:04:00 INFO zookeeper.ZooKeeper: Session: 0x35621117faf0000 closed

16/07/25 16:04:00 INFO zookeeper.ClientCnxn: EventThread shut down

16/07/25 16:04:00 INFO tools.DFSZKFailoverController: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down DFSZKFailoverController at NA1/192.168.0.217

************************************************************/
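`hdfs zkfc -formatZK` only creates the parent znode for the nameservice. The log above shows /hadoop-ha/H3CStandby; with the configuration in this article it would be /hadoop-ha/redoop. Once the ZKFCs run, the active one holds an ephemeral ActiveStandbyElectorLock child and leaves an ActiveBreadCrumb child that is used for fencing.

The Python sketch below only computes those paths. It assumes the default parent znode /hadoop-ha (ha.zookeeper.parent-znode). You can inspect the paths with ZooKeeper's zkCli.sh, for example `ls /hadoop-ha/redoop`.

```python
import posixpath


def ha_znodes(nameservice, parent="/hadoop-ha"):
    """ZooKeeper paths used by the failover controllers of one nameservice."""
    base = posixpath.join(parent, nameservice)
    return {
        # created by `hdfs zkfc -formatZK`
        "nameservice": base,
        # ephemeral lock held by the ZKFC of the active NameNode
        "lock": posixpath.join(base, "ActiveStandbyElectorLock"),
        # persistent record of the last active NameNode, used for fencing on failover
        "breadcrumb": posixpath.join(base, "ActiveBreadCrumb"),
    }


for role, path in ha_znodes("redoop").items():
    print(f"{role:12} {path}")
```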

Bootstrap the standby NameNode on NA2, which copies the latest fsimage from NA1:

[root@NA2 /]# sudo su -l hdfs -c 'hdfs namenode -bootstrapStandby'

16/07/25 16:06:02 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = NA2/192.168.0.218

STARTUP_MSG: args = [-bootstrapStandby]

STARTUP_MSG: version = 2.7.1.2.3.4.0-3485

STARTUP_MSG: classpath = /usr/hdp/2.3.4.0-3485/hadoop/conf:/usr/hdp/2.3.4.0-3485/hadoop/lib/activation-1.1.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jersey-json-1.9.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-mapreduce-client-common-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/hadoop-yarn-server-timeline-plugins-2.7.1.2.3.4.0-3485.jar:/usr/hdp/2.3.4.0-3485/tez/lib/jetty-util-6.1.26.hwx.jar:/usr/hdp/2.3.4.0-3485/tez/conf

STARTUP_MSG: build = git@github.com:hortonworks/hadoop.git -r ef0582ca14b8177a3cbb6376807545272677d730; compiled by 'jenkins' on 2015-12-16T03:01Z

STARTUP_MSG: java = 1.7.0_67

************************************************************/

16/07/25 16:06:02 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

16/07/25 16:06:02 INFO namenode.NameNode: createNameNode [-bootstrapStandby]

16/07/25 16:06:02 WARN common.Util: Path /namenode_dir/hadoop/hdfs/namenode should be specified as a URI in configuration files. Please update hdfs configuration.

16/07/25 16:06:02 WARN common.Util: Path /namenode_dir/hadoop/hdfs/namenode should be specified as a URI in configuration files. Please update hdfs configuration.

=====================================================

About to bootstrap Standby ID nn2 from:

Nameservice ID: H3CStandby

Other Namenode ID: nn1

Other NN's HTTP address: http://na1:50070

Other NN's IPC address: na1/192.168.0.217:8020

Namespace ID: 1579245750

Block pool ID: BP-855280988-192.168.0.217-1469430943704

Cluster ID: CID-7604cbf8-f515-40c6-8d91-f68ef285e6c5

Layout version: -63

isUpgradeFinalized: true

=====================================================

16/07/25 16:06:03 INFO common.Storage: Storage directory /namenode_dir/hadoop/hdfs/namenode has been successfully formatted.

16/07/25 16:06:03 WARN common.Util: Path /namenode_dir/hadoop/hdfs/namenode should be specified as a URI in configuration files. Please update hdfs configuration.

16/07/25 16:06:03 WARN common.Util: Path /namenode_dir/hadoop/hdfs/namenode should be specified as a URI in configuration files. Please update hdfs configuration.

16/07/25 16:06:03 WARN common.Storage: set restore failed storage to true

16/07/25 16:06:03 INFO namenode.TransferFsImage: Opening connection to http://na1:50070/imagetransfer?getimage=1&txid=999&storageInfo=-63:1579245750:0:CID-7604cbf8-f515-40c6-8d91-f68ef285e6c5

16/07/25 16:06:03 INFO namenode.TransferFsImage: Image Transfer timeout configured to 60000 milliseconds

16/07/25 16:06:03 INFO namenode.TransferFsImage: Transfer took 0.02s at 600.00 KB/s

16/07/25 16:06:03 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000999 size 12424 bytes.

16/07/25 16:06:03 INFO util.ExitUtil: Exiting with status 0

16/07/25 16:06:03 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at NA2/192.168.0.218

************************************************************/
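The bootstrap log shows what the standby copied. The image-transfer URL carries the checkpoint txid and a storageInfo value of the form layoutVersion:namespaceID:cTime:clusterID.

The Python sketch below parses that URL, copied from the log. You can then compare the values with the "About to bootstrap" banner above and with the checkpoint txid 999 created by -saveNamespace. It is a reading aid, not a Hadoop API.

```python
from urllib.parse import parse_qs, urlparse

# Copied from the TransferFsImage line of the -bootstrapStandby log above.
URL = ("http://na1:50070/imagetransfer?getimage=1&txid=999"
       "&storageInfo=-63:1579245750:0:CID-7604cbf8-f515-40c6-8d91-f68ef285e6c5")


def parse_image_transfer(url):
    """Split an imagetransfer URL into the checkpoint txid and storage identity."""
    query = parse_qs(urlparse(url).query)
    # maxsplit=3 keeps the cluster ID intact even though it contains dashes
    layout, namespace_id, ctime, cluster_id = query["storageInfo"][0].split(":", 3)
    return {
        "txid": int(query["txid"][0]),
        "layout_version": int(layout),
        "namespace_id": int(namespace_id),
        "ctime": int(ctime),
        "cluster_id": cluster_id,
    }


info = parse_image_transfer(URL)
print(info)
```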

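Start the standby NameNode on NA2. For automatic failover, a ZKFC (DFSZKFailoverController) must also be running on both NA1 and NA2.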

Verifying NameNode HA

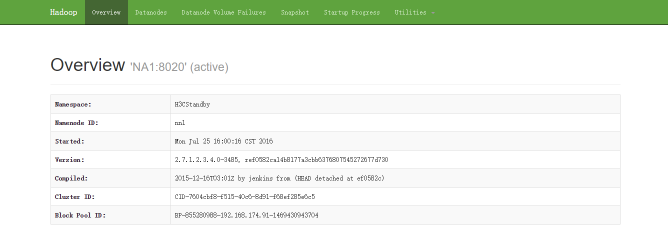

Open the NameNode web UI of NA1 (http://192.168.0.217:50070).

NA1's NameNode is active.
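The state shown in the web UI can also be read programmatically. One option is `hdfs haadmin -getServiceState nn1` (and nn2). Another is the NameNode's JMX servlet, whose NameNodeStatus bean reports a State attribute of active or standby.

The Python sketch below takes the JMX route. It assumes the web UI is reachable at the http-address values configured above and that HTTP authentication (SPNEGO) is not enabled.

```python
import json
from urllib.request import urlopen

JMX_PATH = "/jmx?qry=Hadoop:service=NameNode,name=NameNodeStatus"


def parse_ha_state(jmx_json):
    """Pull the HA state ("active"/"standby") out of a NameNodeStatus JMX response."""
    beans = json.loads(jmx_json).get("beans", [])
    if not beans:
        raise ValueError("NameNodeStatus bean not found in JMX output")
    return beans[0]["State"]


def namenode_state(http_address, timeout=5.0):
    """Query one NameNode, e.g. namenode_state("192.168.0.217:50070")."""
    with urlopen(f"http://{http_address}{JMX_PATH}", timeout=timeout) as resp:
        return parse_ha_state(resp.read().decode("utf-8"))


# Usage against this cluster (needs network access to both NameNodes):
#   for addr in ("192.168.0.217:50070", "192.168.0.218:50070"):
#       print(addr, namenode_state(addr))
```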

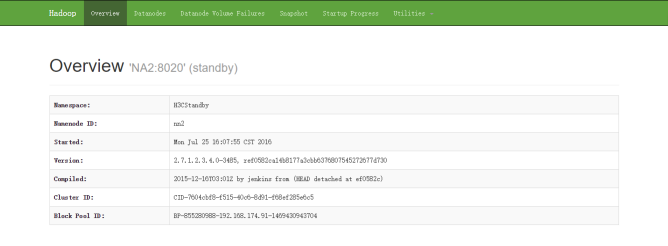

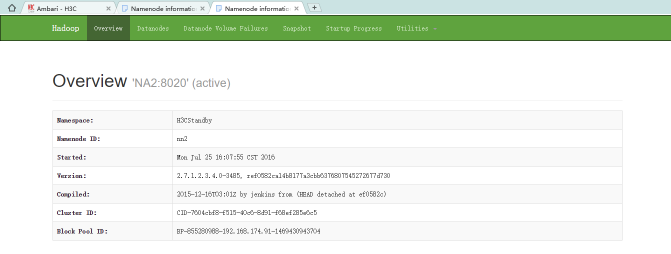

Open the NameNode web UI of NA2 (http://192.168.0.218:50070).

NA2's NameNode is standby.

In the management UI, select Hosts.

Select the active NameNode to open the NA1 host page, stop that NameNode, and click OK to confirm.

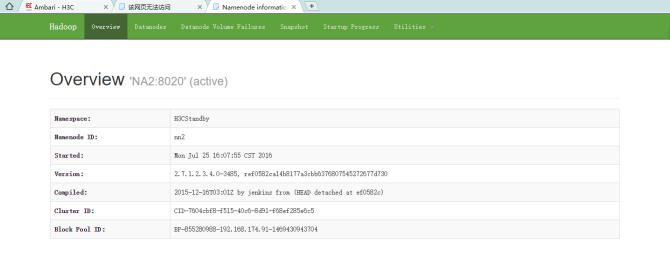

After NA1's NameNode is stopped, refresh NA2's NameNode web UI (http://192.168.0.218:50070). NA2's NameNode changes from standby to active, while NA1's NameNode web UI (http://192.168.0.217:50070) can no longer be reached.
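Failover is not instantaneous. The ZKFC has to notice that NA1's NameNode is gone, fence it, and promote NA2; the ZooKeeper session timeout in the logs above is 5000 ms. When scripting this test, it is therefore safer to poll than to check once.

The Python sketch below is a generic polling loop. `get_state` is any zero-argument callable, for example one wrapping a JMX query or `hdfs haadmin -getServiceState nn2`. The timeout and interval defaults are arbitrary assumptions.

```python
import time


def wait_for_state(get_state, expected, timeout=60.0, interval=2.0):
    """Poll get_state() until it returns `expected` or `timeout` seconds pass.

    Returns True on success, False on timeout. OSError (which includes
    urllib's URLError) is treated as "unreachable" and simply retried.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            state = get_state()
        except OSError:
            state = None  # e.g. the web UI of a stopped NameNode
        if state == expected:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```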

The http://192.168.0.217:50070 page:

On the HDFS service page, one NameNode is now shown as stopped.

Select the stopped NameNode to open the NA1 host page, then start the NameNode.

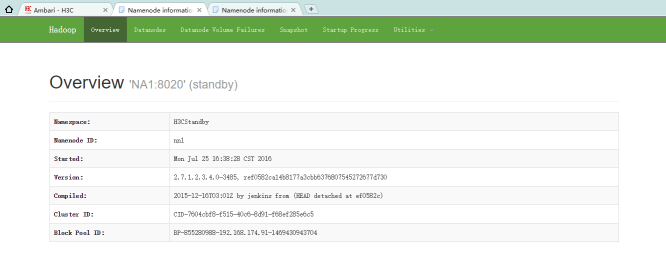

After NA1's NameNode is started again, refresh NA1's NameNode web UI (http://192.168.0.217:50070). It is reachable again and NA1's NameNode is standby, while NA2's NameNode web UI (http://192.168.0.218:50070) still shows active.
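NA1 comes back as standby because HDFS automatic failover does not fail back to the original NameNode. The invariant worth checking after each step of this test is that exactly one NameNode is active.

The Python sketch below checks that invariant over a map of NameNode ID to state. How the states are collected (web UI, JMX, or `hdfs haadmin -getServiceState`) is left open.

```python
def active_namenode(states):
    """Return the ID of the single active NameNode.

    `states` maps NameNode IDs (nn1, nn2) to "active", "standby", or None when
    a NameNode is down. Zero or several active NameNodes is an error.
    """
    active = [nn for nn, state in states.items() if state == "active"]
    if len(active) != 1:
        raise RuntimeError(f"expected exactly one active NameNode, found {active}: {states}")
    return active[0]


# The three situations observed in this walkthrough:
print(active_namenode({"nn1": "active", "nn2": "standby"}))  # before stopping NA1
print(active_namenode({"nn1": None, "nn2": "active"}))       # NA1 stopped
print(active_namenode({"nn1": "standby", "nn2": "active"}))  # NA1 restarted
```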

The http://192.168.0.217:50070 page:

The http://192.168.0.218:50070 page:

This confirms that NameNode HA is configured correctly.