Prometheus on Kubernetes


Introduction to Prometheus on Kubernetes

Kubernetes monitoring focuses on three areas:

- Container monitoring, using cAdvisor
- Node monitoring, via node_exporter
- Kubernetes object monitoring, via kube-state-metrics

Monitoring Kubernetes itself covers:

- Node resource utilization
- Number of nodes
- Number of Pods
- Status of resource objects

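Pod monitoring covers:

- Number of Pods
- Container resource utilization (CPU, memory, network traffic)
- Applications (the application itself must expose metrics)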

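In Prometheus this is configured with kubernetes_sd_config, which queries the Kubernetes API server to discover the targets to monitor. The available roles are:

- node: discovers the nodes in the cluster
- service: discovers the IPs and ports of Services
- pod: discovers the metrics endpoints exposed by Pods
- endpoints: discovers the endpoints (backing addresses) of Services
- ingress: discovers Ingresses, useful for probing whether they are reachable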

Deploying Prometheus on Kubernetes

First, prepare a Kubernetes cluster:

$ kubectl get nodes
NAME      STATUS   ROLES    AGE   VERSION
master    Ready    master   37d   v1.13.2
node-01   Ready    <none>   37d   v1.13.2
node-02   Ready    <none>   37d   v1.13.2

The Kubernetes repository on GitHub ships YAML manifests for deploying Prometheus as a cluster addon:

$ git clone https://github.com/kubernetes/kubernetes
$ ls kubernetes/cluster/addons/prometheus/
alertmanager-configmap.yaml   alertmanager-service.yaml            kube-state-metrics-service.yaml  OWNERS                     prometheus-service.yaml
alertmanager-deployment.yaml  kube-state-metrics-deployment.yaml   node-exporter-ds.yml             prometheus-configmap.yaml  prometheus-statefulset.yaml
alertmanager-pvc.yaml         kube-state-metrics-rbac.yaml         node-exporter-service.yaml       prometheus-rbac.yaml       README.md

The manifests fall into four groups:

- prometheus
- kube-state-metrics
- node-exporter
- alertmanager

kubernetes/cluster/addons/prometheus/prometheus-rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

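The ServiceAccount is the identity Prometheus uses to access the Kubernetes API. The ClusterRole further down in the same file defines the permissions granted, and the ClusterRoleBinding binds those permissions to the prometheus ServiceAccount.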

kubernetes/cluster/addons/prometheus/prometheus-configmap.yaml

This is the prometheus.yml itself. Its scrape_configs contain the following jobs:

- job_name: prometheus (Prometheus monitoring itself)
- job_name: kubernetes-apiservers
- job_name: kubernetes-nodes-kubelet
- job_name: kubernetes-nodes-cadvisor
- job_name: kubernetes-service-endpoints
- job_name: kubernetes-services
- job_name: kubernetes-pods

followed by the alerting section.

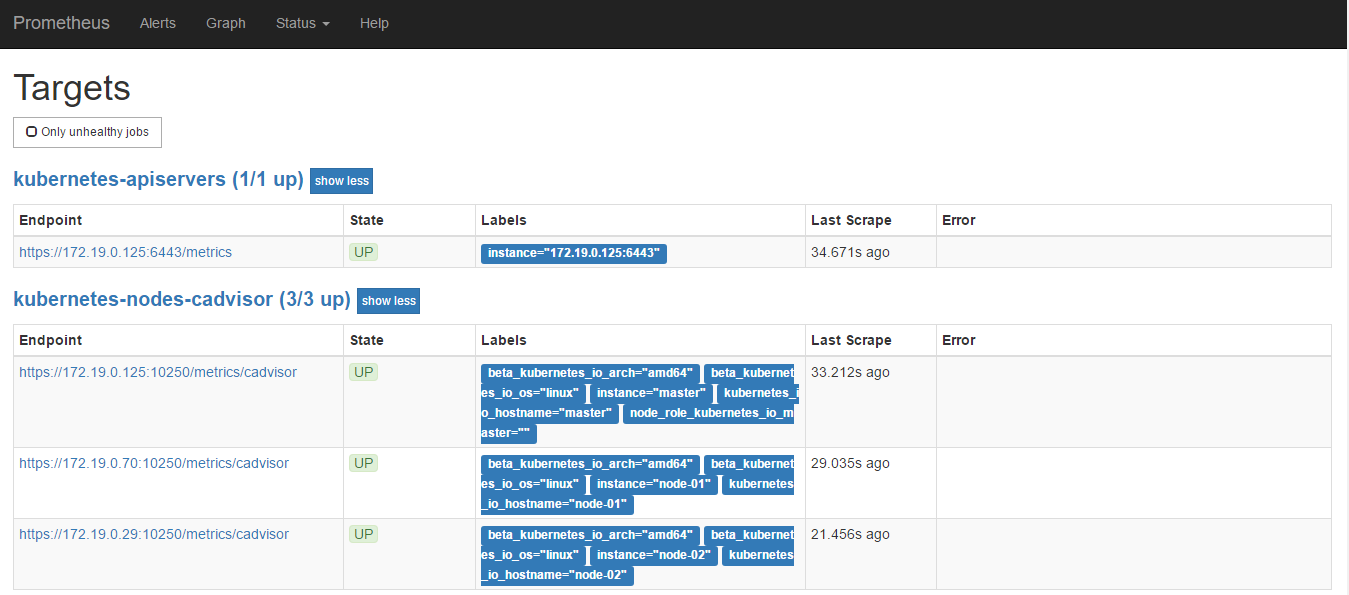

The kubernetes-apiservers job:

- job_name: kubernetes-apiservers
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - action: keep
    regex: default;kubernetes;https
    source_labels:
    - __meta_kubernetes_namespace
    - __meta_kubernetes_service_name
    - __meta_kubernetes_endpoint_port_name
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    insecure_skip_verify: true
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

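Only endpoints whose namespace is default, whose Service name is kubernetes and whose endpoint port name is https are kept, i.e. the API server itself. Prometheus authenticates with the ServiceAccount token (bearer_token_file), and because insecure_skip_verify is true it does not verify the server's TLS certificate.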
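The keep step can be illustrated with a small Python sketch. It models only the documented behaviour (the source label values are joined with `;` and the regex must match the whole joined string); the discovered label values are hypothetical, and Python's `re` stands in for the RE2 engine Prometheus actually uses, which makes no difference for a literal pattern like this one.

```python
import re


def keep(labels, source_labels, regex, separator=";"):
    """Return True if a target survives a `keep` relabel step.

    The values of source_labels are joined with the separator (missing
    labels count as empty strings) and the regex must match the whole
    joined string, because Prometheus anchors relabel regexes.
    """
    value = separator.join(labels.get(name, "") for name in source_labels)
    return re.fullmatch(regex, value) is not None


SOURCES = [
    "__meta_kubernetes_namespace",
    "__meta_kubernetes_service_name",
    "__meta_kubernetes_endpoint_port_name",
]

# Hypothetical endpoints discovered with `role: endpoints`.
apiserver = {
    "__meta_kubernetes_namespace": "default",
    "__meta_kubernetes_service_name": "kubernetes",
    "__meta_kubernetes_endpoint_port_name": "https",
}
coredns = {
    "__meta_kubernetes_namespace": "kube-system",
    "__meta_kubernetes_service_name": "kube-dns",
    "__meta_kubernetes_endpoint_port_name": "metrics",
}

print(keep(apiserver, SOURCES, "default;kubernetes;https"))  # True
print(keep(coredns, SOURCES, "default;kubernetes;https"))    # False
```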

The kubernetes-nodes-kubelet and kubernetes-nodes-cadvisor jobs:

- job_name: kubernetes-nodes-kubelet
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    insecure_skip_verify: true
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
- job_name: kubernetes-nodes-cadvisor
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - target_label: __metrics_path__
    replacement: /metrics/cadvisor
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
    insecure_skip_verify: true
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token

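Both jobs scrape the kubelet directly on its port 10250: the kubelet job reads /metrics, and the cadvisor job rewrites the path to /metrics/cadvisor, where the kubelet exposes its embedded cAdvisor metrics. The labelmap step copies each node's Kubernetes labels onto the scraped series.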
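A minimal Python sketch of what these two jobs do to a discovered node, assuming a hypothetical node at 172.19.0.70 with the label `kubernetes.io/hostname=node-01`. Prometheus replaces characters that are invalid in label names with underscores, so that label is discovered as `__meta_kubernetes_node_label_kubernetes_io_hostname`, which is why dashboard queries filter on `kubernetes_io_hostname`. The `job` and `instance` labels Prometheus also attaches are omitted here.

```python
import re


def labelmap(labels, regex):
    """labelmap: copy each label whose *name* fully matches regex to capture group 1."""
    out = dict(labels)
    for name, value in labels.items():
        m = re.fullmatch(regex, name)
        if m:
            out[m.group(1)] = value
    return out


def finalize(labels):
    """Build the scrape URL, then drop the internal '__'-prefixed labels."""
    url = labels["__scheme__"] + "://" + labels["__address__"] + labels["__metrics_path__"]
    public = {k: v for k, v in labels.items() if not k.startswith("__")}
    return url, public


# Hypothetical node discovered with `role: node` (kubelet port 10250).
node = {
    "__address__": "172.19.0.70:10250",
    "__scheme__": "https",           # from `scheme: https` in the job
    "__metrics_path__": "/metrics",  # Prometheus default
    "__meta_kubernetes_node_label_kubernetes_io_hostname": "node-01",
}

kubelet = labelmap(node, r"__meta_kubernetes_node_label_(.+)")
cadvisor = {**kubelet, "__metrics_path__": "/metrics/cadvisor"}

print(finalize(kubelet))
# ('https://172.19.0.70:10250/metrics', {'kubernetes_io_hostname': 'node-01'})
print(finalize(cadvisor)[0])
# https://172.19.0.70:10250/metrics/cadvisor
```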

The kubernetes-service-endpoints job:

- job_name: kubernetes-service-endpoints
  kubernetes_sd_configs:
  - role: endpoints
  relabel_configs:
  - action: keep
    regex: true
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_scrape
  - action: replace
    regex: (https?)
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_scheme
    target_label: __scheme__
  - action: replace
    regex: (.+)
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_path
    target_label: __metrics_path__
  - action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    source_labels:
    - __address__
    - __meta_kubernetes_service_annotation_prometheus_io_port
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - action: replace
    source_labels:
    - __meta_kubernetes_namespace
    target_label: kubernetes_namespace
  - action: replace
    source_labels:
    - __meta_kubernetes_service_name
    target_label: kubernetes_name

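The meta labels come from the Service's prometheus.io/* annotations:

- __meta_kubernetes_service_annotation_prometheus_io_scrape: whether to scrape this target
- __meta_kubernetes_service_annotation_prometheus_io_scheme: protocol used for scraping (http or https)
- __meta_kubernetes_service_annotation_prometheus_io_path: metrics path
- __meta_kubernetes_service_annotation_prometheus_io_port: port to scrape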

A Service that should be scraped therefore needs annotations such as:

annotations:
  prometheus.io/port: "9153"
  prometheus.io/scrape: "true"
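The `__address__` rewrite is the least obvious step. The Python sketch below reproduces it with the same regex: the discovered address and the port annotation are joined with `;`, and `$1:$2` (written `\1:\2` in Python) keeps the host and swaps in the annotated port. The pod IPs are hypothetical; 9153 is the port from the annotation example above.

```python
import re

ADDRESS_RE = r"([^:]+)(?::\d+)?;(\d+)"


def rewrite_address(address, port_annotation):
    """Emulate the `replace` step that rewrites __address__.

    If the port annotation is missing, the joined value ends in ';',
    the regex does not match, and the address is left unchanged.
    """
    joined = f"{address};{port_annotation or ''}"
    m = re.fullmatch(ADDRESS_RE, joined)
    if m is None:
        return address
    return m.expand(r"\1:\2")  # Prometheus spells this replacement $1:$2


print(rewrite_address("10.244.1.5:53", "9153"))  # 10.244.1.5:9153
print(rewrite_address("10.244.1.5", "9153"))     # 10.244.1.5:9153
print(rewrite_address("10.244.1.5:53", None))    # 10.244.1.5:53
```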

The kubernetes-services job:

- job_name: kubernetes-services
  kubernetes_sd_configs:
  - role: service
  metrics_path: /probe
  params:
    module:
    - http_2xx
  relabel_configs:
  - action: keep
    regex: true
    source_labels:
    - __meta_kubernetes_service_annotation_prometheus_io_probe
  - source_labels:
    - __address__
    target_label: __param_target
  - replacement: blackbox
    target_label: __address__
  - source_labels:
    - __param_target
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - source_labels:
    - __meta_kubernetes_namespace
    target_label: kubernetes_namespace
  - source_labels:
    - __meta_kubernetes_service_name
    target_label: kubernetes_name

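Likewise, a Service must carry the annotation prometheus.io/probe: "true" to be included. This job does not scrape the Service itself: it passes the Service address as the target parameter to a blackbox exporter reachable at the address blackbox, which runs the http_2xx probe against it.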
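A minimal Python sketch of the resulting probe request, assuming a hypothetical Service `my-web` in namespace `default` on port 80 (the service role discovers addresses of the form `<service>.<namespace>.svc:<port>`). Note that the addon manifests listed earlier do not include a blackbox exporter, so one would have to be deployed separately under the name `blackbox` for this job to produce data.

```python
from urllib.parse import urlencode


def blackbox_probe_url(service_address, blackbox_address="blackbox", module="http_2xx"):
    """Mirror the kubernetes-services relabeling for one discovered Service.

    __param_target <- __address__, __address__ <- blackbox,
    instance <- __param_target. Returns (scrape URL, instance label).
    """
    labels = {"__address__": service_address}
    labels["__param_target"] = labels["__address__"]
    labels["__address__"] = blackbox_address
    labels["instance"] = labels["__param_target"]
    # metrics_path is /probe; params.module and __param_target become query args
    query = urlencode({"module": module, "target": labels["__param_target"]})
    return f"http://{labels['__address__']}/probe?{query}", labels["instance"]


print(blackbox_probe_url("my-web.default.svc:80"))
# ('http://blackbox/probe?module=http_2xx&target=my-web.default.svc%3A80', 'my-web.default.svc:80')
```

Keeping the Service address in `instance` means the probe results are labelled with the Service being checked rather than with the blackbox exporter's address.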

The kubernetes-pods job:

- job_name: kubernetes-pods
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - action: keep
    regex: true
    source_labels:
    - __meta_kubernetes_pod_annotation_prometheus_io_scrape
  - action: replace
    regex: (.+)
    source_labels:
    - __meta_kubernetes_pod_annotation_prometheus_io_path
    target_label: __metrics_path__
  - action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    source_labels:
    - __address__
    - __meta_kubernetes_pod_annotation_prometheus_io_port
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - action: replace
    source_labels:
    - __meta_kubernetes_namespace
    target_label: kubernetes_namespace
  - action: replace
    source_labels:
    - __meta_kubernetes_pod_name
    target_label: kubernetes_pod_name

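Likewise, Pods opt in with the annotation prometheus.io/scrape: "true", and can set prometheus.io/path and prometheus.io/port to override the metrics path and port.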
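The whole pod pipeline can be sketched in Python as follows. This is a simplification under stated assumptions: the labelmap step is left out, the pods are hypothetical, and the scheme is plain http because this job sets none. It highlights one detail: the `(.+)` regex cannot match an empty value, so a Pod without a `prometheus.io/path` annotation keeps the default `/metrics`.

```python
import re


def relabel_pod(meta):
    """Simplified kubernetes-pods pipeline (labelmap step omitted).

    Returns None when the pod is dropped, otherwise (scrape_url, labels).
    """
    def ann(name):
        return meta.get("__meta_kubernetes_pod_annotation_prometheus_io_" + name, "")

    # keep: only pods annotated prometheus.io/scrape: "true"
    if not re.fullmatch(r"true", ann("scrape")):
        return None

    address, path = meta["__address__"], "/metrics"  # /metrics is the default
    # regex (.+) does not match an empty value, so a missing path
    # annotation leaves the default untouched
    if re.fullmatch(r"(.+)", ann("path")):
        path = ann("path")
    # same address rewrite as for Services: host plus annotated port
    m = re.fullmatch(r"([^:]+)(?::\d+)?;(\d+)", address + ";" + ann("port"))
    if m:
        address = m.expand(r"\1:\2")

    labels = {
        "kubernetes_namespace": meta.get("__meta_kubernetes_namespace", ""),
        "kubernetes_pod_name": meta.get("__meta_kubernetes_pod_name", ""),
    }
    return f"http://{address}{path}", labels


# Hypothetical discovered pods.
annotated = {
    "__address__": "10.244.2.7:8080",
    "__meta_kubernetes_namespace": "default",
    "__meta_kubernetes_pod_name": "demo-0",
    "__meta_kubernetes_pod_annotation_prometheus_io_scrape": "true",
    "__meta_kubernetes_pod_annotation_prometheus_io_port": "8081",
    "__meta_kubernetes_pod_annotation_prometheus_io_path": "/actuator/prometheus",
}
plain = {"__address__": "10.244.2.8:8080"}

print(relabel_pod(annotated))
print(relabel_pod(plain))  # None
```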

kubernetes/cluster/addons/prometheus/prometheus-statefulset.yaml

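Prometheus is deployed in the kube-system namespace as a StatefulSet with a single replica. The Pod contains an init container that sets ownership of the data directory, a container that reloads Prometheus whenever the ConfigMap changes, and the Prometheus container itself (with health checks). Storage is requested through a StorageClass, so storageClassName must be changed to one that exists in your cluster.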

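It can of course be switched to a statically provisioned PV instead, referencing an existing PersistentVolumeClaim: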

volumes:
- name: config-volume
  configMap:
    name: prometheus-config
- name: prometheus-data
  persistentVolumeClaim:
    claimName: prometheus-data

For testing, an emptyDir is enough (its data is lost when the Pod is deleted):

volumes:
- name: config-volume
  configMap:
    name: prometheus-config
- name: prometheus-data
  emptyDir: {}

kubernetes/cluster/addons/prometheus/prometheus-service.yaml

The Service exposes Prometheus; here it is changed to type NodePort so it can also be reached from outside the cluster:

spec:
  type: NodePort
  ports:
  - name: http
    nodePort: 30000
    port: 9090
    protocol: TCP
    targetPort: 9090
  selector:
    k8s-app: prometheus

Deploy:

$ kubectl apply -f kubernetes/cluster/addons/prometheus/prometheus-rbac.yaml
$ kubectl apply -f kubernetes/cluster/addons/prometheus/prometheus-configmap.yaml
$ kubectl apply -f kubernetes/cluster/addons/prometheus/prometheus-statefulset.yaml
$ kubectl apply -f kubernetes/cluster/addons/prometheus/prometheus-service.yaml
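Once the Service is up, the deployment can be checked through the NodePort with Prometheus's HTTP API (`/api/v1/query`). The Python sketch below uses only the standard library; the node IP is a hypothetical placeholder and 30000 is the nodePort configured above.

```python
import json
import urllib.request
from urllib.parse import urlencode


def instant_query_url(node_ip, query, node_port=30000):
    """URL of the Prometheus instant-query endpoint, reached through the NodePort."""
    return f"http://{node_ip}:{node_port}/api/v1/query?" + urlencode({"query": query})


def instant_query(node_ip, query, node_port=30000, timeout=5):
    """Run a PromQL instant query and return the list of result series."""
    with urllib.request.urlopen(instant_query_url(node_ip, query, node_port), timeout=timeout) as resp:
        body = json.load(resp)
    if body.get("status") != "success":
        raise RuntimeError(f"query failed: {body}")
    return body["data"]["result"]


print(instant_query_url("172.19.0.70", "up"))
# http://172.19.0.70:30000/api/v1/query?query=up
```

Against a live cluster, `instant_query(node_ip, "up")` should return one entry per scrape target; each entry's `value` is a `[timestamp, "1"]` pair for a healthy target and `"0"` for one that is down.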

Grafana

Grafana is deployed as a single-replica StatefulSet plus a NodePort Service:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: grafana
  namespace: kube-system
spec:
  serviceName: "grafana"
  replicas: 1
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      containers:
      - name: grafana
        image: grafana/grafana
        ports:
        - containerPort: 3000
          protocol: TCP
        resources:
          limits:
            cpu: 500m
            memory: 512Mi
          requests:
            cpu: 500m
            memory: 512Mi
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-system
spec:
  type: NodePort
  ports:
  - port: 80
    targetPort: 3000
    nodePort: 30007
  selector:
    app: grafana

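In Grafana, add a Prometheus data source with the URL http://prometheus:9090 (Grafana runs in the same kube-system namespace, so the Service name prometheus resolves directly).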

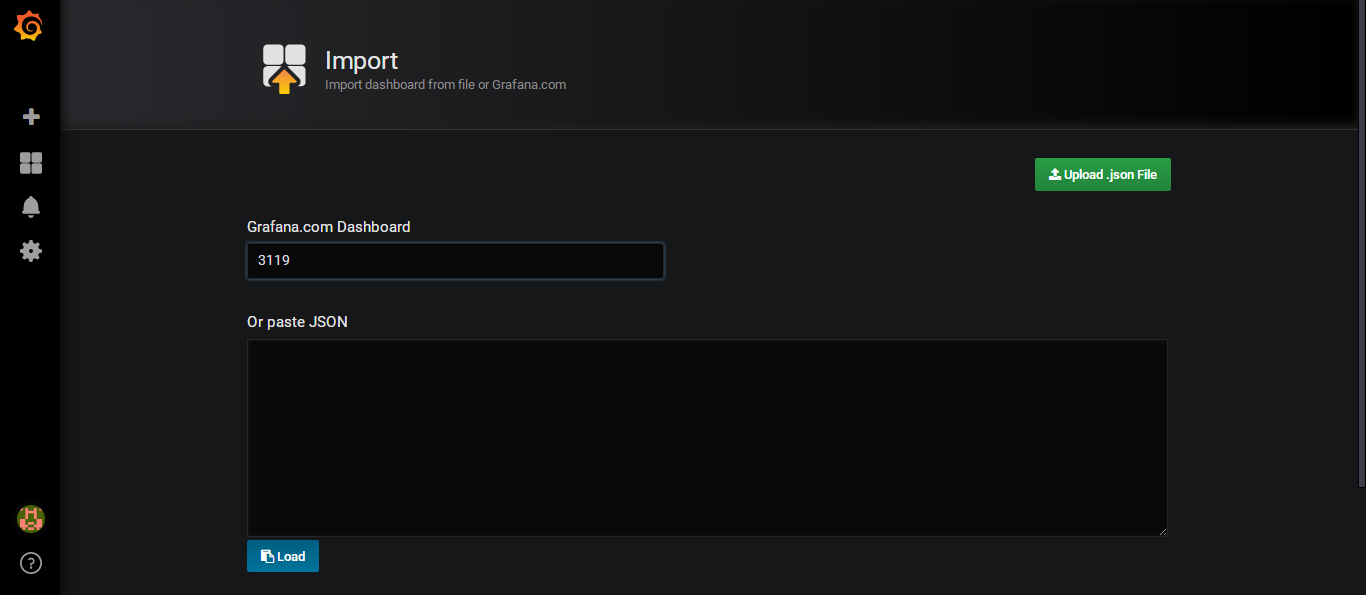

Then import dashboards. Recommended dashboard IDs:

- Cluster resources: 3119
- Resource object status: 6417
- Node monitoring: 9276

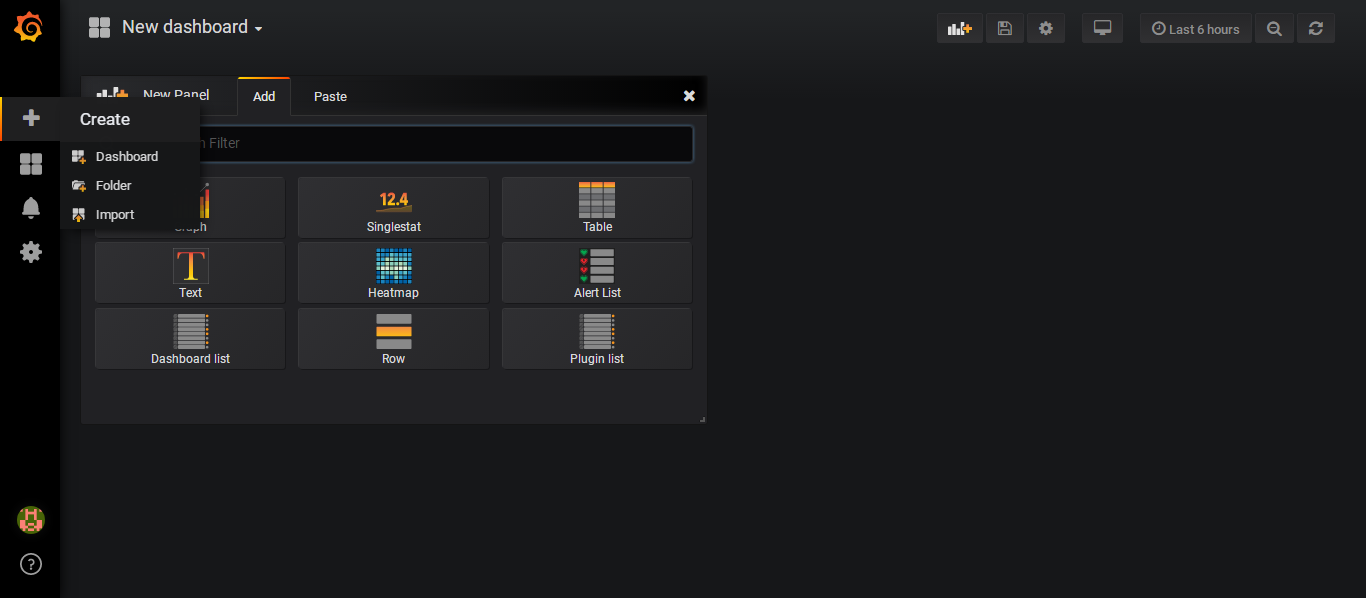

Open the Dashboards page:

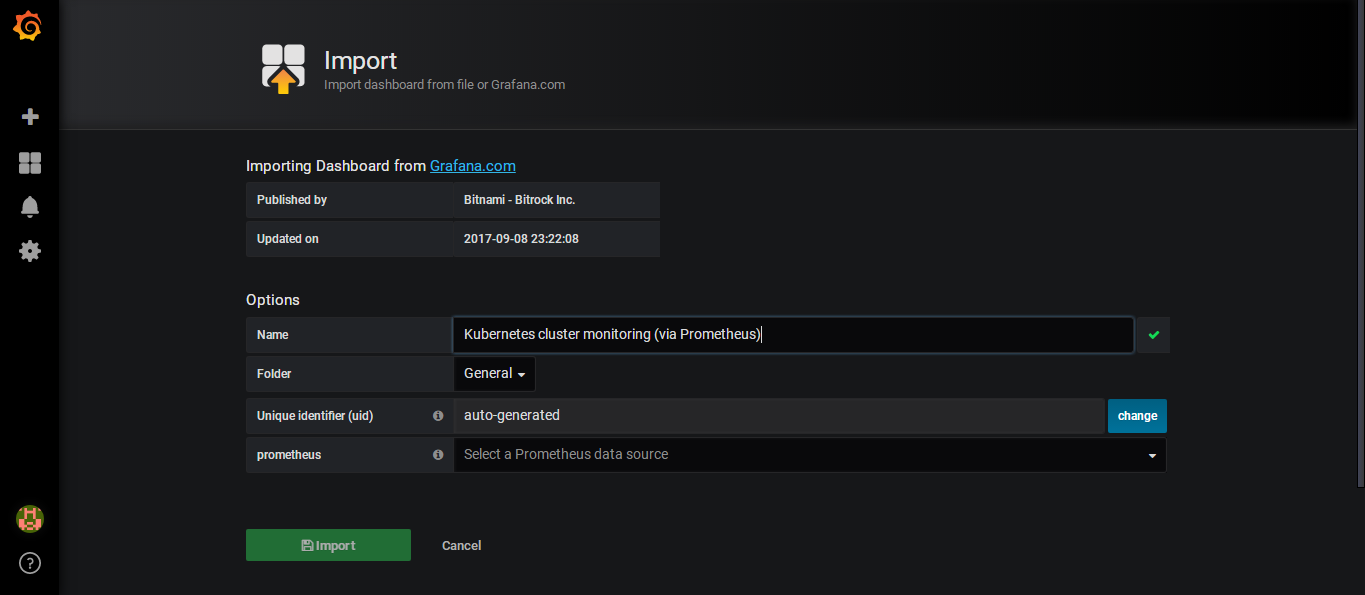

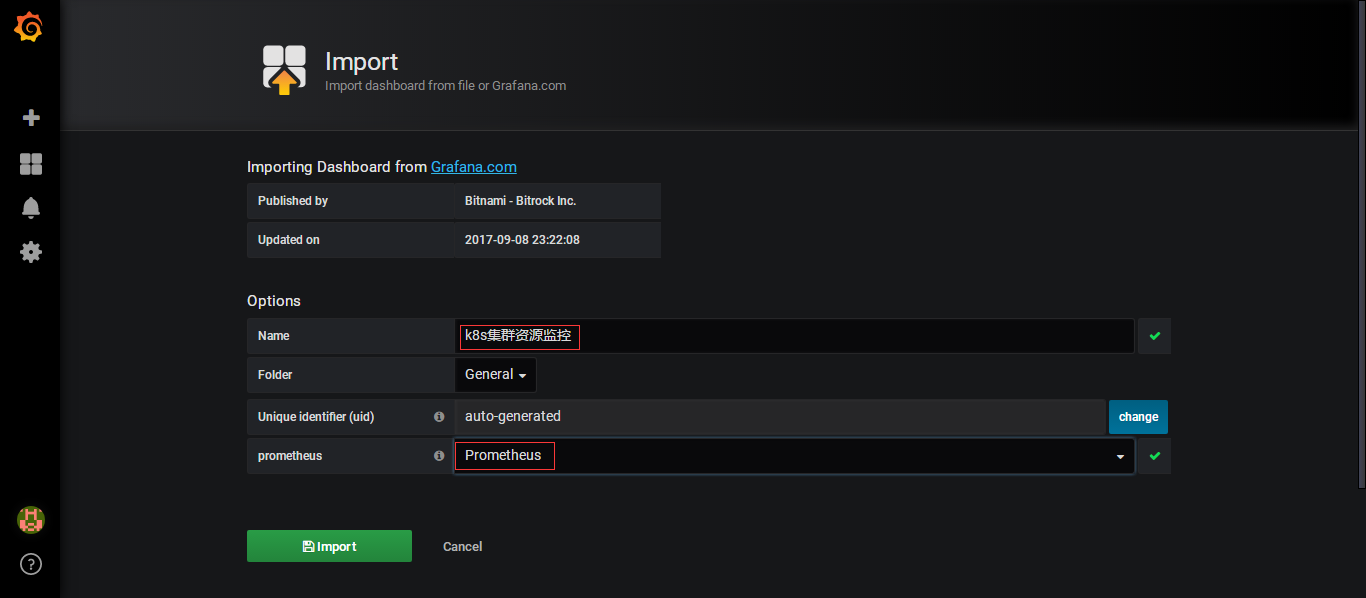

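Choose Import and enter 3119; the dashboard definition is then fetched automatically.

The Name can be changed as you like; here I changed it to "k8s cluster resource monitoring".

The dashboard is then created: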

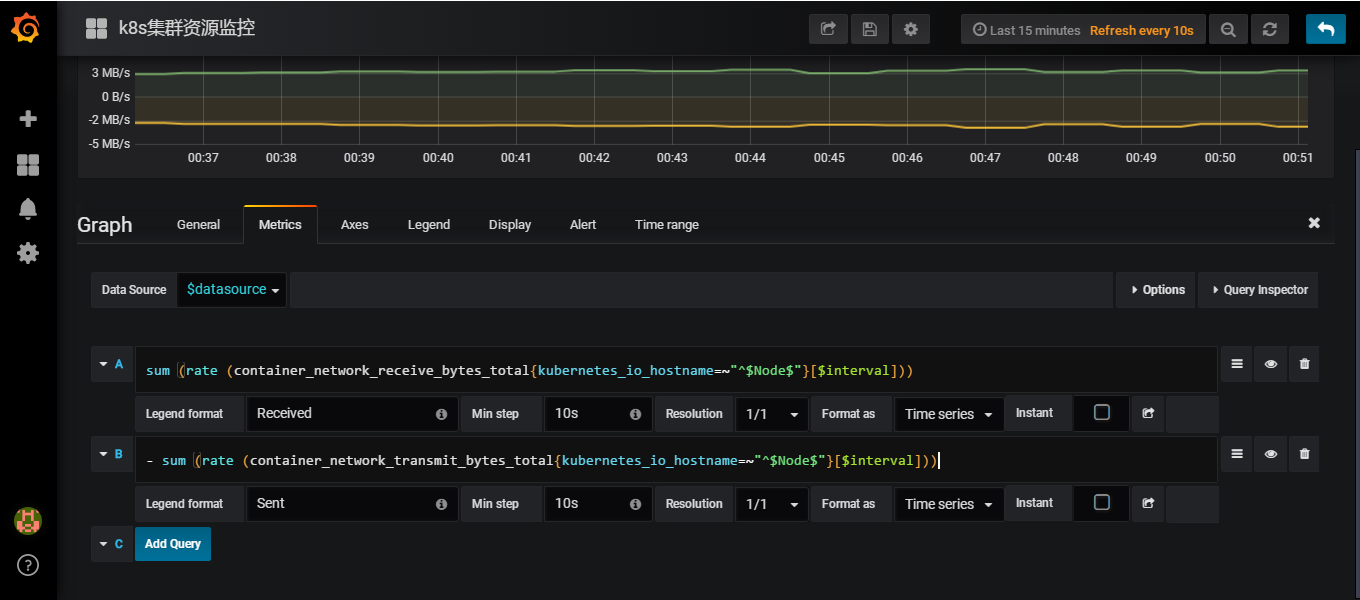

Edit a panel to see the PromQL it uses. For the network traffic panel the queries are:

sum (rate (container_network_receive_bytes_total{kubernetes_io_hostname=~"^$Node$"}[$interval]))
sum (rate (container_network_transmit_bytes_total{kubernetes_io_hostname=~"^$Node$"}[$interval]))
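rate() turns a counter such as container_network_receive_bytes_total into a per-second rate, and sum() adds the per-container rates together. The Python sketch below is a simplification, not Prometheus's exact algorithm: it treats a drop in value as a counter reset the same way Prometheus does, but skips the extrapolation to the window edges that the real rate() performs. The sample values are made up.

```python
def simple_rate(samples):
    """Per-second increase of a counter from (timestamp_seconds, value) samples.

    A drop in value is treated as a counter reset (the counter restarted
    from zero), as Prometheus does. Unlike Prometheus, the result is not
    extrapolated to the edges of the range window.
    """
    if len(samples) < 2:
        return None
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        increase += cur - prev if cur >= prev else cur
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed


# Hypothetical container_network_receive_bytes_total samples, 15 s apart.
container_a = [(0, 1000), (15, 2500), (30, 4000)]
container_b = [(0, 5000), (15, 600), (30, 2100)]  # counter reset at t=15

# sum(rate(...)) adds the per-series rates together.
print(sum(simple_rate(s) for s in (container_a, container_b)))  # 170.0
```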

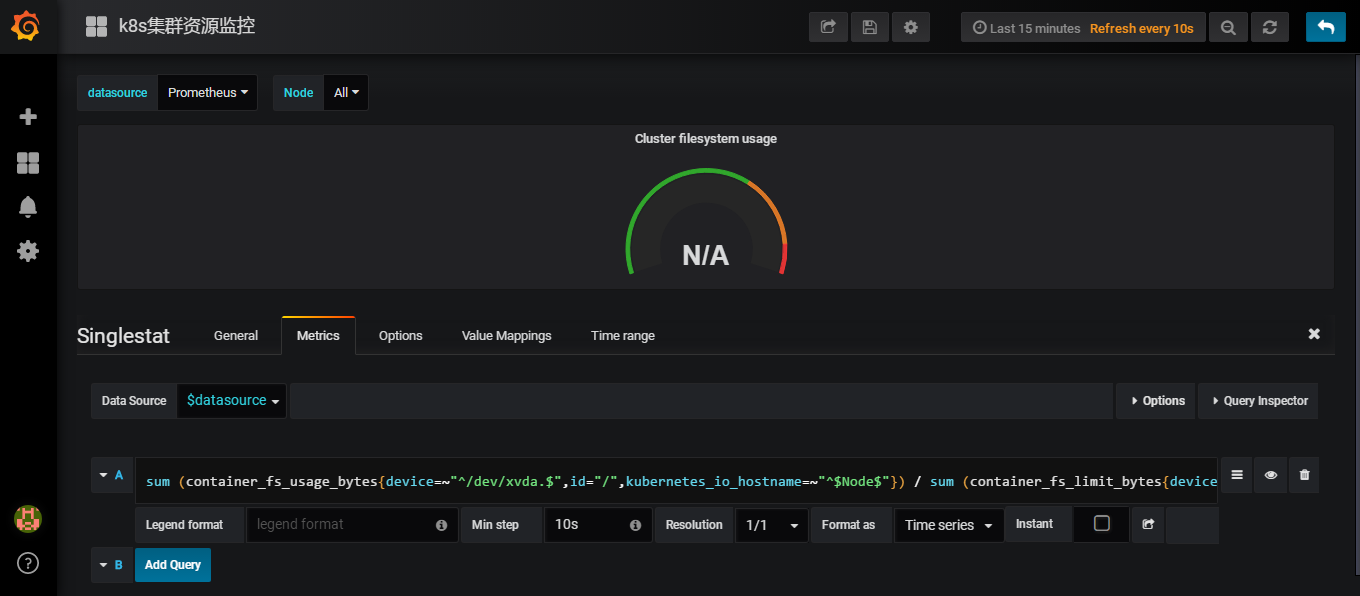

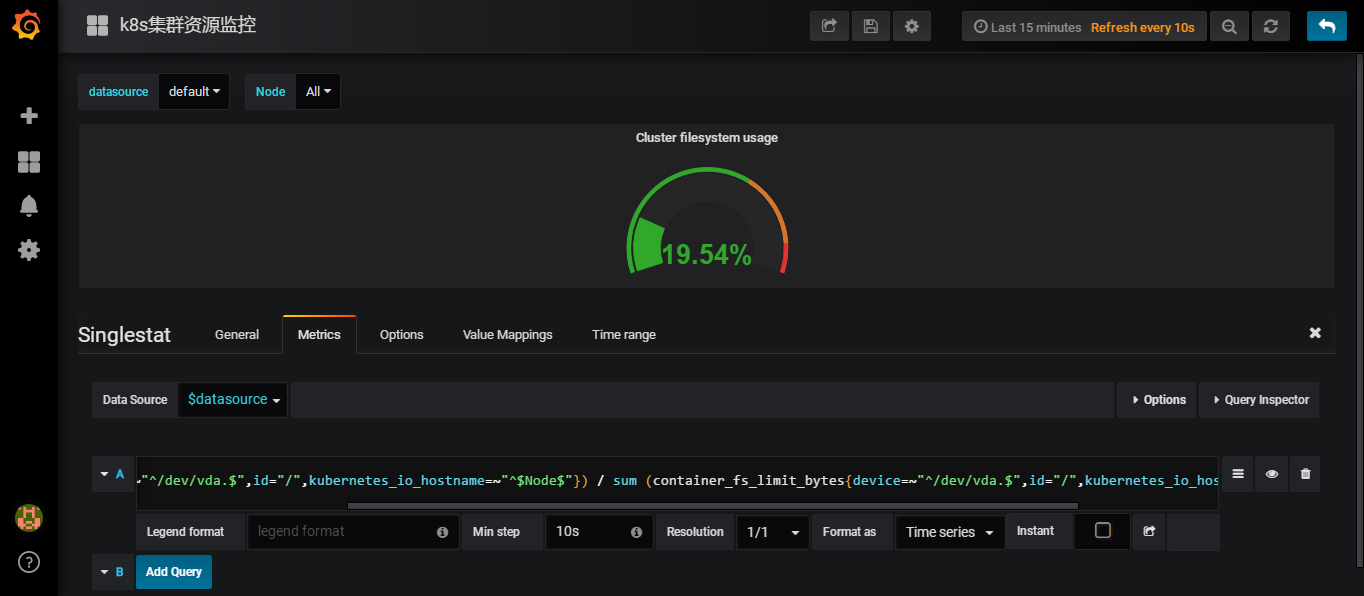

Likewise, look at the Cluster filesystem usage panel. Its PromQL is:

sum (container_fs_usage_bytes{device=~"^/dev/xvda.$",id="/",kubernetes_io_hostname=~"^$Node$"}) / sum (container_fs_limit_bytes{device=~"^/dev/xvda.$",id="/",kubernetes_io_hostname=~"^$Node$"}) * 100

Our hosts have no disk with that name: on these cloud hosts the disk is /dev/vda, so replace /dev/xvda with /dev/vda in the query.
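Why the edit matters can be checked with a quick regex test in Python. PromQL `=~` matchers are fully anchored, so `re.fullmatch` mirrors them; the `.` matches exactly one character, typically the partition number. The device names below are hypothetical, so check the real ones on your nodes with `df` or `lsblk`.

```python
import re

OLD = r"^/dev/xvda.$"  # pattern in dashboard 3119
NEW = r"^/dev/vda.$"   # after replacing /dev/xvda with /dev/vda


def matching(pattern, devices):
    """Devices a PromQL =~ matcher with this pattern would select."""
    return [d for d in devices if re.fullmatch(pattern, d)]


devices = ["/dev/vda1", "/dev/xvda1", "/dev/vda", "/dev/vdb1"]
print(matching(OLD, devices))  # ['/dev/xvda1']
print(matching(NEW, devices))  # ['/dev/vda1']
```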

Using exporters

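For node monitoring we still use node_exporter. Deployed in the cluster as a DaemonSet (node-exporter-ds.yml), its Pod spec contains: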

hostNetwork: true
hostPID: true
volumes:
- name: proc
  hostPath:
    path: /proc
- name: sys
  hostPath:
    path: /sys

It uses the host's network and PID namespaces and mounts the host's /proc and /sys.

However, many metrics cannot be collected with this kind of deployment, so installing node_exporter directly on each node is recommended:

# Run as root in /root: ExecStart below expects the archive to be extracted there
$ wget https://github.com/prometheus/node_exporter/releases/download/v0.17.0/node_exporter-0.17.0.linux-amd64.tar.gz
$ tar xf node_exporter-0.17.0.linux-amd64.tar.gz
$ cd node_exporter-0.17.0.linux-amd64/
$ cat <<EOF >/usr/lib/systemd/system/node_exporter.service
[Unit]
Description=Prometheus node_exporter
Documentation=https://prometheus.io/docs/

[Service]
Restart=on-failure
ExecStart=/root/node_exporter-0.17.0.linux-amd64/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(docker|kubelet|kube-proxy|flanneld).service

[Install]
WantedBy=multi-user.target
EOF
$ systemctl daemon-reload
$ systemctl enable node_exporter
$ systemctl restart node_exporter
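A quick Python check of which unit names the `--collector.systemd.unit-whitelist` pattern selects. It assumes the exporter matches the pattern against the whole unit name (modelled here with `re.fullmatch`); the unit names are examples. Because the `.` is unescaped it matches any character, so escaping it as `\.` gives a stricter pattern.

```python
import re

# Pattern passed to --collector.systemd.unit-whitelist above.
WHITELIST = r"(docker|kubelet|kube-proxy|flanneld).service"
STRICT = r"(docker|kubelet|kube-proxy|flanneld)\.service"


def collected(unit, pattern=WHITELIST):
    """Assumes the exporter matches the pattern against the whole unit name."""
    return re.fullmatch(pattern, unit) is not None


for unit in ["docker.service", "kubelet.service", "sshd.service", "kubelet-service"]:
    print(unit, collected(unit), collected(unit, STRICT))
```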

Then edit kubernetes/cluster/addons/prometheus/prometheus-configmap.yaml and add a scrape job for the node_exporter instances:

- job_name: kubernetes-node
  static_configs:
  - targets:
    # one <node-ip>:9100 entry per node running node_exporter
    - 172.19.0.70:9100

After kubectl apply, the reload container in the Prometheus Pod detects the changed configuration file and reloads Prometheus:

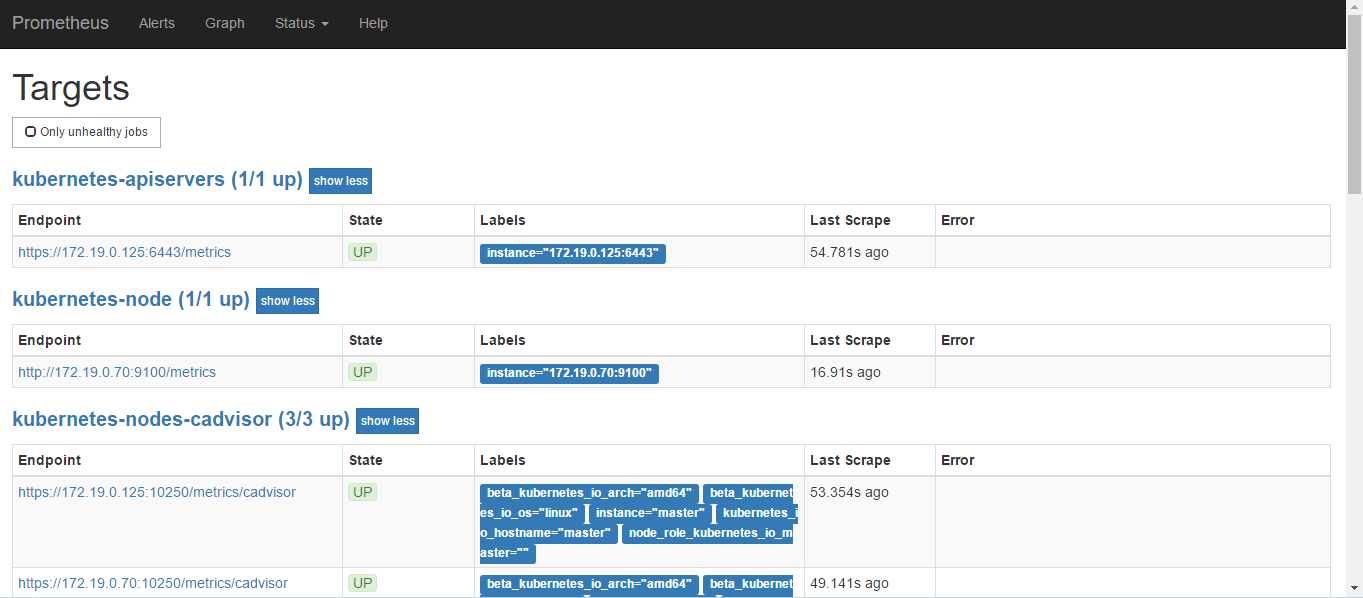

The kubernetes-node job then appears on the Targets page of the web UI.

Next, import dashboard 9276 in Grafana, name it "kubernetes-node monitoring", and select Prometheus as the data source.

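If some panels show no data, the cause is most likely their PromQL, for example queries written for metric names that differ from those your node_exporter version exposes.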

Monitoring Kubernetes resource objects

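The main container, kube-state-metrics, collects information about resource objects; its configuration is likewise supplied through a corresponding ConfigMap.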

$ kubectl apply -f kubernetes/cluster/addons/prometheus/kube-state-metrics-rbac.yaml
$ kubectl apply -f kubernetes/cluster/addons/prometheus/kube-state-metrics-deployment.yaml
$ kubectl apply -f kubernetes/cluster/addons/prometheus/kube-state-metrics-service.yaml

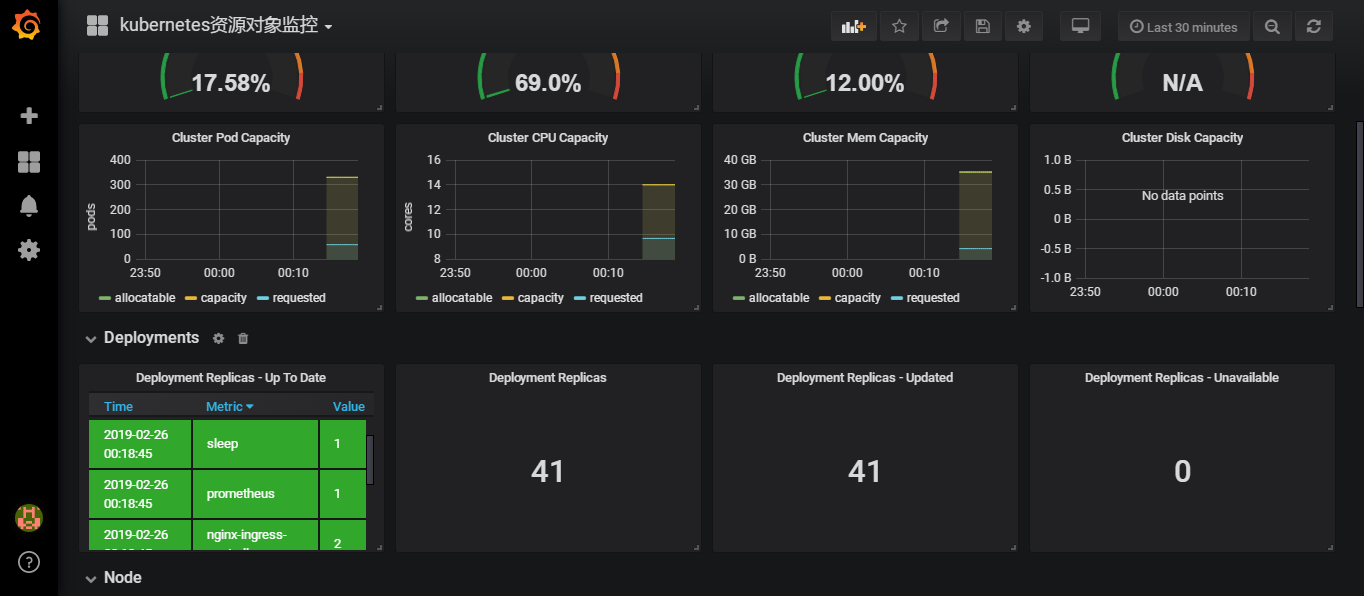

Then import dashboard 6417 in Grafana, name it "kubernetes resource object monitoring", and select Prometheus as the data source.
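To see the kind of data such a dashboard is built on, the Python sketch below counts Pods per phase from `kube_pod_status_phase`, a metric kube-state-metrics exposes with one series per Pod and phase (value 1 for the current phase). The exposition text is a hypothetical excerpt, and the parser is deliberately simplified: it skips comments and does not handle escaped quotes in label values.

```python
import re
from collections import Counter

SERIES_RE = re.compile(r"^(\w+)\{(.*)\}\s+(\S+)$")
LABEL_RE = re.compile(r'(\w+)="([^"]*)"')


def pods_by_phase(exposition_text):
    """Count pods per phase from kube_pod_status_phase samples.

    Roughly what `sum by (phase) (kube_pod_status_phase)` computes in PromQL.
    """
    counts = Counter()
    for line in exposition_text.splitlines():
        m = SERIES_RE.match(line.strip())
        if not m or m.group(1) != "kube_pod_status_phase":
            continue
        labels = dict(LABEL_RE.findall(m.group(2)))
        if float(m.group(3)) == 1:
            counts[labels["phase"]] += 1
    return counts


# Hypothetical excerpt of a kube-state-metrics /metrics response.
SAMPLE = """\
# HELP kube_pod_status_phase The pods current phase.
# TYPE kube_pod_status_phase gauge
kube_pod_status_phase{namespace="kube-system",pod="prometheus-0",phase="Running"} 1
kube_pod_status_phase{namespace="kube-system",pod="prometheus-0",phase="Pending"} 0
kube_pod_status_phase{namespace="default",pod="web-1",phase="Pending"} 1
"""

print(pods_by_phase(SAMPLE))  # Counter({'Running': 1, 'Pending': 1})
```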

alertmanager

The addon includes alertmanager-deployment.yaml. If no storage is available, simply use an emptyDir:

volumes:
- name: config-volume
  configMap:
    name: alertmanager-config
- name: storage-volume
  emptyDir: {}

Create the objects Alertmanager needs:

$ kubectl apply -f alertmanager-configmap.yaml
$ kubectl apply -f alertmanager-deployment.yaml
$ kubectl apply -f alertmanager-service.yaml
$ kubectl get deployments -n kube-system
NAME           READY   UP-TO-DATE   AVAILABLE   AGE
alertmanager   1/1     1            1           5m50s

Edit prometheus-configmap.yaml so that the alerting section points at the Alertmanager just created:

alerting:
  alertmanagers:
  - static_configs:
    - targets: ["alertmanager:80"]

Then point rule_files at the rules directory:

rule_files:
- /etc/config/rules/*.rules

From now on, rules are mounted into the /etc/config/rules directory as a volume. The relevant parts of

prometheus-statefulset.yaml

...
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
        - name: prometheus-data
          mountPath: /data
          subPath: ""
        # must match the name of the prometheus-rules volume below
        - name: prometheus-rules
          mountPath: /etc/config/rules
      terminationGracePeriodSeconds: 300
      volumes:
      - name: config-volume
        configMap:
          name: prometheus-config
      - name: prometheus-data
        emptyDir: {}
      - name: prometheus-rules
        configMap:
          name: prometheus-rules

The ConfigMap holding the rules, prometheus-rules.yaml:

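apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-rules
  namespace: kube-system
data:
  # the key must end in .rules to match rule_files: /etc/config/rules/*.rules
  general.rules: |
    groups:
    - name: general.rules
      rules:
      # Alert for any instance that is unreachable for >5 minutes.
      - alert: InstanceDown
        expr: up == 0
        for: 5m
        labels:
          severity: error
        annotations:
          summary: "Instance {{ $labels.instance }} down"
          description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 5 minutes."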
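The `for` clause is what separates a brief blip from a real outage: while `up == 0` holds, the alert is first pending and only fires once the condition has held for the whole duration; a single successful evaluation resets it. The Python sketch below models that lifecycle for one series under simplifying assumptions (a fixed evaluation list, no `keep_firing_for` or resolved-notification handling), using the 5-minute duration from the rule's comment and description and a hypothetical 1-minute evaluation interval.

```python
def alert_state(samples, for_seconds):
    """Simplified alert lifecycle for one series.

    samples: (timestamp_seconds, expr_is_true) pairs in evaluation order.
    Returns the state after each evaluation: inactive, pending or firing.
    """
    states = []
    active_since = None
    for ts, cond in samples:
        if not cond:
            active_since = None  # condition cleared: start over
            states.append("inactive")
            continue
        if active_since is None:
            active_since = ts
        states.append("firing" if ts - active_since >= for_seconds else "pending")
    return states


# Hypothetical evaluations every 60 s; the target goes down at t=60.
SAMPLES = [(0, False), (60, True), (120, True), (180, True),
           (240, True), (300, True), (360, True)]
print(alert_state(SAMPLES, 300))
# ['inactive', 'pending', 'pending', 'pending', 'pending', 'pending', 'firing']
```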

Apply the files:

$ kubectl apply -f prometheus-rules.yaml
$ kubectl apply -f prometheus-configmap.yaml
$ kubectl apply -f prometheus-statefulset.yaml

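Finally, Alertmanager's own notification settings (receivers and routing) still need to be configured separately, in alertmanager-configmap.yaml.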