Istio Mixer Adapters

Access control with the Denier adapter

First, define a Handler. Each adapter has its own configuration; refer to the official adapter documentation for the details.

apiVersion: "config.istio.io/v1alpha2"
kind: denier
metadata:
  name: code-7
spec:
  status:
    code: 7
    message: Not allowed

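The handler carries an error code and a message (code 7 is the gRPC PERMISSION_DENIED status). When a precondition check is routed to this handler by a rule, the handler is invoked and returns this status.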

Because we only want to match traffic through the rule's match field, we can use the checknothing template, which does not inspect any of the incoming data:

apiVersion: "config.istio.io/v1alpha2"
kind: checknothing
metadata:
  name: palce-holder
spec:

Finally, create a Rule object that connects the two:

apiVersion: "config.istio.io/v1alpha2"
kind: rule
metadata:
  name: deny-sleep-v1-to-httpbin
spec:
  match: destination.labels["app"] == "httpbin" && source.labels["app"] == "sleep" && source.labels["version"] == "v1"
  actions:
  - handler: code-7.denier
    instances: [ palce-holder.checknothing ]
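To make the control flow concrete, here is a small Python model of what the precondition check does with this configuration. It is a hypothetical simulation, not Mixer's implementation: attributes are flattened into dict keys such as `source.labels.version` purely for illustration, and the names `rule_matches` and `precondition_check` are invented.

```python
# Hypothetical simulation of the deny-sleep-v1-to-httpbin rule; not Mixer's code.
# Attributes are flattened into dict keys such as "source.labels.version".
from dataclasses import dataclass
from typing import Dict, Optional

PERMISSION_DENIED = 7  # gRPC status code configured in the code-7 denier


@dataclass
class Status:
    code: int
    message: str


def rule_matches(attrs: Dict[str, str]) -> bool:
    """Mirror of the rule's match expression."""
    return (
        attrs.get("destination.labels.app") == "httpbin"
        and attrs.get("source.labels.app") == "sleep"
        and attrs.get("source.labels.version") == "v1"
    )


def precondition_check(attrs: Dict[str, str]) -> Optional[Status]:
    """Return the denier's status when the rule matches, otherwise None (allowed)."""
    if rule_matches(attrs):
        # checknothing supplies no data; the denier just returns its configured status
        return Status(PERMISSION_DENIED, "Not allowed")
    return None


v1 = {"destination.labels.app": "httpbin", "source.labels.app": "sleep",
      "source.labels.version": "v1"}
v2 = {**v1, "source.labels.version": "v2"}
print(precondition_check(v1))  # Status(code=7, message='Not allowed')
print(precondition_check(v2))  # None
```

Only requests whose attributes satisfy the whole match expression reach the denier; everything else passes the check untouched.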

Verify it:

$ export SOURCE_POD2=$(kubectl get pod -l app=sleep,version=v2 -o jsonpath={.items..metadata.name})
$ kubectl exec -it -c sleep $SOURCE_POD2 bash
bash-4.4# http --body http://httpbin:8000/ip
{
    "origin": "127.0.0.1"
}
bash-4.4# exit
$ export SOURCE_POD1=$(kubectl get pod -l app=sleep,version=v1 -o jsonpath={.items..metadata.name})
$ kubectl exec -it -c sleep $SOURCE_POD1 bash
bash-4.4# http http://httpbin:8000/ip
HTTP/1.1 403 Forbidden
content-length: 51
content-type: text/plain
date: Mon, 04 Mar 2019 04:33:36 GMT
server: envoy
x-envoy-upstream-service-time: 3

PERMISSION_DENIED:code-7.denier.default:Not allowed

Requests from sleep v1 are rejected with 403, while sleep v2 can still access httpbin normally.

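Access control with the Listchecker adapter

To continue testing, first delete the three objects created above.

Compared with the Denier adapter, the Listchecker adapter is more flexible.

The Listchecker adapter maintains a list, which can be declared either a whitelist or a blacklist.

Create the Handler object: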

apiVersion: config.istio.io/v1alpha2
kind: listchecker
metadata:
  name: chaos
spec:
  overrides: ["v1", "v3"]
  blacklist: true

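The overrides field holds the list to check against, and blacklist: true declares it a blacklist. Alternatively, the providerUrl field can reference a remote list that is refreshed periodically. To use a whitelist instead, set blacklist to false.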
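The blacklist/whitelist switch boils down to a single membership test. Below is a minimal Python sketch, assuming exact string matching against the overrides list; the function name `list_check` is hypothetical, and the sketch ignores remote lists fetched through providerUrl.

```python
# Hypothetical model of the Listchecker decision; not the adapter's actual code.
from typing import Iterable


def list_check(value: str, entries: Iterable[str], blacklist: bool) -> bool:
    """Return True if the request is allowed.

    blacklist=True:  values found in the list are rejected.
    blacklist=False: only values found in the list are accepted (whitelist).
    """
    listed = value in set(entries)
    return not listed if blacklist else listed


overrides = ["v1", "v3"]  # same list as the chaos handler
for version in ("v1", "v2", "v3"):
    verdict = "allowed" if list_check(version, overrides, blacklist=True) else "denied"
    print(version, verdict)  # v1 denied, v2 allowed, v3 denied
```

Flipping `blacklist` to `False` inverts the outcome for every value, which is why a single boolean is enough to switch modes.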

Use the listentry template to extract a value from the input data and pass it to the Listchecker adapter:

apiVersion: config.istio.io/v1alpha2
kind: listentry
metadata:
  name: version
spec:
  value: source.labels["version"]

The value field of listentry is an expression that extracts the content to be checked from the input attributes.

Then connect the instance and the handler with a rule:

apiVersion: "config.istio.io/v1alpha2"
kind: rule
metadata:
  name: deny-sleep-v1-to-httpbin
spec:
  match: destination.labels["app"] == "httpbin"
  actions:
  - handler: chaos.listchecker
    instances: [ version.listentry ]

Verify it:

$ export SOURCE_POD2=$(kubectl get pod -l app=sleep,version=v2 -o jsonpath={.items..metadata.name})
$ export SOURCE_POD1=$(kubectl get pod -l app=sleep,version=v1 -o jsonpath={.items..metadata.name})
$ kubectl exec -it -c sleep $SOURCE_POD2 bash
bash-4.4# http --body http://httpbin:8000/ip
{
    "origin": "127.0.0.1"
}
bash-4.4# exit
$ kubectl exec -it -c sleep $SOURCE_POD1 bash
bash-4.4# http --body http://httpbin:8000/ip
PERMISSION_DENIED:chaos.listchecker.default:v1 is blacklisted

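Rate limiting with the MemQuota adapter

Rate limiting caps the traffic a specific service receives, protecting it from overload.

The adapter evaluates quota during the precondition check: based on the rules configured for particular traffic, it decides whether requests have exceeded the allotted amount and throttles them if they have.

Rate limiting requires configuration on both the Mixer side and the client side. The Mixer side implements the limiting logic, and the client side uses QuotaSpec to define how much quota each request consumes.

Mixer-side objects

Define the MemQuota handler. The default rule allows a maxAmount of 20 per 10-second window (validDuration: 10s), and a separate override for the httpbin service lowers this to 1 per 5 seconds.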

apiVersion: "config.istio.io/v1alpha2"
kind: memquota
metadata:
  name: handler
spec:
  quotas:
  - name: dest-quota.quota.default
    maxAmount: 20
    validDuration: 10s
    overrides:
    - dimensions:
        destinations: httpbin
      maxAmount: 1
      validDuration: 5s

Define an instance based on the quota template:

apiVersion: "config.istio.io/v1alpha2"
kind: quota
metadata:
  name: dest-quota
spec:
  dimensions:
    destination: destination.labels["app"] | destination.service | "unknown"

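The destination dimension is an attribute expression evaluated left to right: if the target has an app label, its value is used; if not, the destination service name is used; if neither is available, the value is "unknown".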
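The `|` operator acts as a "first present value wins" fallback. The Python sketch below models that evaluation order for this one expression; it assumes attributes arrive as a plain dict, and the helpers `first_present` and `destination_dimension` are hypothetical, not part of Mixer.

```python
# Hypothetical model of the `|` fallback operator in Mixer attribute expressions.
from typing import Any, Callable, Dict, Optional

Attrs = Dict[str, Any]


def first_present(attrs: Attrs, *getters: Callable[[Attrs], Optional[Any]], default: Any) -> Any:
    """Evaluate getters left to right and return the first value that is present."""
    for get in getters:
        value = get(attrs)
        if value is not None:
            return value
    return default  # the trailing literal, e.g. "unknown"


def destination_dimension(attrs: Attrs) -> Any:
    # destination.labels["app"] | destination.service | "unknown"
    return first_present(
        attrs,
        lambda a: a.get("destination.labels", {}).get("app"),
        lambda a: a.get("destination.service"),
        default="unknown",
    )


print(destination_dimension({"destination.labels": {"app": "httpbin"}}))  # httpbin
print(destination_dimension({"destination.service": "httpbin.default.svc.cluster.local"}))
print(destination_dimension({}))  # unknown
```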

Once again, connect the two with a rule:

apiVersion: "config.istio.io/v1alpha2"
kind: rule
metadata:
  name: quota
spec:
  actions:
  - handler: handler.memquota
    instances: [ dest-quota.quota ]

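Client-side objects

The client side defines two things, how quota is charged and which services are limited, which correspond to the QuotaSpec and QuotaSpecBinding objects respectively.

Define a QuotaSpec that charges 5 units of dest-quota per request: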

apiVersion: "config.istio.io/v1alpha2"
kind: QuotaSpec
metadata:
  name: request-count
spec:
  rules:
  - quotas:
    - charge: 5
      quota: dest-quota

Define the QuotaSpecBinding object, which applies the spec to httpbin:

apiVersion: config.istio.io/v1alpha2
kind: QuotaSpecBinding
metadata:
  name: spec-sleep
spec:
  quotaSpecs:
  - name: request-count
    namespace: default
  services:
  - name: httpbin
    namespace: default
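Combining the Mixer-side quota (maxAmount: 20, validDuration: 10s) with the client-side charge of 5 per request gives 20 / 5 = 4 requests per 10-second window for traffic that matches no override. The Python sketch below is a simplified, hypothetical fixed-window model (the class `FixedWindowQuota` and its methods are invented here, not memquota's API). It also assumes, as a simplification, that an override applies only when every one of its dimension keys matches the instance's dimensions exactly.

```python
# Hypothetical fixed-window quota model; not memquota's implementation.
import time
from typing import Callable, Dict, List, Optional, Tuple

Override = Tuple[Dict[str, str], int, float]  # (dimensions, maxAmount, validDuration)


class FixedWindowQuota:
    def __init__(self, max_amount: int, valid_duration: float,
                 overrides: Optional[List[Override]] = None,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_amount = max_amount
        self.valid_duration = valid_duration
        self.overrides = overrides or []
        self.clock = clock
        self.windows: Dict[tuple, Tuple[float, int]] = {}  # dims -> (window start, used)

    def limits(self, dims: Dict[str, str]) -> Tuple[int, float]:
        for match, max_amount, duration in self.overrides:
            # an override applies only if all of its keys/values match the instance dims
            if all(dims.get(k) == v for k, v in match.items()):
                return max_amount, duration
        return self.max_amount, self.valid_duration

    def alloc(self, dims: Dict[str, str], charge: int) -> bool:
        max_amount, duration = self.limits(dims)
        key = tuple(sorted(dims.items()))
        now = self.clock()
        start, used = self.windows.get(key, (now, 0))
        if now - start >= duration:  # the window has expired: start a new one
            start, used = now, 0
        if used + charge > max_amount:
            self.windows[key] = (start, used)
            return False  # corresponds to RESOURCE_EXHAUSTED
        self.windows[key] = (start, used + charge)
        return True


t = [0.0]  # fake clock so the example is deterministic
q = FixedWindowQuota(20, 10.0, clock=lambda: t[0])
print([q.alloc({"destination": "httpbin"}, charge=5) for _ in range(5)])
# [True, True, True, True, False]
t[0] = 10.0
print(q.alloc({"destination": "httpbin"}, charge=5))  # True: a new window started

# An override keyed "destinations" never matches a dimension named "destination".
typo = FixedWindowQuota(20, 10.0, overrides=[({"destinations": "httpbin"}, 1, 5.0)])
print(typo.limits({"destination": "httpbin"}))  # (20, 10.0)
```

The model charges every request it sees; in the real setup, the QuotaSpecBinding decides which services are charged at all.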

Test the rate limit:

$ kubectl exec -it -c sleep $SOURCE_POD1 bash
bash-4.4# for i in `seq 10`; do http --body http://httpbin:8000/ip; done
{
    "origin": "127.0.0.1"
}
{
    "origin": "127.0.0.1"
}
{
    "origin": "127.0.0.1"
}
RESOURCE_EXHAUSTED:Quota is exhausted for: dest-quota
RESOURCE_EXHAUSTED:Quota is exhausted for: dest-quota
{
    "origin": "127.0.0.1"
}
{
    "origin": "127.0.0.1"
}
RESOURCE_EXHAUSTED:Quota is exhausted for: dest-quota
RESOURCE_EXHAUSTED:Quota is exhausted for: dest-quota
RESOURCE_EXHAUSTED:Quota is exhausted for: dest-quota
bash-4.4# for i in `seq 10`; do http --body http://flaskapp/env/version; done
v1
v1
v1
v1
v1
v1
v1
v1
v1
v1

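Requests to flaskapp are not limited at all, because the QuotaSpecBinding only binds httpbin. For httpbin, however, the limit looks much looser than the intended 1 per 5 seconds. A likely reason is that the override in the handler uses the dimension key destinations, while the quota instance defines a dimension named destination, so the override probably never matches and only the default quota applies (20 units per 10 seconds, i.e. 4 requests at a charge of 5).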

The RedisQuota adapter

This adapter stores its quota data in Redis; otherwise the workflow is the same as for MemQuota.

apiVersion: v1
kind: ReplicationController
metadata:
  name: redis
  labels:
    name: redis
spec:
  replicas: 1
  selector:
    name: redis
  template:
    metadata:
      labels:
        name: redis
    spec:
      containers:
      - name: redis
        image: redis
        ports:
        - containerPort: 6379
---
apiVersion: v1
kind: Service
metadata:
  name: redis
  labels:
    name: redis
spec:
  ports:
  - port: 6379
    targetPort: 6379
  selector:
    name: redis
---
apiVersion: "config.istio.io/v1alpha2"
kind: redisquota
metadata:
  name: handler
spec:
  redisServerUrl: "redis:6379"
  quotas:
  - name: dest-quota.quota.default
    maxAmount: 20
    bucketDuration: 1s
    validDuration: 10s
    rateLimitAlgorithm: ROLLING_WINDOW
    overrides:
    - dimensions:
        destinations: httpbin
      maxAmount: 1

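- redisServerUrl: the address of the Redis server.

- bucketDuration: the length of each bucket in the rolling window. It must be a duration greater than 0 and is only used by the ROLLING_WINDOW algorithm.

- rateLimitAlgorithm: each quota can specify its own rate-limiting algorithm (FIXED_WINDOW or ROLLING_WINDOW). MemQuota uses FIXED_WINDOW, and this cannot be changed.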
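A rolling window differs from a fixed window in that old usage expires bucket by bucket instead of all at once. The Python sketch below illustrates the idea with maxAmount 20, validDuration 10s and bucketDuration 1s. It is a hypothetical, in-memory model (the class `RollingWindowQuota` is invented), not redisquota's Redis-side implementation, and it only enforces the bucketDuration > 0 rule noted above.

```python
# Hypothetical rolling-window quota model; not redisquota's implementation.
from collections import deque
from typing import Deque, Tuple


class RollingWindowQuota:
    def __init__(self, max_amount: int, valid_duration: float, bucket_duration: float) -> None:
        if bucket_duration <= 0:
            raise ValueError("bucket_duration must be greater than 0")
        self.max_amount = max_amount
        self.valid_duration = valid_duration
        self.bucket_duration = bucket_duration
        self.buckets: Deque[Tuple[int, int]] = deque()  # (bucket index, amount used)

    def alloc(self, now: float, charge: int) -> bool:
        current = int(now // self.bucket_duration)
        oldest = current - int(self.valid_duration // self.bucket_duration) + 1
        # drop buckets that have slid out of the window
        while self.buckets and self.buckets[0][0] < oldest:
            self.buckets.popleft()
        used = sum(amount for _, amount in self.buckets)
        if used + charge > self.max_amount:
            return False
        if self.buckets and self.buckets[-1][0] == current:
            idx, amount = self.buckets[-1]
            self.buckets[-1] = (idx, amount + charge)
        else:
            self.buckets.append((current, charge))
        return True


q = RollingWindowQuota(max_amount=20, valid_duration=10.0, bucket_duration=1.0)
for ts in (0.0, 0.0, 5.0, 5.0):
    q.alloc(ts, 5)           # four requests at charge 5 fill the quota
print(q.alloc(9.9, 5))       # False: buckets 0 and 5 still hold 20
print(q.alloc(10.0, 5))      # True: bucket 0 slid out, only 10 in use
print(q.alloc(10.0, 5))      # True
print(q.alloc(10.0, 5))      # False: buckets 5 and 10 hold 20
```

At t = 10 s a fixed window would reset to zero and admit four requests at once; the rolling window still remembers the usage from t = 5 s and admits only two, which smooths out bursts at window boundaries.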

The instance object is the same as for memquota:

apiVersion: "config.istio.io/v1alpha2"
kind: quota
metadata:
  name: dest-quota
spec:
  dimensions:
    destination: destination.labels["app"] | destination.service | "unknown"

The rule that binds them:

apiVersion: "config.istio.io/v1alpha2"
kind: rule
metadata:
  name: quota
spec:
  actions:
  - handler: handler.redisquota
    instances: [ dest-quota.quota ]

The client side is also the same as for memquota:

apiVersion: "config.istio.io/v1alpha2"
kind: QuotaSpec
metadata:
  name: request-count
spec:
  rules:
  - quotas:
    - charge: 5
      quota: dest-quota
---
apiVersion: config.istio.io/v1alpha2
kind: QuotaSpecBinding
metadata:
  name: spec-sleep
spec:
  quotaSpecs:
  - name: request-count
    namespace: default
  services:
  - name: httpbin
    namespace: default

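Defining monitoring metrics for Prometheus

Prometheus metrics originate from the traffic information that sidecars report to Mixer on each call. A Rule object routes the corresponding metric instances to the Prometheus handler, which exposes them for Prometheus to scrape.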

$ kubectl get metrics.config.istio.io -n istio-system
NAME              AGE
requestcount      19d
requestduration   19d
requestsize       19d
responsesize      19d
tcpbytereceived  19d
tcpbytesent       19d

Each of these is a metric instance.

The corresponding adapter handler:

$ kubectl get prometheuses.config.istio.io -n istio-system -o yaml
apiVersion: v1
items:
- apiVersion: config.istio.io/v1alpha2
  kind: prometheus
  metadata:
    ...
    name: handler
    namespace: istio-system
    ...
  spec:
    metrics:
    - instance_name: requestcount.metric.istio-system
      kind: COUNTER
      label_names:
      - reporter
      - source_app
      - source_principal
      ...

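The handler defines a number of metrics.

The istio-system namespace contains two Prometheus-related rules, promhttp and promtcp, which connect the various metric instances to the Prometheus handler.

Custom metrics

By default, requestsize does not include the size of the headers. To monitor the full request size, define a new metric whose value field uses request.total_size. Start by exporting the existing requestsize metric:

$ kubectl get metrics.config.istio.io -n istio-system requestsize -o yaml > request.totalsize.yaml

In the exported file, change spec.value to request.total_size | 0 and metadata.name to requesttotalsize: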

apiVersion: config.istio.io/v1alpha2
kind: metric
metadata:
  name: requesttotalsize
  namespace: istio-system
spec:
  dimensions:
    connection_security_policy: conditional((context.reporter.kind | "inbound") ==
      "outbound", "unknown", conditional(connection.mtls | false, "mutual_tls", "none"))
    destination_app: destination.labels["app"] | "unknown"
    destination_principal: destination.principal | "unknown"
    destination_service: destination.service.host | "unknown"
    destination_service_name: destination.service.name | "unknown"
    destination_service_namespace: destination.service.namespace | "unknown"
    destination_version: destination.labels["version"] | "unknown"
    destination_workload: destination.workload.name | "unknown"
    destination_workload_namespace: destination.workload.namespace | "unknown"
    reporter: conditional((context.reporter.kind | "inbound") == "outbound", "source",
      "destination")
    request_protocol: api.protocol | context.protocol | "unknown"
    response_code: response.code | 200
    source_app: source.labels["app"] | "unknown"
    source_principal: source.principal | "unknown"
    source_version: source.labels["version"] | "unknown"
    source_workload: source.workload.name | "unknown"
    source_workload_namespace: source.workload.namespace | "unknown"
  monitored_resource_type: '"UNSPECIFIED"'
  value: request.total_size | 0

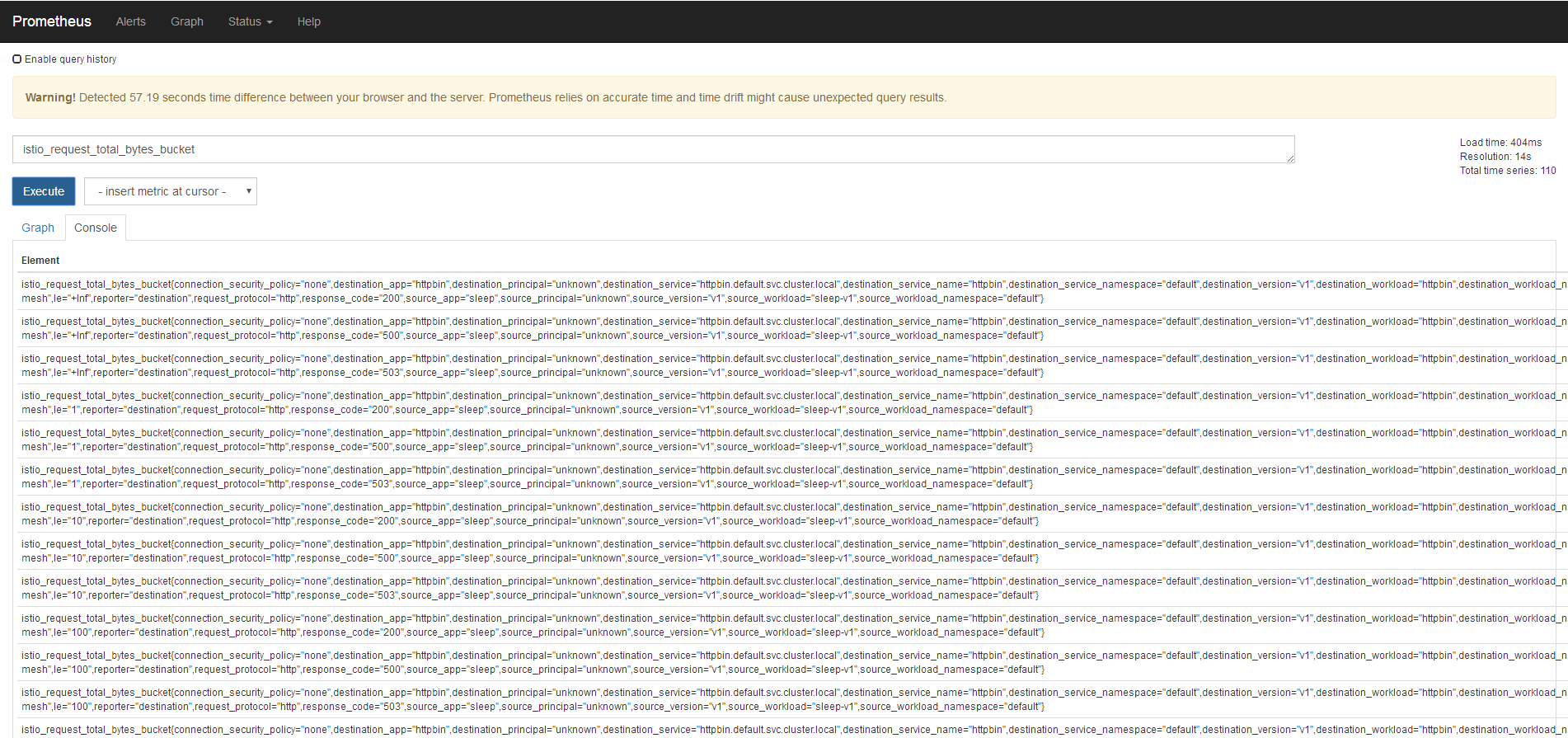

After applying it, list the metrics again:

$ kubectl get metrics.config.istio.io -n istio-system
NAME               AGE
requestcount       20d
requestduration    20d
requesttotalsize   13s
responsesize       20d
tcpbytereceived    20d
tcpbytesent        20d

Edit the Prometheus handler:

$ kubectl edit prometheuses.config.istio.io -n istio-system

... then add the following entry under spec.metrics:

- buckets:
    exponentialBuckets:
      growthFactor: 10
      numFiniteBuckets: 8
      scale: 1
  instance_name: requesttotalsize.metric.istio-system
  kind: DISTRIBUTION
  label_names:
  - reporter
  - source_app
  - source_principal
  - source_workload
  - source_workload_namespace
  - source_version
  - destination_app
  - destination_principal
  - destination_workload
  - destination_workload_namespace
  - destination_version
  - destination_service
  - destination_service_name
  - destination_service_namespace
  - request_protocol
  - response_code
  - connection_security_policy
  name: request_total_bytes
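The exponentialBuckets settings determine the histogram boundaries. The Python sketch below computes them, assuming the usual exponential-bucket definition in which boundaries are scale * growthFactor^i for i = 0..numFiniteBuckets (the function name `exponential_bounds` is hypothetical).

```python
# Bucket boundaries implied by the exponentialBuckets settings above, assuming
# boundaries = scale * growthFactor**i for i in 0..numFiniteBuckets.
from typing import List


def exponential_bounds(scale: float, growth_factor: float, num_finite_buckets: int) -> List[float]:
    if scale <= 0 or growth_factor <= 1 or num_finite_buckets <= 0:
        raise ValueError("need scale > 0, growth_factor > 1, num_finite_buckets > 0")
    return [scale * growth_factor ** i for i in range(num_finite_buckets + 1)]


bounds = exponential_bounds(scale=1, growth_factor=10, num_finite_buckets=8)
print(bounds)  # [1, 10, 100, 1000, 10000, 100000, 1000000, 10000000, 100000000]
```

Under that assumption, the nine boundaries define 8 finite buckets from 1 byte to 100 MB, plus an underflow and an overflow bucket; a 350-byte request, for instance, lands in the [100, 1000) bucket.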

Update the rule:

$ kubectl edit rules.config.istio.io -n istio-system promhttp
spec:
  actions:
  - handler: handler.prometheus
    instances:
    - requestcount.metric
    - requestduration.metric
    - requestsize.metric
    - responsesize.metric
    - requesttotalsize.metric

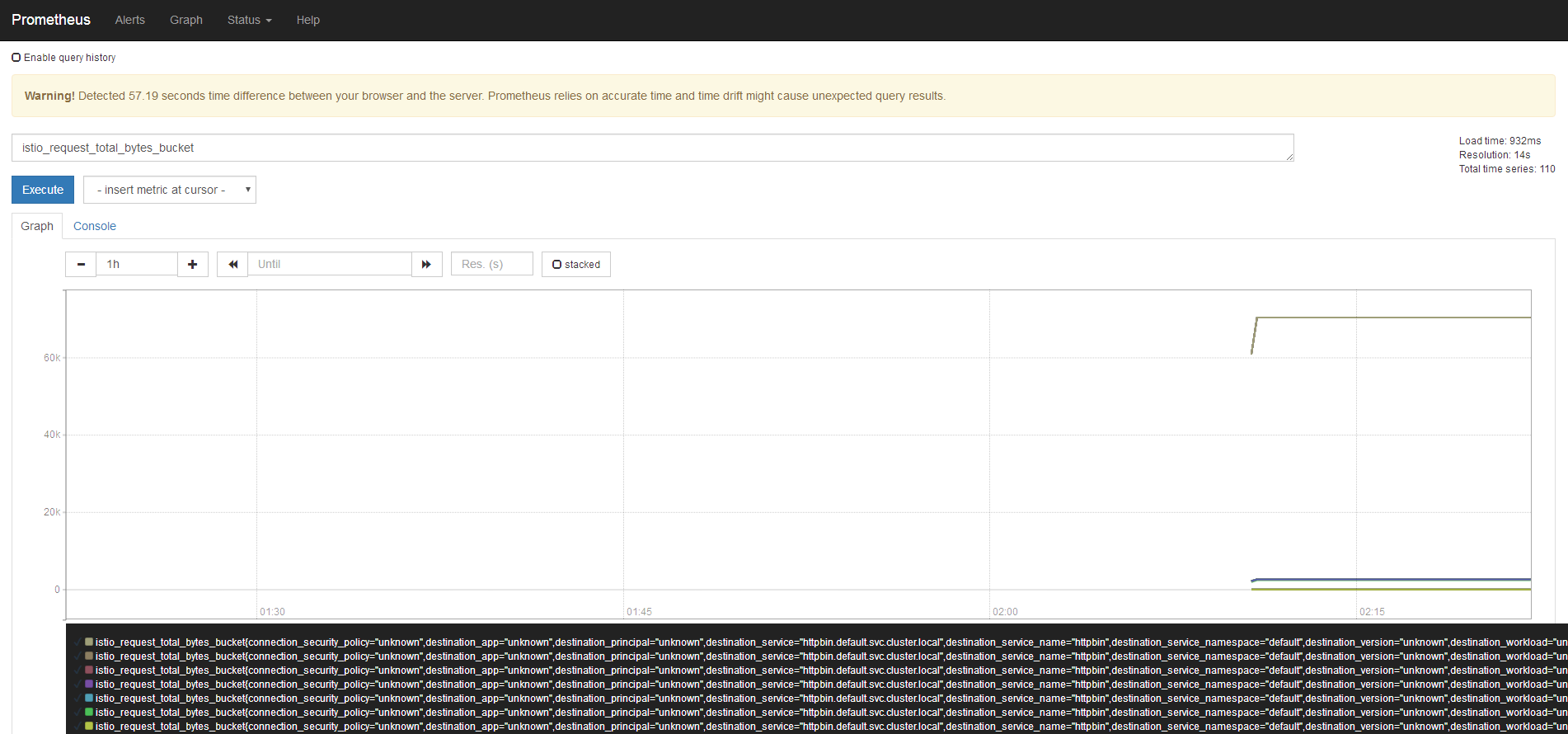

Test and verify:

$ kubectl exec -it -c sleep $SOURCE_POD1 bash
bash-4.4# wrk http://httpbin:8000/ip
Running 10s test @ http://httpbin:8000/ip
  2 threads and 10 connections
  Thread Stats   Avg      Stdev     Max   +/- Stdev
    Latency     2.00ms    2.78ms  30.25ms   88.48%
    Req/Sec     4.10k     1.18k    6.60k    64.00%
  81579 requests in 10.02s, 16.99MB read
  Non-2xx or 3xx responses: 78721
Requests/sec:   8139.38
Transfer/sec:      1.70MB

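The new metric can now be queried in Prometheus. With name: request_total_bytes in the handler and the istio_ prefix the adapter adds, it appears as istio_request_total_bytes (with _bucket, _sum and _count series, since it is a distribution).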

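Security hardening in Istio

Istio supports encrypting requests between services, which inevitably adds to the resources and time each request consumes, so I won't cover it here.

Recommendations for piloting Istio

A pilot has four phases: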

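- Scope definition: choose the services for the pilot, determine the affected scope, and decide which Istio features to adopt based on the state of the Istio project.

- Deployment: deploy Istio and the business workloads.

- Testing and verification: test the deployment results.

- Failover drill: practice switching to a stable environment when the business fails.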

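Traffic management is Istio's most mature feature set, yet its API version is still v1alpha3, and alpha-stage products make no backward-compatibility guarantees. Istio has also had several version rollbacks.

Mixer has a complex data model and funnels application traffic through a single point. Although caching was added to reduce latency, it remains a high-risk component.

Pilot's performance is also relatively poor.

The sidecar injection approach adds network latency to calls between services.

Ranked by cost-effectiveness: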

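- Service monitoring and tracing

- Routing management

- Rate limiting and blacklists/whitelists

- mTLS and RBAC

For the pilot, choose services that can tolerate downtime and are not latency-sensitive, and prepare fault-handling and recovery plans in advance.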