Kubernetes Monitoring

Contents:

Kubernetes Monitoring

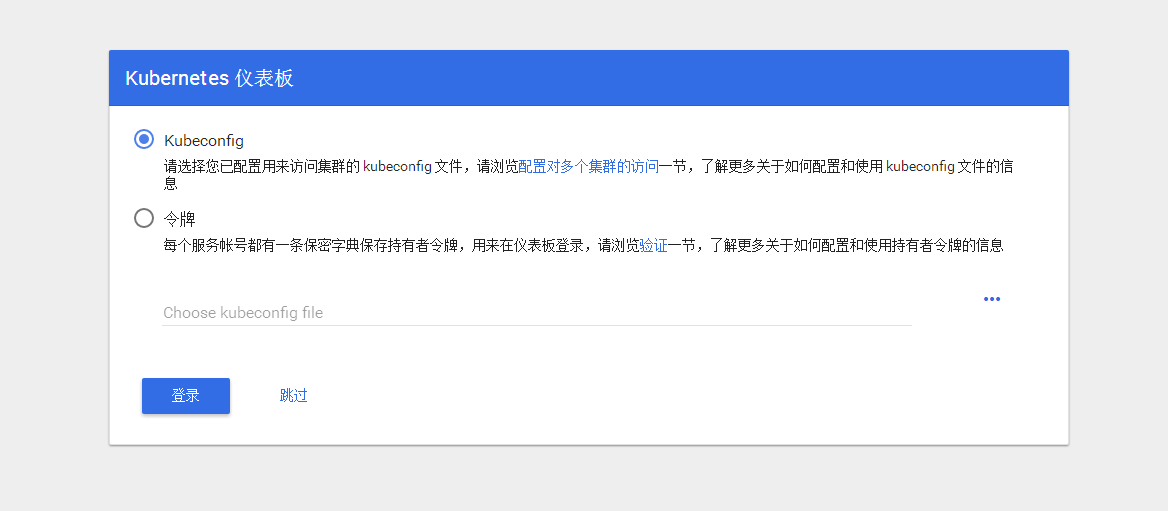

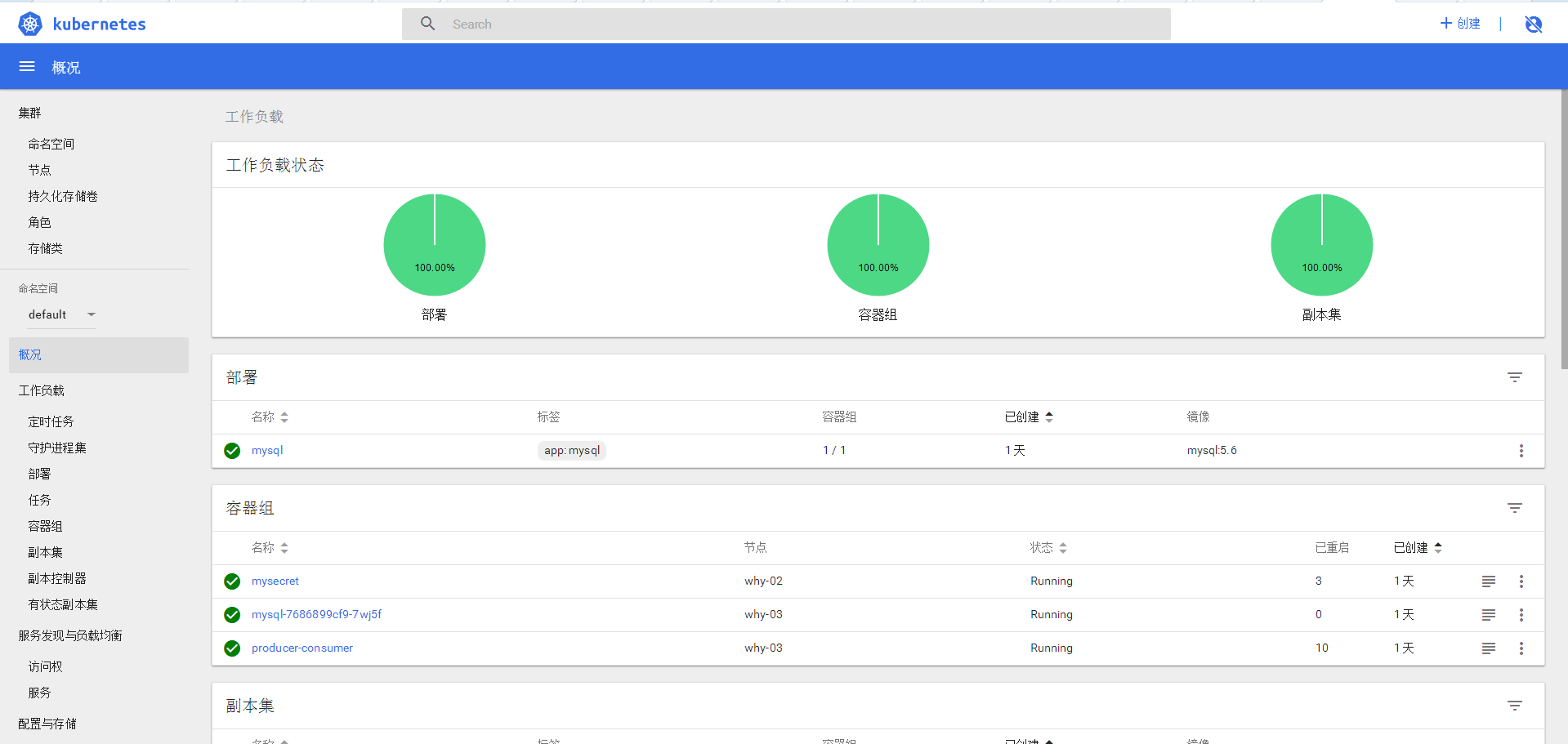

Kubernetes Dashboard

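Kubernetes also provides a web-based Dashboard. With Kubernetes Dashboard, users can deploy containerized applications, monitor application status, troubleshoot problems, and manage the various Kubernetes resources.

In Kubernetes Dashboard you can view the running state of the applications in the cluster and create or modify Kubernetes resources such as Deployment, Job, and DaemonSet. Users can scale a Deployment up or down, perform a rolling update, restart a Pod, or deploy a new application through a wizard. Dashboard also shows the status of the cluster's resources along with log information.

Kubernetes Dashboard covers most of what kubectl offers, so choose whichever fits your situation.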

$ kubectl create -f https://raw.githubusercontent.com/kubernetes/dashboard/master/src/deploy/recommended/kubernetes-dashboard.yaml

secret/kubernetes-dashboard-certs created

serviceaccount/kubernetes-dashboard created

role.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard-minimal created

deployment.apps/kubernetes-dashboard created

service/kubernetes-dashboard created

The Deployment and Service that were started can now be seen:

$ kubectl --namespace=kube-system get deployment kubernetes-dashboard

NAME READY UP-TO-DATE AVAILABLE AGE

kubernetes-dashboard 1/1 1 1 116s

$ kubectl --namespace=kube-system get service kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard ClusterIP 10.105.95.52 <none> 443/TCP 2m33s

Switch the Service to NodePort

$ kubectl --namespace=kube-system edit service kubernetes-dashboard

service/kubernetes-dashboard edited

In the editor, change type: ClusterIP to type: NodePort.
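For scripted setups, the same change can be made without opening an editor. The following is a minimal sketch using `kubectl patch`; the helper names `service_type_patch` and `set_service_type` are hypothetical and not part of the original walkthrough.

```shell
# Build the patch body that sets spec.type on a Service.
# (service_type_patch is a hypothetical helper name.)
service_type_patch() {
  printf '{"spec":{"type":"%s"}}' "$1"
}

# set_service_type NAMESPACE SERVICE TYPE
# Needs kubectl configured against a cluster, so it is only defined here.
set_service_type() {
  kubectl --namespace="$1" patch service "$2" -p "$(service_type_patch "$3")"
}
```

Calling `set_service_type kube-system kubernetes-dashboard NodePort` should have the same effect as the manual edit; the node port number itself is still assigned by Kubernetes.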

$ kubectl --namespace=kube-system get service kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.105.95.52 <none> 443:32429/TCP 8m19s

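Because of how the cloud host is set up, the Dashboard cannot be requested directly over HTTPS here; the browser reports NET::ERR_CERT_INVALID. As a workaround, start kubectl proxy: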

$ kubectl proxy --address=0.0.0.0 --disable-filter=true

W1211 19:18:53.833782 13376 proxy.go:140] Request filter disabled, your proxy is vulnerable to XSRF attacks, please be cautious

Starting to serve on [::]:8001

Then open http://119.28.223.250:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/ in a browser.
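The URL above follows the API server's service proxy path, `/api/v1/namespaces/<namespace>/services/<scheme>:<service>:<port>/proxy/`, with the port name left empty. A small sketch of how such a URL is assembled (the function name `service_proxy_url` is made up for illustration, and `127.0.0.1:8001` assumes the proxy is reached locally):

```shell
# service_proxy_url BASE NAMESPACE SCHEME SERVICE [PORT]
# BASE is the address kubectl proxy listens on, e.g. http://127.0.0.1:8001
service_proxy_url() {
  printf '%s/api/v1/namespaces/%s/services/%s:%s:%s/proxy/\n' \
    "$1" "$2" "$3" "$4" "${5:-}"
}

service_proxy_url http://127.0.0.1:8001 kube-system https kubernetes-dashboard
```

The same helper works for any Service behind the proxy by changing the namespace, scheme, and name.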

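The simplest approach is to skip authentication by granting the Dashboard's ServiceAccount the cluster-admin role. This gives anyone who can reach the Dashboard full control of the cluster, so use it only in a test environment.

dashboard-admin.yaml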

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

Apply the binding:

$ kubectl apply -f dashboard-admin.yaml

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
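Because this binding gives the Dashboard's ServiceAccount the `cluster-admin` role, it may be worth confirming what that account can now do. A hedged sketch using `kubectl auth can-i` with impersonation; the helper names are illustrative, and the check assumes your own user is allowed to impersonate service accounts.

```shell
# Kubernetes identifies a ServiceAccount as system:serviceaccount:<namespace>:<name>.
service_account_user() {
  printf 'system:serviceaccount:%s:%s' "$1" "$2"
}

# can_sa_do NAMESPACE NAME VERB RESOURCE
# Prints "yes" or "no"; needs kubectl access to a cluster, so it is only defined here.
can_sa_do() {
  kubectl auth can-i "$3" "$4" --as="$(service_account_user "$1" "$2")"
}
```

For example, `can_sa_do kube-system kubernetes-dashboard delete pods` would be expected to answer `yes` once the binding is in place.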

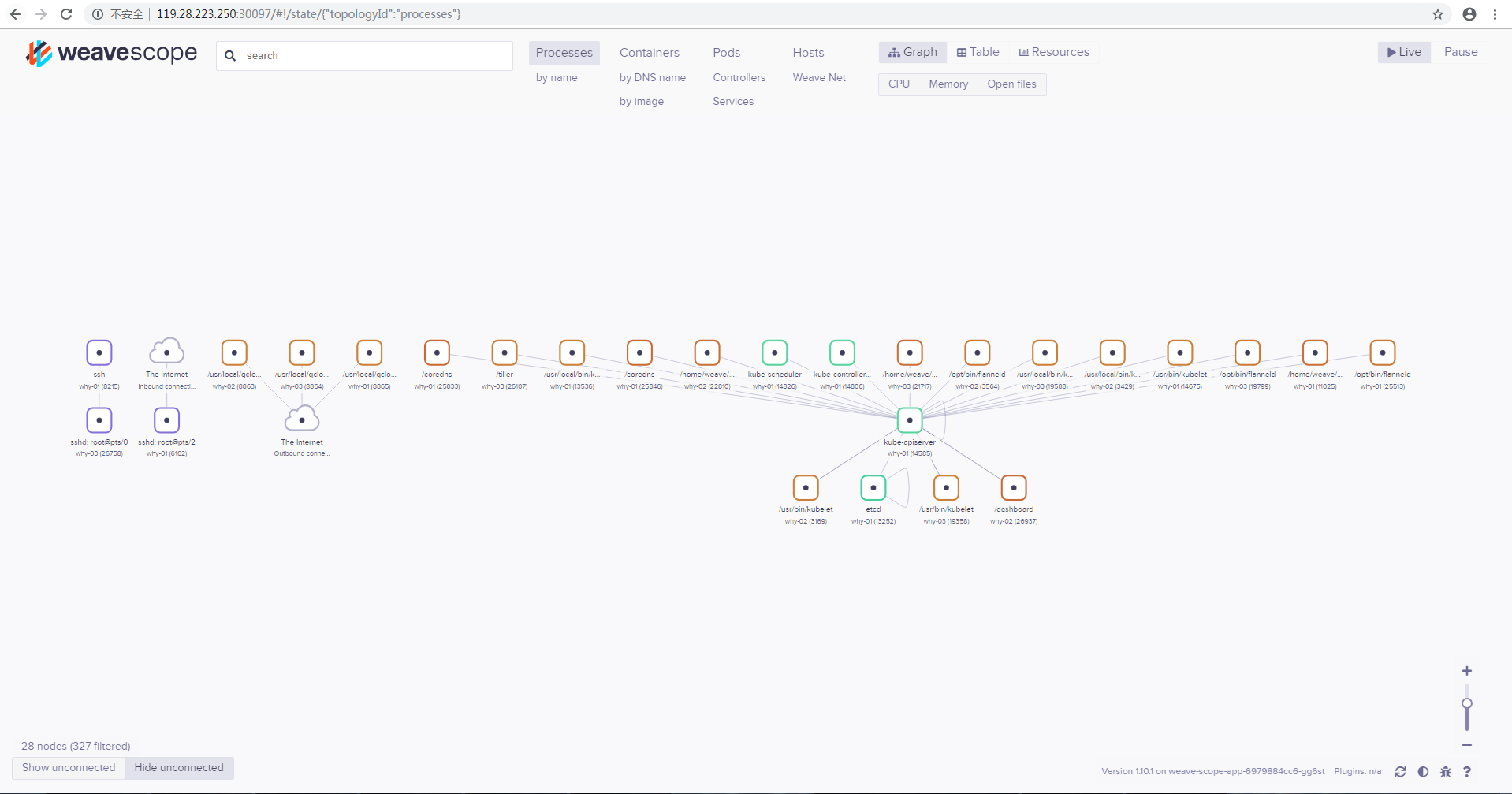

weave-scope

$ kubectl apply -f "https://cloud.weave.works/k8s/scope.yaml?k8s-version=$(kubectl version | base64 | tr -d '\n')"

namespace/weave created

serviceaccount/weave-scope created

clusterrole.rbac.authorization.k8s.io/weave-scope created

clusterrolebinding.rbac.authorization.k8s.io/weave-scope created

deployment.apps/weave-scope-app created

service/weave-scope-app created

daemonset.extensions/weave-scope-agent created
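The `k8s-version` query parameter in the command above is the output of `kubectl version`, base64-encoded with line breaks removed so that it stays on a single line. A minimal illustration of that encoding step on its own (`encode_for_query` is a hypothetical name, and a fixed string stands in for the real `kubectl version` output):

```shell
# Read text on stdin, base64-encode it, and strip the newlines that
# base64 inserts when it wraps long output.
encode_for_query() {
  base64 | tr -d '\n'
}

printf 'Client Version: example\n' | encode_for_query
```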

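- The DaemonSet weave-scope-agent runs on every node and collects data.

- The Deployment weave-scope-app is the Scope application. It pulls data from the agents and presents it through a Web UI that users interact with.

- The Service weave-scope-app is of type ClusterIP by default and can be changed to NodePort directly with kubectl edit.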

$ kubectl edit --namespace=weave service weave-scope-app

service/weave-scope-app edited

$ kubectl get --namespace=weave service weave-scope-app

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

weave-scope-app NodePort 10.97.111.171 <none> 80:30097/TCP 20m

Then access the corresponding NodePort directly (30097 in this example).
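In the `PORT(S)` column, `80:30097/TCP` means that service port 80 is exposed on node port 30097. A small sketch, with a hypothetical helper name, that pulls the node port out of such a value and builds the URL to open; `NODE_IP` is a placeholder documentation address, not a value from this setup.

```shell
# node_port_from_ports "80:30097/TCP" prints the node port part.
node_port_from_ports() {
  p=${1#*:}                  # drop the service port and the colon
  printf '%s\n' "${p%%/*}"   # drop the protocol suffix
}

NODE_IP=192.0.2.10   # placeholder address, replace with a real node IP
echo "http://${NODE_IP}:$(node_port_from_ports '80:30097/TCP')"
```

Against a live cluster, `kubectl get --namespace=weave service weave-scope-app -o jsonpath='{.spec.ports[0].nodePort}'` returns the same number without any text parsing.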

Heapster

Heapster is the Kubernetes-native solution, but the metrics it covers are not comprehensive, so it is only touched on briefly here.

$ git clone https://github.com/kubernetes/heapster.git

$ kubectl apply -f heapster/deploy/kube-config/influxdb/

$ kubectl apply -f heapster/deploy/kube-config/rbac/heapster-rbac.yaml

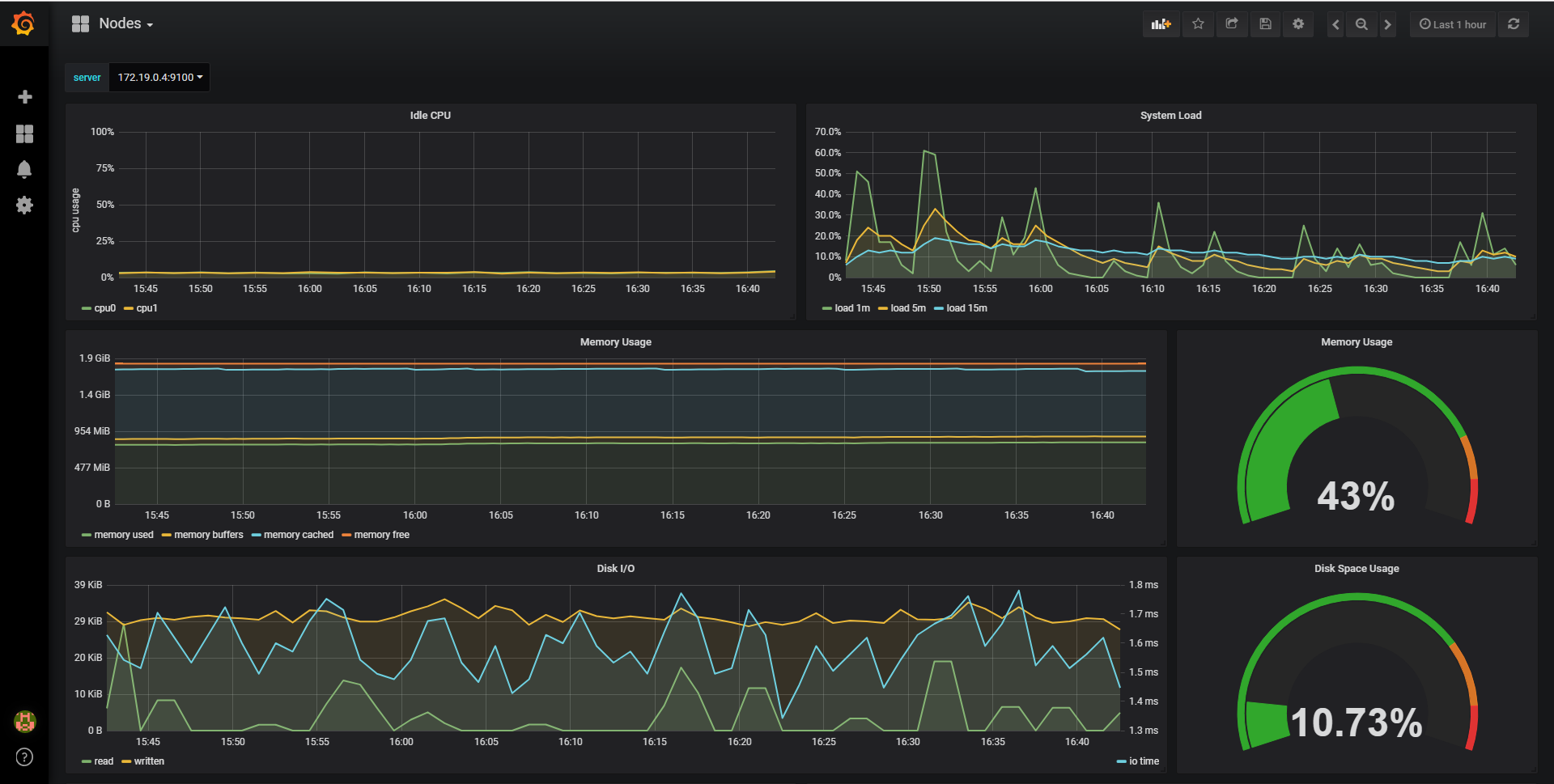

Prometheus Operator

Install Prometheus Operator

$ git clone https://github.com/coreos/prometheus-operator.git

$ cd prometheus-operator/

$ helm install --name prometheus-operator --set rbacEnable=true --namespace=monitoring helm/prometheus-operator

NAME: prometheus-operator

LAST DEPLOYED: Wed Dec 12 12:06:33 2018

NAMESPACE: monitoring

STATUS: DEPLOYED

RESOURCES:

==> v1/ServiceAccount

NAME SECRETS AGE

prometheus-operator 1 59s

==> v1beta1/ClusterRole

NAME AGE

prometheus-operator 59s

psp-prometheus-operator 59s

==> v1beta1/ClusterRoleBinding

NAME AGE

prometheus-operator 59s

psp-prometheus-operator 59s

==> v1beta1/Deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

prometheus-operator 1 1 1 1 59s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

prometheus-operator-867bbfddbd-rjcgn 1/1 Running 0 58s

==> v1beta1/PodSecurityPolicy

NAME PRIV CAPS SELINUX RUNASUSER FSGROUP SUPGROUP READONLYROOTFS VOLUMES

prometheus-operator false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

==> v1/ConfigMap

NAME DATA AGE

prometheus-operator 1 59s

NOTES:

The Prometheus Operator has been installed. Check its status by running:

kubectl --namespace monitoring get pods -l "app=prometheus-operator,release=prometheus-operator"

Visit https://github.com/coreos/prometheus-operator for instructions on how

to create & configure Alertmanager and Prometheus instances using the Operator.

$ kubectl get --namespace=monitoring deployment prometheus-operator

NAME READY UP-TO-DATE AVAILABLE AGE

prometheus-operator 1/1 1 1 5m19s
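The `READY` column shows ready replicas over desired replicas, so `1/1` means the operator is up. When scripting the installation, one might wait for that condition before installing the next chart. A sketch under that assumption (`is_fully_ready` and `wait_for_deployment` are hypothetical helpers; `kubectl rollout status` is the built-in way to block until a Deployment finishes rolling out):

```shell
# is_fully_ready "1/1" succeeds when ready == desired and desired > 0.
is_fully_ready() {
  ready=${1%%/*}
  desired=${1##*/}
  [ "$desired" -gt 0 ] && [ "$ready" -eq "$desired" ]
}

# wait_for_deployment NAMESPACE NAME: blocks until the rollout completes.
# Needs kubectl access to a cluster, so it is only defined here.
wait_for_deployment() {
  kubectl --namespace="$1" rollout status "deployment/$2"
}
```

For instance, `wait_for_deployment monitoring prometheus-operator` could be run between the chart installations.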

Install Prometheus

$ helm install --name prometheus --set serviceMonitorsSelector.app=prometheus --set ruleSelector.app=prometheus --namespace=monitoring helm/prometheus

NAME: prometheus

LAST DEPLOYED: Wed Dec 12 12:16:54 2018

NAMESPACE: monitoring

STATUS: DEPLOYED

RESOURCES:

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

prometheus ClusterIP 10.107.63.29 <none> 9090/TCP 1s

==> v1/Prometheus

NAME AGE

prometheus 1s

==> v1/PrometheusRule

NAME AGE

prometheus-rules 1s

==> v1/ServiceMonitor

NAME AGE

prometheus 1s

==> v1beta1/PodSecurityPolicy

NAME PRIV CAPS SELINUX RUNASUSER FSGROUP SUPGROUP READONLYROOTFS VOLUMES

prometheus false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

==> v1/ServiceAccount

NAME SECRETS AGE

prometheus 1 1s

==> v1beta1/ClusterRole

NAME AGE

prometheus 1s

psp-prometheus 1s

==> v1beta1/ClusterRoleBinding

NAME AGE

prometheus 1s

psp-prometheus 1s

NOTES:

A new Prometheus instance has been created.

DEPRECATION NOTICE:

- additionalRulesConfigMapLabels is not used anymore, use additionalRulesLabels

Install Alertmanager

$ helm install --name alertmanager --namespace=monitoring helm/alertmanager

NAME: alertmanager

LAST DEPLOYED: Wed Dec 12 12:16:55 2018

NAMESPACE: monitoring

STATUS: DEPLOYED

RESOURCES:

==> v1beta1/ClusterRoleBinding

NAME AGE

psp-alertmanager 0s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager ClusterIP 10.111.75.208 <none> 9093/TCP 0s

==> v1/Alertmanager

NAME AGE

alertmanager 0s

==> v1/PrometheusRule

NAME AGE

alertmanager 0s

==> v1/ServiceMonitor

NAME AGE

alertmanager 0s

==> v1beta1/PodSecurityPolicy

NAME PRIV CAPS SELINUX RUNASUSER FSGROUP SUPGROUP READONLYROOTFS VOLUMES

alertmanager false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

==> v1/Secret

NAME TYPE DATA AGE

alertmanager-alertmanager Opaque 1 0s

==> v1beta1/ClusterRole

NAME AGE

psp-alertmanager 0s

NOTES:

A new Alertmanager instance has been created.

DEPRECATION NOTICE:

- additionalRulesConfigMapLabels is not used anymore, use additionalRulesLabels

Install Grafana

$ helm install --name grafana --namespace=monitoring helm/grafana

NAME: grafana

LAST DEPLOYED: Wed Dec 12 12:17:00 2018

NAMESPACE: monitoring

STATUS: DEPLOYED

RESOURCES:

==> v1beta1/ClusterRole

NAME AGE

psp-grafana-grafana 0s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana-grafana ClusterIP 10.98.127.236 <none> 80/TCP 0s

==> v1beta1/PodSecurityPolicy

NAME PRIV CAPS SELINUX RUNASUSER FSGROUP SUPGROUP READONLYROOTFS VOLUMES

grafana-grafana false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim,hostPath

==> v1/Secret

NAME TYPE DATA AGE

grafana-grafana Opaque 2 0s

==> v1/ConfigMap

NAME DATA AGE

grafana-grafana 10 0s

==> v1/ServiceMonitor

NAME AGE

grafana 0s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

grafana-grafana-6d848c8db8-nwldw 0/2 ContainerCreating 0 0s

==> v1/ServiceAccount

NAME SECRETS AGE

grafana-grafana 1 0s

==> v1beta1/ClusterRoleBinding

NAME AGE

psp-grafana-grafana 0s

==> v1beta1/Deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

grafana-grafana 1 1 1 0 0s

NOTES:

1. Get your 'admin' user password by running:

kubectl get secret --namespace monitoring grafana -o jsonpath="{.data.password}" | base64 --decode ; echo

2. The Grafana server can be accessed via port 80 on the following DNS name from within your cluster:

grafana.monitoring.svc.cluster.local

Get the Grafana URL to visit by running these commands in the same shell:

export POD_NAME=$(kubectl get pods --namespace monitoring -l "app=grafana" -o jsonpath="{.items[0].metadata.name}")

kubectl --namespace monitoring port-forward $POD_NAME 3000

3. Login with the password from step 1 and the username: admin

#################################################################################

###### WARNING: Persistence is disabled!!! You will lose your data when #####

###### the Grafana pod is terminated. #####

#################################################################################
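Values in a Kubernetes Secret are stored base64-encoded, which is why the notes pipe the result through `base64 --decode`. The resource list above shows the Secret as `grafana-grafana`, so the name used in the generated notes may need adjusting. A sketch of the decoding step alone, using a made-up encoded value rather than a real password (`decode_secret_value` is a hypothetical name):

```shell
# Decode one base64-encoded Secret field read from stdin.
decode_secret_value() {
  base64 --decode
}

# "YWRtaW4=" is the base64 form of the string "admin" (example data only).
printf 'YWRtaW4=' | decode_secret_value; echo
```

Against the cluster, the helper would be fed from `kubectl get secret --namespace monitoring grafana-grafana -o jsonpath="{.data.password}"`, assuming the key is named `password` as the notes state.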

Install kube-prometheus

kube-prometheus is a Helm chart that bundles all the Exporters and ServiceMonitors needed to monitor Kubernetes.

The helm/README.md file in the repository explains how to download it.

$ helm repo add coreos https://s3-eu-west-1.amazonaws.com/coreos-charts/stable/

$ helm install coreos/kube-prometheus --name kube-prometheus --namespace=monitoring

NAME: kube-prometheus

LAST DEPLOYED: Tue Dec 25 18:06:28 2018

NAMESPACE: monitoring

STATUS: DEPLOYED

RESOURCES:

==> v1beta1/PodSecurityPolicy

NAME PRIV CAPS SELINUX RUNASUSER FSGROUP SUPGROUP READONLYROOTFS VOLUMES

kube-prometheus-alertmanager false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

kube-prometheus-exporter-kube-state false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

kube-prometheus-exporter-node false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim,hostPath

kube-prometheus-grafana false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim,hostPath

kube-prometheus false RunAsAny RunAsAny MustRunAs MustRunAs false configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim

==> v1/ServiceAccount

NAME SECRETS AGE

kube-prometheus-exporter-kube-state 1 1s

kube-prometheus-exporter-node 1 1s

kube-prometheus-grafana 1 1s

kube-prometheus 1 1s

==> v1beta1/ClusterRoleBinding

NAME AGE

psp-kube-prometheus-alertmanager 1s

kube-prometheus-exporter-kube-state 1s

psp-kube-prometheus-exporter-kube-state 1s

psp-kube-prometheus-exporter-node 1s

psp-kube-prometheus-grafana 1s

kube-prometheus 1s

psp-kube-prometheus 1s

==> v1beta1/Role

NAME AGE

kube-prometheus-exporter-kube-state 1s

==> v1beta1/DaemonSet

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-prometheus-exporter-node 3 3 0 3 0 <none> 1s

==> v1/ServiceMonitor

NAME AGE

kube-prometheus-alertmanager 0s

kube-prometheus-exporter-kube-controller-manager 0s

kube-prometheus-exporter-kube-dns 0s

kube-prometheus-exporter-kube-etcd 0s

kube-prometheus-exporter-kube-scheduler 0s

kube-prometheus-exporter-kube-state 0s

kube-prometheus-exporter-kubelets 0s

kube-prometheus-exporter-kubernetes 0s

kube-prometheus-exporter-node 0s

kube-prometheus-grafana 0s

kube-prometheus 0s

==> v1/Secret

NAME TYPE DATA AGE

alertmanager-kube-prometheus Opaque 1 1s

kube-prometheus-grafana Opaque 2 1s

==> v1beta1/ClusterRole

NAME AGE

psp-kube-prometheus-alertmanager 1s

kube-prometheus-exporter-kube-state 1s

psp-kube-prometheus-exporter-kube-state 1s

psp-kube-prometheus-exporter-node 1s

psp-kube-prometheus-grafana 1s

kube-prometheus 1s

psp-kube-prometheus 1s

==> v1beta1/RoleBinding

NAME AGE

kube-prometheus-exporter-kube-state 1s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-prometheus-alertmanager ClusterIP 10.104.41.1 <none> 9093/TCP 1s

kube-prometheus-exporter-kube-controller-manager ClusterIP None <none> 10252/TCP 1s

kube-prometheus-exporter-kube-dns ClusterIP None <none> 10054/TCP,10055/TCP 1s

kube-prometheus-exporter-kube-etcd ClusterIP None <none> 4001/TCP 1s

kube-prometheus-exporter-kube-scheduler ClusterIP None <none> 10251/TCP 1s

kube-prometheus-exporter-kube-state ClusterIP 10.106.212.179 <none> 80/TCP 1s

kube-prometheus-exporter-node ClusterIP 10.97.180.154 <none> 9100/TCP 1s

kube-prometheus-grafana ClusterIP 10.99.217.58 <none> 80/TCP 1s

kube-prometheus ClusterIP 10.99.118.188 <none> 9090/TCP 1s

==> v1/ConfigMap

NAME DATA AGE

kube-prometheus-grafana 10 1s

==> v1beta1/Deployment

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

kube-prometheus-exporter-kube-state 1 1 1 0 1s

kube-prometheus-grafana 1 1 1 0 1s

==> v1/PrometheusRule

NAME AGE

kube-prometheus-alertmanager 1s

kube-prometheus-exporter-kube-controller-manager 1s

kube-prometheus-exporter-kube-etcd 1s

kube-prometheus-exporter-kube-scheduler 1s

kube-prometheus-exporter-kube-state 1s

kube-prometheus-exporter-kubelets 0s

kube-prometheus-exporter-kubernetes 0s

kube-prometheus-exporter-node 0s

kube-prometheus-rules 0s

kube-prometheus 0s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

kube-prometheus-exporter-node-8zk2s 0/1 ContainerCreating 0 1s

kube-prometheus-exporter-node-nd78m 0/1 ContainerCreating 0 1s

kube-prometheus-exporter-node-w9x2d 0/1 ContainerCreating 0 1s

kube-prometheus-exporter-kube-state-7bb8cf75d9-r9b7t 0/2 ContainerCreating 0 1s

kube-prometheus-grafana-6f4bb75c95-5tq4k 0/2 ContainerCreating 0 1s

==> v1/Alertmanager

NAME AGE

kube-prometheus 1s

==> v1/Prometheus

NAME AGE

kube-prometheus 1s

NOTES:

DEPRECATION NOTICE:

- alertmanager.ingress.fqdn is not used anymore, use alertmanager.ingress.hosts []

- prometheus.ingress.fqdn is not used anymore, use prometheus.ingress.hosts []

- grafana.ingress.fqdn is not used anymore, use prometheus.grafana.hosts []

- additionalRulesConfigMapLabels is not used anymore, use additionalRulesLabels

- prometheus.additionalRulesConfigMapLabels is not used anymore, use additionalRulesLabels

- alertmanager.additionalRulesConfigMapLabels is not used anymore, use additionalRulesLabels

- exporter-kube-controller-manager.additionalRulesConfigMapLabels is not used anymore, use additionalRulesLabels

- exporter-kube-etcd.additionalRulesConfigMapLabels is not used anymore, use additionalRulesLabels

- exporter-kube-scheduler.additionalRulesConfigMapLabels is not used anymore, use additionalRulesLabels

- exporter-kubelets.additionalRulesConfigMapLabels is not used anymore, use additionalRulesLabels

- exporter-kubernetes.additionalRulesConfigMapLabels is not used anymore, use additionalRulesLabels

If an installation fails, the release can be removed as follows:

helm list --all

helm delete --purge <app-name>
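When several releases have failed, typing each name by hand gets tedious. A sketch that turns a list of release names into the corresponding delete commands and prints them for review instead of running them (`purge_commands` is a hypothetical helper; with Helm 2 the names could come from `helm list --failed --short`):

```shell
# Read release names (one per line) on stdin and print the matching
# "helm delete --purge" command for each; nothing is executed.
purge_commands() {
  while IFS= read -r name; do
    [ -n "$name" ] && printf 'helm delete --purge %s\n' "$name"
  done
  return 0
}

printf 'prometheus\nalertmanager\n' | purge_commands
```

Printing the commands first leaves a chance to check the list before anything is deleted.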

Alerting has not been configured for now because of some changes. For reference:

$ kubectl apply --namespace=monitoring -f contrib/kube-prometheus/manifests/grafana-dashboardDatasources.yaml

secret/grafana-datasources created

$ kubectl apply --namespace=monitoring -f contrib/kube-prometheus/manifests/grafana-dashboardSources.yaml

configmap/grafana-dashboards created

$ kubectl apply --namespace=monitoring -f contrib/kube-prometheus/manifests/grafana-dashboardDefinitions.yaml

configmap/grafana-dashboard-k8s-cluster-rsrc-use created

configmap/grafana-dashboard-k8s-node-rsrc-use created

configmap/grafana-dashboard-k8s-resources-cluster created

configmap/grafana-dashboard-k8s-resources-namespace created

configmap/grafana-dashboard-k8s-resources-pod created

configmap/grafana-dashboard-nodes created

configmap/grafana-dashboard-pods created

configmap/grafana-dashboard-statefulset created

Finally, change the URL in the Grafana data source configuration to http://kube-prometheus.monitoring:9090.
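This URL works because a Service is reachable inside the cluster through DNS as `<service>.<namespace>`, or fully qualified as `<service>.<namespace>.svc.cluster.local` when the cluster uses the default `cluster.local` domain. A small sketch that builds both forms (the function name `service_url` is illustrative):

```shell
# service_url SERVICE NAMESPACE PORT [DOMAIN]
# With DOMAIN given, the fully qualified name is produced.
service_url() {
  if [ -n "${4:-}" ]; then
    printf 'http://%s.%s.svc.%s:%s\n' "$1" "$2" "$4" "$3"
  else
    printf 'http://%s.%s:%s\n' "$1" "$2" "$3"
  fi
}

service_url kube-prometheus monitoring 9090
service_url kube-prometheus monitoring 9090 cluster.local
```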

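- Weave Scope shows a complete view of the cluster and its applications. Its strong interactivity makes real-time monitoring and troubleshooting of containerized applications easy.

- Heapster is the Kubernetes-native cluster monitoring solution. Its predefined dashboards monitor Kubernetes at two levels: Cluster and Pods.

- Prometheus Operator is probably the most full-featured open-source monitoring solution for Kubernetes at present. Besides Nodes and Pods, it also monitors the cluster's management components, such as the API Server, Scheduler, and Controller Manager.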