Kubernetes cluster


Cluster example

There are two ways to create the cluster: create the pods first and then the services, or do it the other way round.

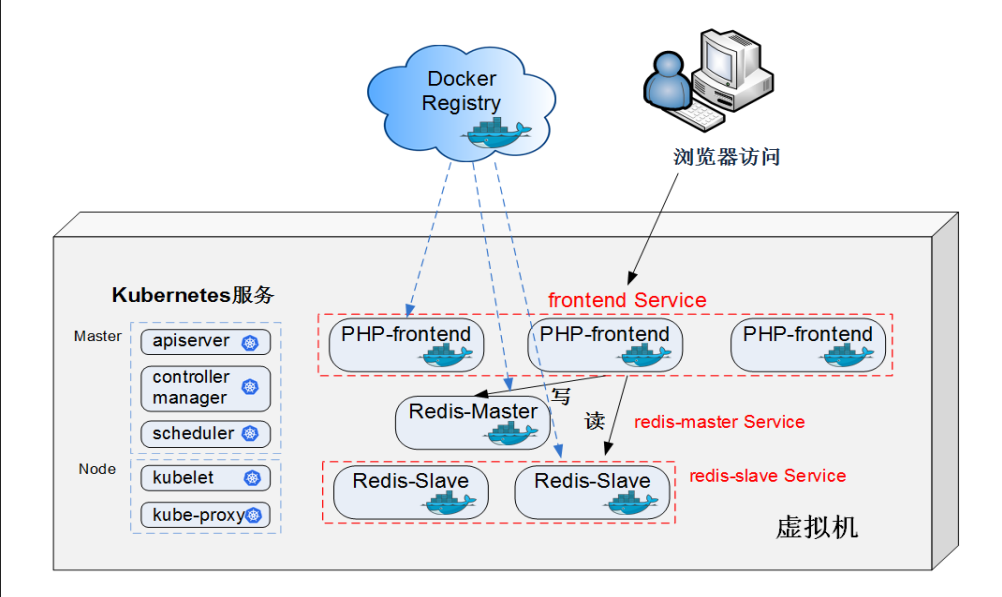

Example architecture diagram

There are three php-frontend instances, one redis-master and two redis-slave instances. The two redis-slave instances replicate data from redis-master; php-frontend writes to the master and reads from the slaves.

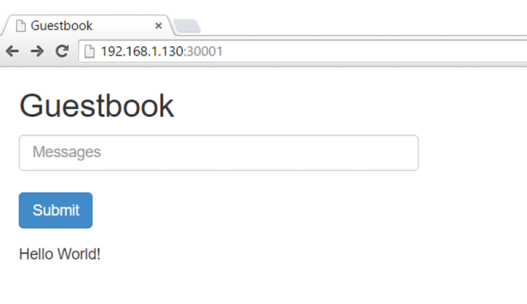

This is what the finished installation looks like.

The diagram uses a Docker registry; here I simply pull images from the default public registry (docker.io).

Creating the cluster

Create the redis-master pod

[root@why-01 ~]# vi /etc/k8s_yaml/redis-master-controller.yaml

[root@why-01 ~]# cat /etc/k8s_yaml/redis-master-controller.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: redis-master
  labels:
    name: redis-master
spec:
  replicas: 1
  selector:
    name: redis-master
  template:
    metadata:
      labels:
        name: redis-master
    spec:
      containers:
      - name: master
        image: kubeguide/redis-master
        ports:
        - containerPort: 6379

[root@why-01 ~]# kubectl create -f /etc/k8s_yaml/redis-master-controller.yaml

replicationcontroller "redis-master" created

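I do not know every configuration option either; here is an explanation of the fields I do know:

- kind: the type of resource to create.

- metadata: metadata about the resource.

- metadata.name: the name of the resource (here, the ReplicationController).

- metadata.labels: the labels attached to the resource.

- spec: the specification, that is, the desired state of the resource.

- spec.replicas: the number of replicas, that is, how many pods are kept running from the template.

- spec.selector: the label selector that determines which pods the ReplicationController manages.

- spec.template: the template used to create the pods.

- spec.template.metadata: metadata for the pods created from the template.

- spec.template.metadata.labels: the labels put on those pods; they must match spec.selector.

- spec.template.spec: the pod specification.

- spec.template.spec.containers: the list of containers in the pod.

- spec.template.spec.containers.name: the name of the container.

- spec.template.spec.containers.image: the image the container runs.

- spec.template.spec.containers.ports: the ports the container uses.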
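The relationship between spec.selector and the pod labels can be sketched in a few lines of Python. This is only an illustration of equality-based label matching, not Kubernetes code: the function name `selector_matches` is made up for the example, and the empty label set for the `nginx` pod is an assumption, since its labels are not shown in this article.

```python
def selector_matches(selector: dict, labels: dict) -> bool:
    """Return True when every key/value pair of the selector appears in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Selector taken from redis-master-controller.yaml.
rc_selector = {"name": "redis-master"}

# Pod labels: the first two come from the manifests in this article,
# the labels of "nginx" are unknown and assumed to be empty here.
pods = {
    "redis-master-7jzcq": {"name": "redis-master"},
    "redis-slave-70cv0": {"name": "redis-slave"},
    "nginx": {},
}

# Pods that the redis-master ReplicationController would count as its own.
managed = [pod for pod, labels in pods.items() if selector_matches(rc_selector, labels)]
print(managed)
```

The same matching rule is what ties a Service to its pods, which is why the Service manifests further down repeat the `name: ...` selector.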

Check how creation of the redis-master pod is progressing

[root@why-01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 3h

redis-master-7jzcq 0/1 ContainerCreating 0 2m

[root@why-01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 3h

redis-master-7jzcq 0/1 ContainerCreating 0 4m

[root@why-01 ~]# kubectl describe pod redis-master

Name: redis-master-7jzcq

Namespace: default

Node: why-02/10.181.13.57

Start Time: Wed, 18 Oct 2017 23:04:28 +0800

Labels: name=redis-master

Status: Pending

IP:

Controllers: ReplicationController/redis-master

Containers:

master:

Container ID:

Image: kubeguide/redis-master

Image ID:

Port: 6379/TCP

State: Waiting

Reason: ContainerCreating

Ready: False

Restart Count: 0

Volume Mounts: <none>

Environment Variables: <none>

Conditions:

Type Status

Initialized True

Ready False

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

7m 7m 1 {kubelet why-02} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

7m 7m 1 {default-scheduler } Normal Scheduled Successfully assigned redis-master-7jzcq to why-02

7m 7m 1 {kubelet why-02} spec.containers{master} Normal Pulling pulling image "kubeguide/redis-master"

[root@why-01 ~]# kubectl describe pod redis-master

Name: redis-master-7jzcq

Namespace: default

Node: why-02/10.181.13.57

Start Time: Wed, 18 Oct 2017 23:04:28 +0800

Labels: name=redis-master

Status: Running

IP: 172.17.0.3

Controllers: ReplicationController/redis-master

Containers:

master:

Container ID: docker://a53735b8ce6477213582fe674282947f1328ed6effe2880222fcf4b82c63d121

Image: kubeguide/redis-master

Image ID: docker-pullable://docker.io/kubeguide/redis-master@sha256:e11eae36476b02a195693689f88a325b30540f5c15adbf531caaecceb65f5b4d

Port: 6379/TCP

State: Running

Started: Wed, 18 Oct 2017 23:17:39 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables: <none>

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

19m 19m 1 {default-scheduler } Normal Scheduled Successfully assigned redis-master-7jzcq to why-02

19m 19m 1 {kubelet why-02} spec.containers{master} Normal Pulling pulling image "kubeguide/redis-master"

19m 6m 2 {kubelet why-02} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

6m 6m 1 {kubelet why-02} spec.containers{master} Normal Pulled Successfully pulled image "kubeguide/redis-master"

6m 6m 1 {kubelet why-02} spec.containers{master} Normal Created Created container with docker id a53735b8ce64; Security:[seccomp=unconfined]

6m 6m 1 {kubelet why-02} spec.containers{master} Normal Started Started container with docker id a53735b8ce64

Create the redis-master service

[root@why-01 ~]# vi /etc/k8s_yaml/redis-master-service.yaml

[root@why-01 ~]# cat /etc/k8s_yaml/redis-master-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: redis-master
  labels:
    name: redis-master
spec:
  ports:
  - port: 6379
    targetPort: 6379
  selector:
    name: redis-master

[root@why-01 ~]# kubectl create -f /etc/k8s_yaml/redis-master-service.yaml

service "redis-master" created

[root@why-01 ~]# kubectl get service

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 11h

redis-master 10.254.133.172 <none> 6379/TCP 25s

Create the redis-slave pods

[root@why-01 ~]# vi /etc/k8s_yaml/redis-slave-controller.yaml

[root@why-01 ~]# cat /etc/k8s_yaml/redis-slave-controller.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: redis-slave
  labels:
    name: redis-slave
spec:
  replicas: 2
  selector:
    name: redis-slave
  template:
    metadata:
      labels:
        name: redis-slave
    spec:
      containers:
      - name: slave
        image: kubeguide/guestbook-redis-slave
        env:
        - name: GET_HOSTS_FROM
          value: env
        ports:
        - containerPort: 6379

[root@why-01 ~]# kubectl create -f /etc/k8s_yaml/redis-slave-controller.yaml

replicationcontroller "redis-slave" created

[root@why-01 ~]# kubectl get rc redis-slave

NAME DESIRED CURRENT READY AGE

redis-slave 2 2 1 29s

[root@why-01 ~]# kubectl get rc

NAME DESIRED CURRENT READY AGE

redis-master 1 1 1 55m

redis-slave 2 2 2 1m

[root@why-01 ~]# kubectl describe pod redis-slave

Name: redis-slave-70cv0

Namespace: default

Node: why-03/10.181.5.146

Start Time: Wed, 18 Oct 2017 23:58:15 +0800

Labels: name=redis-slave

Status: Running

IP: 172.17.0.3

Controllers: ReplicationController/redis-slave

Containers:

slave:

Container ID: docker://6c942e568142ed0f7d2945423caa2f1f17b5400227a85477b5d84c4161805f2d

Image: kubeguide/guestbook-redis-slave

Image ID: docker-pullable://docker.io/kubeguide/guestbook-redis-slave@sha256:a36fec97659fe96b5b28750d88b5cfb84a45138bcf1397c8e237031b8855c58c

Port: 6379/TCP

State: Running

Started: Wed, 18 Oct 2017 23:58:40 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables:

GET_HOSTS_FROM: env

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

1m 1m 1 {default-scheduler } Normal Scheduled Successfully assigned redis-slave-70cv0 to why-03

1m 1m 1 {kubelet why-03} spec.containers{slave} Normal Pulling pulling image "kubeguide/guestbook-redis-slave"

1m 1m 2 {kubelet why-03} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

1m 1m 1 {kubelet why-03} spec.containers{slave} Normal Pulled Successfully pulled image "kubeguide/guestbook-redis-slave"

1m 1m 1 {kubelet why-03} spec.containers{slave} Normal Created Created container with docker id 6c942e568142; Security:[seccomp=unconfined]

1m 1m 1 {kubelet why-03} spec.containers{slave} Normal Started Started container with docker id 6c942e568142

Name: redis-slave-tbmlh

Namespace: default

Node: why-02/10.181.13.57

Start Time: Wed, 18 Oct 2017 23:58:15 +0800

Labels: name=redis-slave

Status: Running

IP: 172.17.0.4

Controllers: ReplicationController/redis-slave

Containers:

slave:

Container ID: docker://c2d2540e35e23c1deb56efd97d83d02665b1c8e706cf0e70be7ccdfd385f6633

Image: kubeguide/guestbook-redis-slave

Image ID: docker-pullable://docker.io/kubeguide/guestbook-redis-slave@sha256:a36fec97659fe96b5b28750d88b5cfb84a45138bcf1397c8e237031b8855c58c

Port: 6379/TCP

State: Running

Started: Wed, 18 Oct 2017 23:58:49 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables:

GET_HOSTS_FROM: env

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

1m 1m 1 {default-scheduler } Normal Scheduled Successfully assigned redis-slave-tbmlh to why-02

1m 1m 1 {kubelet why-02} spec.containers{slave} Normal Pulling pulling image "kubeguide/guestbook-redis-slave"

1m 1m 2 {kubelet why-02} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

1m 1m 1 {kubelet why-02} spec.containers{slave} Normal Pulled Successfully pulled image "kubeguide/guestbook-redis-slave"

1m 1m 1 {kubelet why-02} spec.containers{slave} Normal Created Created container with docker id c2d2540e35e2; Security:[seccomp=unconfined]

1m 1m 1 {kubelet why-02} spec.containers{slave} Normal Started Started container with docker id c2d2540e35e2

Create the redis-slave service

[root@why-01 ~]# kubectl create -f /etc/k8s_yaml/redis-slave-service.yaml

service "redis-slave" created

[root@why-01 ~]# kubectl get service

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.254.0.1 <none> 443/TCP 11h

redis-master 10.254.133.172 <none> 6379/TCP 28m

redis-slave 10.254.135.38 <none> 6379/TCP 12s

Create the frontend pods

[root@why-01 ~]# vi /etc/k8s_yaml/frontend-controller.yaml

[root@why-01 ~]# cat /etc/k8s_yaml/frontend-controller.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: frontend
  labels:
    name: frontend
spec:
  replicas: 3
  selector:
    name: frontend
  template:
    metadata:
      labels:
        name: frontend
    spec:
      containers:
      - name: frontend
        image: kubeguide/guestbook-php-frontend
        env:
        - name: GET_HOSTS_FROM
          value: env
        ports:
        - containerPort: 80

[root@why-01 ~]# kubectl create -f /etc/k8s_yaml/frontend-controller.yaml

replicationcontroller "frontend" created

[root@why-01 ~]# kubectl describe pod frontend

Name: frontend-8hl11

Namespace: default

Node: why-02/10.181.13.57

Start Time: Thu, 19 Oct 2017 00:05:04 +0800

Labels: name=frontend

Status: Running

IP: 172.17.0.5

Controllers: ReplicationController/frontend

Containers:

frontend:

Container ID: docker://0e81a76c2e382949aa0a240b91abb42d7dbc830c3255593f386c13faf164f76a

Image: kubeguide/guestbook-php-frontend

Image ID: docker-pullable://docker.io/kubeguide/guestbook-php-frontend@sha256:195181e0263bcee4ae0c3e79352bbd3487224c0042f1b9ca8543b788962188ce

Port: 80/TCP

State: Running

Started: Thu, 19 Oct 2017 00:11:01 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables:

GET_HOSTS_FROM: env

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

14m 14m 1 {default-scheduler } Normal Scheduled Successfully assigned frontend-8hl11 to why-02

14m 14m 1 {kubelet why-02} spec.containers{frontend} Normal Pulling pulling image "kubeguide/guestbook-php-frontend"

14m 8m 2 {kubelet why-02} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

8m 8m 1 {kubelet why-02} spec.containers{frontend} Normal Pulled Successfully pulled image "kubeguide/guestbook-php-frontend"

8m 8m 1 {kubelet why-02} spec.containers{frontend} Normal Created Created container with docker id 0e81a76c2e38; Security:[seccomp=unconfined]

8m 8m 1 {kubelet why-02} spec.containers{frontend} Normal Started Started container with docker id 0e81a76c2e38

Name: frontend-mh6pz

Namespace: default

Node: why-03/10.181.5.146

Start Time: Thu, 19 Oct 2017 00:05:04 +0800

Labels: name=frontend

Status: Running

IP: 172.17.0.4

Controllers: ReplicationController/frontend

Containers:

frontend:

Container ID: docker://9a2439f79699165883a33f9e057a1217bb0d25347e55c74f6ae29a332f9ac22d

Image: kubeguide/guestbook-php-frontend

Image ID: docker-pullable://docker.io/kubeguide/guestbook-php-frontend@sha256:195181e0263bcee4ae0c3e79352bbd3487224c0042f1b9ca8543b788962188ce

Port: 80/TCP

State: Running

Started: Thu, 19 Oct 2017 00:12:09 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables:

GET_HOSTS_FROM: env

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

14m 14m 1 {default-scheduler } Normal Scheduled Successfully assigned frontend-mh6pz to why-03

14m 14m 1 {kubelet why-03} spec.containers{frontend} Normal Pulling pulling image "kubeguide/guestbook-php-frontend"

14m 7m 2 {kubelet why-03} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

7m 7m 1 {kubelet why-03} spec.containers{frontend} Normal Pulled Successfully pulled image "kubeguide/guestbook-php-frontend"

7m 7m 1 {kubelet why-03} spec.containers{frontend} Normal Created Created container with docker id 9a2439f79699; Security:[seccomp=unconfined]

7m 7m 1 {kubelet why-03} spec.containers{frontend} Normal Started Started container with docker id 9a2439f79699

Name: frontend-nwc6m

Namespace: default

Node: why-03/10.181.5.146

Start Time: Thu, 19 Oct 2017 00:05:04 +0800

Labels: name=frontend

Status: Running

IP: 172.17.0.5

Controllers: ReplicationController/frontend

Containers:

frontend:

Container ID: docker://e13419bf67be0847b7e5e1d088a64d3db544a1fb3fbb4e260a5c38ef25b68256

Image: kubeguide/guestbook-php-frontend

Image ID: docker-pullable://docker.io/kubeguide/guestbook-php-frontend@sha256:195181e0263bcee4ae0c3e79352bbd3487224c0042f1b9ca8543b788962188ce

Port: 80/TCP

State: Running

Started: Thu, 19 Oct 2017 00:12:13 +0800

Ready: True

Restart Count: 0

Volume Mounts: <none>

Environment Variables:

GET_HOSTS_FROM: env

Conditions:

Type Status

Initialized True

Ready True

PodScheduled True

No volumes.

QoS Class: BestEffort

Tolerations: <none>

Events:

FirstSeen LastSeen Count From SubObjectPath Type Reason Message

--------- -------- ----- ---- ------------- -------- ------ -------

14m 14m 1 {default-scheduler } Normal Scheduled Successfully assigned frontend-nwc6m to why-03

14m 14m 1 {kubelet why-03} spec.containers{frontend} Normal Pulling pulling image "kubeguide/guestbook-php-frontend"

14m 7m 2 {kubelet why-03} Warning MissingClusterDNS kubelet does not have ClusterDNS IP configured and cannot create Pod using "ClusterFirst" policy. Falling back to DNSDefault policy.

7m 7m 1 {kubelet why-03} spec.containers{frontend} Normal Pulled Successfully pulled image "kubeguide/guestbook-php-frontend"

7m 7m 1 {kubelet why-03} spec.containers{frontend} Normal Created Created container with docker id e13419bf67be; Security:[seccomp=unconfined]

7m 7m 1 {kubelet why-03} spec.containers{frontend} Normal Started Started container with docker id e13419bf67be

Create the frontend service

[root@why-01 ~]# vi /etc/k8s_yaml/frontend-service.yaml

[root@why-01 ~]# cat /etc/k8s_yaml/frontend-service.yaml

apiVersion: v1
kind: Service
metadata:
  name: frontend
  labels:
    name: frontend
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30001
  selector:
    name: frontend

[root@why-01 ~]# kubectl create -f /etc/k8s_yaml/frontend-service.yaml

service "frontend" created

All three services and all six pods are now up and running:

[root@why-01 ~]# kubectl get service

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

frontend 10.254.136.133 <nodes> 80:30001/TCP 2s

kubernetes 10.254.0.1 <none> 443/TCP 11h

redis-master 10.254.133.172 <none> 6379/TCP 29m

redis-slave 10.254.135.38 <none> 6379/TCP 37s

[root@why-01 ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

frontend-8hl11 1/1 Running 0 14m

frontend-mh6pz 1/1 Running 0 14m

frontend-nwc6m 1/1 Running 0 14m

nginx 1/1 Running 0 5h

redis-master-7jzcq 1/1 Running 0 1h

redis-slave-70cv0 1/1 Running 0 21m

redis-slave-tbmlh 1/1 Running 0 21m

You may run into errors like the following:

[root@why-01 ~]# vi /etc/k8s_yaml/frontend-controller.yaml

[root@why-01 ~]# kubectl create -f /etc/k8s_yaml/frontend-controller.yaml

Error from server (BadRequest): error when creating "/etc/k8s_yaml/frontend-controller.yaml": ReplicationController in version "v1" cannot be handled as a ReplicationController: [pos 177]: json: expect char '"' but got char '{'

[root@why-01 ~]# vi /etc/k8s_yaml/frontend-controller.yaml

[root@why-01 ~]# kubectl create -f /etc/k8s_yaml/frontend-controller.yaml

Error from server (BadRequest): error when creating "/etc/k8s_yaml/frontend-controller.yaml": ReplicationController in version "v1" cannot be handled as a ReplicationController: [pos 177]: json: expect char '"' but got char 'n'

Errors like these are mostly caused by mistakes in the configuration file.

You can then see that both nodes, why-02 and why-03, have opened port 30001 for external access:

[root@why-02 ~]# ss -nlpt | grep 30001

LISTEN 0 128 :::30001 :::* users:(("kube-proxy",pid=12959,fd=8))

[root@why-03 ~]# ss -nlpt | grep 30001

LISTEN 0 128 :::30001 :::* users:(("kube-proxy",pid=23130,fd=7))

The official documentation uses a single node, whereas I am using two. As a result, data can be written normally through one node but not through the other.

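In other words, the redis-slave on the same node as redis-master replicates from it, so the frontend on that node can both write to redis-master and read from redis-slave. The redis-slave on the other node cannot replicate from redis-master, so the frontend there can neither write nor read data.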

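This is caused by Docker's own networking: by default, containers talk to their host through the local docker0 bridge, and the container networks of different nodes are not connected to each other.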
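One visible symptom of this is that pods on different nodes receive the same IP address, because every node hands out addresses from its own private docker0 range. The short Python sketch below groups the pod IPs reported by the `kubectl describe pod` outputs earlier in this article and lists addresses that appear on more than one node. The function name `find_ip_conflicts` is hypothetical and exists only for this illustration.

```python
from collections import defaultdict

# (pod, node, pod IP) as shown by `kubectl describe pod` earlier in this article.
pods = [
    ("redis-master-7jzcq", "why-02", "172.17.0.3"),
    ("redis-slave-tbmlh", "why-02", "172.17.0.4"),
    ("frontend-8hl11", "why-02", "172.17.0.5"),
    ("redis-slave-70cv0", "why-03", "172.17.0.3"),
    ("frontend-mh6pz", "why-03", "172.17.0.4"),
    ("frontend-nwc6m", "why-03", "172.17.0.5"),
]


def find_ip_conflicts(pod_list):
    """Map each IP used on more than one node to the (pod, node) pairs that hold it."""
    by_ip = defaultdict(list)
    for pod, node, ip in pod_list:
        by_ip[ip].append((pod, node))
    return {
        ip: owners
        for ip, owners in by_ip.items()
        if len({node for _, node in owners}) > 1
    }


conflicts = find_ip_conflicts(pods)
for ip, owners in sorted(conflicts.items()):
    print(ip, owners)
```

With overlapping addresses like these, a packet addressed to 172.17.0.3 cannot be routed to "the other node's" container, which matches the replication failure described above.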

Open a shell in redis-master-5qxmr

[root@why-01 ~]# kubectl exec -it redis-master-5qxmr -- /bin/bash

[ root@redis-master-5qxmr:/data ]$ printenv

GIT_PS1_SHOWDIRTYSTATE=1

GREP_COLOR=1;31

FRONTEND_PORT_80_TCP_ADDR=10.254.44.223

REDIS_SLAVE_PORT_6379_TCP=tcp://10.254.195.218:6379

REDIS_SLAVE_SERVICE_HOST=10.254.195.218

HOSTNAME=redis-master-5qxmr

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT=tcp://10.254.0.1:443

CLICOLOR=1

FRONTEND_PORT_80_TCP_PORT=80

REDIS_SLAVE_PORT=tcp://10.254.195.218:6379

KUBERNETES_SERVICE_PORT=443

KUBERNETES_SERVICE_HOST=10.254.0.1

LS_COLORS=di=34:ln=35:so=32:pi=33:ex=1;40:bd=34;40:cd=34;40:su=0;40:sg=0;40:tw=0;40:ow=0;40:

FRONTEND_PORT_80_TCP_PROTO=tcp

REDIS_MASTER_PORT_6379_TCP_ADDR=10.254.69.157

REDIS_MASTER_PORT_6379_TCP=tcp://10.254.69.157:6379

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

REDIS_SLAVE_PORT_6379_TCP_PROTO=tcp

REDIS_MASTER_SERVICE_PORT=6379

PWD=/data

REDIS_SLAVE_SERVICE_PORT=6379

FRONTEND_PORT=tcp://10.254.44.223:80

PS1=\[\033[40m\]\[\033[34m\][ \u@\H:\[\033[36m\]\w$(__git_ps1 " \[\033[35m\]{\[\033[32m\]%s\[\033[35m\]}")\[\033[34m\] ]$\[\033[0m\]

FRONTEND_SERVICE_PORT=80

REDIS_MASTER_SERVICE_HOST=10.254.69.157

SHLVL=1

HOME=/root

FRONTEND_SERVICE_HOST=10.254.44.223

REDIS_SLAVE_PORT_6379_TCP_ADDR=10.254.195.218

GREP_OPTIONS=--color=auto

KUBERNETES_PORT_443_TCP_PROTO=tcp

KUBERNETES_SERVICE_PORT_HTTPS=443

REDIS_MASTER_PORT_6379_TCP_PORT=6379

FRONTEND_PORT_80_TCP=tcp://10.254.44.223:80

REDIS_MASTER_PORT_6379_TCP_PROTO=tcp

REDIS_SLAVE_PORT_6379_TCP_PORT=6379

REDIS_MASTER_PORT=tcp://10.254.69.157:6379

KUBERNETES_PORT_443_TCP_ADDR=10.254.0.1

KUBERNETES_PORT_443_TCP=tcp://10.254.0.1:443

_=/usr/bin/printenv

[ root@redis-master-5qxmr:/data ]$ exit

You can see that the slave service is referenced as REDIS_SLAVE_PORT=tcp://10.254.195.218:6379

Open a shell in redis-slave-gbj1j

[root@why-01 ~]# kubectl exec -it redis-slave-gbj1j -- /bin/bash

root@redis-slave-gbj1j:/data# printenv

HOSTNAME=redis-slave-gbj1j

REDIS_DOWNLOAD_URL=http://download.redis.io/releases/redis-3.0.3.tar.gz

KUBERNETES_PORT=tcp://10.254.0.1:443

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_SERVICE_PORT=443

KUBERNETES_SERVICE_HOST=10.254.0.1

GET_HOSTS_FROM=env

REDIS_MASTER_PORT_6379_TCP_ADDR=10.254.69.157

REDIS_MASTER_PORT_6379_TCP=tcp://10.254.69.157:6379

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

REDIS_MASTER_SERVICE_PORT=6379

PWD=/data

REDIS_MASTER_SERVICE_HOST=10.254.69.157

SHLVL=1

HOME=/root

KUBERNETES_PORT_443_TCP_PROTO=tcp

REDIS_DOWNLOAD_SHA1=0e2d7707327986ae652df717059354b358b83358

REDIS_VERSION=3.0.3

KUBERNETES_SERVICE_PORT_HTTPS=443

REDIS_MASTER_PORT_6379_TCP_PORT=6379

REDIS_MASTER_PORT_6379_TCP_PROTO=tcp

REDIS_MASTER_PORT=tcp://10.254.69.157:6379

KUBERNETES_PORT_443_TCP_ADDR=10.254.0.1

KUBERNETES_PORT_443_TCP=tcp://10.254.0.1:443

_=/usr/bin/printenv

root@redis-slave-gbj1j:/data# redis-cli

127.0.0.1:6379> keys *

(empty list or set)

(4.11s)

127.0.0.1:6379> quit

root@redis-slave-gbj1j:/data# exit

Its master is REDIS_MASTER_PORT=tcp://10.254.69.157:6379, but no data has been replicated from the master.

Open a shell in redis-slave-z0h3g

[root@why-01 ~]# kubectl exec -it redis-slave-z0h3g -- /bin/bash

root@redis-slave-z0h3g:/data# printenv

FRONTEND_PORT_80_TCP_ADDR=10.254.44.223

REDIS_SLAVE_PORT_6379_TCP=tcp://10.254.195.218:6379

REDIS_SLAVE_SERVICE_HOST=10.254.195.218

HOSTNAME=redis-slave-z0h3g

REDIS_DOWNLOAD_URL=http://download.redis.io/releases/redis-3.0.3.tar.gz

KUBERNETES_PORT_443_TCP_PORT=443

KUBERNETES_PORT=tcp://10.254.0.1:443

REDIS_SLAVE_PORT=tcp://10.254.195.218:6379

FRONTEND_PORT_80_TCP_PORT=80

KUBERNETES_SERVICE_PORT=443

KUBERNETES_SERVICE_HOST=10.254.0.1

GET_HOSTS_FROM=env

FRONTEND_PORT_80_TCP_PROTO=tcp

REDIS_MASTER_PORT_6379_TCP_ADDR=10.254.69.157

REDIS_MASTER_PORT_6379_TCP=tcp://10.254.69.157:6379

PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin

REDIS_SLAVE_PORT_6379_TCP_PROTO=tcp

REDIS_MASTER_SERVICE_PORT=6379

PWD=/data

REDIS_SLAVE_SERVICE_PORT=6379

FRONTEND_PORT=tcp://10.254.44.223:80

FRONTEND_SERVICE_PORT=80

REDIS_MASTER_SERVICE_HOST=10.254.69.157

SHLVL=1

HOME=/root

FRONTEND_SERVICE_HOST=10.254.44.223

REDIS_SLAVE_PORT_6379_TCP_ADDR=10.254.195.218

KUBERNETES_PORT_443_TCP_PROTO=tcp

REDIS_DOWNLOAD_SHA1=0e2d7707327986ae652df717059354b358b83358

REDIS_VERSION=3.0.3

KUBERNETES_SERVICE_PORT_HTTPS=443

REDIS_MASTER_PORT_6379_TCP_PORT=6379

REDIS_SLAVE_PORT_6379_TCP_PORT=6379

REDIS_MASTER_PORT_6379_TCP_PROTO=tcp

FRONTEND_PORT_80_TCP=tcp://10.254.44.223:80

REDIS_MASTER_PORT=tcp://10.254.69.157:6379

KUBERNETES_PORT_443_TCP_ADDR=10.254.0.1

KUBERNETES_PORT_443_TCP=tcp://10.254.0.1:443

_=/usr/bin/printenv

root@redis-slave-z0h3g:/data# redis-cli

127.0.0.1:6379> keys *

1) "messages"

127.0.0.1:6379> quit

root@redis-slave-z0h3g:/data# exit

Its master is also REDIS_MASTER_PORT=tcp://10.254.69.157:6379, and here the data has been replicated from the master.

Check which containers Docker is running on each node

[root@why-02 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

416c3db0a12c kubeguide/guestbook-php-frontend "apache2-foreground" 20 minutes ago Up 20 minutes k8s_frontend.57642508_frontend-fq0sw_default_2fb89ebd-b424-11e7-b8d0-5254006db97b_a64cef5b

636dbbaf58ce gcr.io/google_containers/pause-amd64:3.0 "/pause" 20 minutes ago Up 20 minutes k8s_POD.b2390301_frontend-fq0sw_default_2fb89ebd-b424-11e7-b8d0-5254006db97b_82bdddd2

71d1a687f459 kubeguide/guestbook-redis-slave "/entrypoint.sh /bin/" 20 minutes ago Up 20 minutes k8s_slave.8efd23be_redis-slave-gbj1j_default_2604ced5-b424-11e7-b8d0-5254006db97b_677a878d

9ec83d641b99 gcr.io/google_containers/pause-amd64:3.0 "/pause" 20 minutes ago Up 20 minutes k8s_POD.2f630372_redis-slave-gbj1j_default_2604ced5-b424-11e7-b8d0-5254006db97b_5b8a464d

2741c4c1d52d nginx "nginx -g 'daemon off" 5 hours ago Up 5 hours k8s_nginx.156efd59_nginx_default_11fbff5b-b3f5-11e7-b8d0-5254006db97b_fb39092b

d0d9432e9d74 gcr.io/google_containers/pause-amd64:3.0 "/pause" 5 hours ago Up 5 hours k8s_POD.b2390301_nginx_default_11fbff5b-b3f5-11e7-b8d0-5254006db97b_4c4b91dc

[root@why-03 ~]# docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d452fe1d8ff3 kubeguide/guestbook-php-frontend "apache2-foreground" 3 minutes ago Up 3 minutes k8s_frontend.57642508_frontend-2lcd8_default_2fb850f0-b424-11e7-b8d0-5254006db97b_02740bd6

d02ff0a53194 kubeguide/guestbook-php-frontend "apache2-foreground" 3 minutes ago Up 3 minutes k8s_frontend.57642508_frontend-sx6j4_default_2fb8933c-b424-11e7-b8d0-5254006db97b_cf40612e

b563a931dba2 kubeguide/guestbook-redis-slave "/entrypoint.sh /bin/" 4 minutes ago Up 3 minutes k8s_slave.8efd23be_redis-slave-z0h3g_default_2604ddfd-b424-11e7-b8d0-5254006db97b_01b8daf7

cade2d915fb1 kubeguide/redis-master "redis-server /etc/re" 4 minutes ago Up 4 minutes k8s_master.dd7e04d1_redis-master-5qxmr_default_1ce5f62c-b424-11e7-b8d0-5254006db97b_e9a39a1b

42c93943f07c gcr.io/google_containers/pause-amd64:3.0 "/pause" 5 minutes ago Up 5 minutes k8s_POD.b2390301_frontend-sx6j4_default_2fb8933c-b424-11e7-b8d0-5254006db97b_0614567b

0c0fd72f0e14 gcr.io/google_containers/pause-amd64:3.0 "/pause" 5 minutes ago Up 5 minutes k8s_POD.b2390301_frontend-2lcd8_default_2fb850f0-b424-11e7-b8d0-5254006db97b_ffc5c407

888e156fd08a gcr.io/google_containers/pause-amd64:3.0 "/pause" 5 minutes ago Up 5 minutes k8s_POD.2f630372_redis-slave-z0h3g_default_2604ddfd-b424-11e7-b8d0-5254006db97b_7a322e1b

47fb44ce9302 gcr.io/google_containers/pause-amd64:3.0 "/pause" 5 minutes ago Up 5 minutes k8s_POD.2f630372_redis-master-5qxmr_default_1ce5f62c-b424-11e7-b8d0-5254006db97b_d6432ea8

You can see that each pod actually runs two containers: one from gcr.io/google_containers/pause-amd64:3.0 and one from the image of the service itself.

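redis-slave-z0h3g happens to be on the same node as redis-master. The other redis-slave is on a different node, and because Docker's default network relies on the docker0 bridge, containers cannot communicate across nodes.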

Flannel network

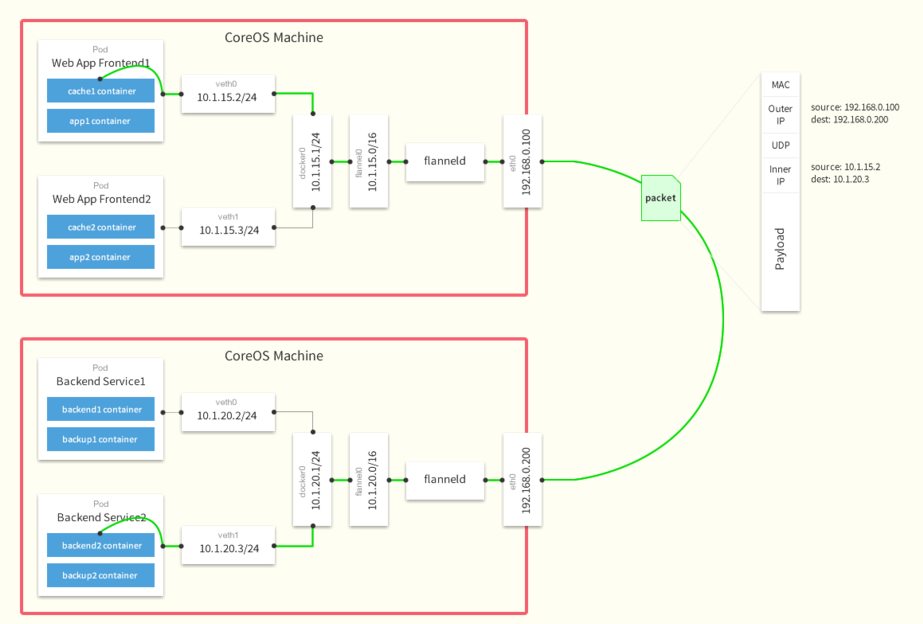

Flannel network architecture

How data is transmitted

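The original packet is encapsulated in UDP by the flanneld service on the source node. After delivery to the destination node, the flanneld service on the other end restores it to the original packet; Docker on either side is unaware of this process.

- After leaving the source container, the data is forwarded by the host's docker0 virtual interface to the flannel0 virtual interface. flannel0 is a point-to-point virtual interface, with the flanneld service listening on its other end.

- Flannel maintains a routing table between nodes through the etcd service; its contents are covered in the configuration part later.

- The flanneld service on the source host encapsulates the original data in UDP and, according to its routing table, delivers it to the flanneld service on the destination node. There the data is unpacked and goes straight into the destination node's flannel0 virtual interface, is forwarded to that host's docker0 virtual interface, and is finally routed by docker0 to the target container, just like traffic between containers on the same host.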

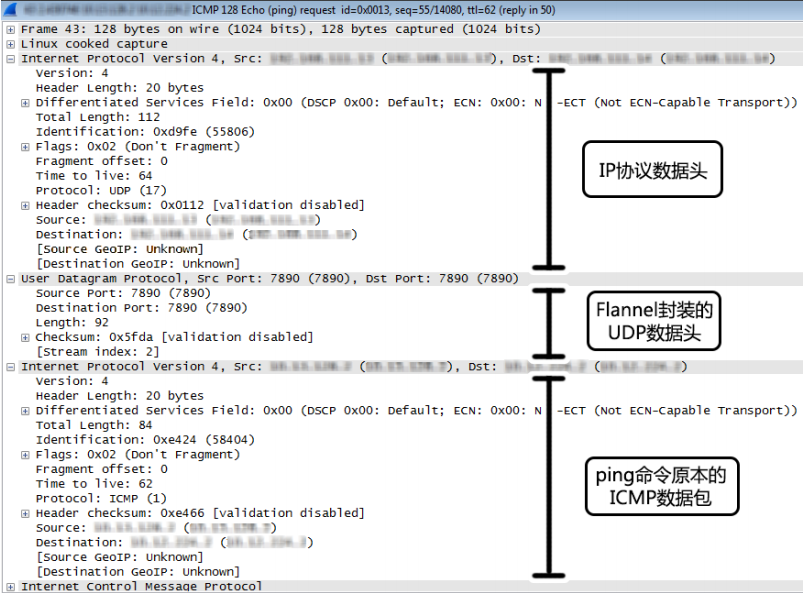

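That completes the delivery of the packet. Three questions need explaining here:

- What is UDP encapsulation?

In the example in the diagram, the payload of the UDP packet is itself another packet, an ICMP packet (the kind sent by the ping command). The original packet is encapsulated in UDP by the flanneld service on the source node and restored to the original packet by the flanneld service on the destination node; the Docker services on both sides are unaware of this.

- Why does Docker on each node use a different IP address range?

It looks odd, but the reason is simple: after Flannel allocates each node's usable IP range through etcd, it quietly changes Docker's startup parameters.

On a node running the Flannel service you can inspect the arguments of the Docker daemon with ps aux|grep docker|grep "bip" and see a parameter such as "--bip=182.48.25.1/24", which restricts the IP range that containers on that node can obtain. The range is assigned automatically by Flannel, which uses the records stored in etcd to make sure the ranges do not overlap.

- Why is data routed from docker0 to flannel0 on the sending node, and from flannel0 to docker0 on the destination node?

Suppose a packet is sent from a container with IP 172.17.18.2 to a container with IP 172.17.46.2. In the sending node's routing table the destination matches only the 172.17.0.0/16 entry, so after leaving docker0 the packet is delivered to flannel0. Likewise on the destination node: because the destination address belongs to a local container, it falls within the 172.17.46.0/24 entry that corresponds to docker0, so the packet is naturally delivered to the docker0 interface.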
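The routing decision in the last answer is an instance of longest-prefix matching, and it can be sketched with Python's standard `ipaddress` module. The route tables below are assumptions made for illustration: the 172.17.0.0/16 and 172.17.46.0/24 entries come from the text, while the sender's 172.17.18.0/24 docker0 entry is inferred from the source container's address. The helper name `pick_interface` is hypothetical.

```python
import ipaddress


def pick_interface(routes, destination):
    """Return the device of the most specific route that contains the destination."""
    dest = ipaddress.ip_address(destination)
    matching = [
        (ipaddress.ip_network(cidr), dev)
        for cidr, dev in routes
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matching:
        return None
    # Longest prefix wins, as in a kernel routing table.
    return max(matching, key=lambda item: item[0].prefixlen)[1]


# Assumed routes on the sending node (container 172.17.18.2 lives here).
sender_routes = [("172.17.0.0/16", "flannel0"), ("172.17.18.0/24", "docker0")]
# Assumed routes on the destination node (container 172.17.46.2 lives here).
receiver_routes = [("172.17.0.0/16", "flannel0"), ("172.17.46.0/24", "docker0")]

print(pick_interface(sender_routes, "172.17.46.2"))    # flannel0
print(pick_interface(receiver_routes, "172.17.46.2"))  # docker0
```

On the sender only the /16 entry contains 172.17.46.2, so the packet leaves through flannel0; on the receiver the /24 entry is more specific than the /16 one, so the packet is handed to docker0.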

Define the network range in etcd

[root@why-01 ~]# etcdctl set /coreos.com/network/config '{ "Network": "10.1.0.0/16" }'

{ "Network": "10.1.0.0/16" }

Install flannel on the nodes

[root@why-02 ~]# yum install -y flannel

[root@why-02 ~]# vi /etc/sysconfig/flanneld

FLANNEL_ETCD_ENDPOINTS="http://140.143.187.188:2379"

FLANNEL_ETCD_PREFIX="/coreos.com/network"

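Note that these two settings point to the etcd endpoint and to the key prefix under which the network range was defined in etcd.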

Start the flannel service

[root@why-02 ~]# systemctl restart flanneld.service

[root@why-02 ~]# systemctl status flanneld.service

● flanneld.service - Flanneld overlay address etcd agent

Loaded: loaded (/usr/lib/systemd/system/flanneld.service; disabled; vendor preset: disabled)

Active: active (running) since Thu 2017-10-19 19:15:52 CST; 2s ago

Process: 30558 ExecStartPost=/usr/libexec/flannel/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/docker (code=exited, status=0/SUCCESS)

Main PID: 30552 (flanneld)

Memory: 6.4M

CGroup: /system.slice/flanneld.service

└─30552 /usr/bin/flanneld -etcd-endpoints=http://140.143.187.188:2379 -etcd-prefix=/coreos.com/network

Oct 19 19:15:52 why-02 systemd[1]: Starting Flanneld overlay address etcd agent...

Oct 19 19:15:52 why-02 flanneld-start[30552]: I1019 19:15:52.601384 30552 main.go:132] Installing signal handlers

Oct 19 19:15:52 why-02 flanneld-start[30552]: I1019 19:15:52.601449 30552 manager.go:136] Determining IP address of default interface

Oct 19 19:15:52 why-02 flanneld-start[30552]: I1019 19:15:52.601591 30552 manager.go:149] Using interface with name eth0 and address 10.181.13.57

Oct 19 19:15:52 why-02 flanneld-start[30552]: I1019 19:15:52.601602 30552 manager.go:166] Defaulting external address to interface address (10.181.13.57)

Oct 19 19:15:52 why-02 flanneld-start[30552]: I1019 19:15:52.609340 30552 local_manager.go:134] Found lease (10.1.93.0/24) for current IP (10.181.13.57), reusing

Oct 19 19:15:52 why-02 flanneld-start[30552]: I1019 19:15:52.612253 30552 manager.go:250] Lease acquired: 10.1.93.0/24

Oct 19 19:15:52 why-02 flanneld-start[30552]: I1019 19:15:52.612423 30552 network.go:98] Watching for new subnet leases

Oct 19 19:15:52 why-02 flanneld-start[30552]: I1019 19:15:52.615813 30552 network.go:191] Subnet added: 10.1.45.0/24

Oct 19 19:15:52 why-02 systemd[1]: Started Flanneld overlay address etcd agent.

[root@why-02 ~]# ps -ef|grep flannel

root 30552 1 0 19:15 ? 00:00:00 /usr/bin/flanneld -etcd-endpoints=http://140.143.187.188:2379 -etcd-prefix=/coreos.com/network

root 31354 12435 0 19:16 pts/1 00:00:00 grep --color=auto flannel

Check the network interfaces

[root@why-02 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 0.0.0.0

ether 02:42:38:ab:74:39 txqueuelen 0 (Ethernet)

RX packets 502 bytes 253487 (247.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 516 bytes 61070 (59.6 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.181.13.57 netmask 255.255.128.0 broadcast 10.181.127.255

ether 52:54:00:55:0a:7f txqueuelen 1000 (Ethernet)

RX packets 1572917 bytes 881973732 (841.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1255904 bytes 138616884 (132.1 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 10.1.93.0 netmask 255.255.0.0 destination 10.1.93.0

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1 (Local Loopback)

RX packets 40529 bytes 81078926 (77.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 40529 bytes 81078926 (77.3 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

You can see that the flannel0 interface is in the 10.1.0.0/16 network we defined earlier.

Restart Docker

[root@why-02 ~]# systemctl restart docker

[root@why-02 ~]# ifconfig

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 10.1.93.1 netmask 255.255.255.0 broadcast 0.0.0.0

ether 02:42:38:ab:74:39 txqueuelen 0 (Ethernet)

RX packets 502 bytes 253487 (247.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 516 bytes 61070 (59.6 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.181.13.57 netmask 255.255.128.0 broadcast 10.181.127.255

ether 52:54:00:55:0a:7f txqueuelen 1000 (Ethernet)

RX packets 1573157 bytes 882030815 (841.1 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1256129 bytes 138646230 (132.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel0: flags=4305<UP,POINTOPOINT,RUNNING,NOARP,MULTICAST> mtu 1472

inet 10.1.93.0 netmask 255.255.0.0 destination 10.1.93.0

unspec 00-00-00-00-00-00-00-00-00-00-00-00-00-00-00-00 txqueuelen 500 (UNSPEC)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1 (Local Loopback)

RX packets 40531 bytes 81079026 (77.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 40531 bytes 81079026 (77.3 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

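After the restart, docker0 takes its address from the subnet that flannel assigned to flannel0.

The docker daemon is also started with the bip parameter: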

[root@why-02 ~]# ps aux|grep docker|grep "bip"

root 31516 0.1 5.0 835804 51244 ? Ssl 19:17 0:01 /usr/bin/dockerd-current --add-runtime docker-runc=/usr/libexec/docker/docker-runc-current --default-runtime=docker-runc --exec-opt native.cgroupdriver=systemd --userland-proxy-path=/usr/libexec/docker/docker-proxy-current --selinux-enabled --log-driver=journald --signature-verification=false --bip=10.1.93.1/24 --ip-masq=true --mtu=1472
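The relationship between the docker flags and the flannel network can be checked mechanically. The following is a minimal sketch in Python, assuming the network flags are available as a string (shortened here to the three flags from the output above). `parse_flags` is a helper name made up for this example, not part of docker or flannel.

```python
import ipaddress

# Network flags copied from the dockerd command line shown above.
DOCKERD_ARGS = "--bip=10.1.93.1/24 --ip-masq=true --mtu=1472"
# The flannel network defined earlier in this walkthrough.
FLANNEL_NETWORK = ipaddress.ip_network("10.1.0.0/16")


def parse_flags(args):
    """Turn '--key=value' tokens into a dict (hypothetical helper)."""
    flags = {}
    for token in args.split():
        if token.startswith("--") and "=" in token:
            key, value = token[2:].split("=", 1)
            flags[key] = value
    return flags


flags = parse_flags(DOCKERD_ARGS)
# --bip carries the docker0 address together with its prefix length.
bridge = ipaddress.ip_interface(flags["bip"])

print("docker0 address:", bridge.ip)
print("per-node subnet:", bridge.network)
print("inside flannel network:", bridge.network.subnet_of(FLANNEL_NETWORK))
print("mtu:", flags["mtu"])
```

The per-node /24 falls inside the /16 flannel network, and the mtu value is the same 1472 that ifconfig reports for flannel0.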

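During this process, stopping docker also shuts down the kubelet process, and Kubernetes then reports the node as NotReady.

Restarting the kubelet process fixes this.

A restart of docker, on the other hand, causes no such problem.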

How services interact

How Redis master-slave replication is implemented

[root@why-01 ~]# kubectl exec -it redis-slave-4k60b -- /bin/bash

root@redis-slave-4k60b:/data# cat /run.sh

#!/bin/bash

# Copyright 2014 The Kubernetes Authors All rights reserved.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

if [[ ${GET_HOSTS_FROM:-dns} == "env" ]]; then

redis-server --slaveof ${REDIS_MASTER_SERVICE_HOST} 6379

else

redis-server --slaveof redis-master 6379

fi

root@redis-slave-4k60b:/data# env | grep REDIS_MASTER_SERVICE_HOST

REDIS_MASTER_SERVICE_HOST=10.254.69.157

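As shown, the redis-slave image runs the run.sh script at startup. With GET_HOSTS_FROM set to env, run.sh reads REDIS_MASTER_SERVICE_HOST from the environment, which holds the IP address of the redis-master service, and starts redis-server as a slave of that address.

How the frontend separates reads and writes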
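The branch in run.sh can be restated as a small function. This is a sketch in Python rather than the script itself; `master_host` and `slaveof_command` are names invented for the example, and the environment is passed in as a plain dict so the logic can be tried outside a pod.

```python
def master_host(env):
    """Mirror the branch in run.sh: env variable or DNS name."""
    # ${GET_HOSTS_FROM:-dns} falls back to "dns" when unset or empty.
    mode = env.get("GET_HOSTS_FROM") or "dns"
    if mode == "env":
        return env["REDIS_MASTER_SERVICE_HOST"]
    return "redis-master"


def slaveof_command(env):
    """Build the redis-server command line that run.sh would execute."""
    return ["redis-server", "--slaveof", master_host(env), "6379"]


# Values taken from the env output of the redis-slave pod above.
in_pod = {
    "GET_HOSTS_FROM": "env",
    "REDIS_MASTER_SERVICE_HOST": "10.254.69.157",
}
print(slaveof_command(in_pod))
print(slaveof_command({}))  # no GET_HOSTS_FROM: the DNS name is used
```

In DNS mode the script relies on the name redis-master being resolvable inside the cluster, which requires a cluster DNS add-on; this walkthrough uses the env mode instead.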

[root@why-01 ~]# kubectl exec -it frontend-0rzd1 -- /bin/bash

root@frontend-0rzd1:/var/www/html# cat guestbook.php

<?

set_include_path('.:/usr/local/lib/php');

error_reporting(E_ALL);

ini_set('display_errors', 1);

require 'Predis/Autoloader.php';

Predis\Autoloader::register();

if (isset($_GET['cmd']) === true) {

$host = 'redis-master';

if (getenv('GET_HOSTS_FROM') == 'env') {

$host = getenv('REDIS_MASTER_SERVICE_HOST');

}

header('Content-Type: application/json');

if ($_GET['cmd'] == 'set') {

$client = new Predis\Client([

'scheme' => 'tcp',

'host' => $host,

'port' => 6379,

]);

$client->set($_GET['key'], $_GET['value']);

print('{"message": "Updated"}');

} else {

$host = 'redis-slave';

if (getenv('GET_HOSTS_FROM') == 'env') {

$host = getenv('REDIS_SLAVE_SERVICE_HOST');

}

$client = new Predis\Client([

'scheme' => 'tcp',

'host' => $host,

'port' => 6379,

]);

$value = $client->get($_GET['key']);

print('{"data": "' . $value . '"}');

}

} else {

phpinfo();

} ?>

root@frontend-0rzd1:/var/www/html# env | grep 'REDIS_MASTER_SERVICE_HOST'

REDIS_MASTER_SERVICE_HOST=10.254.69.157

root@frontend-0rzd1:/var/www/html# env | grep 'REDIS_SLAVE_SERVICE_HOST'

REDIS_SLAVE_SERVICE_HOST=10.254.195.218

The PHP code likewise sends writes to REDIS_MASTER_SERVICE_HOST and reads to REDIS_SLAVE_SERVICE_HOST.
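The routing rule in guestbook.php is easy to miss between the Predis calls, so here it is in isolation. This is a Python restatement for illustration only; `redis_host_for` is a made-up name, and the dict stands in for the pod environment.

```python
def redis_host_for(cmd, env):
    """Pick the Redis host as guestbook.php does.

    'set' goes to the master; any other command goes to the slaves.
    """
    if cmd == "set":
        dns_name, variable = "redis-master", "REDIS_MASTER_SERVICE_HOST"
    else:
        dns_name, variable = "redis-slave", "REDIS_SLAVE_SERVICE_HOST"
    # With GET_HOSTS_FROM=env the service IP comes from the environment,
    # otherwise the service name is used as the host.
    if env.get("GET_HOSTS_FROM") == "env":
        return env[variable]
    return dns_name


# Values taken from the env output of the frontend pod above.
frontend_env = {
    "GET_HOSTS_FROM": "env",
    "REDIS_MASTER_SERVICE_HOST": "10.254.69.157",
    "REDIS_SLAVE_SERVICE_HOST": "10.254.195.218",
}
print(redis_host_for("set", frontend_env))
print(redis_host_for("get", frontend_env))
```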

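The flannel network is only used for communication between nodes and does not affect how Kubernetes allocates service IPs: the addresses in the environment variables still come from the range defined in Kubernetes, 10.254.0.0/16.

Compared with a plain routed setup, flannel's network performance is not very good.

In a network you control, routing combined with quagga is the better choice.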

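Service registration and discovery rely on the fixed virtual IP and port assigned to each service; the environment variables in each container map every service name to its IP.

Load balancing is done by the kube-proxy service on each node, which proxies the traffic.

For deployment, you only specify the number of instances and the system schedules them on its own.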

Automatic monitoring and self-healing: when a pod dies, its ReplicationController creates a replacement pod.

Centralized configuration that takes effect in real time, kept in etcd (which plays a role similar to zookeeper).
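The mapping from service name to environment variable follows a fixed rule: Kubernetes upper-cases the service name, turns dashes into underscores, and appends _SERVICE_HOST and _SERVICE_PORT. A small Python sketch of that rule follows; the function name is made up for the example.

```python
def service_env_names(service_name):
    """Return the (host, port) variable names injected for a service."""
    prefix = service_name.upper().replace("-", "_")
    return prefix + "_SERVICE_HOST", prefix + "_SERVICE_PORT"


for name in ("redis-master", "redis-slave", "frontend"):
    print(name, "->", service_env_names(name))
```

These variables are only injected into pods that start after the service exists. That is worth keeping in mind when choosing between the two creation orders mentioned at the start of this article.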

[root@why-01 ~]# kubectl exec -it frontend-l8bpq -- /bin/bash

root@frontend-l8bpq:/var/www/html# env | grep 'REDIS_MASTER_SERVICE_HOST'

REDIS_MASTER_SERVICE_HOST=10.254.69.157

root@frontend-l8bpq:/var/www/html# env | grep 'REDIS_SLAVE_SERVICE_HOST'

REDIS_SLAVE_SERVICE_HOST=10.254.195.218

root@frontend-l8bpq:/var/www/html# exit

[root@why-01 ~]# kubectl exec -it frontend- -- /bin/bash

frontend-0rzd1 frontend-l8bpq frontend-sk1wm

[root@why-01 ~]# kubectl exec -it frontend-sk1wm -- /bin/bash

root@frontend-sk1wm:/var/www/html# env | grep 'REDIS_MASTER_SERVICE_HOST'

REDIS_MASTER_SERVICE_HOST=10.254.69.157

root@frontend-sk1wm:/var/www/html# env | grep 'REDIS_SLAVE_SERVICE_HOST'

REDIS_SLAVE_SERVICE_HOST=10.254.195.218

root@frontend-sk1wm:/var/www/html# exit

[root@why-01 ~]# kubectl exec -it frontend- -- /bin/bash

frontend-0rzd1 frontend-l8bpq frontend-sk1wm

[root@why-01 ~]# kubectl exec -it frontend-0rzd1 -- /bin/bash

root@frontend-0rzd1:/var/www/html# env | grep 'REDIS_SLAVE_SERVICE_HOST'

REDIS_SLAVE_SERVICE_HOST=10.254.195.218

root@frontend-0rzd1:/var/www/html# exit

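All three frontend pods point to the same slave address. This IP is the virtual IP of the redis-slave service, and traffic sent to it is handed to kube-proxy.

How Kubernetes stores data in etcd

Features provided by etcd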
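To make the idea of one virtual IP in front of several pods concrete, here is a toy model in Python. It is not how kube-proxy is implemented: the real backend selection depends on the proxy mode in use, and round-robin is chosen here only because it is easy to follow. The class name is invented, and the second backend is a placeholder string because that pod's IP is not shown in this article.

```python
import itertools


class VirtualService:
    """Toy model: one stable virtual IP, several pod backends."""

    def __init__(self, virtual_ip, backends):
        self.virtual_ip = virtual_ip
        self._cycle = itertools.cycle(backends)

    def route(self):
        """Return the backend that would receive the next connection."""
        return next(self._cycle)


redis_slave = VirtualService(
    "10.254.195.218",  # REDIS_SLAVE_SERVICE_HOST seen in every frontend pod
    [
        "10.1.45.7",                   # redis-slave-x8tvb, per its etcd record
        "<redis-slave-4k60b pod IP>",  # placeholder, IP not shown here
    ],
)

# Clients only ever see the virtual IP; the backend changes per connection.
for _ in range(3):
    print(redis_slave.virtual_ip, "->", redis_slave.route())
```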

[root@why-01 ~]# etcdctl --help

NAME:

etcdctl - A simple command line client for etcd.

WARNING:

Environment variable ETCDCTL_API is not set; defaults to etcdctl v2.

Set environment variable ETCDCTL_API=3 to use v3 API or ETCDCTL_API=2 to use v2 API.

USAGE:

etcdctl [global options] command [command options] [arguments...]

VERSION:

3.2.5

COMMANDS:

backup backup an etcd directory

cluster-health check the health of the etcd cluster

mk make a new key with a given value

mkdir make a new directory

rm remove a key or a directory

rmdir removes the key if it is an empty directory or a key-value pair

get retrieve the value of a key

ls retrieve a directory

set set the value of a key

setdir create a new directory or update an existing directory TTL

update update an existing key with a given value

updatedir update an existing directory

watch watch a key for changes

exec-watch watch a key for changes and exec an executable

member member add, remove and list subcommands

user user add, grant and revoke subcommands

role role add, grant and revoke subcommands

auth overall auth controls

help, h Shows a list of commands or help for one command

GLOBAL OPTIONS:

--debug output cURL commands which can be used to reproduce the request

--no-sync don't synchronize cluster information before sending request

--output simple, -o simple output response in the given format (simple, `extended` or `json`) (default: "simple")

--discovery-srv value, -D value domain name to query for SRV records describing cluster endpoints

--insecure-discovery accept insecure SRV records describing cluster endpoints

--peers value, -C value DEPRECATED - "--endpoints" should be used instead

--endpoint value DEPRECATED - "--endpoints" should be used instead

--endpoints value a comma-delimited list of machine addresses in the cluster (default: "http://127.0.0.1:2379,http://127.0.0.1:4001")

--cert-file value identify HTTPS client using this SSL certificate file

--key-file value identify HTTPS client using this SSL key file

--ca-file value verify certificates of HTTPS-enabled servers using this CA bundle

--username value, -u value provide username[:password] and prompt if password is not supplied.

--timeout value connection timeout per request (default: 2s)

--total-timeout value timeout for the command execution (except watch) (default: 5s)

--help, -h show help

--version, -v print the version

Start by looking at what is in etcd; the layout is much like what you find in zookeeper.

[root@why-01 ~]# etcdctl ls

/registry

/coreos.com

registry is where the Kubernetes data is stored.

pods

[root@why-01 ~]# etcdctl ls /registry

/registry/ranges

/registry/serviceaccounts

/registry/clusterrolebindings

/registry/events

/registry/namespaces

/registry/pods

/registry/services

/registry/clusterroles

/registry/controllers

/registry/minions

[root@why-01 ~]# etcdctl ls /registry/pods

/registry/pods/default

[root@why-01 ~]# etcdctl ls /registry/pods/default

/registry/pods/default/redis-master-8g9zf

/registry/pods/default/redis-slave-4k60b

/registry/pods/default/frontend-0rzd1

/registry/pods/default/frontend-sk1wm

/registry/pods/default/frontend-l8bpq

/registry/pods/default/redis-slave-x8tvb

[root@why-01 ~]# etcdctl ls /registry/pods/default/frontend-0rzd1

/registry/pods/default/frontend-0rzd1

[root@why-01 ~]# etcdctl get /registry/pods/default/frontend-0rzd1

{"kind":"Pod","apiVersion":"v1","metadata":{"name":"frontend-0rzd1","generateName":"frontend-","namespace":"default","selfLink":"/api/v1/namespaces/default/pods/frontend-0rzd1","uid":"2759ee5c-b4c0-11e7-b8d0-5254006db97b","creationTimestamp":"2017-10-19T11:25:04Z","labels":{"name":"frontend"},"annotations":{"kubernetes.io/created-by":"{\"kind\":\"SerializedReference\",\"apiVersion\":\"v1\",\"reference\":{\"kind\":\"ReplicationController\",\"namespace\":\"default\",\"name\":\"frontend\",\"uid\":\"2fb7837c-b424-11e7-b8d0-5254006db97b\",\"apiVersion\":\"v1\",\"resourceVersion\":\"121085\"}}\n"},"ownerReferences":[{"apiVersion":"v1","kind":"ReplicationController","name":"frontend","uid":"2fb7837c-b424-11e7-b8d0-5254006db97b","controller":true}]},"spec":{"containers":[{"name":"frontend","image":"kubeguide/guestbook-php-frontend","ports":[{"containerPort":80,"protocol":"TCP"}],"env":[{"name":"GET_HOSTS_FROM","value":"env"}],"resources":{},"terminationMessagePath":"/dev/termination-log","imagePullPolicy":"Always"}],"restartPolicy":"Always","terminationGracePeriodSeconds":30,"dnsPolicy":"ClusterFirst","nodeName":"why-02","securityContext":{}},"status":{"phase":"Running","conditions":[{"type":"Initialized","status":"True","lastProbeTime":null,"lastTransitionTime":"2017-10-19T11:25:04Z"},{"type":"Ready","status":"True","lastProbeTime":null,"lastTransitionTime":"2017-10-19T11:25:09Z"},{"type":"PodScheduled","status":"True","lastProbeTime":null,"lastTransitionTime":"2017-10-19T11:25:04Z"}],"hostIP":"10.181.13.57","podIP":"10.1.93.4","startTime":"2017-10-19T11:25:04Z","containerStatuses":[{"name":"frontend","state":{"running":{"startedAt":"2017-10-19T11:25:08Z"}},"lastState":{},"ready":true,"restartCount":0,"image":"kubeguide/guestbook-php-frontend","imageID":"docker-pullable://docker.io/kubeguide/guestbook-php-frontend@sha256:195181e0263bcee4ae0c3e79352bbd3487224c0042f1b9ca8543b788962188ce","containerID":"docker://956b264df571674876ecfe3f1d4b6b5814264b8cc4538858b33d782a93cfb236"}]}}

[root@why-01 ~]# etcdctl get /registry/pods/default/redis-slave-x8tvb

{"kind":"Pod","apiVersion":"v1","metadata":{"name":"redis-slave-x8tvb","generateName":"redis-slave-","namespace":"default","selfLink":"/api/v1/namespaces/default/pods/redis-slave-x8tvb","uid":"22e4a727-b4c0-11e7-b8d0-5254006db97b","creationTimestamp":"2017-10-19T11:24:57Z","labels":{"name":"redis-slave"},"annotations":{"kubernetes.io/created-by":"{\"kind\":\"SerializedReference\",\"apiVersion\":\"v1\",\"reference\":{\"kind\":\"ReplicationController\",\"namespace\":\"default\",\"name\":\"redis-slave\",\"uid\":\"26044c0d-b424-11e7-b8d0-5254006db97b\",\"apiVersion\":\"v1\",\"resourceVersion\":\"121536\"}}\n"},"ownerReferences":[{"apiVersion":"v1","kind":"ReplicationController","name":"redis-slave","uid":"26044c0d-b424-11e7-b8d0-5254006db97b","controller":true}]},"spec":{"containers":[{"name":"slave","image":"kubeguide/guestbook-redis-slave","ports":[{"containerPort":6379,"protocol":"TCP"}],"env":[{"name":"GET_HOSTS_FROM","value":"env"}],"resources":{},"terminationMessagePath":"/dev/termination-log","imagePullPolicy":"Always"}],"restartPolicy":"Always","terminationGracePeriodSeconds":30,"dnsPolicy":"ClusterFirst","nodeName":"why-03","securityContext":{}},"status":{"phase":"Running","conditions":[{"type":"Initialized","status":"True","lastProbeTime":null,"lastTransitionTime":"2017-10-19T11:24:57Z"},{"type":"Ready","status":"True","lastProbeTime":null,"lastTransitionTime":"2017-10-19T11:25:03Z"},{"type":"PodScheduled","status":"True","lastProbeTime":null,"lastTransitionTime":"2017-10-19T11:24:57Z"}],"hostIP":"10.181.5.146","podIP":"10.1.45.7","startTime":"2017-10-19T11:24:57Z","containerStatuses":[{"name":"slave","state":{"running":{"startedAt":"2017-10-19T11:25:02Z"}},"lastState":{},"ready":true,"restartCount":0,"image":"kubeguide/guestbook-redis-slave","imageID":"docker-pullable://docker.io/kubeguide/guestbook-redis-slave@sha256:a36fec97659fe96b5b28750d88b5cfb84a45138bcf1397c8e237031b8855c58c","containerID":"docker://b32a3d5bd5062c0ad0069966f47c1f765899f20f2889d84681dbdd404d4d601a"}]}}
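The pod records are single-line JSON and hard to read by eye. A short Python sketch can pull out the fields that matter most. The record below is a trimmed copy of the frontend-0rzd1 entry (only a handful of its fields are kept), and `summarize_pod` is a name made up for this example.

```python
import json

# Trimmed copy of /registry/pods/default/frontend-0rzd1.
POD_RECORD = """
{"kind": "Pod",
 "metadata": {"name": "frontend-0rzd1", "namespace": "default",
              "labels": {"name": "frontend"}},
 "spec": {"nodeName": "why-02"},
 "status": {"phase": "Running",
            "hostIP": "10.181.13.57", "podIP": "10.1.93.4"}}
"""


def summarize_pod(raw):
    """Reduce a pod record to name, placement, addresses and phase."""
    pod = json.loads(raw)
    meta, status = pod["metadata"], pod["status"]
    return {
        "name": meta["name"],
        "namespace": meta["namespace"],
        "node": pod["spec"]["nodeName"],
        "host_ip": status["hostIP"],
        "pod_ip": status["podIP"],
        "phase": status["phase"],
    }


print(summarize_pod(POD_RECORD))
```

The podIP comes from the flannel network (10.1.0.0/16), while hostIP is the address of the node the pod was scheduled on.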

service

specs

[root@why-01 ~]# etcdctl ls /registry/services

/registry/services/endpoints

/registry/services/specs

[root@why-01 ~]# etcdctl ls /registry/services/specs

/registry/services/specs/default

[root@why-01 ~]# etcdctl ls /registry/services/specs/default

/registry/services/specs/default/frontend

/registry/services/specs/default/kubernetes

/registry/services/specs/default/redis-master

/registry/services/specs/default/redis-slave

[root@why-01 ~]# etcdctl ls /registry/services/specs/default/frontend

/registry/services/specs/default/frontend

[root@why-01 ~]# etcdctl get /registry/services/specs/default/frontend

{"kind":"Service","apiVersion":"v1","metadata":{"name":"frontend","namespace":"default","uid":"2fa19c5d-b424-11e7-b8d0-5254006db97b","creationTimestamp":"2017-10-18T16:48:36Z","labels":{"name":"frontend"}},"spec":{"ports":[{"protocol":"TCP","port":80,"targetPort":80,"nodePort":30001}],"selector":{"name":"frontend"},"clusterIP":"10.254.44.223","type":"NodePort","sessionAffinity":"None"},"status":{"loadBalancer":{}}}
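The service record ties together three port numbers: the service port on the cluster IP, the targetPort inside the pods, and the nodePort opened on every node. The Python sketch below lists the addresses at which the frontend should be reachable. It works on a trimmed copy of the record above; the node IPs are the hostIP values that appear in the pod records, and `access_points` is a hypothetical helper.

```python
import ipaddress
import json

# Trimmed copy of /registry/services/specs/default/frontend.
SERVICE_RECORD = """
{"metadata": {"name": "frontend"},
 "spec": {"ports": [{"protocol": "TCP", "port": 80,
                     "targetPort": 80, "nodePort": 30001}],
          "selector": {"name": "frontend"},
          "clusterIP": "10.254.44.223",
          "type": "NodePort"}}
"""
NODE_IPS = ["10.181.13.57", "10.181.5.146"]  # why-02 and why-03
SERVICE_RANGE = ipaddress.ip_network("10.254.0.0/16")


def access_points(raw, node_ips):
    """List 'ip:port' strings for the cluster IP and each node port."""
    spec = json.loads(raw)["spec"]
    points = []
    for port in spec["ports"]:
        points.append("{}:{}".format(spec["clusterIP"], port["port"]))
        if spec["type"] == "NodePort":
            for ip in node_ips:
                points.append("{}:{}".format(ip, port["nodePort"]))
    return points


print(access_points(SERVICE_RECORD, NODE_IPS))
cluster_ip = json.loads(SERVICE_RECORD)["spec"]["clusterIP"]
print(ipaddress.ip_address(cluster_ip) in SERVICE_RANGE)
```

The cluster IP is only meaningful inside the cluster; from outside, the node addresses with port 30001 are the ones to use.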

endpoints

[root@why-01 ~]# etcdctl ls /registry/services/endpoints/default

/registry/services/endpoints/default/frontend

/registry/services/endpoints/default/kubernetes

/registry/services/endpoints/default/redis-master

/registry/services/endpoints/default/redis-slave

[root@why-01 ~]# etcdctl ls /registry/services/endpoints/default/frontend

/registry/services/endpoints/default/frontend

[root@why-01 ~]# etcdctl get /registry/services/endpoints/default/frontend

{"kind":"Endpoints","apiVersion":"v1","metadata":{"name":"frontend","namespace":"default","selfLink":"/api/v1/namespaces/default/endpoints/frontend","uid":"2fa294cf-b424-11e7-b8d0-5254006db97b","creationTimestamp":"2017-10-18T16:48:36Z","labels":{"name":"frontend"}},"subsets":[{"addresses":[{"ip":"10.1.45.4","nodeName":"why-03","targetRef":{"kind":"Pod","namespace":"default","name":"frontend-sk1wm","uid":"2ef6a73c-b4c0-11e7-b8d0-5254006db97b","resourceVersion":"121666"}},{"ip":"10.1.93.4","nodeName":"why-02","targetRef":{"kind":"Pod","namespace":"default","name":"frontend-0rzd1","uid":"2759ee5c-b4c0-11e7-b8d0-5254006db97b","resourceVersion":"121597"}},{"ip":"10.1.93.5","nodeName":"why-02","targetRef":{"kind":"Pod","namespace":"default","name":"frontend-l8bpq","uid":"2b8cbbe5-b4c0-11e7-b8d0-5254006db97b","resourceVersion":"121629"}}],"ports":[{"port":80,"protocol":"TCP"}]}]}
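The endpoints record is what connects the frontend service to the actual pods. A Python sketch that groups the pod addresses by node is shown below. It uses a trimmed copy of the record above (uid and resourceVersion fields dropped), and `pods_by_node` is a name invented for the example.

```python
import json
from collections import defaultdict

# Trimmed copy of /registry/services/endpoints/default/frontend.
ENDPOINTS_RECORD = """
{"kind": "Endpoints", "metadata": {"name": "frontend"},
 "subsets": [{"addresses": [
     {"ip": "10.1.45.4", "nodeName": "why-03",
      "targetRef": {"kind": "Pod", "name": "frontend-sk1wm"}},
     {"ip": "10.1.93.4", "nodeName": "why-02",
      "targetRef": {"kind": "Pod", "name": "frontend-0rzd1"}},
     {"ip": "10.1.93.5", "nodeName": "why-02",
      "targetRef": {"kind": "Pod", "name": "frontend-l8bpq"}}],
   "ports": [{"port": 80, "protocol": "TCP"}]}]}
"""


def pods_by_node(raw):
    """Map node name -> list of (pod name, pod IP) behind the service."""
    grouped = defaultdict(list)
    for subset in json.loads(raw)["subsets"]:
        for address in subset["addresses"]:
            pod_name = address["targetRef"]["name"]
            grouped[address["nodeName"]].append((pod_name, address["ip"]))
    return dict(grouped)


for node, pods in sorted(pods_by_node(ENDPOINTS_RECORD).items()):
    print(node, pods)
```

Each node hands out pod IPs from its own /24: 10.1.93.x on why-02 and 10.1.45.x on why-03.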

minions

[root@why-01 ~]# etcdctl ls /registry/minions

/registry/minions/why-02

/registry/minions/why-03

[root@why-01 ~]# etcdctl ls /registry/minions/why-02

/registry/minions/why-02

[root@why-01 ~]# etcdctl get /registry/minions/why-02

{"kind":"Node","apiVersion":"v1","metadata":{"name":"why-02","selfLink":"/api/v1/nodes/why-02","uid":"1debd0dd-b3d2-11e7-9884-5254006db97b","creationTimestamp":"2017-10-18T07:01:08Z","labels":{"beta.kubernetes.io/arch":"amd64","beta.kubernetes.io/os":"linux","kubernetes.io/hostname":"why-02"},"annotations":{"volumes.kubernetes.io/controller-managed-attach-detach":"true"}},"spec":{"externalID":"why-02"},"status":{"capacity":{"alpha.kubernetes.io/nvidia-gpu":"0","cpu":"1","memory":"1016516Ki","pods":"110"},"allocatable":{"alpha.kubernetes.io/nvidia-gpu":"0","cpu":"1","memory":"1016516Ki","pods":"110"},"conditions":[{"type":"OutOfDisk","status":"False","lastHeartbeatTime":"2017-10-20T11:04:26Z","lastTransitionTime":"2017-10-19T11:22:34Z","reason":"KubeletHasSufficientDisk","message":"kubelet has sufficient disk space available"},{"type":"MemoryPressure","status":"False","lastHeartbeatTime":"2017-10-20T11:04:26Z","lastTransitionTime":"2017-10-18T07:01:08Z","reason":"KubeletHasSufficientMemory","message":"kubelet has sufficient memory available"},{"type":"DiskPressure","status":"False","lastHeartbeatTime":"2017-10-20T11:04:26Z","lastTransitionTime":"2017-10-18T07:01:08Z","reason":"KubeletHasNoDiskPressure","message":"kubelet has no disk pressure"},{"type":"Ready","status":"True","lastHeartbeatTime":"2017-10-20T11:04:26Z","lastTransitionTime":"2017-10-19T11:22:34Z","reason":"KubeletReady","message":"kubelet is posting ready status"}],"addresses":[{"type":"LegacyHostIP","address":"10.181.13.57"},{"type":"InternalIP","address":"10.181.13.57"},{"type":"Hostname","address":"why-02"}],"daemonEndpoints":{"kubeletEndpoint":{"Port":10250}},"nodeInfo":{"machineID":"f9d400c5e1e8c3a8209e990d887d4ac1","systemUUID":"F2624563-5BE4-45D5-8AE4-AD1148FDE199","bootID":"798f26bd-d7d3-4550-a6f3-1907c826aafe","kernelVersion":"3.10.0-514.26.2.el7.x86_64","osImage":"CentOS Linux 7 (Core)","containerRuntimeVersion":"docker://1.12.6","kubeletVersion":"v1.5.2","kubeProxyVersion":"v1.5.2","operatingSystem":"linux","architecture":"amd64"},"images":[{"names":["docker.io/kubeguide/guestbook-php-frontend@sha256:195181e0263bcee4ae0c3e79352bbd3487224c0042f1b9ca8543b788962188ce","docker.io/kubeguide/guestbook-php-frontend:latest"],"sizeBytes":510011333},{"names":["docker.io/kubeguide/redis-master@sha256:e11eae36476b02a195693689f88a325b30540f5c15adbf531caaecceb65f5b4d","docker.io/kubeguide/redis-master:latest"],"sizeBytes":419112448},{"names":["docker.io/kubeguide/guestbook-redis-slave@sha256:a36fec97659fe96b5b28750d88b5cfb84a45138bcf1397c8e237031b8855c58c","docker.io/kubeguide/guestbook-redis-slave:latest"],"sizeBytes":109461757},{"names":["docker.io/nginx@sha256:004ac1d5e791e705f12a17c80d7bb1e8f7f01aa7dca7deee6e65a03465392072","docker.io/nginx:latest"],"sizeBytes":108275923},{"names":["gcr.io/google_containers/pause-amd64:3.0"],"sizeBytes":746888}]}}
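The node record is where the Ready / NotReady state of a node comes from: it is the entry of type Ready in status.conditions, refreshed by the kubelet's heartbeat. A Python sketch that reads it is shown below, using only two of the conditions from the record above; `node_ready` is a made-up helper name.

```python
import json

# Two of the conditions from /registry/minions/why-02, other fields dropped.
NODE_RECORD = """
{"kind": "Node", "metadata": {"name": "why-02"},
 "status": {"conditions": [
     {"type": "OutOfDisk", "status": "False",
      "reason": "KubeletHasSufficientDisk"},
     {"type": "Ready", "status": "True",
      "reason": "KubeletReady",
      "message": "kubelet is posting ready status"}]}}
"""


def node_ready(node):
    """Return True only if the node has a Ready condition set to 'True'."""
    for condition in node["status"]["conditions"]:
        if condition["type"] == "Ready":
            # The status is the string "True", not a JSON boolean.
            return condition["status"] == "True"
    return False


node = json.loads(NODE_RECORD)
print(node["metadata"]["name"], "ready:", node_ready(node))
```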

controllers

[root@why-01 ~]# etcdctl ls /registry/controllers

/registry/controllers/default

[root@why-01 ~]# etcdctl ls /registry/controllers/default

/registry/controllers/default/frontend

/registry/controllers/default/redis-master

/registry/controllers/default/redis-slave

[root@why-01 ~]# etcdctl ls /registry/controllers/default/redis-slave

/registry/controllers/default/redis-slave

[root@why-01 ~]# etcdctl get /registry/controllers/default/redis-slave

{"kind":"ReplicationController","apiVersion":"v1","metadata":{"name":"redis-slave","namespace":"default","selfLink":"/api/v1/namespaces/default/replicationcontrollers/redis-slave","uid":"26044c0d-b424-11e7-b8d0-5254006db97b","generation":1,"creationTimestamp":"2017-10-18T16:48:20Z","labels":{"name":"redis-slave"}},"spec":{"replicas":2,"selector":{"name":"redis-slave"},"template":{"metadata":{"creationTimestamp":null,"labels":{"name":"redis-slave"}},"spec":{"containers":[{"name":"slave","image":"kubeguide/guestbook-redis-slave","ports":[{"containerPort":6379,"protocol":"TCP"}],"env":[{"name":"GET_HOSTS_FROM","value":"env"}],"resources":{},"terminationMessagePath":"/dev/termination-log","imagePullPolicy":"Always"}],"restartPolicy":"Always","terminationGracePeriodSeconds":30,"dnsPolicy":"ClusterFirst","securityContext":{}}}},"status":{"replicas":2,"fullyLabeledReplicas":2,"readyReplicas":2,"availableReplicas":2,"observedGeneration":1}}

[root@why-01 ~]# etcdctl get /registry/controllers/default/frontend

{"kind":"ReplicationController","apiVersion":"v1","metadata":{"name":"frontend","namespace":"default","selfLink":"/api/v1/namespaces/default/replicationcontrollers/frontend","uid":"2fb7837c-b424-11e7-b8d0-5254006db97b","generation":1,"creationTimestamp":"2017-10-18T16:48:37Z","labels":{"name":"frontend"}},"spec":{"replicas":3,"selector":{"name":"frontend"},"template":{"metadata":{"creationTimestamp":null,"labels":{"name":"frontend"}},"spec":{"containers":[{"name":"frontend","image":"kubeguide/guestbook-php-frontend","ports":[{"containerPort":80,"protocol":"TCP"}],"env":[{"name":"GET_HOSTS_FROM","value":"env"}],"resources":{},"terminationMessagePath":"/dev/termination-log","imagePullPolicy":"Always"}],"restartPolicy":"Always","terminationGracePeriodSeconds":30,"dnsPolicy":"ClusterFirst","securityContext":{}}}},"status":{"replicas":3,"fullyLabeledReplicas":3,"readyReplicas":3,"availableReplicas":3,"observedGeneration":1}}
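The ReplicationController records hold both the desired state (spec.replicas) and the observed state (status). The difference between the two is what drives pod creation. A Python sketch of that comparison follows, using only the relevant fields of the two records above. `replica_gap` is a name made up for the example, and the last call uses a hypothetical status that does not come from this cluster.

```python
import json

# Relevant fields of the two controller records shown above.
FRONTEND_RC = '{"spec": {"replicas": 3}, "status": {"replicas": 3, "readyReplicas": 3}}'
REDIS_SLAVE_RC = '{"spec": {"replicas": 2}, "status": {"replicas": 2, "readyReplicas": 2}}'


def replica_gap(raw):
    """Desired replicas minus ready replicas (0 means nothing to do)."""
    rc = json.loads(raw)
    desired = rc["spec"]["replicas"]
    ready = rc["status"].get("readyReplicas", 0)
    return desired - ready


print(replica_gap(FRONTEND_RC))
print(replica_gap(REDIS_SLAVE_RC))

# Hypothetical status after one frontend pod has died.
DEGRADED_RC = '{"spec": {"replicas": 3}, "status": {"replicas": 2, "readyReplicas": 2}}'
print(replica_gap(DEGRADED_RC))
```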

namespaces

[root@why-01 ~]# etcdctl ls /registry/namespaces

/registry/namespaces/default

/registry/namespaces/kube-system

[root@why-01 ~]# etcdctl ls /registry/namespaces/default

/registry/namespaces/default

[root@why-01 ~]# etcdctl get /registry/namespaces/default

{"kind":"Namespace","apiVersion":"v1","metadata":{"name":"default","uid":"0484ae2d-b3bb-11e7-9e1c-5254006db97b","creationTimestamp":"2017-10-18T04:15:47Z"},"spec":{"finalizers":["kubernetes"]},"status":{"phase":"Active"}}
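The key layout seen in the listings above can be summarized in a few lines of Python. This is a sketch of what this v1.5.2 cluster shows through the etcd v2 API, not a documented contract; the function and table names are made up, and other Kubernetes versions may lay out their keys differently.

```python
# Key prefixes for namespaced objects, as observed with etcdctl ls.
NAMESPACED_PREFIXES = {
    "pod": "/registry/pods",
    "service": "/registry/services/specs",
    "endpoints": "/registry/services/endpoints",
    "replicationcontroller": "/registry/controllers",
}
# Objects that live directly under their prefix, without a namespace.
CLUSTER_PREFIXES = {
    "node": "/registry/minions",
    "namespace": "/registry/namespaces",
}


def etcd_key(kind, name, namespace="default"):
    """Build the etcd key under which an object of this kind is stored."""
    if kind in CLUSTER_PREFIXES:
        return "{}/{}".format(CLUSTER_PREFIXES[kind], name)
    return "{}/{}/{}".format(NAMESPACED_PREFIXES[kind], namespace, name)


print(etcd_key("pod", "frontend-0rzd1"))
print(etcd_key("service", "frontend"))
print(etcd_key("node", "why-02"))
```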

Common problems in cluster operations

I have not used this in production, so these are kept here as notes.

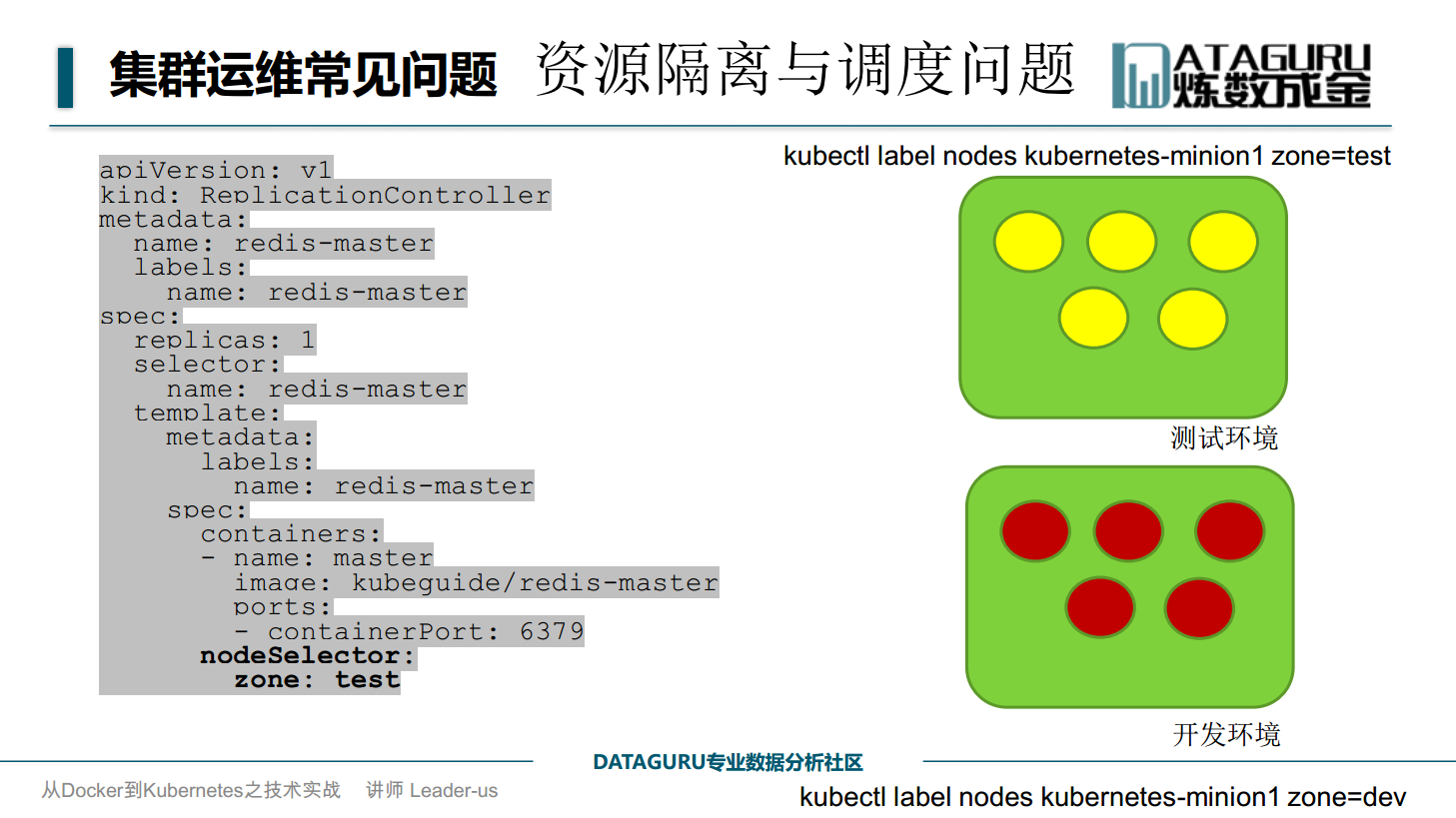

Resource isolation and scheduling

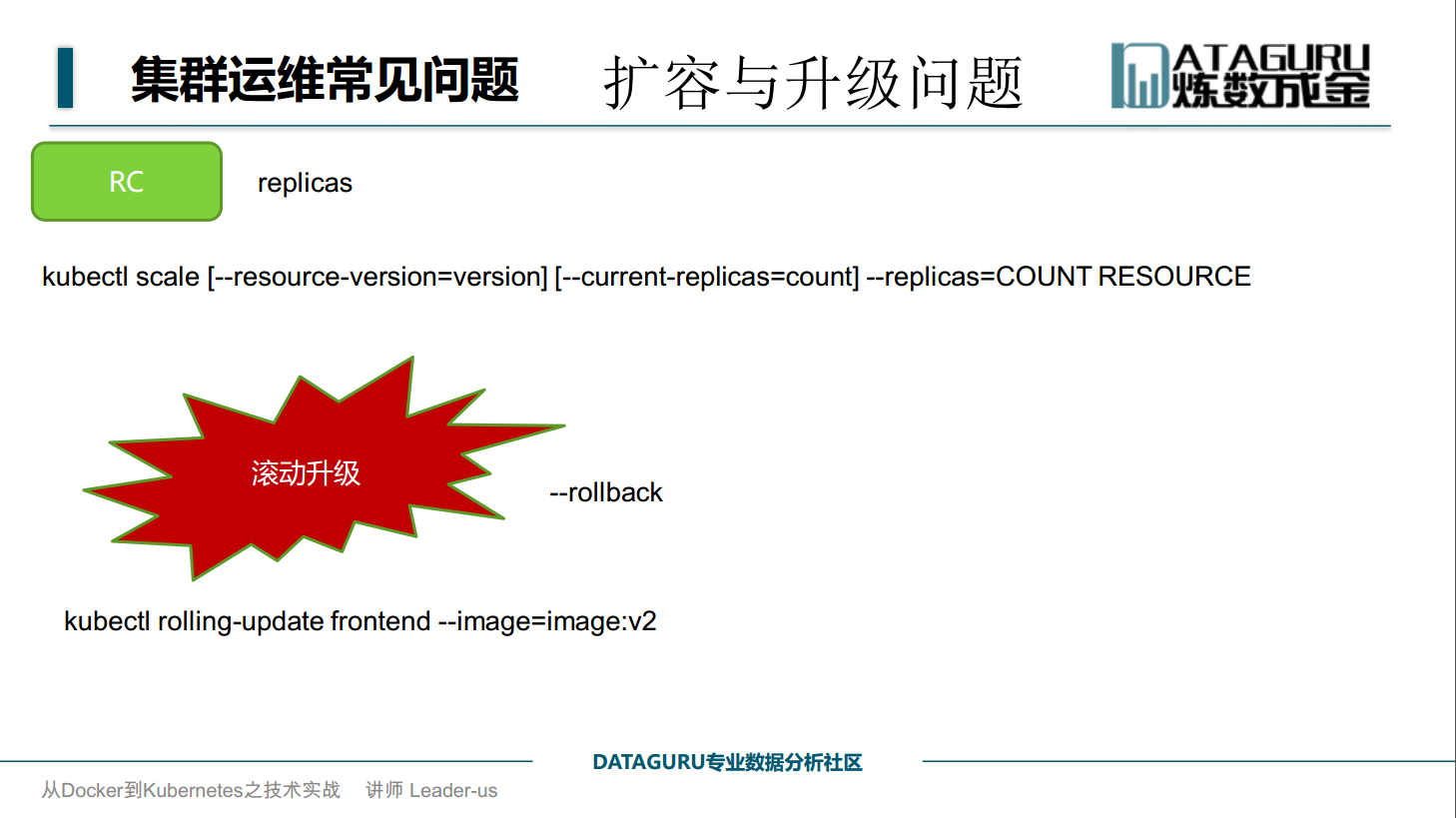

Scaling and upgrades

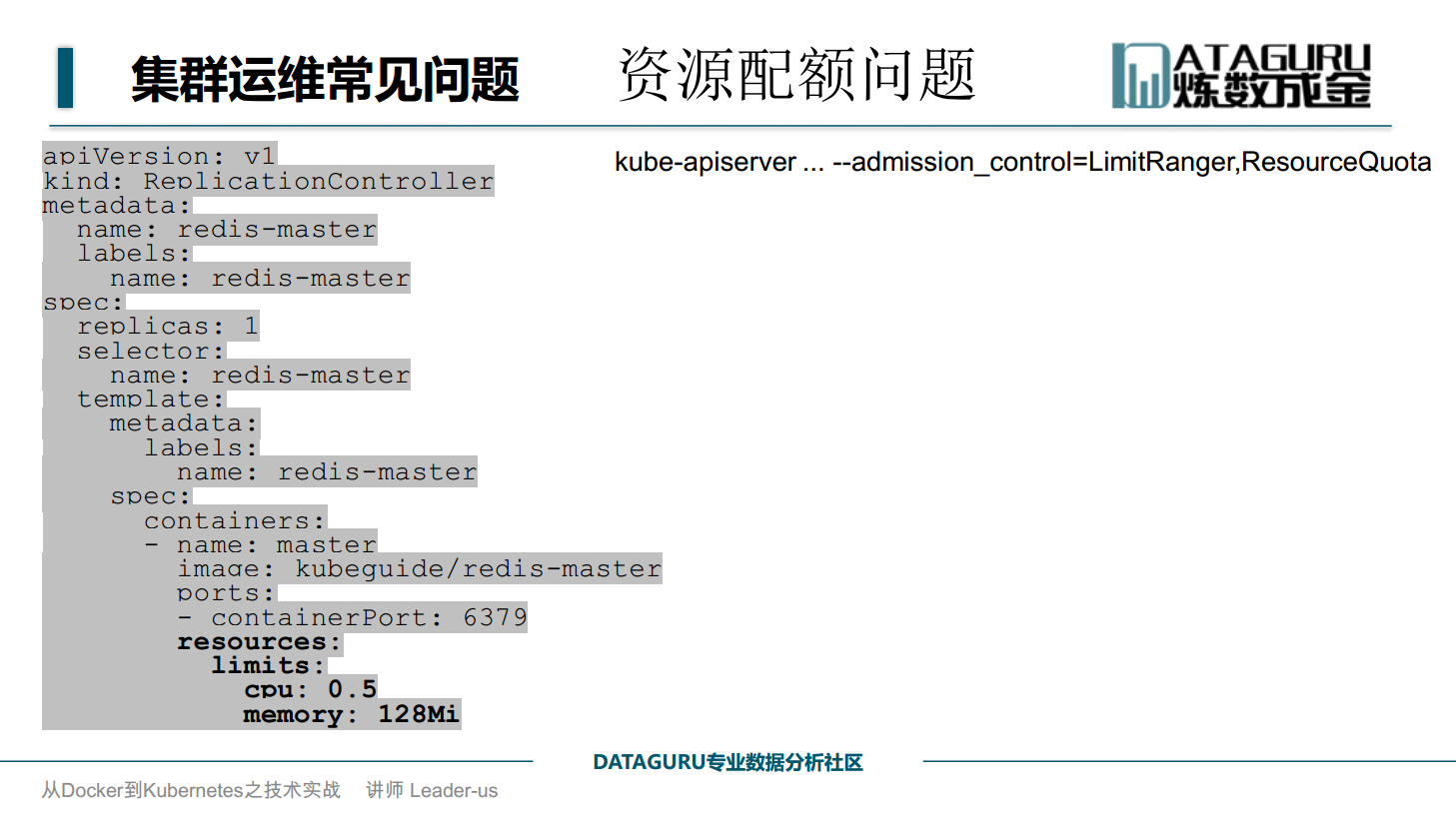

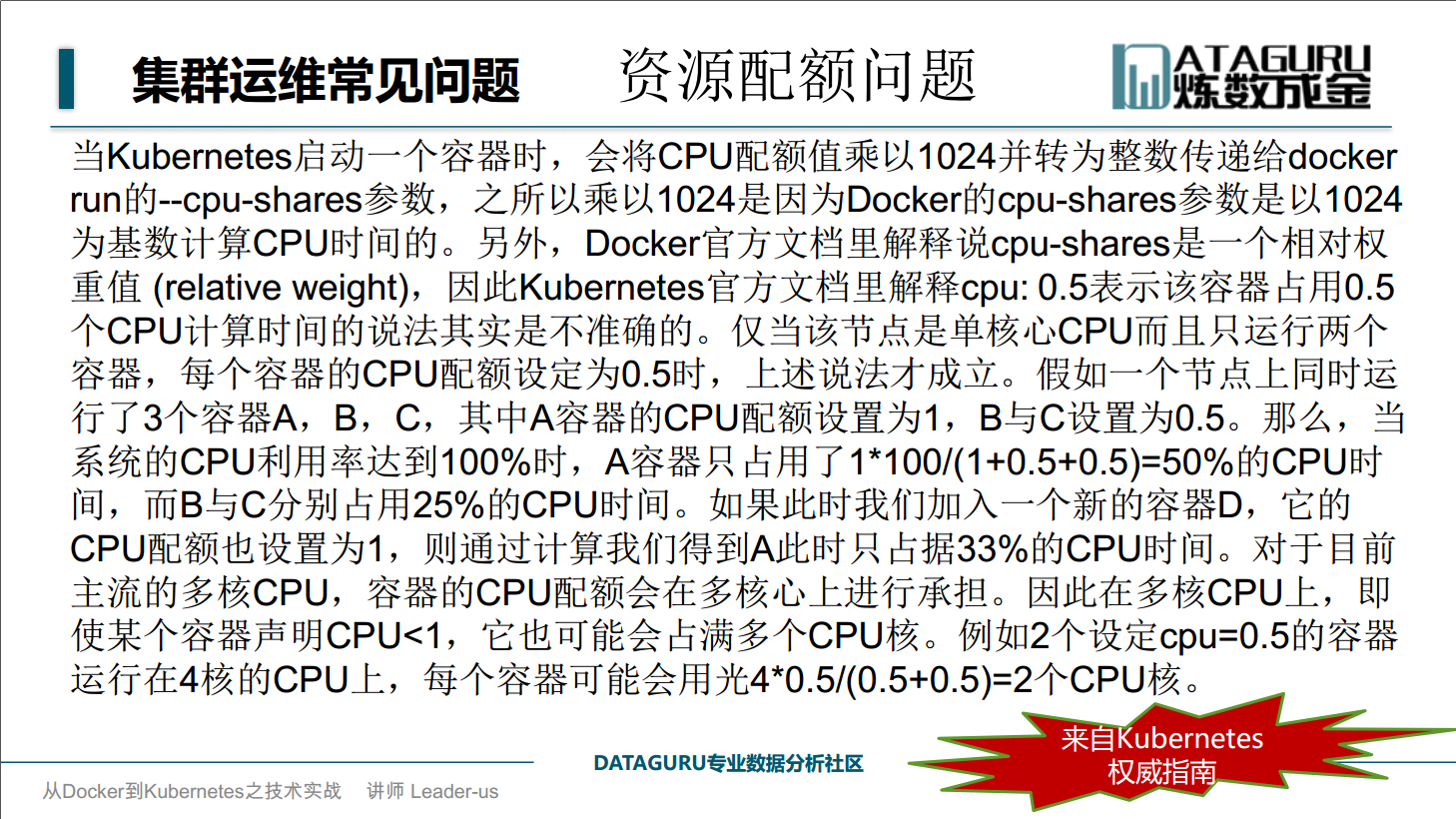

Resource quotas

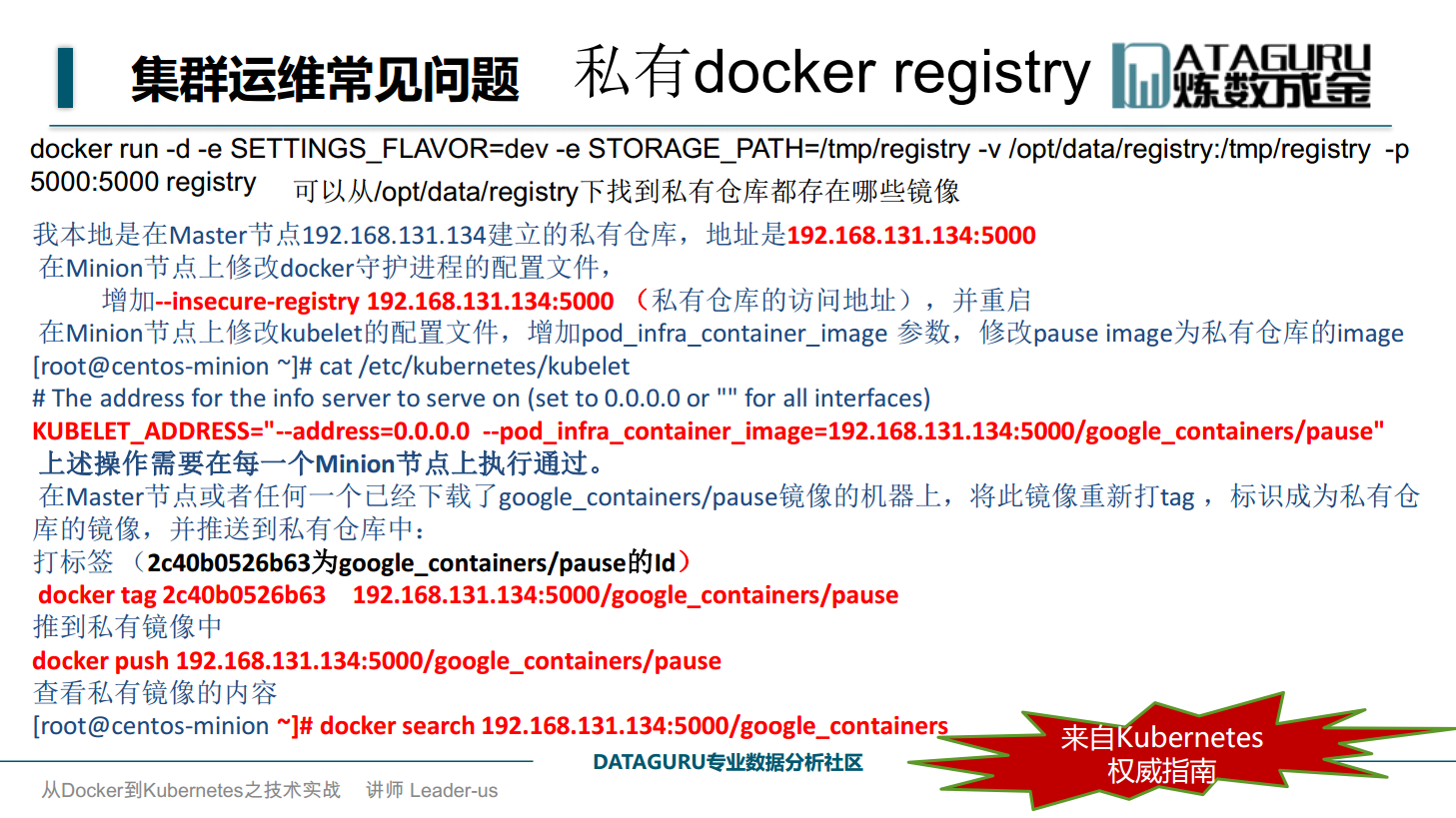

Private docker registry