<Service>HAproxy

Contents:

HAproxy

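HAproxy is an open-source, high-performance, high-availability load-balancing proxy for TCP and HTTP. It supports active/standby hot backup, high availability, load balancing, virtual hosts, TCP- and HTTP-based application proxying, and a web-based status page. Its configuration is simple and it is easy to maintain. It checks the health of the backend server nodes well: a failed node is removed from rotation and added back once it is healthy again.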

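Compared with LVS and nginx, HAproxy is better suited to high-load, high-traffic services that also need session persistence or layer-7 proxying. HAproxy has no web-server functionality of its own. LVS implements session persistence with the -p (persistent) option of ipvsadm, and nginx may need ip_hash or a third-party module; both approaches can lead to uneven load. HAproxy can instead implement persistence by adding the server identity to the session cookie. In LVS DR mode the real servers are best given public IPs, whereas HAproxy's proxy mode is similar to LVS NAT mode, so the backend nodes only need private IPs.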

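HAproxy has frontend and backend sections: a frontend matches rules (ACLs) against the content of the HTTP request headers and then routes the request to the appropriate backend.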

HAproxy official site: http://www.haproxy.org

Installing HAproxy

[root@why-2 ~]# wget http://www.haproxy.org/download/1.4/src/haproxy-1.4.24.tar.gz

[root@why-2 ~]# tar xf haproxy-1.4.24.tar.gz

[root@why-2 ~]# uname -a

Linux why-2 2.6.32-431.el6.x86_64 #1 SMP Sun Nov 10 22:19:54 EST 2013 x86_64 x86_64 x86_64 GNU/Linux

[root@why-2 ~]# cd haproxy-1.4.24

[root@why-2 haproxy-1.4.24]# make TARGET=linux2628 ARCH=x86_64 #adjust the build options according to the README; TARGET=linux2628 applies to kernels 2.6.28 and later

[root@why-2 haproxy-1.4.24]# make PREFIX=/usr/local/haproxy-1.4.24 install
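The TARGET choice in the make command can be scripted from the running kernel. This is a minimal bash sketch, assuming the rule from the comment above (linux2628 for kernels 2.6.28 and later) and assuming that linux26 is the fallback for older 2.6 kernels in the 1.4 Makefile; check the README of your version. `haproxy_target` is a hypothetical helper, not part of haproxy.

```bash
#!/bin/bash
# Hypothetical helper: choose the haproxy 1.4 build TARGET from a kernel version.
# Assumption: linux2628 for kernels >= 2.6.28, linux26 for older 2.6 kernels.
haproxy_target() {
  local ver=${1:-$(uname -r)} base major minor patch rest
  base=${ver%%-*}                       # "2.6.32-431.el6.x86_64" -> "2.6.32"
  IFS=. read -r major minor patch rest <<< "$base"
  major=${major:-0}; minor=${minor:-0}; patch=${patch:-0}
  if (( major > 2 || (major == 2 && minor > 6) || (major == 2 && minor == 6 && patch >= 28) )); then
    echo linux2628
  else
    echo linux26
  fi
}
```

Usage: `make TARGET=$(haproxy_target) ARCH=x86_64`. On the 2.6.32 kernel shown above this selects linux2628.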

Enable kernel IP forwarding

haproxy does not depend on kernel forwarding, but it is still best to enable it

[root@why-2 haproxy-1.4.24]# vi /etc/sysctl.conf

net.ipv4.ip_forward = 1

[root@why-2 haproxy-1.4.24]# sysctl -p

net.ipv4.ip_forward = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296
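Editing /etc/sysctl.conf by hand (or appending with `echo >>`) can leave duplicate keys when the step is repeated. A minimal bash sketch of an idempotent edit, assuming a plain `key = value` file; `ensure_sysctl` is a hypothetical helper, not a system command.

```bash
#!/bin/bash
# Hypothetical helper: make sure "KEY = VALUE" is set exactly once in a sysctl file.
# Usage: ensure_sysctl KEY VALUE [FILE]   (FILE defaults to /etc/sysctl.conf)
ensure_sysctl() {
  local key=$1 value=$2 file=${3:-/etc/sysctl.conf} tmp
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    { line = $0; sub(/^[ \t]+/, "", line) }            # ignore leading blanks
    index(line, k) == 1 && substr(line, length(k) + 1) ~ /^[ \t]*=/ {
      if (!done) print k " = " v                     # rewrite the first occurrence
      done = 1
      next                                           # drop later duplicates
    }
    { print }
    END { if (!done) print k " = " v }               # key missing: append it
  ' "$file" > "$tmp" && cat "$tmp" > "$file"          # cat keeps the file owner and mode
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

Usage: `ensure_sysctl net.ipv4.ip_forward 1 && sysctl -p`. The same call works for `net.ipv4.ip_nonlocal_bind`, which the heartbeat setup later in this post needs on both nodes.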

Configuring HAproxy

[root@why-2 haproxy-1.4.24]# ln -s /usr/local/haproxy-1.4.24 /usr/local/haproxy

[root@why-2 haproxy-1.4.24]# tree !$

tree /usr/local/haproxy

/usr/local/haproxy

|-- doc

| `-- haproxy

| |-- architecture.txt

| |-- configuration.txt

| |-- haproxy-en.txt

| `-- haproxy-fr.txt

|-- sbin

| `-- haproxy

`-- share

`-- man

`-- man1

`-- haproxy.1

6 directories, 6 files

[root@why-2 haproxy-1.4.24]# cd /usr/local/haproxy

[root@why-2 haproxy]# mkdir -p bin conf logs var/run var/chroot

[root@why-2 haproxy]# cd conf/

[root@why-2 conf]# vi haproxy.conf

global #global section: process-wide and OS-level settings applied when HAproxy starts

chroot /usr/local/haproxy/var/chroot #chroot

daemon #run as a daemon

group haproxy

user haproxy

log 127.0.0.1:514 local0 warning #global logging: send logs to the local syslog on UDP port 514, facility local0, level warning

pidfile /usr/local/haproxy/var/run/haproxy.pid #path of the PID file

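maxconn 20000 #maximum number of concurrent connections per haproxy process; since every proxied connection has a client side and a server side, the number of TCP sessions is twice this value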

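spread-checks 3 #add up to +/-3% random jitter to the health-check intervals so that checks are not all sent at the same moment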

nbproc 8 #number of haproxy processes to start; usually matched to the number of CPU cores, but preferably fewer

defaults #default parameters, used by frontend, backend and listen sections that do not set them

log global

mode http #proxy mode: http is layer 7, tcp is layer 4, health only answers health checks

retries 3 #number of times to retry a failed connection to a backend server; once exhausted, the connection attempt is considered failed

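option redispatch #with cookie persistence the server ID is stored in a cookie to keep the session on one server; if that server goes down, this forces the request to be redispatched to another server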

contimeout 5000 #maximum time to wait for a connection to a backend server to succeed, in milliseconds

clitimeout 50000 #maximum inactivity time on the client side (waiting for the client to send data), in milliseconds

srvtimeout 50000 #maximum inactivity time on the server side (waiting for the backend to respond), in milliseconds

listen why

bind *:80 #address (VIP) and port to listen on

# mode http

mode tcp

# stats enable #web page showing HAproxy status information

# stats uri /admin?stats

# stats auth proxy:why123456

balance roundrobin #load-balancing algorithm

# option httpclose

# option forwardfor #pass the client IP to the backend (X-Forwarded-For header)

# option httpchk HEAD /check.html HTTP/1.0 #HTTP health check

server www01 192.168.0.201:22 check port 22 inter 5000 fall 5 #real backend server; checked every 5000 ms and removed after 5 consecutive failed checks

Check the configuration file

[root@why-2 conf]# ../sbin/haproxy -f ./haproxy.conf -c

Configuration file is valid

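About timeouts: the load balancer's response time plus the backend server's response time plus the database's response time must stay below the browser's timeout, or below the response time we want to guarantee. If the timeouts are set too short, 502 and 504 errors will appear; of course, the response time of the backend services should also be improved.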
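The rule above is a sum compared against a limit. A small bash sketch of that check; `within_budget` is a hypothetical helper, and the millisecond figures in the usage line are made up for illustration, not measurements from this setup.

```bash
#!/bin/bash
# Hypothetical helper: check whether the summed response times stay under a timeout.
# Usage: within_budget LIMIT_MS COMPONENT_MS...
within_budget() {
  local limit=$1 total=0 t
  shift
  for t in "$@"; do
    total=$((total + t))      # e.g. load balancer + web server + database, in ms
  done
  echo "total=${total}ms limit=${limit}ms"
  [ "$total" -lt "$limit" ]   # exit status 0 only if strictly below the limit
}
```

Usage: `within_budget 50000 5 300 1200 && echo ok` prints `total=1505ms limit=50000ms` followed by `ok`; a non-zero status means either the timeout or the backend needs attention.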

Add the haproxy user

[root@why-2 conf]# id haproxy

id: haproxy: No such user

[root@why-2 conf]# useradd haproxy -s /sbin/nologin

[root@why-2 conf]# id haproxy

uid=505(haproxy) gid=505(haproxy) groups=505(haproxy)

Start the service and verify

[root@why-2 conf]# ../sbin/haproxy -f ./haproxy.conf -D

[root@why-2 conf]# ssh -p80 192.168.0.202

The authenticity of host '[192.168.0.202]:80 ([192.168.0.202]:80)' can't be established.

RSA key fingerprint is 8e:c6:93:52:57:44:3f:d6:3a:2e:95:69:56:70:bf:96.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added '[192.168.0.202]:80' (RSA) to the list of known hosts.

root@192.168.0.202's password:

Last login: Mon Mar 6 01:34:33 2017 from 192.168.0.101

[root@why-1 ~]# ip addr | grep 192.168.0

inet 192.168.0.201/24 brd 192.168.0.255 scope global eth0

[root@why-1 ~]# Connection to 192.168.0.202 closed by remote host.

Connection to 192.168.0.202 closed.

As shown, the SSH connection to port 80 on 192.168.0.202 was forwarded to port 22 on 192.168.0.201, and we logged in to host 192.168.0.201 successfully.

If you see

[root@why-2 conf]# ../sbin/haproxy -f ./haproxy.conf -D

[ALERT] 069/192915 (16939) : Starting proxy why: cannot bind socket

the port in the configuration is probably already in use.
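To confirm that the port is taken, list the listening sockets, for example with `ss -nltp` (the same tool used for port 514 later in this post). This bash sketch wraps the test in a hypothetical `port_listening` helper that reads `ss` output on stdin; it assumes the local address column looks like `*:80`, `0.0.0.0:80` or `:::80`.

```bash
#!/bin/bash
# Hypothetical helper: succeed if any field of the ss output ends in ":PORT".
# Usage: ss -nltp | port_listening 80
port_listening() {
  local port=$1
  awk -v port="$port" '
    { for (i = 1; i <= NF; i++) if ($i ~ (":" port "$")) found = 1 }
    END { exit !found }'
}
```

Usage: `ss -nltp | port_listening 80 && ss -nltp | grep ':80 '` shows which process (for example httpd) already owns port 80.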

Other command-line options

[root@why-2 ~]# /usr/local/haproxy/sbin/haproxy

HA-Proxy version 1.4.24 2013/06/17

Copyright 2000-2013 Willy Tarreau <w@1wt.eu>

Usage : haproxy [-f <cfgfile>]* [ -vdVD ] [ -n <maxconn> ] [ -N <maxpconn> ] #-f specifies the configuration file

[ -p <pidfile> ] [ -m <max megs> ]

-v displays version ; -vv shows known build options.

-d enters debug mode ; -db only disables background mode.

-V enters verbose mode (disables quiet mode)

-D goes daemon #run as a daemon

-q quiet mode : don't display messages #do not display warnings

-c check mode : only check config files and exit #check the configuration file

-n sets the maximum total # of connections (2000) #maximum concurrent connections; can also be set in the configuration file

-m limits the usable amount of memory (in MB) #memory limit

-N sets the default, per-proxy maximum # of connections (2000)

-p writes pids of all children to this file #PID file; can also be set in the configuration file

-de disables epoll() usage even when available

-ds disables speculative epoll() usage even when available

-dp disables poll() usage even when available

-dS disables splice usage (broken on old kernels)

-sf/-st [pid ]* finishes/terminates old pids. Must be last arguments. #used for reloading
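The `-c` and `-sf` options combine into a safer reload: validate the configuration first, then start new processes that tell the old ones to finish. A bash sketch, assuming the paths used in this post; `haproxy_reload` and the HAPROXY_* override variables are hypothetical. The pid file is expanded unquoted on purpose: with `nbproc 8` it holds one PID per line, and all of them must follow `-sf`.

```bash
#!/bin/bash
# Hypothetical helper: check the configuration, then reload haproxy with -sf.
# Paths default to the layout used in this post and can be overridden.
haproxy_reload() {
  local bin=${HAPROXY_BIN:-/usr/local/haproxy/sbin/haproxy}
  local conf=${HAPROXY_CONF:-/usr/local/haproxy/conf/haproxy.conf}
  local pidfile=${HAPROXY_PID:-/usr/local/haproxy/var/run/haproxy.pid}

  # Leave the running processes alone if the new configuration is invalid.
  if ! "$bin" -f "$conf" -c -q; then
    echo "configuration check failed, not reloading" >&2
    return 1
  fi

  if [ -s "$pidfile" ]; then
    # Unquoted on purpose: one PID per line, all passed after -sf (must be last).
    # shellcheck disable=SC2046
    "$bin" -f "$conf" -D -sf $(cat "$pidfile")
  else
    # No old processes recorded: plain start.
    "$bin" -f "$conf" -D
  fi
}
```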

Configure logging

[root@why-2 conf]# vi /etc/rsyslog.conf

Uncomment the following two lines

$ModLoad imudp

$UDPServerRun 514

Add

local0.* /usr/local/haproxy/logs/haproxy.log

[root@why-2 conf]# tail -1 /etc/rsyslog.conf

local0.* /usr/local/haproxy/logs/haproxy.log

[root@why-2 conf]# vi /etc/sysconfig/rsyslog

[root@why-2 conf]# tail -2 /etc/sysconfig/rsyslog

#SYSLOGD_OPTIONS="-c 5"

SYSLOGD_OPTIONS="-c 2 -m 0 -r -x" #-r enables receiving remote (network) logs, -x disables DNS resolution

[root@why-2 conf]# service rsyslog restart

Shutting down system logger: [  OK  ]

Starting system logger: [  OK  ]

[root@why-2 conf]# ss -nlput | grep 514

udp UNCONN 0 0 *:514 *:* users:(("rsyslogd",17442,3))

udp UNCONN 0 0 :::514 :::* users:(("rsyslogd",17442,4))

[root@why-2 conf]# ll /usr/local/haproxy/logs/haproxy.log

-rw------- 1 root root 0 Mar 11 21:29 /usr/local/haproxy/logs/haproxy.log

Note: SELinux must be disabled, otherwise the log cannot be written.
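The rsyslog edits above can also be applied by a script that is safe to run twice. A minimal bash sketch, assuming the stock CentOS 6 /etc/rsyslog.conf in which the two UDP lines exist but are commented out with `#`; `enable_haproxy_syslog` is a hypothetical helper. Restart rsyslog afterwards as shown above.

```bash
#!/bin/bash
# Hypothetical helper: enable UDP syslog reception and route facility local0 to a file.
# Usage: enable_haproxy_syslog [RSYSLOG_CONF] [LOG_FILE]
enable_haproxy_syslog() {
  local conf=${1:-/etc/rsyslog.conf}
  local logfile=${2:-/usr/local/haproxy/logs/haproxy.log}
  # Uncomment the two UDP input lines (no-op if they are already active).
  sed -i -e 's/^#[[:space:]]*\$ModLoad imudp/$ModLoad imudp/' \
         -e 's/^#[[:space:]]*\$UDPServerRun 514/$UDPServerRun 514/' "$conf"
  # Append the local0 rule only if no local0 rule exists yet.
  if ! grep -q '^local0\.' "$conf"; then
    printf 'local0.* %s\n' "$logfile" >> "$conf"
  fi
}
```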

Configuration

[root@why-2 conf]# cp haproxy.conf haproxy.conf.tcp

[root@why-2 conf]# vi haproxy.conf

[root@why-2 conf]# cat haproxy.conf

global

chroot /usr/local/haproxy/var/chroot

daemon

group apache

user apache

log 127.0.0.1:514 local0 warning

pidfile /usr/local/haproxy/var/run/haproxy.pid

maxconn 20000

spread-checks 3

nbproc 8

defaults

log global

mode http

retries 3

option redispatch

contimeout 5000

clitimeout 50000

srvtimeout 50000

listen why

bind *:80

mode http

stats enable

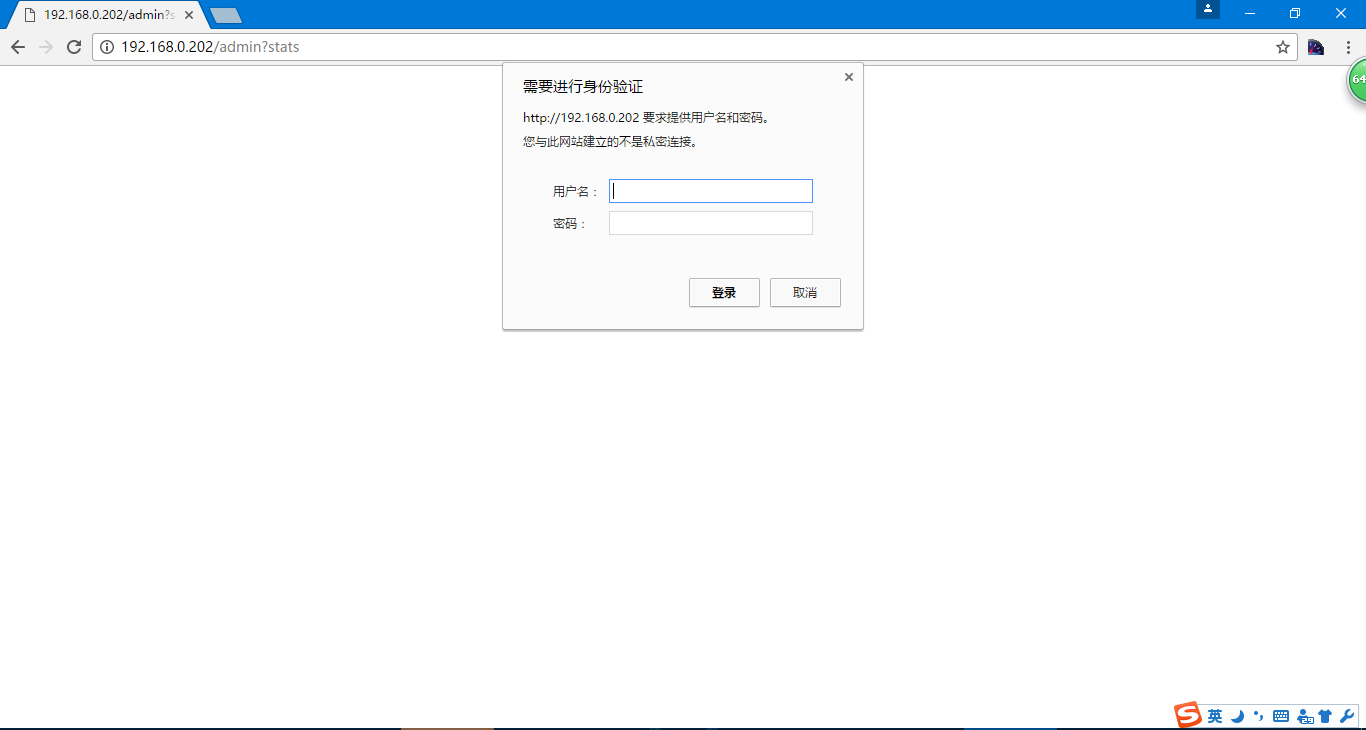

stats uri /admin?stats # the default URI is /haproxy?stats

stats auth admin:admin

balance roundrobin

option httpclose

option forwardfor

# option httpchk HEAD /check.html HTTP/1.0

server www01 192.168.0.201:80 check port 80 inter 5000 fall 5

server www02 192.168.0.203:80 check port 80 inter 5000 fall 5

[root@why-2 conf]# ../sbin/haproxy -f ./haproxy.conf -D

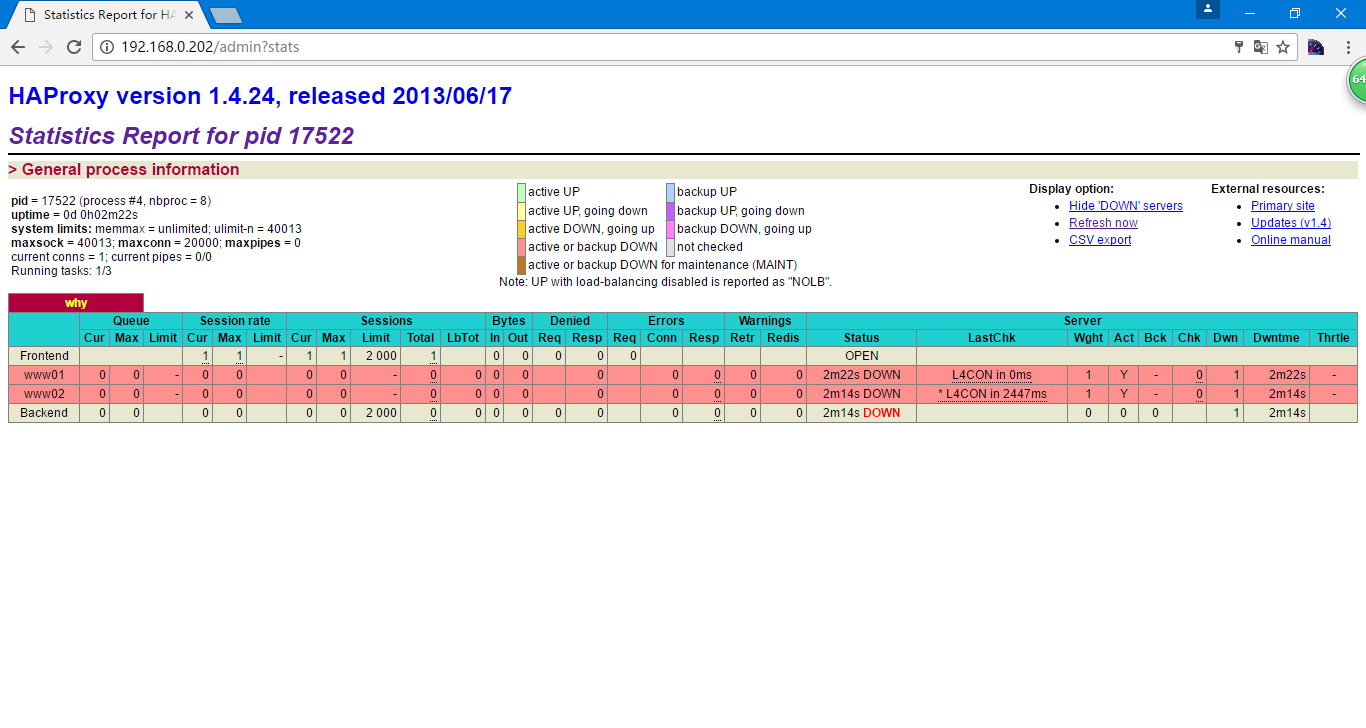

[WARNING] 069/215308 (17518) : Proxy 'why': in multi-process mode, stats will be limited to process assigned to the current request.

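A warning about multi-process mode is printed: with nbproc greater than 1, the stats page only shows the process that handled the current request.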

[root@why-2 conf]#

Message from syslogd@127.0.0.1 at Mar 11 21:53:14 ...

haproxy[17523]: proxy why has no server available!

Message from syslogd@127.0.0.1 at Mar 11 21:53:14 ...

haproxy[17521]: proxy why has no server available!

Message from syslogd@127.0.0.1 at Mar 11 21:53:14 ...

haproxy[17520]: proxy why has no server available!

Message from syslogd@127.0.0.1 at Mar 11 21:53:16 ...

haproxy[17524]: proxy why has no server available!

Message from syslogd@127.0.0.1 at Mar 11 21:53:16 ...

haproxy[17522]: proxy why has no server available!

Message from syslogd@127.0.0.1 at Mar 11 21:53:16 ...

haproxy[17525]: proxy why has no server available!

Message from syslogd@127.0.0.1 at Mar 11 21:53:16 ...

haproxy[17519]: proxy why has no server available!

Message from syslogd@127.0.0.1 at Mar 11 21:53:16 ...

haproxy[17526]: proxy why has no server available!

These alerts appear because the backend services have not been started yet.

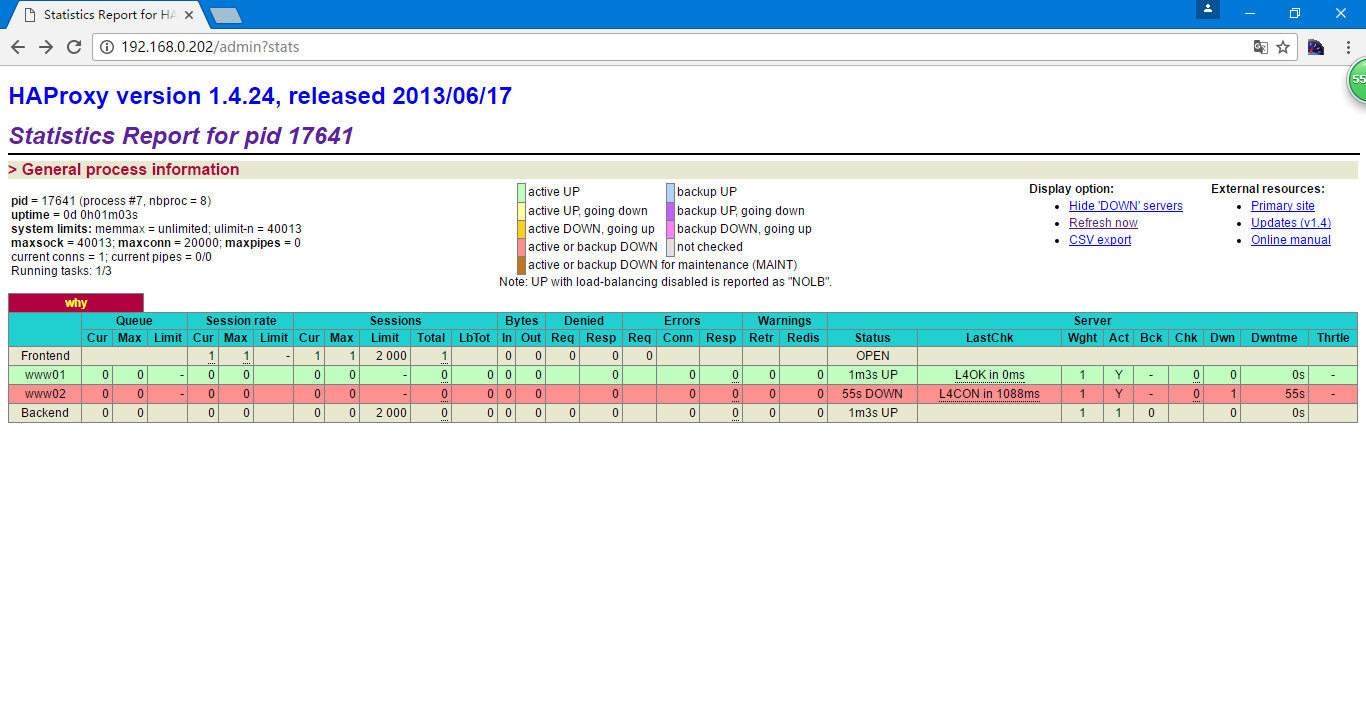

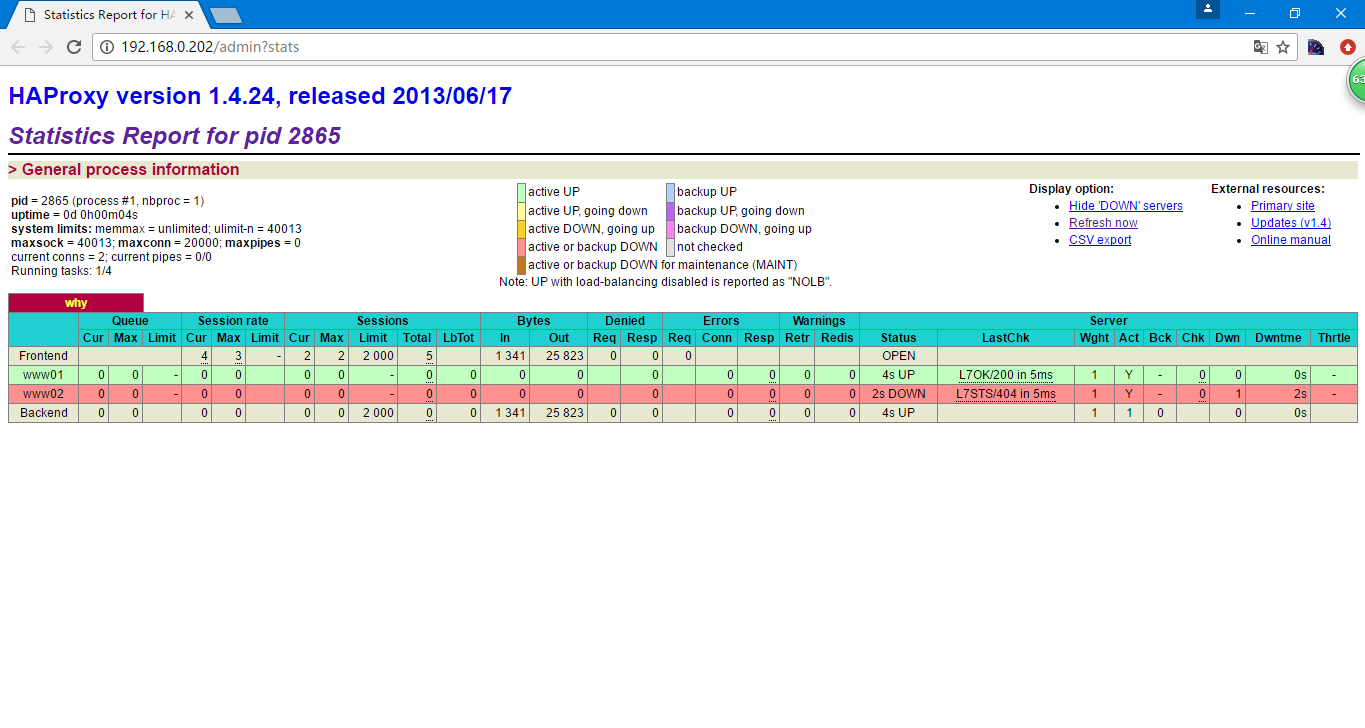

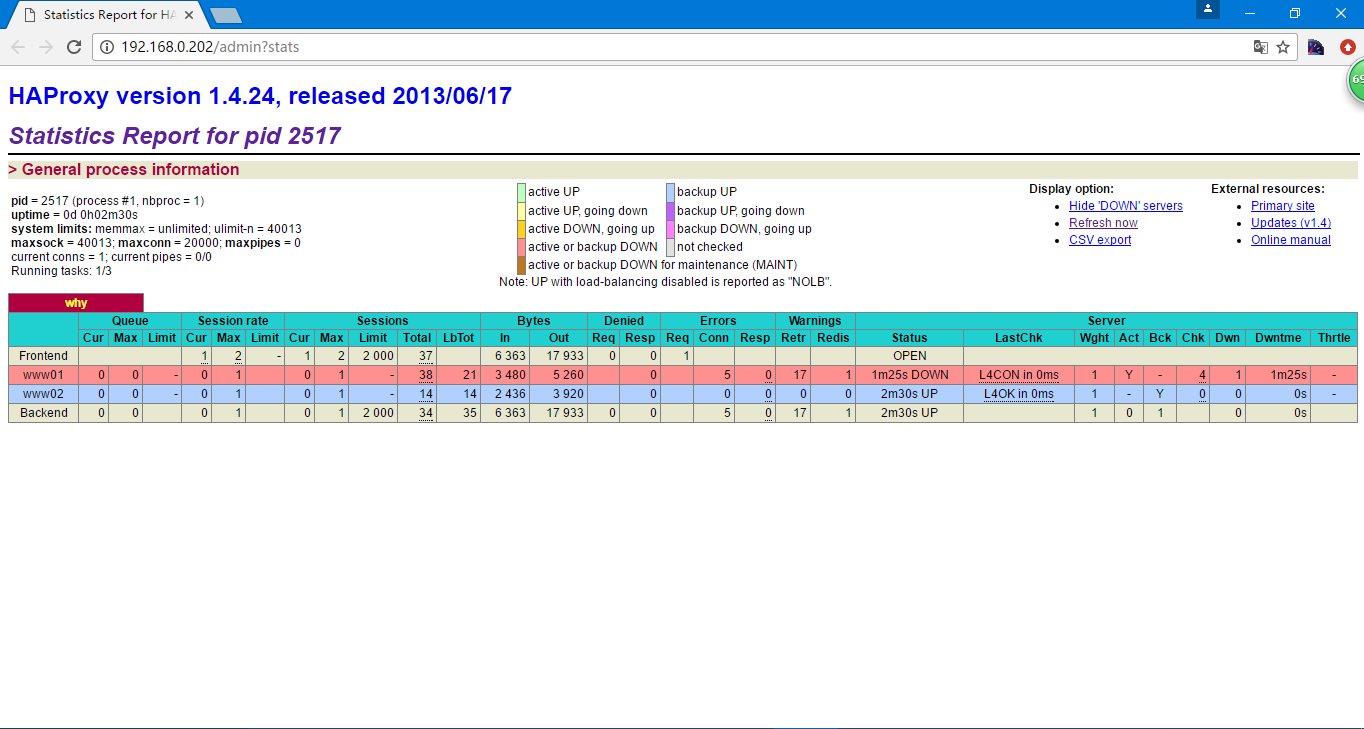

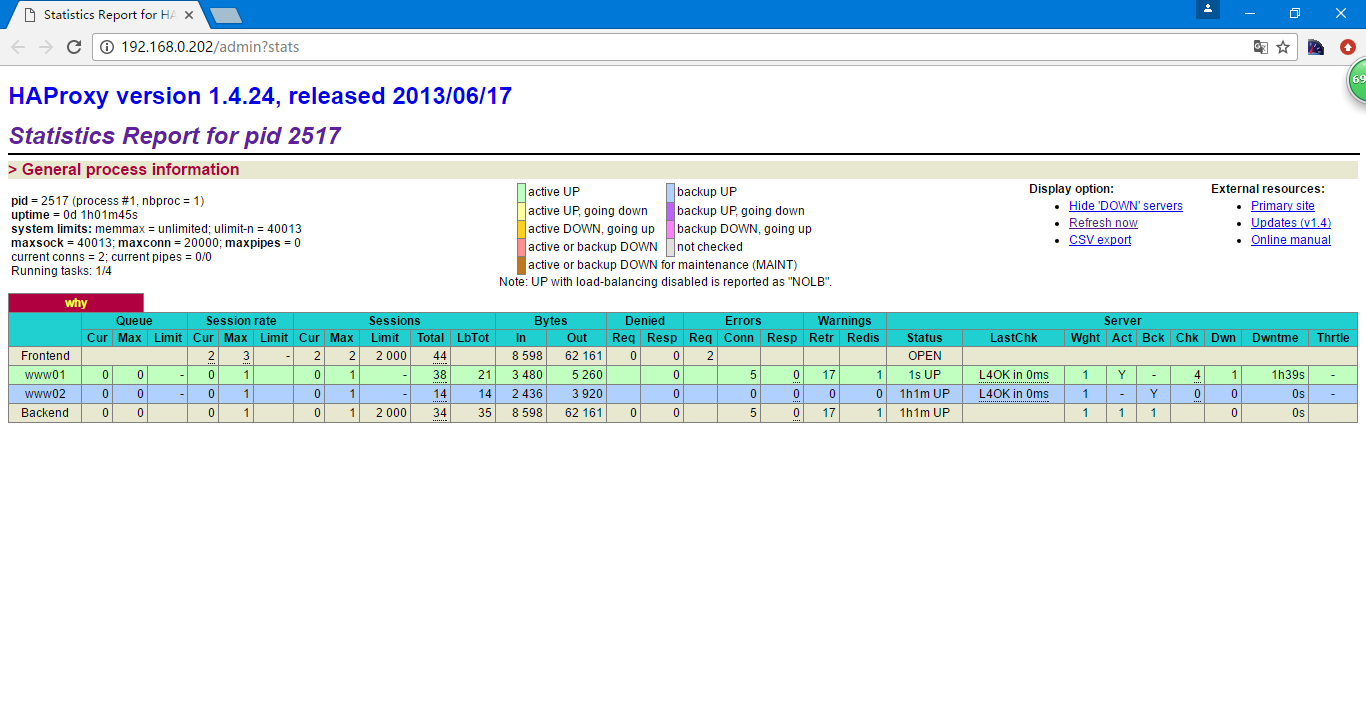

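In the stats page, red rows mean the server is down; healthy servers are shown in green. The page is mainly used to monitor sessions, bytes, failure counts, downtime and so on.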
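Besides the web page, the stats page can be fetched as CSV by appending `;csv` to the stats URI, which is convenient for scripts. A bash sketch, assuming the `stats uri /admin?stats` and `stats auth admin:admin` settings above and assuming the 18th CSV column is `status`, as in the 1.4 CSV layout; `server_status` is a hypothetical helper. With nbproc 8, each request still only reports the process that served it.

```bash
#!/bin/bash
# Hypothetical helper: print "proxy/server status" for each server row of the stats CSV.
# Usage: curl -s -u admin:admin 'http://192.168.0.202/admin?stats;csv' | server_status
server_status() {
  # Field 1 = pxname, field 2 = svname, field 18 = status (UP, DOWN, ...).
  # Skip the "# pxname,svname,..." header and the FRONTEND/BACKEND summary rows.
  awk -F, '!/^#/ && $2 != "FRONTEND" && $2 != "BACKEND" && NF >= 18 { print $1 "/" $2 " " $18 }'
}
```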

Add a home page on the web server

[root@why-1 ~]# cd /var/www/html

[root@why-1 html]# vi index.html

[root@why-1 html]# cat index.html

192.168.0.201

[root@why-1 html]# service httpd start

Starting httpd: httpd: apr_sockaddr_info_get() failed for why-1

httpd: Could not reliably determine the server's fully qualified domain name, using 127.0.0.1 for ServerName

[  OK  ]

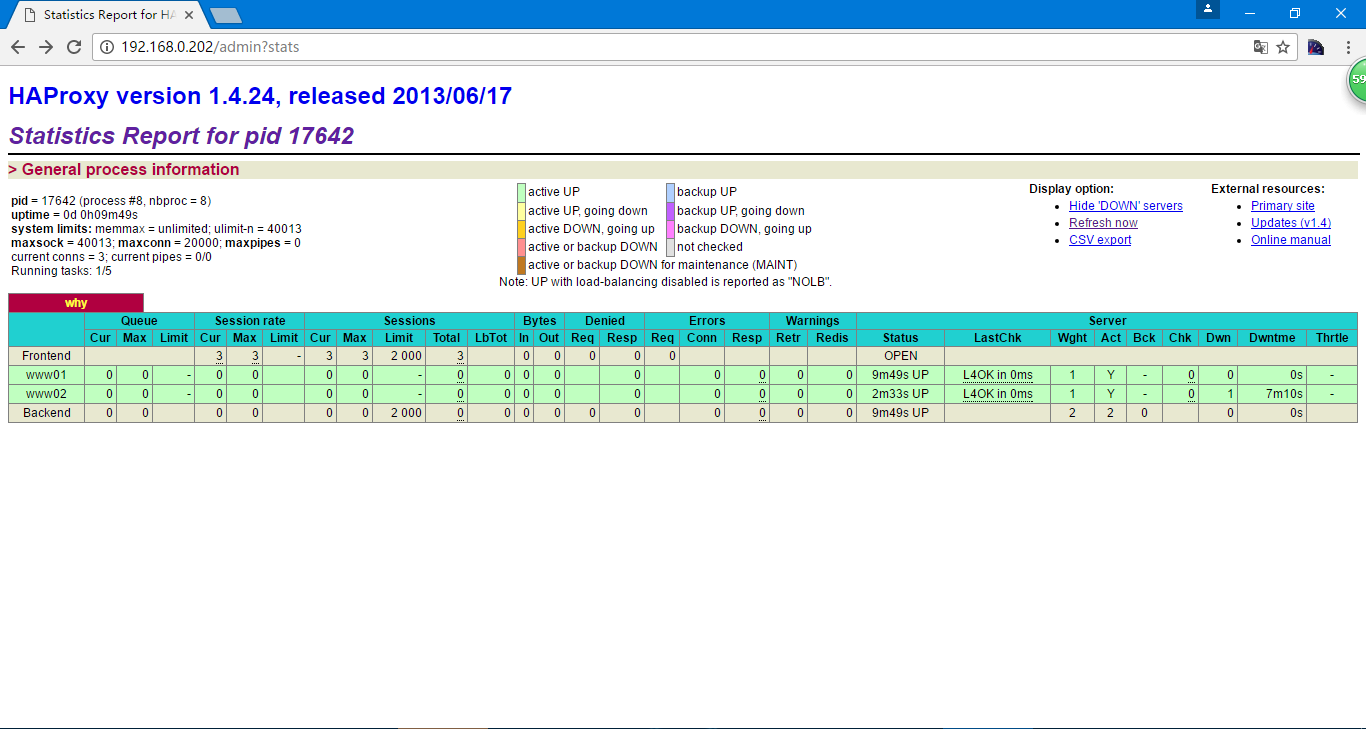

Then start the service on host 192.168.0.203 as well, and both backends show as up.

[root@why-2 ~]# for i in {1..100};do curl 192.168.0.202;sleep 1;done

192.168.0.201

192.168.0.203

192.168.0.201

192.168.0.203

192.168.0.201

192.168.0.203

192.168.0.201

192.168.0.203

192.168.0.201

192.168.0.203

192.168.0.201

192.168.0.203

192.168.0.201

192.168.0.203

192.168.0.201

192.168.0.203

192.168.0.201

192.168.0.203

192.168.0.201

192.168.0.203

192.168.0.201

192.168.0.203

^C

As you can see, requests are distributed in round-robin fashion.
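Instead of reading the responses line by line, they can be counted. A small bash sketch; `tally` is a hypothetical helper name, and the address in the usage line is the HAproxy host from this test.

```bash
#!/bin/bash
# Hypothetical helper: count identical lines from stdin, printed as "value count".
tally() {
  sort | uniq -c | awk '{ print $2, $1 }'
}
```

Usage: `for i in $(seq 20); do curl -s 192.168.0.202; done | tally`. With roundrobin and equal weights the two backends should receive roughly the same count.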

HAproxy health checks

The first method is a port check:

server www01 192.168.0.201:80 check port 80 inter 5000 fall 5

server www02 192.168.0.203:80 check port 80 inter 5000 fall 5

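check port 80 means port 80 is checked; you can also just write check, in which case the server's own port is used. inter 5000 fall 5 means a check every 5000 ms, and the node is removed after 5 consecutive failed checks. Without these settings, a check runs every 2 seconds and a node is removed after 3 failures.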

Related parameters

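- inter 2000: check interval of 2000 ms

- fall 3: after 3 consecutive failed checks the server is considered down and removed from the pool

- rise 2: after a failed server recovers, 2 consecutive successful checks mark it as up again and it rejoins the pool

- maxconn 2048: maximum number of concurrent connections sent to this server

- weight 1: weight of 1; requests are distributed in proportion to the servers' weights

- maxqueue 0: maximum number of requests queued for this server; the default 0 means unlimited

- minconn 1024: when set, the server's connection limit becomes dynamic, varying between minconn and maxconn according to the backend load (see fullconn)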
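The effect of fall and rise can be illustrated with a small simulation. This bash sketch is a simplified model of the documented semantics (down after `fall` consecutive failed checks, up again after `rise` consecutive successful ones); it is not HAproxy's internal implementation, and `simulate_checks` is a hypothetical name.

```bash
#!/bin/bash
# Hypothetical model of fall/rise: print the server state after each check.
# Usage: simulate_checks FALL RISE RESULT...   (RESULT: 1 = check OK, 0 = check failed)
simulate_checks() {
  local fall=$1 rise=$2 state=UP fails=0 oks=0 r
  local -a out=()
  shift 2
  for r in "$@"; do
    if [ "$r" = 1 ]; then
      fails=0                                   # a success breaks a failure streak
      if [ "$state" = DOWN ]; then
        oks=$((oks + 1))
        if [ "$oks" -ge "$rise" ]; then state=UP; oks=0; fi
      fi
    else
      oks=0                                     # a failure breaks a success streak
      if [ "$state" = UP ]; then
        fails=$((fails + 1))
        if [ "$fails" -ge "$fall" ]; then state=DOWN; fails=0; fi
      fi
    fi
    out+=("$state")
  done
  echo "${out[*]}"
}
```

Usage: with the defaults (fall 3, rise 2), `simulate_checks 3 2 0 0 0 1 1` prints `UP UP DOWN DOWN UP`: the third failure removes the server and the second success brings it back.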

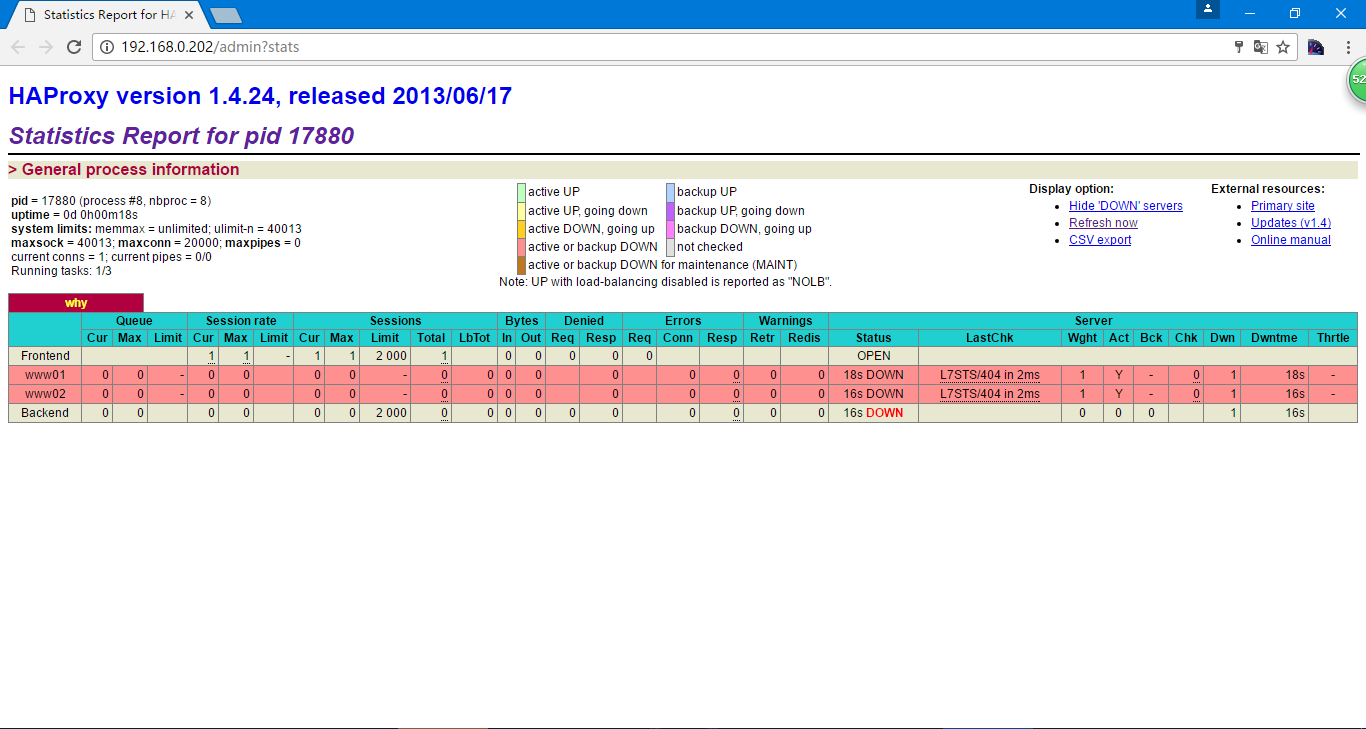

The second method is an HTTP check:

option httpchk HEAD /check.html HTTP/1.1

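This checks by requesting a file such as an HTML page. Note that HTTP/1.1 requires a Host header, so without one use HTTP/1.0, as in the later examples.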

[root@why-2 conf]# ../sbin/haproxy -f ./haproxy.conf -D

[WARNING] 069/232050 (17881) : Proxy 'why': in multi-process mode, stats will be limited to process assigned to the current request.

[root@why-2 conf]#

Message from syslogd@127.0.0.1 at Mar 11 23:20:50 ...

haproxy[17880]: proxy why has no server available!

Message from syslogd@127.0.0.1 at Mar 11 23:20:50 ...

haproxy[17875]: proxy why has no server available!

Create the file in the corresponding location

[root@why-1 html]# touch check.html

[root@why-2 conf]# curl -I 192.168.0.201/check.html

HTTP/1.1 200 OK

Date: Sat, 11 Mar 2017 15:23:05 GMT

Server: Apache/2.2.15 (CentOS)

Last-Modified: Sat, 11 Mar 2017 15:22:32 GMT

ETag: "c0184-0-54a760c0a03db"

Accept-Ranges: bytes

Connection: close

Content-Type: text/html; charset=UTF-8

option httpchk GET /check.html

To check a specific domain (virtual host), use option httpchk HEAD /check.html HTTP/1.1\r\nHost:\ www.whysdomain.com . Other services have their own check types, for example option mysql-check for MySQL; see doc/haproxy/configuration.txt for details.
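Before enabling `option httpchk`, the same HEAD request can be sent by hand to see the status HAproxy will get. A bash sketch using curl, assuming HAproxy's rule that a 2xx or 3xx status counts as a passing HTTP check; both function names are hypothetical, and the optional Host argument mirrors the `Host:` variant above.

```bash
#!/bin/bash
# Hypothetical helpers that approximate an "option httpchk HEAD <uri>" check by hand.
check_status_ok() {
  # 2xx and 3xx are treated as a passing HTTP health check.
  case $1 in
    2??|3??) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage: backend_http_check URL [HOST]
backend_http_check() {
  local url=$1 host=${2:-} code
  local -a args=(-s -o /dev/null -I -m 2 -w '%{http_code}')   # -I sends HEAD
  if [ -n "$host" ]; then
    args+=(-H "Host: $host")        # like the \r\nHost:\ variant of httpchk
  fi
  code=$(curl "${args[@]}" "$url") || code=000   # 000 = no HTTP answer at all
  echo "$url -> $code"
  check_status_ok "$code"
}
```

Usage: `backend_http_check http://192.168.0.201/check.html www.whysdomain.com`.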

Health checks in practice

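In general a port check is enough; for something more thorough, check a URL. In production, choose what to check according to the actual application, for example a JSP or PHP file, a page written by the developers that actually tests the database connection, or a static HTML file. The strictest settings are usually a check every 3 seconds with 1 allowed failure, while the defaults are fine for normal use; a CDN company might check every 3 seconds and allow 10 failures. You can also configure backup nodes: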

option httpchk HEAD /check.html HTTP/1.0

server www01 192.168.0.201:80 check port 80 inter 5000 fall 5

server www02 192.168.0.203:80 check port 80 inter 5000 fall 5 backup

Change the configuration as above and reload the service

[root@why-2 conf]# vi haproxy.conf

[root@why-2 conf]# ../sbin/haproxy -f ./haproxy.conf -sf `cat ../var/run/haproxy.pid`

[root@why-2 ~]# for i in {1..100};do curl 192.168.0.202;sleep 1;done

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

192.168.0.201

<html><body><h1>503 Service Unavailable</h1>

No server is available to handle this request.

</body></html>

<html><body><h1>503 Service Unavailable</h1>

No server is available to handle this request.

</body></html>

<html><body><h1>503 Service Unavailable</h1>

No server is available to handle this request.

</body></html>

<html><body><h1>503 Service Unavailable</h1>

No server is available to handle this request.

</body></html>

<html><body><h1>503 Service Unavailable</h1>

No server is available to handle this request.

</body></html>

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

192.168.0.203

^C

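You can see a run of 503 errors during the switchover: until the failed server has failed the configured number of consecutive checks (fall 5), it is not marked down, so the backup does not take over yet.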

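A backup server only takes over when all the normal servers are down, and by default only the first available backup server then receives traffic. To use all backup servers at once in that situation, add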

option allbackups
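Which servers receive traffic with and without `option allbackups` can be modelled in a few lines. A bash sketch of the documented behaviour (normal servers first; when none is up, only the first available backup, or every available backup with allbackups); the function name and the `name:state[:backup]` argument format are hypothetical.

```bash
#!/bin/bash
# Hypothetical model of backup selection.
# Usage: serving_servers ALLBACKUPS(0|1) SERVER...   SERVER = name:up|down[:backup]
serving_servers() {
  local allbackups=$1 s name state role
  local -a normal=() backups=()
  shift
  for s in "$@"; do
    IFS=: read -r name state role <<< "$s"
    [ "$state" = up ] || continue          # only servers passing health checks
    if [ "$role" = backup ]; then
      backups+=("$name")
    else
      normal+=("$name")
    fi
  done
  if [ "${#normal[@]}" -gt 0 ]; then
    echo "${normal[*]}"                    # normal servers always take precedence
  elif [ "${#backups[@]}" -gt 0 ]; then
    if [ "$allbackups" = 1 ]; then
      echo "${backups[*]}"                 # option allbackups: every live backup
    else
      echo "${backups[0]}"                 # default: only the first live backup
    fi
  fi
}
```

Usage: `serving_servers 0 www01:down www02:up:backup` prints `www02`, which matches the failover seen in the curl loop above.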

forwardfor

See the forwarding material in the "reverse proxy" part of the post <Service>Nginx reverse proxy.

High availability for haproxy with heartbeat

[root@heartbeat1 ~]# tar xf haproxy-1.4.24.tar.gz

[root@heartbeat1 ~]# cd haproxy-1.4.24

[root@heartbeat1 haproxy-1.4.24]# make TARGET=linux2628 ARCH=x86_64

[root@heartbeat1 haproxy-1.4.24]# make PREFIX=/usr/local/haproxy-1.4.24 install

[root@heartbeat1 haproxy-1.4.24]# ln -s /usr/local/haproxy-1.4.24 /usr/local/haproxy

[root@heartbeat1 haproxy-1.4.24]# cd /usr/local/haproxy

[root@heartbeat1 haproxy]# mkdir -p bin conf logs var/run var/chroot

[root@heartbeat1 haproxy]# cd conf/

[root@heartbeat1 conf]# vi haproxy.conf

global

chroot /usr/local/haproxy/var/chroot

daemon

group haproxy

user haproxy

log 127.0.0.1:514 local0 warning

pidfile /usr/local/haproxy/var/run/haproxy.pid

maxconn 20000

spread-checks 3

nbproc 8

defaults

log global

mode http

retries 3

option redispatch

contimeout 5000

clitimeout 50000

srvtimeout 50000

listen why

bind 192.168.0.211:80

mode http

# mode tcp

stats enable

stats uri /admin?stats

stats auth proxy:why123456

balance roundrobin

# option httpclose

# option forwardfor

# option httpchk HEAD /check.html HTTP/1.0

server www01 192.168.0.201:80 check port 80 inter 5000 fall 5

[root@heartbeat1 conf]# useradd haproxy -s /sbin/nologin

[root@heartbeat1 conf]# ../sbin/haproxy -f ./haproxy.conf -c

[WARNING] 071/180143 (16346) : Proxy 'why': in multi-process mode, stats will be limited to process assigned to the current request.

Configuration file is valid

[root@heartbeat2 conf]# echo 'net.ipv4.ip_nonlocal_bind = 1' >> /etc/sysctl.conf

[root@heartbeat2 conf]# tail -1 /etc/sysctl.conf

net.ipv4.ip_nonlocal_bind = 1

[root@heartbeat2 conf]# sysctl -p

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

net.ipv4.ip_nonlocal_bind = 1

heartbeat

For the heartbeat configuration, see the post <Service>heartbeat

[root@heartbeat1 conf]# ../sbin/haproxy -f ./haproxy.conf -D

[WARNING] 071/180404 (16351) : Proxy 'why': in multi-process mode, stats will be limited to process assigned to the current request.

[ALERT] 071/180404 (16351) : Starting proxy why: cannot bind socket

heartbeat configuration

[root@heartbeat1 ~]# tail -1 /etc/ha.d/haresources

heartbeat1 IPaddr::192.168.0.211/24/eth0

[root@heartbeat2 ~]# tail -1 /etc/ha.d/haresources

heartbeat1 IPaddr::192.168.0.211/24/eth0

Start heartbeat

[root@heartbeat2 ~]# service heartbeat start

Starting High-Availability services: INFO: Resource is stopped

Done.

[root@heartbeat2 ~]# ip addr | grep 211

[root@heartbeat1 ~]# service heartbeat start

Starting High-Availability services: INFO: Resource is stopped

Done.

[root@heartbeat1 ~]# ip addr | grep 211

inet 192.168.0.211/24 brd 192.168.0.255 scope global secondary eth0

[root@heartbeat1 ~]# curl 192.168.0.211

192.168.0.201

[root@heartbeat1 ~]# service heartbeat stop

Stopping High-Availability services: Done.

[root@heartbeat1 ~]# curl 192.168.0.211

192.168.0.201
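Grepping `ip addr` for `211` can also match unrelated addresses. A stricter bash sketch that checks whether this node currently holds the VIP, assuming the one-line-per-address format of `ip -o -4 addr show`; `holds_ip` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: succeed if the exact IPv4 address appears in `ip -o -4 addr show` output.
# Usage: ip -o -4 addr show | holds_ip 192.168.0.211
holds_ip() {
  awk -v ip="$1" '
    {
      for (i = 1; i < NF; i++)
        if ($i == "inet") {
          split($(i + 1), a, "/")   # "192.168.0.211/24" -> a[1] = "192.168.0.211"
          if (a[1] == ip) found = 1
        }
    }
    END { exit !found }'
}
```

Usage: `ip -o -4 addr show | holds_ip 192.168.0.211 && echo "VIP is on this node"`.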

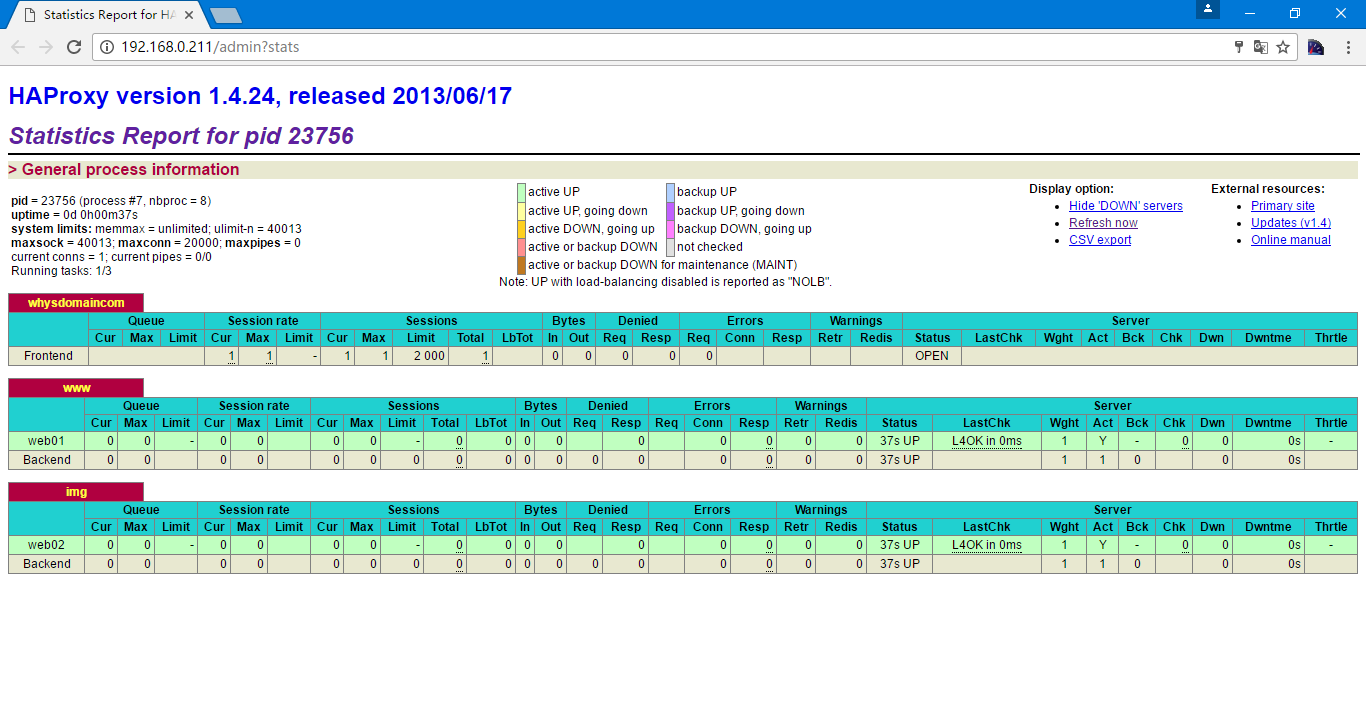

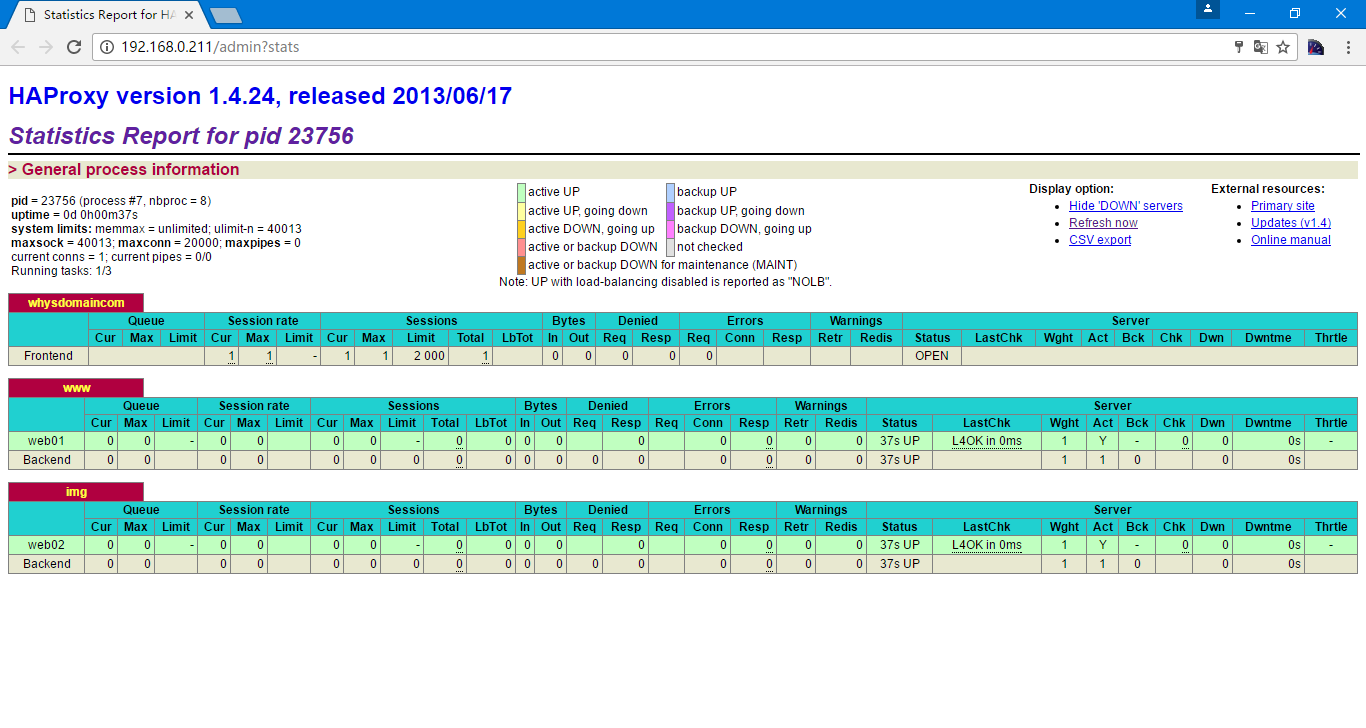

On the backend you can see the service behind the VIP.

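For several services, configure several listen sections in haproxy, each with a different name; options repeated across the listen sections can be moved into defaults. Then add the corresponding VIPs to heartbeat.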
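Since each listen section follows the same pattern, it can be generated. A bash sketch; `gen_listen`, the section name `blog`, the VIP 192.168.0.212 and port 8080 in the usage line are all hypothetical, and the server options simply copy the style used in this post. Shared settings would still live in defaults.

```bash
#!/bin/bash
# Hypothetical generator for one haproxy "listen" section.
# Usage: gen_listen NAME BIND_ADDR:PORT BACKEND_ADDR:PORT...
gen_listen() {
  local name=$1 bind=$2 i=1 srv
  shift 2
  printf 'listen %s\n' "$name"
  printf '    bind %s\n' "$bind"
  printf '    balance roundrobin\n'
  for srv in "$@"; do
    # Same check style as above: every 5000 ms, removed after 5 failures.
    printf '    server %s%02d %s check inter 5000 fall 5\n' "$name" "$i" "$srv"
    i=$((i + 1))
  done
}
```

Usage: `gen_listen blog 192.168.0.212:80 192.168.0.201:8080 192.168.0.203:8080 >> /usr/local/haproxy/conf/haproxy.conf`, then run the `-c` check before reloading.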

Kernel tuning

See the "web service optimization" part of the post <Service>Nginx reverse proxy.

haproxy ACL rules

First configure two virtual hosts

[root@why-1 ~]# vi /etc/httpd/conf/httpd.conf

Listen 80

Listen 81

NameVirtualHost *:80

NameVirtualHost *:81

<VirtualHost *:80>

DocumentRoot /var/www/html

ServerName www.whysdomain.com

</VirtualHost>

<VirtualHost *:81>

DocumentRoot /var/www/img

ServerName image.whysdomain.com

</VirtualHost>

haproxy configuration

[root@heartbeat2 conf]# vi haproxy.conf

global

chroot /usr/local/haproxy/var/chroot

daemon

group haproxy

user haproxy

log 127.0.0.1:514 local0 warning

pidfile /usr/local/haproxy/var/run/haproxy.pid

maxconn 20000

spread-checks 3

nbproc 8

defaults

log global

mode http

retries 3

option redispatch

contimeout 5000

clitimeout 50000

srvtimeout 50000

mode http

stats enable

stats uri /admin?stats

stats auth admin:admin

frontend whysdomaincom #matches the domain name, URL, etc. requested by the client

bind 192.168.0.211:80

acl img_dom hdr(host) -i image.whysdomain.com #img_dom is the ACL name; hdr(host) matches the requested host name, -i makes the match case-insensitive

acl 301_dom hdr(host) -i 51cto.whysdomain.com

redirect prefix http://www.51cto.com code 301 if 301_dom

use_backend img if img_dom

default_backend www

backend www #defines a group of backend servers plus per-server options such as weights, queues and connection limits

balance leastconn

option httpclose

option forwardfor

server web01 192.168.0.201:80 check port 80 inter 1000 fall 2

backend img

balance leastconn

option httpclose

option forwardfor

server web02 192.168.0.201:81 check port 81 inter 1000 fall 2

A frontend is similar to an nginx location, and a backend is similar to an nginx upstream.
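How the frontend above picks a destination can be traced with a small model. This bash sketch mirrors the example rules (host matching with `hdr(host) -i`, the 301 redirect for 51cto.whysdomain.com, `use_backend img`, `default_backend www`); it is an illustration rather than HAproxy's matching engine, and `route_host` is a hypothetical name. HAproxy evaluates redirect rules before use_backend rules, so the model checks the redirect first. Like `hdr(host)`, it compares the whole Host value, so a Host header carrying a port (e.g. `image.whysdomain.com:80`) falls through to the default backend.

```bash
#!/bin/bash
# Hypothetical model of the whysdomaincom frontend: print where a Host header is sent.
route_host() {
  local host
  # -i in the ACLs means case-insensitive, so compare in lower case.
  host=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  case $host in
    51cto.whysdomain.com) echo "redirect 301 http://www.51cto.com" ;;  # redirect ... if 301_dom
    image.whysdomain.com) echo "backend img" ;;                          # use_backend img if img_dom
    *)                    echo "backend www" ;;                          # default_backend www
  esac
}
```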

Then reload haproxy

[root@heartbeat2 conf]# ../sbin/haproxy -f ./haproxy.conf -sf `cat ../var/run/haproxy.pid`

[WARNING] 071/235718 (23749) : Proxy 'whysdomaincom': in multi-process mode, stats will be limited to process assigned to the current request.

[root@why-2 ~]# vi /etc/hosts

[root@why-2 ~]# tail -1 /etc/hosts

192.168.0.211 www.whysdomain.com image.whysdomain.com 51cto.whysdomain.com

[root@why-2 ~]# curl www.whysdomain.com

192.168.0.201

[root@why-2 ~]# curl image.whysdomain.com

192.168.0.201--img

[root@why-2 ~]# curl www.51cto.com

and a pile of front-end HTML is returned
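`curl www.51cto.com` fetches the target site directly. To see the redirect rule itself, send the 51cto host name to HAproxy and read the Location header of the reply. A bash sketch, assuming the VIP 192.168.0.211 from this setup; `location_of` is a hypothetical helper.

```bash
#!/bin/bash
# Hypothetical helper: print the Location header from HTTP response headers on stdin.
# Usage: curl -sI -H 'Host: 51cto.whysdomain.com' http://192.168.0.211/ | location_of
location_of() {
  # Header names are case-insensitive; strip the trailing CR of HTTP header lines.
  awk 'tolower($1) == "location:" { sub(/\r$/, "", $2); print $2 }'
}
```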

The documentation describes it as follows

acl <aclname> <criterion> [flags] [operator] <value> ...

Declare or complete an access list.

May be used in sections : defaults | frontend | listen | backend

no | yes | yes | yes

Example:

acl invalid_src src 0.0.0.0/7 224.0.0.0/3

acl invalid_src src_port 0:1023

acl local_dst hdr(host) -i localhost

See section 7 about ACL usage.

Path-based matching

acl is_blog path_beg /blog/ #is_blog is an example ACL name

Routing by file extension

acl is_static path_end .png .gif .jpg .css .js #is_static is an example ACL name

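Combining conditions: ACL names written one after another are ANDed, and or combines them with OR, for example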

if a or b

Layer-7 routing by User-Agent

acl is_iphone hdr_sub(user-agent) -i iphone #is_iphone is an example ACL name

acl is_android hdr_sub(user-agent) -i android #is_android is an example ACL name

Access control based on source IP and port

acl valid_ip src 192.168.0.0/24

block if !valid_ip

Requests that do not come from the 192.168.0.0/24 network are then rejected with a 403 error.
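The `src 192.168.0.0/24` test is a prefix comparison on the 32-bit address. A bash sketch of that arithmetic, illustrating why `block if !valid_ip` rejects everything outside the subnet; the function names are hypothetical and only IPv4 is handled.

```bash
#!/bin/bash
# Hypothetical helpers modelling "acl valid_ip src 192.168.0.0/24" + "block if !valid_ip".
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds if IP lies inside CIDR (e.g. 192.168.0.0/24): compare the network prefixes.
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/}
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# "block if !valid_ip": blocked (403) when the source is NOT in the allowed network.
is_blocked() {
  ! ip_in_cidr "$1" 192.168.0.0/24
}
```

Usage: `is_blocked 10.0.0.1 && echo 403` prints 403, while `is_blocked 192.168.0.101` fails, so that client is let through.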

Custom error pages for 403, 502, etc.

You can return a local file:

errorfile 403 /etc/haproxy/errorfiles/403.http

or redirect to a URL (errorloc answers with a 302 redirect):

errorloc 403 /403.html

errorloc 502 http://192.168.0.201/error502.html

Note: don't use curl for this test (curl does not follow the redirect by default), and 404 cannot be customized this way.

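If a web page takes more than 20 s to load, users will usually close it. If the timeouts are set short, make sure there are enough backend servers to respond in time.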

A CDN service might use 35000 ms, while a large website might use 120000 ms.

Also reduce the health-check frequency, and keep the log level less verbose than info, for example warning; never use info or anything more verbose.

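Load-balancing algorithms

roundrobin: round robin

static-rr: static round robin (like roundrobin, but weights cannot be changed on the fly)

leastconn: least connections

source: hash of the source IP address

uri: hash of the URI; the optional len 30 / depth 4 parameters limit how much of the URI is hashed

url_param: hash of a URL parameter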
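The idea behind `balance source` is that the same client IP always lands on the same server as long as the server list does not change. A bash sketch of that idea using `cksum` as the hash; HAproxy uses its own hash function and divides by the total weight of the running servers, so the actual mapping differs. `pick_by_source` is a hypothetical name.

```bash
#!/bin/bash
# Hypothetical illustration of source-IP hashing (NOT HAproxy's real hash function).
# Usage: pick_by_source CLIENT_IP SERVER...
pick_by_source() {
  local ip=$1 h
  shift
  local -a servers=("$@")
  h=$(printf '%s' "$ip" | cksum | cut -d' ' -f1)   # CRC of the address text
  echo "${servers[h % ${#servers[@]}]}"            # same IP -> same index
}
```

Usage: `pick_by_source 192.168.0.101 www01 www02` returns the same server on every call; adding or removing a server changes the modulus and therefore remaps clients.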