<Service> MongoDB


MongoDB

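MongoDB is a NoSQL database, specifically a document-oriented one; NoSQL stands for "Not Only SQL". MongoDB offers performance close to that of a caching database together with strong scalability.

In the big data era a single database server cannot handle such large data volumes, so data has to be sharded and processed in parallel. Commercial databases that do this are expensive, and their queries still need joins or cross-table lookups that consume a lot of resources. NoSQL databases greatly reduce that cost through weak consistency, high throughput, horizontal scaling, and clusters of low-end hardware.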

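Features:

- Suitable for storing massive amounts of data

- Document-based: data is organized and stored in a JSON-like format

- Eventual consistency rather than ACID guarantees; multi-document updates rely on the two-phase commit (2PC) pattern

- Written in C++

- Supports indexes and atomic operations on single documents, but not multi-document transactions

- Supports replication through replica sets, whose members elect the primary node internally

- Supports automatic sharding

- Commercial support is available

- Documents are stored as BSON, a binary form of JSON

- Supports MapReduce-style distributed processing and parallel queries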

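Suitable use cases:

- Websites: well suited to real-time inserts, updates and queries, with the replication and high scalability that real-time website data storage requires

- Caching: thanks to its high performance, it can also serve as the caching layer of an information infrastructure

- Large data volumes

- Storing objects and JSON data: the BSON format is well suited to storing and querying documents

- Scenarios that demand high scalability, such as databases made up of dozens or hundreds of servers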

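MongoDB's storage engines are MMAPv1 and WiredTiger:

- MMAPv1: available in all MongoDB versions and the default engine in MongoDB 3.0. It preallocates space automatically; space freed by deletions is not returned but is reused when new data is added.

- WiredTiger: supported only on 64-bit builds of MongoDB and the default engine since MongoDB 3.2. It achieves a high compression ratio and adds document-level concurrency control.

To use WiredTiger before 3.2, pass --storageEngine=wiredTiger at startup or set the storage engine in the configuration file.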

Installing MongoDB

Download page: https://www.mongodb.com/download-center#community

[root@why ~]# tar xf mongodb-linux-x86_64-rhel62-3.2.8.gz

[root@why ~]# mv mongodb-linux-x86_64-rhel62-3.2.8 /usr/local/mongodb

[root@why ~]# cd /usr/local/mongodb

[root@why mongodb]# mkdir data

[root@why mongodb]# touch log

[root@why mongodb]# /usr/local/mongodb/bin/mongod --dbpath=/usr/local/mongodb/data --logpath=/usr/local/mongodb/log --logappend --port=27017 --fork --rest --httpinterface

about to fork child process, waiting until server is ready for connections.

forked process: 20442

child process started successfully, parent exiting

[root@why mongodb]# ss -nlptu | egrep '27017|28017'

tcp LISTEN 0 128 *:28017 *:* users:(("mongod",20442,8))

tcp LISTEN 0 128 *:27017 *:* users:(("mongod",20442,6))

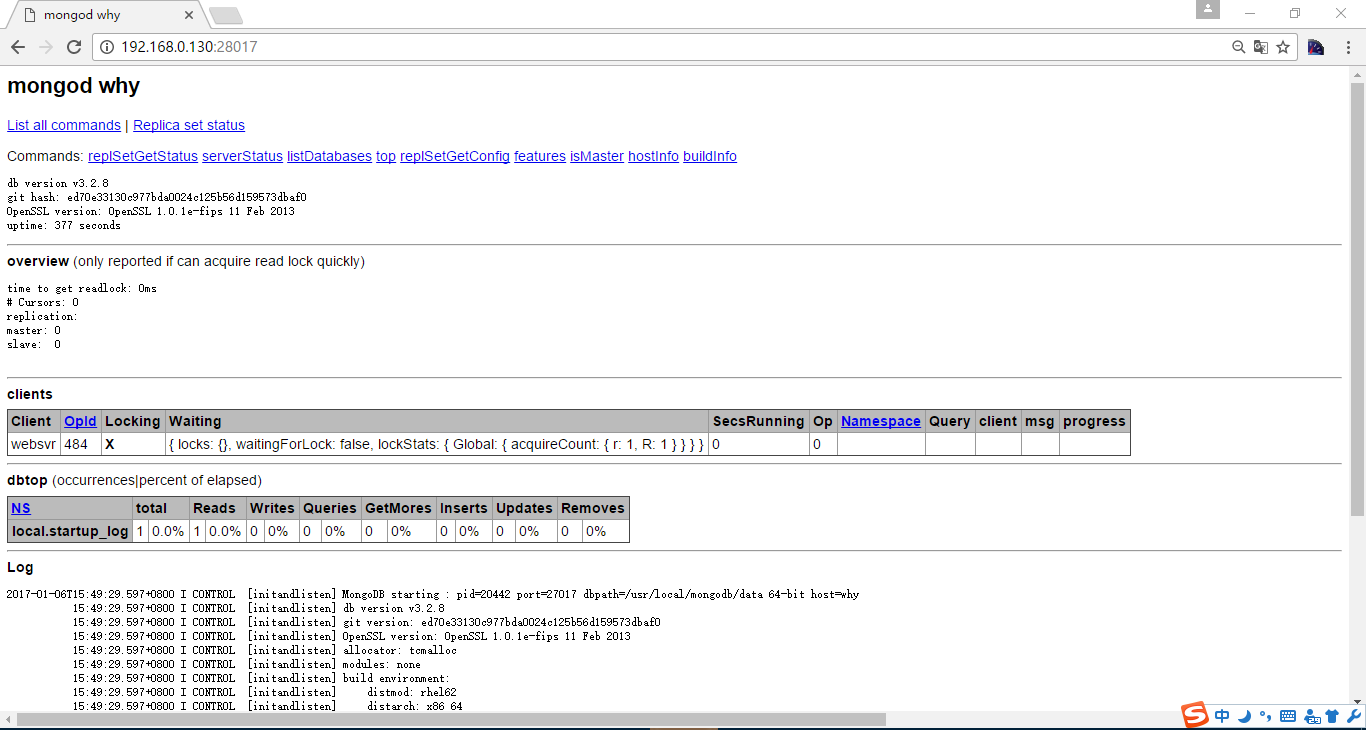

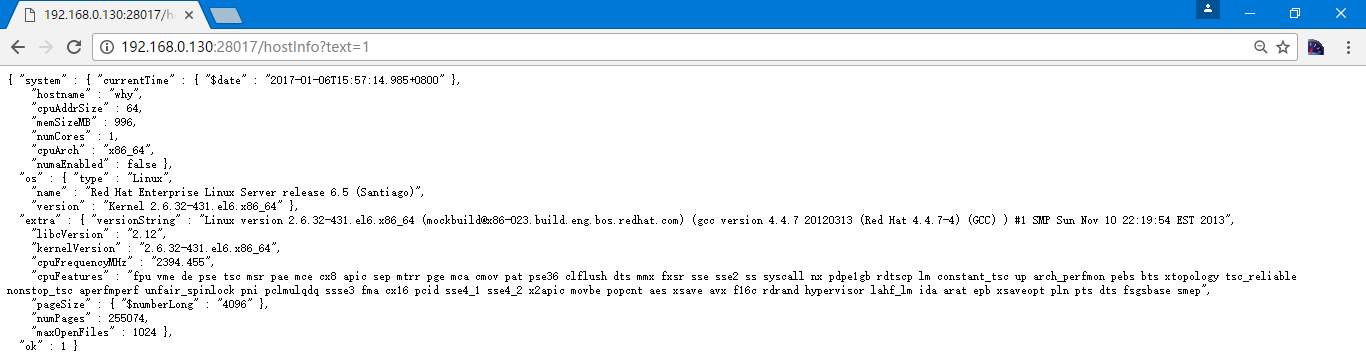

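Opening port 28017 in a browser also shows information about the MongoDB service. This HTTP interface is enabled by the --httpinterface option used above.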

Using MongoDB

Connecting with the mongo shell

[root@why ~]# /usr/local/mongodb/bin/mongo --host 192.168.0.130

MongoDB shell version: 3.2.8

connecting to: 192.168.0.130:27017/test

Welcome to the MongoDB shell.

For interactive help, type "help".

For more comprehensive documentation, see

http://docs.mongodb.org/

Questions? Try the support group

http://groups.google.com/group/mongodb-user

Server has startup warnings:

2017-01-06T15:49:30.098+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.

2017-01-06T15:49:30.098+0800 I CONTROL [initandlisten]

2017-01-06T15:49:30.098+0800 I CONTROL [initandlisten]

2017-01-06T15:49:30.098+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.

2017-01-06T15:49:30.098+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2017-01-06T15:49:30.098+0800 I CONTROL [initandlisten]

2017-01-06T15:49:30.098+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.

2017-01-06T15:49:30.098+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'

2017-01-06T15:49:30.098+0800 I CONTROL [initandlisten]

List databases

> show dbs

local 0.000GB

#Switch to a database. It does not need to be created first; it is created automatically when data is first written to it

> use testdb

switched to db testdb

> show users

> show logs

global

startupWarnings

> db.help()

DB methods:

db.adminCommand(nameOrDocument) - switches to 'admin' db, and runs command [ just calls db.runCommand(...) ]

db.auth(username, password)

db.cloneDatabase(fromhost)

db.commandHelp(name) returns the help for the command

db.copyDatabase(fromdb, todb, fromhost)

db.createCollection(name, { size : ..., capped : ..., max : ... } )

db.createUser(userDocument)

db.currentOp() displays currently executing operations in the db

db.dropDatabase() #drop the current database

db.eval() - deprecated

db.fsyncLock() flush data to disk and lock server for backups

db.fsyncUnlock() unlocks server following a db.fsyncLock()

db.getCollection(cname) same as db['cname'] or db.cname

db.getCollectionInfos([filter]) - returns a list that contains the names and options of the db's collections

db.getCollectionNames()

db.getLastError() - just returns the err msg string

db.getLastErrorObj() - return full status object

db.getLogComponents()

db.getMongo() get the server connection object

db.getMongo().setSlaveOk() allow queries on a replication slave server

db.getName()

db.getPrevError()

db.getProfilingLevel() - deprecated

db.getProfilingStatus() - returns if profiling is on and slow threshold

db.getReplicationInfo()

db.getSiblingDB(name) get the db at the same server as this one

db.getWriteConcern() - returns the write concern used for any operations on this db, inherited from server object if set

db.hostInfo() get details about the server's host

db.isMaster() check replica primary status

db.killOp(opid) kills the current operation in the db

db.listCommands() lists all the db commands

db.loadServerScripts() loads all the scripts in db.system.js

db.logout()

db.printCollectionStats()

db.printReplicationInfo()

db.printShardingStatus()

db.printSlaveReplicationInfo()

db.dropUser(username)

db.repairDatabase()

db.resetError()

db.runCommand(cmdObj) run a database command. if cmdObj is a string, turns it into { cmdObj : 1 }

db.serverStatus()

db.setLogLevel(level,<component>)

db.setProfilingLevel(level,<slowms>) 0=off 1=slow 2=all

db.setWriteConcern( <write concern doc> ) - sets the write concern for writes to the db

db.unsetWriteConcern( <write concern doc> ) - unsets the write concern for writes to the db

db.setVerboseShell(flag) display extra information in shell output

db.shutdownServer()

db.stats()

db.version() current version of the server

Database statistics

> db.stats()

{

"db" : "testdb",

"collections" : 0,

"objects" : 0,

"avgObjSize" : 0,

"dataSize" : 0,

"storageSize" : 0,

"numExtents" : 0,

"indexes" : 0,

"indexSize" : 0,

"fileSize" : 0,

"ok" : 1

}

> db.version()

3.2.8

Server status

> db.serverStatus()

(The output is long and is omitted here.)

Collection help

> db.mycoll.help()

DBCollection help

db.mycoll.find().help() - show DBCursor help

db.mycoll.bulkWrite( operations, <optional params> ) - bulk execute write operations, optional parameters are: w, wtimeout, j

db.mycoll.count( query = {}, <optional params> ) - count the number of documents that matches the query, optional parameters are: limit, skip, hint, maxTimeMS

db.mycoll.copyTo(newColl) - duplicates collection by copying all documents to newColl; no indexes are copied.

db.mycoll.convertToCapped(maxBytes) - calls {convertToCapped:'mycoll', size:maxBytes}} command

db.mycoll.createIndex(keypattern[,options])

db.mycoll.createIndexes([keypatterns], <options>)

db.mycoll.dataSize()

db.mycoll.deleteOne( filter, <optional params> ) - delete first matching document, optional parameters are: w, wtimeout, j

db.mycoll.deleteMany( filter, <optional params> ) - delete all matching documents, optional parameters are: w, wtimeout, j

db.mycoll.distinct( key, query, <optional params> ) - e.g. db.mycoll.distinct( 'x' ), optional parameters are: maxTimeMS

db.mycoll.drop() drop the collection

db.mycoll.dropIndex(index) - e.g. db.mycoll.dropIndex( "indexName" ) or db.mycoll.dropIndex( { "indexKey" : 1 } ) #drop an index

db.mycoll.dropIndexes() #drop all indexes

db.mycoll.ensureIndex(keypattern[,options]) - DEPRECATED, use createIndex() instead #create an index

db.mycoll.explain().help() - show explain help

db.mycoll.reIndex() #rebuild all indexes

db.mycoll.find([query],[fields]) - query is an optional query filter. fields is optional set of fields to return.

e.g. db.mycoll.find( {x:77} , {name:1, x:1} )

db.mycoll.find(...).count()

db.mycoll.find(...).limit(n)

db.mycoll.find(...).skip(n)

db.mycoll.find(...).sort(...)

db.mycoll.findOne([query], [fields], [options], [readConcern])

db.mycoll.findOneAndDelete( filter, <optional params> ) - delete first matching document, optional parameters are: projection, sort, maxTimeMS

db.mycoll.findOneAndReplace( filter, replacement, <optional params> ) - replace first matching document, optional parameters are: projection, sort, maxTimeMS, upsert, returnNewDocument

db.mycoll.findOneAndUpdate( filter, update, <optional params> ) - update first matching document, optional parameters are: projection, sort, maxTimeMS, upsert, returnNewDocument

db.mycoll.getDB() get DB object associated with collection

db.mycoll.getPlanCache() get query plan cache associated with collection

db.mycoll.getIndexes()

db.mycoll.group( { key : ..., initial: ..., reduce : ...[, cond: ...] } )

db.mycoll.insert(obj) #insert data

db.mycoll.insertOne( obj, <optional params> ) - insert a document, optional parameters are: w, wtimeout, j

db.mycoll.insertMany( [objects], <optional params> ) - insert multiple documents, optional parameters are: w, wtimeout, j

db.mycoll.mapReduce( mapFunction , reduceFunction , <optional params> )

db.mycoll.aggregate( [pipeline], <optional params> ) - performs an aggregation on a collection; returns a cursor

db.mycoll.remove(query)

db.mycoll.replaceOne( filter, replacement, <optional params> ) - replace the first matching document, optional parameters are: upsert, w, wtimeout, j

db.mycoll.renameCollection( newName , <dropTarget> ) renames the collection.

db.mycoll.runCommand( name , <options> ) runs a db command with the given name where the first param is the collection name

db.mycoll.save(obj)

db.mycoll.stats({scale: N, indexDetails: true/false, indexDetailsKey: <index key>, indexDetailsName: <index name>})

db.mycoll.storageSize() - includes free space allocated to this collection

db.mycoll.totalIndexSize() - size in bytes of all the indexes

db.mycoll.totalSize() - storage allocated for all data and indexes

db.mycoll.update( query, object[, upsert_bool, multi_bool] ) - instead of two flags, you can pass an object with fields: upsert, multi

db.mycoll.updateOne( filter, update, <optional params> ) - update the first matching document, optional parameters are: upsert, w, wtimeout, j

db.mycoll.updateMany( filter, update, <optional params> ) - update all matching documents, optional parameters are: upsert, w, wtimeout, j

db.mycoll.validate( <full> ) - SLOW

db.mycoll.getShardVersion() - only for use with sharding

db.mycoll.getShardDistribution() - prints statistics about data distribution in the cluster

db.mycoll.getSplitKeysForChunks( <maxChunkSize> ) - calculates split points over all chunks and returns splitter function

db.mycoll.getWriteConcern() - returns the write concern used for any operations on this collection, inherited from server/db if set

db.mycoll.setWriteConcern( <write concern doc> ) - sets the write concern for writes to the collection

db.mycoll.unsetWriteConcern( <write concern doc> ) - unsets the write concern for writes to the collection

Data operations

> db.students.insert({name:"why",age:24}) #insert a document

WriteResult({ "nInserted" : 1 })

> show collections

students

testcoll

> show dbs

local 0.000GB

testdb 0.000GB

> db.students.stats() #collection statistics

{

"ns" : "testdb.students",

"count" : 1,

"size" : 49,

"avgObjSize" : 49,

"storageSize" : 16384,

"capped" : false,

"wiredTiger" : {

(remainder omitted)

> db.getCollectionNames() #list the collections in the database

[ "students", "testcoll" ]

> db.students.insert({name:"mabiao",age:25,gender:"M"})

WriteResult({ "nInserted" : 1 })

> db.students.stats()

{

"ns" : "testdb.students",

"count" : 2, #the count is now 2

"size" : 115,

"avgObjSize" : 57,

"storageSize" : 32768,

"capped" : false,

"wiredTiger" : {

(remainder omitted)

> db.mycoll.find().help() #help for find() modifiers and cursor methods

find(<predicate>, <projection>) modifiers

.sort({...})

.limit(<n>)

.skip(<n>)

.batchSize(<n>) - sets the number of docs to return per getMore

.hint({...})

.readConcern(<level>)

.readPref(<mode>, <tagset>)

.count(<applySkipLimit>) - total # of objects matching query. by default ignores skip,limit

.size() - total # of objects cursor would return, honors skip,limit

.explain(<verbosity>) - accepted verbosities are {'queryPlanner', 'executionStats', 'allPlansExecution'}

.min({...})

.max({...})

.maxScan(<n>)

.maxTimeMS(<n>)

.comment(<comment>)

.snapshot()

.tailable(<isAwaitData>)

.noCursorTimeout()

.allowPartialResults()

.returnKey()

.showRecordId() - adds a $recordId field to each returned object

Cursor methods

.toArray() - iterates through docs and returns an array of the results

.forEach(<func>)

.map(<func>)

.hasNext()

.next()

.close()

.objsLeftInBatch() - returns count of docs left in current batch (when exhausted, a new getMore will be issued)

.itcount() - iterates through documents and counts them

.getQueryPlan() - get query plans associated with shape. To get more info on query plans, call getQueryPlan().help().

.pretty() - pretty print each document, possibly over multiple lines

> db.students.find()

{ "_id" : ObjectId("586f56b4ad09abf0d2797c18"), "name" : "why", "age" : 24 }

{ "_id" : ObjectId("586f5834ad09abf0d2797c19"), "name" : "mabiao", "age" : 25, "gender" : "M" }

> db.students.count() #total number of documents

2

> db.students.insert({name:"yanwei",age:26,course:"dage"})

WriteResult({ "nInserted" : 1 })

> db.students.insert({name:"pqt",age:23,course:"xueba"})

WriteResult({ "nInserted" : 1 })

> db.students.find({age:{$gt: 24}}) #comparison operator: age greater than 24

{ "_id" : ObjectId("586f5834ad09abf0d2797c19"), "name" : "mabiao", "age" : 25, "gender" : "M" }

{ "_id" : ObjectId("586f5aeead09abf0d2797c1a"), "name" : "yanwei", "age" : 26, "course" : "dage" }

> db.students.find({age:{$in: [24,26]}}) #age is one of the listed values

{ "_id" : ObjectId("586f56b4ad09abf0d2797c18"), "name" : "why", "age" : 24 }

{ "_id" : ObjectId("586f5aeead09abf0d2797c1a"), "name" : "yanwei", "age" : 26, "course" : "dage" }

> db.students.find({age:{$nin: [24,26]}})

{ "_id" : ObjectId("586f5834ad09abf0d2797c19"), "name" : "mabiao", "age" : 25, "gender" : "M" }

{ "_id" : ObjectId("586f5b03ad09abf0d2797c1b"), "name" : "pqt", "age" : 23, "course" : "xueba" }

> db.students.find({$or: [{age: {$nin: [23,24]}},{age: {$nin: [25,26]}}]})

{ "_id" : ObjectId("586f56b4ad09abf0d2797c18"), "name" : "why", "age" : 24 }

{ "_id" : ObjectId("586f5834ad09abf0d2797c19"), "name" : "mabiao", "age" : 25, "gender" : "M" }

{ "_id" : ObjectId("586f5aeead09abf0d2797c1a"), "name" : "yanwei", "age" : 26, "course" : "dage" }

{ "_id" : ObjectId("586f5b03ad09abf0d2797c1b"), "name" : "pqt", "age" : 23, "course" : "xueba" }

> db.students.find({gender: {$exists: true}})

{ "_id" : ObjectId("586f5834ad09abf0d2797c19"), "name" : "mabiao", "age" : 25, "gender" : "M" }

> db.students.find({gender: {$exists: false}})

{ "_id" : ObjectId("586f56b4ad09abf0d2797c18"), "name" : "why", "age" : 24 }

{ "_id" : ObjectId("586f5aeead09abf0d2797c1a"), "name" : "yanwei", "age" : 26, "course" : "dage" }

{ "_id" : ObjectId("586f5b03ad09abf0d2797c1b"), "name" : "pqt", "age" : 23, "course" : "xueba" }

> db.students.update({name: "yanwei"},{$set: {age: 40}}) #update: set age to 40 on the document whose name is yanwei

WriteResult({ "nMatched" : 1, "nUpserted" : 0, "nModified" : 1 })

> db.students.find()

{ "_id" : ObjectId("586f56b4ad09abf0d2797c18"), "name" : "why", "age" : 24 }

{ "_id" : ObjectId("586f5834ad09abf0d2797c19"), "name" : "mabiao", "age" : 25, "gender" : "M" }

{ "_id" : ObjectId("586f5aeead09abf0d2797c1a"), "name" : "yanwei", "age" : 40, "course" : "dage" }

{ "_id" : ObjectId("586f5b03ad09abf0d2797c1b"), "name" : "pqt", "age" : 23, "course" : "xueba" }

> db.students.remove({age: 40}) #remove the documents whose age is 40

WriteResult({ "nRemoved" : 1 })

> db.students.find()

{ "_id" : ObjectId("586f56b4ad09abf0d2797c18"), "name" : "why", "age" : 24 }

{ "_id" : ObjectId("586f5834ad09abf0d2797c19"), "name" : "mabiao", "age" : 25, "gender" : "M" }

{ "_id" : ObjectId("586f5b03ad09abf0d2797c1b"), "name" : "pqt", "age" : 23, "course" : "xueba" }

> db.students.findOne({age: {$gt: 5}})

{ "_id" : ObjectId("586f56b4ad09abf0d2797c18"), "name" : "why", "age" : 24 }

> db.dropDatabase() #drop the current database

{ "dropped" : "testdb", "ok" : 1 }

> show dbs

local 0.000GB

> exit

bye

[root@why ~]# /usr/local/mongodb/bin/mongod --shutdown --dbpath=/usr/local/mongodb/data/

killing process with pid: 21790

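As shown, databases and collections do not need to be created separately; they are created automatically the first time data is written to them.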
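The update in the session above used the $set operator, which changes only the listed fields. Passing a plain document without any update operator to update() instead replaces the whole matched document, keeping only its _id. The difference can be sketched on plain JavaScript objects. applySet and applyReplace are hypothetical helpers, not MongoDB APIs, and they ignore dotted paths and the other update operators.

```javascript
// Hypothetical helpers illustrating update semantics on plain objects.
// They are NOT MongoDB APIs and ignore dotted paths, arrays and other operators.

// $set: copy the document and overwrite only the listed fields.
function applySet(doc, fields) {
  return Object.assign({}, doc, fields);
}

// Replacement (no update operator): keep _id, discard every other old field.
function applyReplace(doc, replacement) {
  return Object.assign({ _id: doc._id }, replacement);
}

const yanwei = { _id: 3, name: "yanwei", age: 26, course: "dage" };

// Like db.students.update({name: "yanwei"}, {$set: {age: 40}})
const viaSet = applySet(yanwei, { age: 40 });
// Like db.students.update({name: "yanwei"}, {age: 40}): name and course are lost
const viaReplace = applyReplace(yanwei, { age: 40 });

console.log(viaSet);     // { _id: 3, name: 'yanwei', age: 40, course: 'dage' }
console.log(viaReplace); // { _id: 3, age: 40 }
```

The original object is left untouched in both cases, so the two results can be compared side by side.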

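More query operators

- $gt: greater than

- $lt: less than

- $gte: greater than or equal to

- $lte: less than or equal to

- $ne: not equal to

- $mod: modulo, e.g. {age: {$mod: [5, 0]}} matches ages divisible by 5

- $exists: matches documents that have (true) or lack (false) the field

- $or: logical OR

- $and: logical AND

- $not: logical NOT of an operator expression

- $nor: matches documents that fail every condition in the array

- $type: matches documents whose field value has the given BSON type, such as Double, String, Object, Array, Binary data, Undefined, Boolean, Date, Null, Regular Expression, JavaScript, Timestamp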
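The following small in-memory matcher, written in plain JavaScript (the language of the mongo shell), makes the operator semantics concrete. It is a simplified sketch, not MongoDB's query engine. It supports only top-level fields, the comparison operators $gt, $gte, $lt, $lte, $ne, $in, $nin and $exists, and the logical $or, $and and $nor. The names matches and find are hypothetical. Run against the four students documents from the session above, it reproduces the results shown there. This includes the $or query that returns all four documents: a document fails that query only if its age is in both [23, 24] and [25, 26], which is impossible.

```javascript
// Simplified, hypothetical re-implementation of a few MongoDB query operators.
// Top-level fields only: no dotted paths, array fields, BSON type ordering, $not, $mod or $type.
const fieldOps = {
  $gt: (v, arg) => v !== undefined && v > arg,
  $gte: (v, arg) => v !== undefined && v >= arg,
  $lt: (v, arg) => v !== undefined && v < arg,
  $lte: (v, arg) => v !== undefined && v <= arg,
  $ne: (v, arg) => v !== arg,            // also true when the field is missing
  $in: (v, arg) => arg.includes(v),
  $nin: (v, arg) => !arg.includes(v),    // also true when the field is missing
  $exists: (v, arg) => (v !== undefined) === arg,
};

function matches(doc, query) {
  return Object.entries(query).every(([key, cond]) => {
    // Logical operators take an array of sub-queries.
    if (key === "$or") return cond.some((q) => matches(doc, q));
    if (key === "$and") return cond.every((q) => matches(doc, q));
    if (key === "$nor") return !cond.some((q) => matches(doc, q));
    const value = doc[key];
    // {field: {$op: arg, ...}}: every operator must hold
    if (cond !== null && typeof cond === "object" && !Array.isArray(cond)) {
      return Object.entries(cond).every(([op, arg]) => {
        const fn = fieldOps[op];
        if (!fn) throw new Error("unsupported operator: " + op);
        return fn(value, arg);
      });
    }
    // {field: literal}: plain equality
    return value === cond;
  });
}

function find(docs, query) {
  return docs.filter((d) => matches(d, query));
}

const students = [
  { name: "why", age: 24 },
  { name: "mabiao", age: 25, gender: "M" },
  { name: "yanwei", age: 26, course: "dage" },
  { name: "pqt", age: 23, course: "xueba" },
];

const names = (docs) => docs.map((d) => d.name);
console.log(names(find(students, { age: { $gt: 24 } })));           // [ 'mabiao', 'yanwei' ]
console.log(names(find(students, { age: { $in: [24, 26] } })));     // [ 'why', 'yanwei' ]
console.log(names(find(students, { gender: { $exists: false } }))); // [ 'why', 'yanwei', 'pqt' ]
```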

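MongoDB indexes

https://docs.mongodb.com/manual/indexes/

Creating an index

db.mycoll.ensureIndex({username:1},{background:true})

The background option builds the index without blocking other operations on the database. ensureIndex is deprecated in favor of createIndex, which accepts the same arguments.

Unique index

db.mycoll.ensureIndex({username:1},{unique:true,dropDups:true})

Note that dropDups was honored only before MongoDB 3.0. In those versions it deleted documents whose index value duplicated an existing one. MongoDB 3.0 removed the option, so on 3.2 it has no effect, and building a unique index fails if duplicate values already exist.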
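A unique index rejects any write whose key already exists, which is what produces the E11000 duplicate key error demonstrated later in this section. As a conceptual sketch, a JavaScript Map can stand in for the index. The UniqueIndex class and its WriteResult-like return values are hypothetical and only loosely modelled on the shell output.

```javascript
// Hypothetical model of a unique index on one field; a Map stands in for the B-tree.
class UniqueIndex {
  constructor(field) {
    this.field = field;
    this.keys = new Map(); // key value -> _id of the document holding it
  }

  // Returns a WriteResult-like object, loosely modelled on the mongo shell output.
  insert(doc) {
    const key = doc[this.field];
    if (this.keys.has(key)) {
      return {
        nInserted: 0,
        writeError: { code: 11000, errmsg: `E11000 duplicate key error, dup key: ${JSON.stringify(key)}` },
      };
    }
    this.keys.set(key, doc._id);
    return { nInserted: 1 };
  }
}

const byName = new UniqueIndex("name");
for (let i = 1; i <= 10000; i++) byName.insert({ _id: i, name: "student" + i });

console.log(byName.insert({ _id: 10001, name: "student200", age: 3 }).writeError.code); // 11000
console.log(byName.insert({ _id: 10002, name: "student10001" }).nInserted);            // 1
```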

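Sparse index

db.mycoll.ensureIndex({username:1},{sparse:true})

A sparse index contains entries only for documents that actually have the indexed field; documents without it are skipped. Queries that use a sparse index therefore do not return such documents, and a unique sparse index accepts any number of documents that lack the field.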
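The difference between a regular and a sparse index can be sketched with a sorted list of [key, _id] entries. This is a conceptual model only, since MongoDB actually stores indexes as B-trees, and buildIndex is a hypothetical helper. A regular index stores an entry with key null even for documents that lack the field, while a sparse index simply leaves them out.

```javascript
// Conceptual model of index entries; not how MongoDB stores indexes internally.
function buildIndex(docs, field, { sparse = false } = {}) {
  const entries = [];
  for (const doc of docs) {
    const has = Object.prototype.hasOwnProperty.call(doc, field);
    if (!has && sparse) continue; // sparse: documents without the field get no entry
    entries.push([has ? doc[field] : null, doc._id]); // regular: missing field indexed as null
  }
  // Keep entries ordered by key, as an index would (null sorts first here).
  entries.sort((a, b) => {
    if (a[0] === b[0]) return a[1] - b[1];
    if (a[0] === null) return -1;
    if (b[0] === null) return 1;
    return a[0] < b[0] ? -1 : 1;
  });
  return entries;
}

const docs = [
  { _id: 1, username: "why" },
  { _id: 2 },                  // no username field
  { _id: 3, username: "pqt" },
];

console.log(buildIndex(docs, "username"));                   // [ [ null, 2 ], [ 'pqt', 3 ], [ 'why', 1 ] ]
console.log(buildIndex(docs, "username", { sparse: true })); // [ [ 'pqt', 3 ], [ 'why', 1 ] ]
```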

[root@why ~]# /usr/local/mongodb/bin/mongo --host 192.168.0.130

> use testdb

switched to db testdb

> for (i=1;i<=10000;i++) db.students.insert({name:"student"+i,age:(i%5),school:"Num"+(i%3)+"School"}) #generate 10,000 test documents

WriteResult({ "nInserted" : 1 })

> db.students.find().count()

10000

> db.students.find()

{ "_id" : ObjectId("586f78ade9f2c32fab697d40"), "name" : "student1", "age" : 1, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d41"), "name" : "student2", "age" : 2, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d42"), "name" : "student3", "age" : 3, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d43"), "name" : "student4", "age" : 4, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d44"), "name" : "student5", "age" : 0, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d45"), "name" : "student6", "age" : 1, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d46"), "name" : "student7", "age" : 2, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d47"), "name" : "student8", "age" : 3, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d48"), "name" : "student9", "age" : 4, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d49"), "name" : "student10", "age" : 0, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4a"), "name" : "student11", "age" : 1, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4b"), "name" : "student12", "age" : 2, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4c"), "name" : "student13", "age" : 3, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4d"), "name" : "student14", "age" : 4, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4e"), "name" : "student15", "age" : 0, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4f"), "name" : "student16", "age" : 1, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d50"), "name" : "student17", "age" : 2, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d51"), "name" : "student18", "age" : 3, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d52"), "name" : "student19", "age" : 4, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d53"), "name" : "student20", "age" : 0, "school" : "Num2School" }

Type "it" for more

> it

{ "_id" : ObjectId("586f78ade9f2c32fab697d54"), "name" : "student21", "age" : 1, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d55"), "name" : "student22", "age" : 2, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d56"), "name" : "student23", "age" : 3, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d57"), "name" : "student24", "age" : 4, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d58"), "name" : "student25", "age" : 0, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d59"), "name" : "student26", "age" : 1, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d5a"), "name" : "student27", "age" : 2, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d5b"), "name" : "student28", "age" : 3, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d5c"), "name" : "student29", "age" : 4, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d5d"), "name" : "student30", "age" : 0, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d5e"), "name" : "student31", "age" : 1, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d5f"), "name" : "student32", "age" : 2, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d60"), "name" : "student33", "age" : 3, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d61"), "name" : "student34", "age" : 4, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d62"), "name" : "student35", "age" : 0, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d63"), "name" : "student36", "age" : 1, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d64"), "name" : "student37", "age" : 2, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d65"), "name" : "student38", "age" : 3, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d66"), "name" : "student39", "age" : 4, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d67"), "name" : "student40", "age" : 0, "school" : "Num1School" }

Type "it" for more

> db.students.ensureIndex({name:1}) #create an index on name

{

"createdCollectionAutomatically" : false,

"numIndexesBefore" : 1,

"numIndexesAfter" : 2,

"ok" : 1

}

> db.students.getIndexes() #list the collection's indexes

[

{

"v" : 1,

"key" : {

"_id" : 1 #default index on _id

},

"name" : "_id_",

"ns" : "testdb.students"

},

{

"v" : 1,

"key" : {

"name" : 1 #the index just created on name

},

"name" : "name_1", #index name

"ns" : "testdb.students" #namespace (database.collection)

}

]

> db.students.dropIndex("name_1") #drop the index named name_1

{ "nIndexesWas" : 2, "ok" : 1 }

> db.students.getIndexes()

[

{

"v" : 1,

"key" : {

"_id" : 1

},

"name" : "_id_",

"ns" : "testdb.students"

}

]

> db.students.ensureIndex({name:1},{unique:true,dropDups:true}) #create a unique index

{

"createdCollectionAutomatically" : false,

"numIndexesBefore" : 1,

"numIndexesAfter" : 2,

"ok" : 1

}

> db.students.getIndexes()

[

{

"v" : 1,

"key" : {

"_id" : 1

},

"name" : "_id_",

"ns" : "testdb.students"

},

{

"v" : 1,

"unique" : true,

"key" : {

"name" : 1

},

"name" : "name_1",

"ns" : "testdb.students"

}

]

> db.students.insert({name:"student200",age:3}) #inserting a name that already exists fails

WriteResult({

"nInserted" : 0,

"writeError" : {

"code" : 11000,

"errmsg" : "E11000 duplicate key error collection: testdb.students index: name_1 dup key: { : \"student200\" }"

}

})

> db.students.find({name: "student5000"}).explain() #show the query plan to check that the index is used

{

"queryPlanner" : {

"plannerVersion" : 1,

"namespace" : "testdb.students",

"indexFilterSet" : false,

"parsedQuery" : {

"name" : {

"$eq" : "student5000"

}

},

"winningPlan" : {

"stage" : "FETCH",

"inputStage" : {

"stage" : "IXSCAN",

"keyPattern" : {

"name" : 1

},

"indexName" : "name_1",

"isMultiKey" : false,

"isUnique" : true,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 1,

"direction" : "forward",

"indexBounds" : {

"name" : [

"[\"student5000\", \"student5000\"]"

]

}

}

},

"rejectedPlans" : [ ]

},

"serverInfo" : {

"host" : "why",

"port" : 27017,

"version" : "3.2.8",

"gitVersion" : "ed70e33130c977bda0024c125b56d159573dbaf0"

},

"ok" : 1

}

> db.students.find({name: {$gt: "students5000"}}).explain() #query plan for a range query on the same index

{

"queryPlanner" : {

"plannerVersion" : 1,

"namespace" : "testdb.students",

"indexFilterSet" : false,

"parsedQuery" : {

"name" : {

"$gt" : "students5000"

}

},

"winningPlan" : {

"stage" : "FETCH",

"inputStage" : {

"stage" : "IXSCAN",

"keyPattern" : {

"name" : 1

},

"indexName" : "name_1",

"isMultiKey" : false,

"isUnique" : true,

"isSparse" : false,

"isPartial" : false,

"indexVersion" : 1,

"direction" : "forward",

"indexBounds" : {

"name" : [

"(\"students5000\", {})"

]

}

}

},

"rejectedPlans" : [ ]

},

"serverInfo" : {

"host" : "why",

"port" : 27017,

"version" : "3.2.8",

"gitVersion" : "ed70e33130c977bda0024c125b56d159573dbaf0"

},

"ok" : 1

}

> db.students.find({name: {$gt: "student5000"}}).count()

5551

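The count is 5551 because names are compared as strings, character by character. student5001 to student9999 (4999 names), student501 to student999 (499), student51 to student99 (49), and student6 to student9 (4) all sort after student5000, giving 4999 + 499 + 49 + 4 = 5551.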
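The count can be checked by rebuilding the same 10,000 names and sorting them as an index on name would. A binary search then finds the first key greater than "student5000", which is the lower end of the index bounds that explain() reports for a $gt query. Below is a minimal sketch in plain JavaScript. upperBound is a hypothetical helper. The sketch assumes JavaScript's default string sort matches MongoDB's ordering, which holds for these plain ASCII strings.

```javascript
// Reproduce the count of db.students.find({name: {$gt: "student5000"}}).
// Names are strings, so they are compared character by character, not numerically.
const nameKeys = [];
for (let i = 1; i <= 10000; i++) nameKeys.push("student" + i);

// An index on name keeps its keys sorted; the default sort compares UTF-16 code units,
// which matches byte order for these ASCII names.
nameKeys.sort();

// Binary search for the position of the first key strictly greater than `bound`.
function upperBound(sorted, bound) {
  let lo = 0;
  let hi = sorted.length;
  while (lo < hi) {
    const mid = (lo + hi) >>> 1;
    if (sorted[mid] <= bound) lo = mid + 1;
    else hi = mid;
  }
  return lo;
}

const start = upperBound(nameKeys, "student5000");
const count = nameKeys.length - start;
console.log(count); // 5551

// Group the matching names by how many digits follow "student".
const perLength = {};
for (const n of nameKeys.slice(start)) {
  const digits = n.length - "student".length;
  perLength[digits] = (perLength[digits] || 0) + 1;
}
console.log(perLength); // { '1': 4, '2': 49, '3': 499, '4': 4999 }
```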

MongoDB options

mongod can be started with a configuration file passed via -f, so the options you need can be written into that file, without the leading '--'.
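As a sketch, the hypothetical helper below turns the startup command used earlier into lines for such a file. It assumes the legacy `key = value` configuration format, in which a flag without a value is written as `= true`. MongoDB 2.6 and later also accept YAML configuration files, which use different, nested option names. The helper only understands the `--option=value` form.

```javascript
// Hypothetical helper: turn mongod command-line options into legacy
// "key = value" configuration-file lines. Only "--opt" and "--opt=value" are handled;
// the "--opt value" form (value as a separate argument) is not.
function toConfigLines(args) {
  const lines = [];
  for (const arg of args) {
    if (!arg.startsWith("--")) continue; // skip the program path and other non-options
    const body = arg.slice(2);           // drop the leading "--"
    const eq = body.indexOf("=");
    if (eq === -1) lines.push(`${body} = true`); // bare flag
    else lines.push(`${body.slice(0, eq)} = ${body.slice(eq + 1)}`);
  }
  return lines;
}

const cmd = "/usr/local/mongodb/bin/mongod --dbpath=/usr/local/mongodb/data " +
  "--logpath=/usr/local/mongodb/log --logappend --port=27017 --fork --rest --httpinterface";

console.log(toConfigLines(cmd.split(" ")).join("\n"));
// dbpath = /usr/local/mongodb/data
// logpath = /usr/local/mongodb/log
// logappend = true
// port = 27017
// fork = true
// rest = true
// httpinterface = true
```

If the output were saved as, say, /usr/local/mongodb/mongod.conf (a path chosen here only for illustration), the server would be started with `mongod -f /usr/local/mongodb/mongod.conf`.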

[root@why ~]# /usr/local/mongodb/bin/mongod --help

Options:

General options: #general options

-h [ --help ] show this usage information

--version show version information

-f [ --config ] arg configuration file specifying

additional options

-v [ --verbose ] [=arg(=v)] be more verbose (include multiple times

for more verbosity e.g. -vvvvv)

--quiet quieter output

--port arg specify port number - 27017 by default #listening port, 27017 by default

--bind_ip arg comma separated list of ip addresses to #listening addresses, all local IPs by default

listen on - all local ips by default

--ipv6 enable IPv6 support (disabled by

default)

--maxConns arg max number of simultaneous connections #maximum number of concurrent connections

- 1000000 by default

--logpath arg log file to send write to instead of #path of the log file

stdout - has to be a file, not

directory

--syslog log to system's syslog facility instead

of file or stdout

--syslogFacility arg syslog facility used for mongodb syslog #syslog facility used when logging to syslog

message

--logappend append to logpath instead of #append to the existing log file instead of overwriting it

over-writing

--logRotate arg set the log rotation behavior

(rename|reopen)

--timeStampFormat arg Desired format for timestamps in log

messages. One of ctime, iso8601-utc or

iso8601-local

--pidfilepath arg full path to pidfile (if not set, no

pidfile is created)

--keyFile arg private key for cluster authentication #path of the key file used for internal cluster authentication

--noauth run without security

--setParameter arg Set a configurable parameter #set a server parameter

--httpinterface enable http interface #enable the built-in HTTP interface; without it, port 28017 is not opened

--clusterAuthMode arg Authentication mode used for cluster

authentication. Alternatives are

(keyFile|sendKeyFile|sendX509|x509)

--nounixsocket disable listening on unix sockets #do not listen on a UNIX domain socket

--unixSocketPrefix arg alternative directory for UNIX domain

sockets (defaults to /tmp)

--filePermissions arg permissions to set on UNIX domain

socket file - 0700 by default

--fork fork server process #run mongod in the background; in a configuration file set it to true or false

--auth run with security #require clients to authenticate

--jsonp allow JSONP access via http (has

security implications)

--rest turn on simple rest api

--slowms arg (=100) value of slow for profile and console #slow-operation threshold in ms; operations that take longer count as slow

log

--profile arg 0=off 1=slow, 2=all #profiling level: 0 off, 1 slow operations only, 2 all operations; also useful for debugging

--cpu periodically show cpu and iowait #periodically show CPU and iowait utilization, mainly for debugging

utilization

--sysinfo print some diagnostic system #print system diagnostic information, mainly for debugging

information

--noIndexBuildRetry don't retry any index builds that were

interrupted by shutdown

--noscripting disable scripting engine

--notablescan do not allow table scans

--shutdown kill a running server (for init

scripts)

Replication options: #replication options

--oplogSize arg size to use (in MB) for replication op #oplog size in MB

log. default is 5% of disk space (i.e.

large is good)

Master/slave options (old; use replica sets instead): #master/slave replication options (legacy)

--master master mode

--slave slave mode

--source arg when slave: specify master as

<server:port>

--only arg when slave: specify a single database

to replicate

--slavedelay arg specify delay (in seconds) to be used

when applying master ops to slave

--autoresync automatically resync if slave data is

stale

Replica set options: #replica set options

--replSet arg arg is <setname>[/<optionalseedhostlist #replica set name

>]

--replIndexPrefetch arg specify index prefetching behavior (if #index prefetching on secondaries only: none = no prefetching, _id_only = only the _id index, all = all indexes

secondary) [none|_id_only|all]

--enableMajorityReadConcern enables majority readConcern

Sharding options: #sharding options

--configsvr declare this is a config db of a

cluster; default port 27019; default

dir /data/configdb

--configsvrMode arg Controls what config server protocol is

in use. When set to "sccc" keeps server

in legacy SyncClusterConnection mode

even when the service is running as a

replSet

--shardsvr declare this is a shard db of a

cluster; default port 27018

SSL options:

--sslOnNormalPorts use ssl on configured ports

--sslMode arg set the SSL operation mode

(disabled|allowSSL|preferSSL|requireSSL

)

--sslPEMKeyFile arg PEM file for ssl

--sslPEMKeyPassword arg PEM file password

--sslClusterFile arg Key file for internal SSL

authentication

--sslClusterPassword arg Internal authentication key file

password

--sslCAFile arg Certificate Authority file for SSL

--sslCRLFile arg Certificate Revocation List file for

SSL

--sslDisabledProtocols arg Comma separated list of TLS protocols

to disable [TLS1_0,TLS1_1,TLS1_2]

--sslWeakCertificateValidation allow client to connect without

presenting a certificate

--sslAllowConnectionsWithoutCertificates

allow client to connect without

presenting a certificate

--sslAllowInvalidHostnames Allow server certificates to provide

non-matching hostnames

--sslAllowInvalidCertificates allow connections to servers with

invalid certificates

--sslFIPSMode activate FIPS 140-2 mode at startup

Storage options:

--storageEngine arg what storage engine to use - defaults

to wiredTiger if no data files present

--dbpath arg directory for datafiles - defaults to

/data/db

--directoryperdb each database will be stored in a

separate directory

--noprealloc disable data file preallocation - will

often hurt performance

--nssize arg (=16) .ns file size (in MB) for new databases

--quota limits each database to a certain

number of files (8 default)

--quotaFiles arg number of files allowed per db, implies

--quota

--smallfiles use a smaller default file size

--syncdelay arg (=60) seconds between disk syncs (0=never,

but not recommended)

--upgrade upgrade db if needed

--repair run repair on all dbs #repair all databases at startup, e.g. after a power failure or an unclean shutdown

--repairpath arg root directory for repair files -

defaults to dbpath

--journal enable journaling

--nojournal disable journaling (journaling is on by #disable the journal, a write-ahead log of data writes similar to a transaction log

default for 64 bit)

--journalOptions arg journal diagnostic options

--journalCommitInterval arg how often to group/batch commit (ms) #journal group-commit interval; batching commits turns random writes into sequential I/O

WiredTiger options:

--wiredTigerCacheSizeGB arg maximum amount of memory to allocate

for cache; defaults to 1/2 of physical

RAM

--wiredTigerStatisticsLogDelaySecs arg (=0)

seconds to wait between each write to a

statistics file in the dbpath; 0 means

do not log statistics

--wiredTigerJournalCompressor arg (=snappy)

use a compressor for log records

[none|snappy|zlib]

--wiredTigerDirectoryForIndexes Put indexes and data in different

directories

--wiredTigerCollectionBlockCompressor arg (=snappy)

block compression algorithm for

collection data [none|snappy|zlib]

--wiredTigerIndexPrefixCompression arg (=1)

use prefix compression on row-store

leaf pages

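MongoDB replication

MongoDB offers two replication modes. In both, a group of MongoDB instances serves the same data set and every node holds the same data. The first is the traditional master/slave mode, which is now deprecated. The second is the replica set, which additionally provides automatic failover and recovery.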

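A replica set has exactly one primary. The primary records its data modifications in the oplog, and the secondaries replicate from it. If the primary goes down, the remaining members elect a new primary, with member priority influencing the choice. Because an election requires a majority of the voting members, a replica set needs at least three members; with three, the split brain that two nodes could run into cannot occur. Alternatively, two data-bearing instances can be combined with a third machine that only runs a mongod instance configured as an arbiter, which votes but does not replicate data.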
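The need for a third member follows from the majority rule. A primary can be elected only while more than half of the voting members can reach each other. After a network partition, at most one side can hold such a majority. The arithmetic is sketched below; the function names are illustrative.

```javascript
// Election arithmetic for a replica set: a primary can only be elected
// while a strict majority of the voting members can see each other.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// How many voting members may fail while a primary can still be elected.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [2, 3, 4, 5]) {
  console.log(`${n} voting members: majority ${majority(n)}, tolerates ${faultTolerance(n)} failure(s)`);
}
// 2 voting members: majority 2, tolerates 0 failure(s)
// 3 voting members: majority 2, tolerates 1 failure(s)
// 4 voting members: majority 3, tolerates 1 failure(s)
// 5 voting members: majority 3, tolerates 2 failure(s)
```

Two members alone cannot survive the loss of either one. Three members tolerate one failure, and an arbiter counts as one of the three. Adding a fourth voting member does not improve fault tolerance.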

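Replica set member types by priority:

- Priority 0 member: a standby that is never elected primary but still votes in elections. It is used for off-site disaster recovery, e.g. with the primary and one secondary in one data center and the priority 0 member in another. It can serve reads, but usually does not.

- Hidden member: like a priority 0 member, but invisible to clients.

- Delayed member: like a priority 0 member, but its data deliberately lags behind the primary by a fixed amount of time.

- Arbiter: like a priority 0 member, but it holds no data and only votes in elections.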
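These member types correspond to fields of each entry in the replica set configuration document passed to rs.initiate() or rs.reconfig(). The hypothetical helper below builds such entries as a sketch. It assumes the MongoDB 3.2 field names (slaveDelay was later renamed secondaryDelaySecs) and an arbitrary one-hour default delay. The resulting object has the same shape as the config document used with rs.initiate() in this section, with the third host turned into an arbiter for illustration.

```javascript
// Hypothetical helper that builds one entry of a replica set configuration's
// members array. Field names follow MongoDB 3.2: priority, hidden, slaveDelay, arbiterOnly.
function member(id, host, kind = "normal", delaySecs = 3600) {
  const m = { _id: id, host: host };
  switch (kind) {
    case "normal":
      break; // default priority 1, eligible to become primary
    case "priority0":
      m.priority = 0; // votes and holds data, never becomes primary
      break;
    case "hidden":
      m.priority = 0; // hidden members must have priority 0
      m.hidden = true; // invisible to clients
      break;
    case "delayed":
      m.priority = 0; // delayed members must have priority 0
      m.hidden = true; // usually hidden too, so clients do not read stale data
      m.slaveDelay = delaySecs; // lags the primary by this many seconds
      break;
    case "arbiter":
      m.arbiterOnly = true; // votes only, stores no data
      break;
    default:
      throw new Error("unknown member kind: " + kind);
  }
  return m;
}

const config = {
  _id: "test",
  members: [
    member(0, "192.168.0.130:27017"),
    member(1, "192.168.0.201:27017"),
    member(2, "192.168.0.202:27017", "arbiter"),
  ],
};
console.log(JSON.stringify(config));
```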

Replication architecture: heartbeats detect whether members are alive, and the oplog is used to replicate data.

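The oplog is a fixed-size (capped) collection stored in the local database and initialized when the database first starts. By default it takes 5% of the free space on the filesystem, or 1 GB if that would be smaller. Because the oplog therefore cannot hold the complete history of the data, it cannot be used to synchronize a full data set. A new member must first perform an initial sync that copies all data, and then stays in sync by applying the oplog. The size can also be set explicitly with the oplogSize option.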
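The default sizing rule can be written as a small function. This sketch follows the 5% rule and the 1 GB floor described above. The 50 GB ceiling is an assumption taken from the MongoDB 3.x documentation for 64-bit Linux, and other platforms and versions may differ. The --oplogSize option (in MB) overrides the whole calculation.

```javascript
// Default oplog size: 5% of the filesystem's free space, at least 1 GB.
// ASSUMPTION: the 50 GB upper bound is taken from the MongoDB 3.x docs for 64-bit Linux.
const GB = 1024 * 1024 * 1024;

function defaultOplogBytes(freeDiskBytes) {
  const fivePercent = freeDiskBytes / 20; // 5% of the free space
  return Math.min(Math.max(fivePercent, GB), 50 * GB);
}

for (const freeGB of [10, 200, 2000]) {
  console.log(`${freeGB} GB free -> ${defaultOplogBytes(freeGB * GB) / GB} GB oplog`);
}
// 10 GB free -> 1 GB oplog
// 200 GB free -> 10 GB oplog
// 2000 GB free -> 50 GB oplog
```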

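Data synchronization therefore involves an initial sync, rollback and catch-up, and chunk migration. An initial sync is usually required when a new instance has no data at all, or when an instance has lost the replica set's replication history.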
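The choice between an initial sync and oplog catch-up can be sketched as a comparison of timestamps. This is a deliberately simplified model built around a hypothetical syncMode function. It ignores rollback and chunk migration, and it treats oplog timestamps as plain numbers.

```javascript
// Simplified model of how a secondary decides how to sync. The oplog is capped,
// so only operations in the window [oplogFirst, oplogLast] are still available.
// Timestamps are plain numbers here; real oplog entries use BSON Timestamps.
function syncMode(memberLastApplied, oplogFirst, oplogLast) {
  if (memberLastApplied === null) {
    return "initial sync"; // new member with no data at all
  }
  if (memberLastApplied < oplogFirst) {
    return "initial sync"; // its history has already been overwritten in the oplog
  }
  if (memberLastApplied >= oplogLast) {
    return "up to date";
  }
  return "catch up from oplog"; // replay the missing operations from the oplog
}

console.log(syncMode(null, 100, 200)); // initial sync
console.log(syncMode(50, 100, 200));   // initial sync
console.log(syncMode(150, 100, 200));  // catch up from oplog
```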

The local database stores the oplog (the oplog.rs collection) and replication metadata, and is itself never replicated.

[root@why ~]# /usr/local/mongodb/bin/mongod --dbpath=/usr/local/mongodb/data --logpath=/usr/local/mongodb/log --logappend --port=27017 --fork --rest --httpinterface --replSet=test #--replSet is required; it names the replica set

about to fork child process, waiting until server is ready for connections.

forked process: 22896

child process started successfully, parent exiting

[root@why ~]# /usr/local/mongodb/bin/mongo --host 192.168.0.130

MongoDB shell version: 3.2.8

connecting to: 192.168.0.130:27017/test

> rs.help()

rs.status() { replSetGetStatus : 1 } checks repl set status #get the replica set status

rs.initiate() { replSetInitiate : null } initiates set with default settings #initialize the replica set with default settings

rs.initiate(cfg) { replSetInitiate : cfg } initiates set with configuration cfg #initialize the replica set with the given configuration document

rs.conf() get the current configuration object from local.system.replset #get the current replica set configuration

rs.reconfig(cfg) updates the configuration of a running replica set with cfg (disconnects)

rs.add(hostportstr) add a new member to the set with default attributes (disconnects)

rs.add(membercfgobj) add a new member to the set with extra attributes (disconnects)

rs.addArb(hostportstr) add a new member which is arbiterOnly:true (disconnects)

rs.stepDown([stepdownSecs, catchUpSecs]) step down as primary (disconnects)

rs.syncFrom(hostportstr) make a secondary sync from the given member

rs.freeze(secs) make a node ineligible to become primary for the time specified

rs.remove(hostportstr) remove a host from the replica set (disconnects)

rs.slaveOk() allow queries on secondary nodes

rs.printReplicationInfo() check oplog size and time range

rs.printSlaveReplicationInfo() check replica set members and replication lag

db.isMaster() check who is primary

reconfiguration helpers disconnect from the database so the shell will display

an error, even if the command succeeds.

> rs.status()

{

"info" : "run rs.initiate(...) if not yet done for the set",

"ok" : 0,

"errmsg" : "no replset config has been received",

"code" : 94

}

> config = { _id:"test", members:[{_id:0,host:"192.168.0.130:27017"},{_id:1,host:"192.168.0.201:27017"},{_id:2,host:"192.168.0.202:27017"}]} #build the config document listing every member of the set

{

"_id" : "test",

"members" : [

{

"_id" : 0,

"host" : "192.168.0.130:27017"

},

{

"_id" : 1,

"host" : "192.168.0.201:27017"

},

{

"_id" : 2,

"host" : "192.168.0.202:27017"

}

]

}

> rs.initiate(config) #initiate the replica set with this config

{ "ok" : 1 }

test:OTHER> rs.status() #replica set status

{

"set" : "test",

"date" : ISODate("2017-01-06T17:43:39.586Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"members" : [

{

"_id" : 0,

"name" : "192.168.0.130:27017",

"health" : 1, #0 if the member is unreachable or offline, 1 otherwise

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 1797,

"optime" : {

"ts" : Timestamp(1483724575, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-01-06T17:42:55Z"),

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1483724574, 1),

"electionDate" : ISODate("2017-01-06T17:42:54Z"),

"configVersion" : 1,

"self" : true #true for the member this shell is connected to

},

{

"_id" : 1,

"name" : "192.168.0.201:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 55,

"optime" : {

"ts" : Timestamp(1483724575, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-01-06T17:42:55Z"),

"lastHeartbeat" : ISODate("2017-01-06T17:43:38.913Z"),

"lastHeartbeatRecv" : ISODate("2017-01-06T17:43:38.603Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.0.130:27017",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.0.202:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY", #shows STARTUP2 while the member is still doing its initial sync

"uptime" : 55,

"optime" : {

"ts" : Timestamp(1483724575, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2017-01-06T17:42:55Z"),

"lastHeartbeat" : ISODate("2017-01-06T17:43:38.924Z"),

"lastHeartbeatRecv" : ISODate("2017-01-06T17:43:38.822Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.0.130:27017",

"configVersion" : 1

}

],

"ok" : 1

}

To add another member later, run rs.add("192.168.0.203") on the primary.

Checking on a secondary

[root@why-2 mongodb]# /usr/local/mongodb/bin/mongo --host 192.168.0.202

MongoDB shell version: 3.2.8

connecting to: 192.168.0.202:27017/test

test:SECONDARY> show dbs

2017-03-04T17:18:32.332+0800 E QUERY [thread1] Error: listDatabases failed:{ "ok" : 0, "errmsg" : "not master and slaveOk=false", "code" : 13435 } :

_getErrorWithCode@src/mongo/shell/utils.js:25:13

Mongo.prototype.getDBs@src/mongo/shell/mongo.js:62:1

shellHelper.show@src/mongo/shell/utils.js:761:19

shellHelper@src/mongo/shell/utils.js:651:15

test:SECONDARY> db.getMongo().setSlaveOk()

test:SECONDARY> show dbs

local 0.000GB

testdb 0.000GB

test:SECONDARY> use testdb

switched to db testdb

test:SECONDARY> db.students.find() #the data replicated from the primary is visible

{ "_id" : ObjectId("586f78ade9f2c32fab697d40"), "name" : "student1", "age" : 1, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d41"), "name" : "student2", "age" : 2, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d42"), "name" : "student3", "age" : 3, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d43"), "name" : "student4", "age" : 4, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d44"), "name" : "student5", "age" : 0, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d45"), "name" : "student6", "age" : 1, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d46"), "name" : "student7", "age" : 2, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d47"), "name" : "student8", "age" : 3, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d48"), "name" : "student9", "age" : 4, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d49"), "name" : "student10", "age" : 0, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4a"), "name" : "student11", "age" : 1, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4b"), "name" : "student12", "age" : 2, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4c"), "name" : "student13", "age" : 3, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4d"), "name" : "student14", "age" : 4, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4e"), "name" : "student15", "age" : 0, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d4f"), "name" : "student16", "age" : 1, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d50"), "name" : "student17", "age" : 2, "school" : "Num2School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d51"), "name" : "student18", "age" : 3, "school" : "Num0School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d52"), "name" : "student19", "age" : 4, "school" : "Num1School" }

{ "_id" : ObjectId("586f78ade9f2c32fab697d53"), "name" : "student20", "age" : 0, "school" : "Num2School" }

Type "it" for more

test:SECONDARY> rs.isMaster()

{

"hosts" : [

"192.168.0.130:27017",

"192.168.0.201:27017",

"192.168.0.202:27017"

],

"setName" : "test",

"setVersion" : 1,

"ismaster" : false,

"secondary" : true,

"primary" : "192.168.0.130:27017",

"me" : "192.168.0.202:27017",

"maxBsonObjectSize" : 16777216,

"maxMessageSizeBytes" : 48000000,

"maxWriteBatchSize" : 1000,

"localTime" : ISODate("2017-03-04T09:26:26.378Z"),

"maxWireVersion" : 4,

"minWireVersion" : 0,

"ok" : 1

}

Write test

test:PRIMARY> use testdb #without this, the shell writes to the default test database

switched to db testdb

test:PRIMARY> db.classes.insert({class:"One",numbers:50})

WriteResult({ "nInserted" : 1 })

The primary accepts writes. On a secondary:

test:SECONDARY> use testdb

switched to db testdb

test:SECONDARY> db.classes.find()

{ "_id" : ObjectId("586fdc6d7b57d9bc65607134"), "class" : "One", "numbers" : 50 }

test:SECONDARY> db.classes.insert({class:"Two",numbers:66})

WriteResult({ "writeError" : { "code" : 10107, "errmsg" : "not master" } })

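The secondary can read the data but rejects writes.

Failover test: making the primary step down

test:PRIMARY> rs.stepDown() #force the current primary to step down; the shell's connection is closed, so the error below is expected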

2017-01-07T04:00:41.716+0800 E QUERY [thread1] Error: error doing query: failed: network error while attempting to run command 'replSetStepDown' on host '192.168.0.130:27017' :

DB.prototype.runCommand@src/mongo/shell/db.js:135:1

DB.prototype.adminCommand@src/mongo/shell/db.js:153:16

rs.stepDown@src/mongo/shell/utils.js:1182:12

@(shell):1:1

2017-01-07T04:00:41.724+0800 I NETWORK [thread1] trying reconnect to 192.168.0.130:27017 (192.168.0.130) failed

2017-01-07T04:00:41.726+0800 I NETWORK [thread1] reconnect 192.168.0.130:27017 (192.168.0.130) ok

test:SECONDARY> rs.status()

{

"set" : "test",

"date" : ISODate("2017-01-06T20:01:07.336Z"),

"myState" : 2,

"term" : NumberLong(2),

"syncingTo" : "192.168.0.201:27017",

"heartbeatIntervalMillis" : NumberLong(2000),

"members" : [

{

"_id" : 0,

"name" : "192.168.0.130:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 10045,

"optime" : {

"ts" : Timestamp(1488627088, 2),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2017-03-04T11:31:28Z"),

"syncingTo" : "192.168.0.201:27017",

"configVersion" : 1,

"self" : true

},

{

"_id" : 1,

"name" : "192.168.0.201:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 8303,

"optime" : {

"ts" : Timestamp(1488627088, 2),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2017-03-04T11:31:28Z"),

"lastHeartbeat" : ISODate("2017-01-06T20:01:06.646Z"),

"lastHeartbeatRecv" : ISODate("2017-01-06T20:01:05.894Z"),

"pingMs" : NumberLong(0),

"electionTime" : Timestamp(1488627088, 1),

"electionDate" : ISODate("2017-03-04T11:31:28Z"),

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.0.202:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 8303,

"optime" : {

"ts" : Timestamp(1488627088, 2),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2017-03-04T11:31:28Z"),

"lastHeartbeat" : ISODate("2017-01-06T20:01:06.605Z"),

"lastHeartbeatRecv" : ISODate("2017-01-06T20:01:05.823Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.0.130:27017",

"configVersion" : 1

}

],

"ok" : 1

}

192.168.0.201 has become the PRIMARY, and 192.168.0.130 is now a SECONDARY.

test:SECONDARY> db.printReplicationInfo() #show the oplog size and the time range it covers

configured oplog size: 990MB

log length start to end: 4904127secs (1362.26hrs)

oplog first event time: Sat Jan 07 2017 01:42:43 GMT+0800 (CST)

oplog last event time: Sat Mar 04 2017 19:58:10 GMT+0800 (CST)

now: Sat Mar 04 2017 19:59:10 GMT+0800 (CST)

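Situations that trigger an election:

- a new replica set is initiated

- a secondary loses contact with the primary

- the primary steps down

- a secondary with a higher priority than the current primary becomes available

Factors that decide the outcome:

- heartbeats (which members are reachable)

- member priority

- data freshness: a member whose latest oplog timestamp matches the primary's last write is preferred over one that lags behind

A member becomes primary when it receives the votes of a strict majority (more than half) of the voting members.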
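The rules above can be combined into a deliberately simplified model. The real protocol (protocolVersion 1, with terms, election timeouts and priority takeover) has more steps; this Python sketch only captures majority, priority and data freshness. The Member class and elect() are hypothetical, although the field names mirror rs.conf() and rs.status().

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Member:
    host: str
    priority: float        # like members[n].priority; 0 means never primary
    optime_secs: int       # seconds part of the last applied oplog Timestamp
    reachable: bool = True
    arbiter: bool = False
    votes: int = 1


def elect(members: List[Member]) -> Optional[str]:
    """Return the host that would become primary, or None if no election can succeed."""
    total_votes = sum(m.votes for m in members)
    reachable_votes = sum(m.votes for m in members if m.reachable)
    if reachable_votes <= total_votes // 2:
        return None  # no strict majority reachable, so nobody can win

    data_bearing = [m for m in members if m.reachable and not m.arbiter]
    if not data_bearing:
        return None
    newest = max(m.optime_secs for m in data_bearing)

    # Candidates must be electable (priority > 0) and fully caught up.
    candidates = [m for m in data_bearing if m.priority > 0 and m.optime_secs == newest]
    if not candidates:
        return None
    # Highest priority wins; in this model ties go to the first member listed.
    return max(candidates, key=lambda m: m.priority).host


if __name__ == "__main__":
    members = [
        Member("192.168.0.130:27017", priority=1, optime_secs=1488630394),
        Member("192.168.0.201:27017", priority=1, optime_secs=1488630394),
        Member("192.168.0.202:27017", priority=2, optime_secs=1488630394),
    ]
    print(elect(members))
```

With the priorities used later in this section (1, 1 and 2), the model picks 192.168.0.202:27017. In a real set, ties are decided by whichever eligible member calls the election first, not by list order.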

Replica set configuration document

{

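_id: <string>, #string: the replica set name

version: <int>,

protocolVersion: <number>,

members: [ #array of member documents

{

_id: <int>,

host: <string>, #hostname or IP, with port

arbiterOnly: <boolean>, #whether the member is an arbiter

buildIndexes: <boolean>, #whether the member builds indexes

hidden: <boolean>, #whether the member is hidden from clients

priority: <number>, #election priority, default 1

tags: <document>, #tag document, used by read preferences and write concerns

slaveDelay: <int>, #replication delay in seconds (0 = not delayed)

votes: <number> #number of votes, 0 or 1

},

...

],

settings: {

chainingAllowed : <boolean>, #whether secondaries may replicate from other secondaries (chained replication)

heartbeatIntervalMillis : <int>,

heartbeatTimeoutSecs: <int>, #heartbeat timeout

electionTimeoutMillis : <int>,

getLastErrorModes : <document>, #custom write concern modes defined with tags

getLastErrorDefaults : <document> #default write concern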

}

}

Full details are in the documentation at https://docs.mongodb.com/v3.2/reference/replica-configuration/.
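Several of the documented constraints on this document can be checked before calling rs.reconfig(). The following is a hypothetical pre-flight checker in Python; it encodes only a subset of the 3.2-era rules (at most 50 members, at most 7 voting members, priority between 0 and 1000, votes 0 or 1, hidden and delayed members must have priority 0), and mongod itself remains the authoritative validator.

```python
from typing import Any, Dict, List


def check_replset_config(cfg: Dict[str, Any]) -> List[str]:
    """Return human-readable problems found in a replica set config document."""
    problems: List[str] = []
    members = cfg.get("members", [])

    if len(members) > 50:
        problems.append("a replica set can have at most 50 members")
    if sum(1 for m in members if m.get("votes", 1) > 0) > 7:
        problems.append("a replica set can have at most 7 voting members")
    if len({m["_id"] for m in members}) != len(members):
        problems.append("member _id values must be unique")
    if len({m["host"] for m in members}) != len(members):
        problems.append("member host values must be unique")

    for m in members:
        host = m["host"]
        priority = m.get("priority", 1)  # default priority is 1
        if not 0 <= priority <= 1000:
            problems.append(f"{host}: priority must be between 0 and 1000")
        if m.get("votes", 1) not in (0, 1):
            problems.append(f"{host}: votes must be 0 or 1")
        if m.get("hidden", False) and priority != 0:
            problems.append(f"{host}: hidden members must have priority 0")
        if m.get("slaveDelay", 0) > 0 and priority != 0:
            problems.append(f"{host}: delayed members must have priority 0")
    return problems
```

The config used in this section (three members, one with priority 2) passes; a hidden member left at the default priority 1 would be reported.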

The priority setting as described in the official documentation:

members[n].priority

Optional.

Type: Number, between 0 and 1000.

Default: 1.0

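The default priority is 1. It can be changed per member by editing the members array of the config and applying it with rs.reconfig():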

test:PRIMARY> configold=rs.conf() #copy the current config into a variable for editing

{

"_id" : "test",

"version" : 1,

"protocolVersion" : NumberLong(1),

"members" : [

{

"_id" : 0,

"host" : "192.168.0.130:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "192.168.0.201:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "192.168.0.202:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("586fd7118c4062032187ec74")

}

}

test:PRIMARY> configold.members[2].priority=2 #raise the priority of members[2] (192.168.0.202) to 2

2

test:PRIMARY> rs.reconfig(configold) #apply the new config; this must be run on the primary

{ "ok" : 1 }

test:PRIMARY> rs.status()

2017-03-04T20:53:32.862+0800 I NETWORK [thread1] Socket say send() errno:32 Broken pipe 192.168.0.130:27017

2017-03-04T20:53:32.966+0800 E QUERY [thread1] Error: socket exception [SEND_ERROR] for 192.168.0.130:27017 :

DB.prototype._runCommandImpl@src/mongo/shell/db.js:117:16

DB.prototype.runCommand@src/mongo/shell/db.js:128:19

DB.prototype.adminCommand@src/mongo/shell/db.js:153:16

rs.status@src/mongo/shell/utils.js:1091:12

@(shell):1:1

2017-03-04T20:53:32.969+0800 I NETWORK [thread1] trying reconnect to 192.168.0.130:27017 (192.168.0.130) failed

2017-03-04T20:53:32.973+0800 I NETWORK [thread1] reconnect 192.168.0.130:27017 (192.168.0.130) ok

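192.168.0.130 is no longer the primary: it keeps the default priority 1, while the member with _id 2 now has priority 2, so the reconfiguration triggered an election and that member became the primary. The old primary closed client connections when it stepped down, which is why the shell reported a socket error and then reconnected.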

test:SECONDARY> rs.status()

{

"set" : "test",

"date" : ISODate("2017-03-04T12:53:52.197Z"),

"myState" : 2,

"term" : NumberLong(4),

"syncingTo" : "192.168.0.202:27017",

"heartbeatIntervalMillis" : NumberLong(2000),

"members" : [

{

"_id" : 0,

"name" : "192.168.0.130:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 4909210,

"optime" : {

"ts" : Timestamp(1488630394, 2),

"t" : NumberLong(4)

},

"optimeDate" : ISODate("2017-03-04T12:26:34Z"),

"syncingTo" : "192.168.0.202:27017",

"configVersion" : 2,

"self" : true

},

{

"_id" : 1,

"name" : "192.168.0.201:27017",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 4907468,

"optime" : {

"ts" : Timestamp(1488630394, 2),

"t" : NumberLong(4)

},

"optimeDate" : ISODate("2017-03-04T12:26:34Z"),

"lastHeartbeat" : ISODate("2017-03-04T12:53:51.095Z"),

"lastHeartbeatRecv" : ISODate("2017-03-04T12:53:51.056Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.0.202:27017",

"configVersion" : 2

},

{

"_id" : 2,

"name" : "192.168.0.202:27017",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY", #the member with _id 2 is now the primary

"uptime" : 4907468,

"optime" : {

"ts" : Timestamp(1488630394, 2),

"t" : NumberLong(4)

},

"optimeDate" : ISODate("2017-03-04T12:26:34Z"),

"lastHeartbeat" : ISODate("2017-03-04T12:53:51.117Z"),

"lastHeartbeatRecv" : ISODate("2017-03-04T12:53:50.266Z"),

"pingMs" : NumberLong(1),

"electionTime" : Timestamp(1488630394, 1),

"electionDate" : ISODate("2017-03-04T12:26:34Z"),

"configVersion" : 2

}

],

"ok" : 1

}

test:SECONDARY> rs.conf()

{

"_id" : "test",

"version" : 2,

"protocolVersion" : NumberLong(1),

"members" : [

{

"_id" : 0,

"host" : "192.168.0.130:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "192.168.0.201:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 1,

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "192.168.0.202:27017",

"arbiterOnly" : false,

"buildIndexes" : true,

"hidden" : false,

"priority" : 2, #priority is now 2

"tags" : {

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

"settings" : {

"chainingAllowed" : true,

"heartbeatIntervalMillis" : 2000,

"heartbeatTimeoutSecs" : 10,

"electionTimeoutMillis" : 10000,

"getLastErrorModes" : {

},

"getLastErrorDefaults" : {

"w" : 1,

"wtimeout" : 0

},

"replicaSetId" : ObjectId("586fd7118c4062032187ec74")

}

}

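Configuring an arbiter

To add an arbiter, use rs.addArb(hostportstr). An existing member cannot be converted by editing its config: setting arbiterOnly and running rs.reconfig() is rejected, as the error below shows. Instead, remove the member with rs.remove(hostportstr) and add it back with rs.addArb(hostportstr).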

test:SECONDARY> configold=rs.conf()

test:SECONDARY> configold.members[1].arbiterOnly=true

true

test:PRIMARY> rs.reconfig(configold)

{

"ok" : 0,

"errmsg" : "New and old configurations differ in the setting of the arbiterOnly field for member 192.168.0.201:27017; to make this change, remove then re-add the member",

"code" : 103

}

Checking how far each secondary lags behind the primary

test:PRIMARY> rs.printSlaveReplicationInfo()

source: 192.168.0.130:27017

syncedTo: Sat Mar 04 2017 20:26:34 GMT+0800 (CST)

0 secs (0 hrs) behind the primary

source: 192.168.0.201:27017

syncedTo: Sat Mar 04 2017 20:26:34 GMT+0800 (CST)

0 secs (0 hrs) behind the primary
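The lag reported by rs.printSlaveReplicationInfo() is the difference between the primary's last oplog time and each secondary's. The same number can be derived from rs.status() output; the Python sketch below assumes the member documents have already been loaded into dicts (for example through a driver), with each Timestamp represented as a (seconds, increment) tuple. The function name is hypothetical.

```python
from typing import Any, Dict, List


def replication_lag(members: List[Dict[str, Any]]) -> Dict[str, int]:
    """Seconds each SECONDARY is behind the PRIMARY, from rs.status()-style member docs.

    optime.ts is a (seconds, increment) tuple, mirroring Timestamp(seconds, increment).
    """
    primary = next(m for m in members if m["stateStr"] == "PRIMARY")
    primary_secs = primary["optime"]["ts"][0]
    return {
        m["name"]: primary_secs - m["optime"]["ts"][0]
        for m in members
        if m["stateStr"] == "SECONDARY"
    }
```

With the optimes from the rs.status() output above (all Timestamp(1488630394, 2)), every secondary is 0 seconds behind, matching the printSlaveReplicationInfo() output.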

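MongoDB sharding

When a database node's disk, network, memory or CPU becomes the bottleneck, you can scale up or scale out. Scaling up means bigger hardware, which is not cost-effective; scaling out means sharding. Unlike MySQL or Redis, where sharding has to be implemented by external programs or by hand (for MySQL, for example with Gizzard, HiveDB, MySQL Proxy or Pyshards), MongoDB has a built-in sharding architecture.

A MongoDB sharded cluster contains three kinds of nodes: the router (mongos), which routes requests and returns the results; the config servers, which store the cluster metadata, such as which shard key ranges live on which shard; and the shards, which store and serve the sharded data.

All client requests go to the router. The router stores no data itself; it only forwards each request to the shard or shards that can handle it.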

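Sharding in MongoDB is done per collection. Documents are ordered by the shard key and divided by ranges of that key into chunks of up to a configured maximum size, and the chunks are distributed across the shards. For example, with age as the shard key and four shards, the first shard might hold ages 0-20, the second 21-40, the third 41-60 and the fourth everything above 60. Such a layout can leave the shards unevenly loaded; fixing that means adjusting the key ranges and migrating whole chunks between shards, rather than simply moving individual documents from a full shard to an emptier one.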
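The range lookup that mongos performs can be modeled with a sorted list of chunk boundaries. This Python sketch is an illustration, not MongoDB's implementation: the shard names and boundaries are hypothetical and mirror the age example, whereas in a real cluster the chunk ranges are kept on the config servers (the config.chunks collection).

```python
import bisect
from typing import List, Optional

# Hypothetical chunk layout for shard key "age", matching the example above.
# Chunk i covers LOWER_BOUNDS[i] <= age < LOWER_BOUNDS[i + 1]; the first chunk
# also takes everything below 0 ($minKey), the last everything above ($maxKey).
LOWER_BOUNDS = [0, 21, 41, 61]
CHUNK_SHARDS = ["shard1", "shard2", "shard3", "shard4"]


def chunk_index(age: int) -> int:
    """Index of the chunk whose range contains this shard key value."""
    return max(bisect.bisect_right(LOWER_BOUNDS, age) - 1, 0)


def shard_for(age: int) -> str:
    return CHUNK_SHARDS[chunk_index(age)]


def shards_for_range(low: int, high: int) -> List[str]:
    """Shards a query with low <= age <= high has to visit."""
    first, last = chunk_index(low), chunk_index(high)
    return sorted(set(CHUNK_SHARDS[first:last + 1]))


def targets(age: Optional[int]) -> List[str]:
    """Without the shard key in the query, every shard has to be asked."""
    return list(CHUNK_SHARDS) if age is None else [shard_for(age)]
```

In this model, rebalancing means editing LOWER_BOUNDS or CHUNK_SHARDS, which is why every document in a moved range has to be migrated together.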

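How to choose the shard key depends on the workload. Numeric values are usually split into ranges (range partitioning). For data such as region, where the number of people per region differs greatly, values can be grouped into lists (list partitioning), for example the three northeastern provinces as one group and Beijing as another; in MongoDB this can be approximated with tag ranges (sh.addTagRange). For products, new items tend to be the most popular, so range partitioning on an increasing key would send all of that traffic to the last shard; hashing a key such as the id (hashed sharding) spreads the load instead. In every case the goal is that writes are spread out across the shards while reads can be targeted at as few shards as possible.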
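The hot-last-shard effect of an increasing key, and how hashing avoids it, can be shown with a small simulation. MongoDB's hashed shard keys hash the field value into a 64-bit number and partition the hash space into ranges; this Python sketch uses hashlib.md5 for the hash purely for illustration and is not byte-compatible with MongoDB's hashed index. The ids and range boundaries are hypothetical.

```python
import bisect
import hashlib
from collections import Counter
from typing import Callable, Iterable

NUM_SHARDS = 4
# Hypothetical range layout chosen when ids 0..999 existed: four equal ranges.
RANGE_LOWER_BOUNDS = [0, 250, 500, 750]


def range_shard(product_id: int) -> int:
    """Range partitioning: the last range is open-ended, like a $maxKey chunk."""
    return max(bisect.bisect_right(RANGE_LOWER_BOUNDS, product_id) - 1, 0)


def hashed_shard(product_id: int) -> int:
    """Hash partitioning: split the 64-bit hash space into NUM_SHARDS equal ranges."""
    digest = hashlib.md5(str(product_id).encode()).digest()
    h = int.from_bytes(digest[:8], "little")  # 0 .. 2**64 - 1
    return (h * NUM_SHARDS) >> 64


def distribution(ids: Iterable[int], shard_fn: Callable[[int], int]) -> Counter:
    return Counter(shard_fn(i) for i in ids)


if __name__ == "__main__":
    new_products = range(1000, 2000)  # newer (hotter) items get larger ids
    print("range :", distribution(new_products, range_shard))
    print("hashed:", distribution(new_products, hashed_shard))
```

Under range partitioning all 1000 new ids land on the last shard, while the hashed version spreads them over all four. The trade-off is that a range query on a hashed key can no longer be targeted and must go to every shard.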

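Analytical questions such as "which product sold best in each time period" are better answered by a search engine or other external processing than by the database itself.

For queries exposed to users, create indexes, including compound indexes, that match the ways users actually query the data.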

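Setting up MongoDB sharding

In this test environment, first shut down the existing mongod and move its old data out of the way: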

/usr/local/mongodb/bin/mongod --dbpath=/usr/local/mongodb/data --shutdown

mv /usr/local/mongodb/data /usr/local/mongodb/data.old

mkdir /usr/local/mongodb/data

The setup below uses four machines: 192.168.0.130 is the config server, 192.168.0.201 is the router (mongos), and 192.168.0.202 and 192.168.0.203 are shard1 and shard2.

Starting the config server (with --configsvr, mongod listens on port 27019 by default)

[root@why ~]# /usr/local/mongodb/bin/mongod --dbpath=/usr/local/mongodb/data --logpath=/usr/local/mongodb/log --logappend --fork --rest --httpinterface --configsvr

about to fork child process, waiting until server is ready for connections.

forked process: 27249

child process started successfully, parent exiting

[root@why ~]# ss -nlptu | grep 27019

tcp LISTEN 0 128 *:27019 *:* users:(("mongod",27249,6))

Starting the router (mongos)

[root@why-1 ~]# /usr/local/mongodb/bin/mongos --help

--configdb arg   Connection string for communicating with config servers. Acceptable forms:

    CSRS: <config replset name>/<host1:port>,<host2:port>,[...]

    SCCC (deprecated): <host1:port>,<host2:port>,<host3:port>

    Single-node (for testing only): <host1:port>

[root@why-1 ~]# /usr/local/mongodb/bin/mongos --configdb=192.168.0.130:27019 #run in the foreground

2017-03-05T00:14:42.635+0800 W SHARDING [main] Running a sharded cluster with fewer than 3 config servers should only be done for testing purposes and is not recommended for production.

2017-03-05T00:14:42.794+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.

2017-03-05T00:14:42.794+0800 I CONTROL [main]

2017-03-05T00:14:42.799+0800 I SHARDING [mongosMain] MongoS version 3.2.8 starting: pid=11913 port=27017 64-bit host=why-1 (--help for usage)

2017-03-05T00:14:42.799+0800 I CONTROL [mongosMain] db version v3.2.8

2017-03-05T00:14:42.799+0800 I CONTROL [mongosMain] git version: ed70e33130c977bda0024c125b56d159573dbaf0

2017-03-05T00:14:42.799+0800 I CONTROL [mongosMain] OpenSSL version: OpenSSL 1.0.1e-fips 11 Feb 2013

2017-03-05T00:14:42.799+0800 I CONTROL [mongosMain] allocator: tcmalloc

2017-03-05T00:14:42.799+0800 I CONTROL [mongosMain] modules: none

2017-03-05T00:14:42.799+0800 I CONTROL [mongosMain] build environment:

2017-03-05T00:14:42.799+0800 I CONTROL [mongosMain] distmod: rhel62

2017-03-05T00:14:42.799+0800 I CONTROL [mongosMain] distarch: x86_64

2017-03-05T00:14:42.799+0800 I CONTROL [mongosMain] target_arch: x86_64

2017-03-05T00:14:42.799+0800 I CONTROL [mongosMain] options: { sharding: { configDB: "192.168.0.130:27019" } }

2017-03-05T00:14:42.804+0800 I SHARDING [mongosMain] Updating config server connection string to: 192.168.0.130:27019

2017-03-05T00:14:42.887+0800 I SHARDING [LockPinger] creating distributed lock ping thread for 192.168.0.130:27019 and process why-1:27017:1488644082:-1184523421 (sleeping for 30000ms)

2017-03-05T00:14:43.117+0800 I SHARDING [LockPinger] cluster 192.168.0.130:27019 pinged successfully at 2017-03-05T00:14:42.911+0800 by distributed lock pinger '192.168.0.130:27019/why-1:27017:1488644082:-1184523421', sleeping for 30000ms

2017-03-05T00:14:43.131+0800 I SHARDING [mongosMain] distributed lock 'configUpgrade/why-1:27017:1488644082:-1184523421' acquired for 'initializing config database to new format v6', ts : 58bae7f2855893f71318d268

2017-03-05T00:14:43.142+0800 I SHARDING [mongosMain] initializing config server version to 6

2017-03-05T00:14:43.142+0800 I SHARDING [mongosMain] writing initial config version at v6

2017-03-05T00:14:43.247+0800 I SHARDING [mongosMain] initialization of config server to v6 successful

2017-03-05T00:14:43.249+0800 I SHARDING [mongosMain] distributed lock 'configUpgrade/why-1:27017:1488644082:-1184523421' unlocked.

2017-03-05T00:14:43.573+0800 I SHARDING [Balancer] about to contact config servers and shards

2017-03-05T00:14:43.574+0800 I NETWORK [HostnameCanonicalizationWorker] Starting hostname canonicalization worker

2017-03-05T00:14:43.582+0800 I SHARDING [Balancer] config servers and shards contacted successfully

2017-03-05T00:14:43.582+0800 I SHARDING [Balancer] balancer id: why-1:27017 started

2017-03-05T00:14:43.644+0800 I NETWORK [mongosMain] waiting for connections on port 27017

2017-03-05T00:14:43.693+0800 I SHARDING [Balancer] distributed lock 'balancer/why-1:27017:1488644082:-1184523421' acquired for 'doing balance round', ts : 58bae7f3855893f71318d26b

2017-03-05T00:14:43.696+0800 I SHARDING [Balancer] distributed lock 'balancer/why-1:27017:1488644082:-1184523421' unlocked.

2017-03-05T00:14:43.801+0800 W NETWORK [HostnameCanonicalizationWorker] Failed to obtain address information for hostname why-1: Name or service not known

2017-03-05T00:14:53.722+0800 I SHARDING [Balancer] distributed lock 'balancer/why-1:27017:1488644082:-1184523421' acquired for 'doing balance round', ts : 58bae7fd855893f71318d26c

2017-03-05T00:14:53.729+0800 I SHARDING [Balancer] distributed lock 'balancer/why-1:27017:1488644082:-1184523421' unlocked.

2017-03-05T00:15:03.765+0800 I SHARDING [Balancer] distributed lock 'balancer/why-1:27017:1488644082:-1184523421' acquired for 'doing balance round', ts : 58bae807855893f71318d26d

2017-03-05T00:15:03.777+0800 I SHARDING [Balancer] distributed lock 'balancer/why-1:27017:1488644082:-1184523421' unlocked.

^C2017-03-05T00:15:04.771+0800 I CONTROL [signalProcessingThread] got signal 2 (Interrupt), will terminate after current cmd ends

2017-03-05T00:15:04.772+0800 W SHARDING [LockPinger] removing distributed lock ping thread '192.168.0.130:27019/why-1:27017:1488644082:-1184523421'

2017-03-05T00:15:04.916+0800 W SHARDING [LockPinger] Error encountered while stopping ping on why-1:27017:1488644082:-1184523421 :: caused by :: 17382 Can't use connection pool during shutdown

2017-03-05T00:15:04.916+0800 I SHARDING [signalProcessingThread] dbexit: rc:0

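Running mongos in the foreground shows how it connects to the config server; at this point no shards have been configured yet. After stopping it with Ctrl+C, start it in the background: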

[root@why-1 ~]# /usr/local/mongodb/bin/mongos --configdb=192.168.0.130:27019 --fork --logpath=/usr/local/mongodb/log --logappend

2017-03-05T00:25:24.816+0800 W SHARDING [main] Running a sharded cluster with fewer than 3 config servers should only be done for testing purposes and is not recommended for production.

about to fork child process, waiting until server is ready for connections.

forked process: 11967

child process started successfully, parent exiting

[root@why-1 ~]# ss -nlptu | grep 27017

tcp LISTEN 0 128 *:27017 *:* users:(("mongos",11967,12))

Checking the sharding status

[root@why-1 ~]# /usr/local/mongodb/bin/mongo --host 192.168.0.201

MongoDB shell version: 3.2.8

connecting to: 192.168.0.201:27017/test

Server has startup warnings:

2017-03-05T00:25:24.840+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.

2017-03-05T00:25:24.841+0800 I CONTROL [main]

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("58bae7f3855893f71318d269")

}

shards:

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

Starting the shards

Each shard here is just a normally started mongod; run the same command on both shard machines.

[root@why-2 mongodb]# /usr/local/mongodb/bin/mongod --dbpath=/usr/local/mongodb/data --logpath=/usr/local/mongodb/log --logappend --port=27017 --fork --rest --httpinterface

about to fork child process, waiting until server is ready for connections.

forked process: 11568

child process started successfully, parent exiting

Configuring the cluster through the router

mongos> sh.help()

sh.addShard( host ) server:port OR setname/server:port

sh.enableSharding(dbname) enables sharding on the database dbname

sh.shardCollection(fullName,key,unique) shards the collection

sh.splitFind(fullName,find) splits the chunk that find is in at the median

sh.splitAt(fullName,middle) splits the chunk that middle is in at middle

sh.moveChunk(fullName,find,to) move the chunk where 'find' is to 'to' (name of shard)

sh.setBalancerState( <bool on or not> ) turns the balancer on or off true=on, false=off

sh.getBalancerState() return true if enabled

sh.isBalancerRunning() return true if the balancer has work in progress on any mongos

sh.disableBalancing(coll) disable balancing on one collection

sh.enableBalancing(coll) re-enable balancing on one collection

sh.addShardTag(shard,tag) adds the tag to the shard

sh.removeShardTag(shard,tag) removes the tag from the shard

sh.addTagRange(fullName,min,max,tag) tags the specified range of the given collection

sh.removeTagRange(fullName,min,max,tag) removes the tagged range of the given collection

sh.status() prints a general overview of the cluster

mongos> sh.addShard("192.168.0.202") #add a shard; the default port 27017 is used when none is given

{ "shardAdded" : "shard0000", "ok" : 1 }

mongos> sh.addShard("192.168.0.203")

{ "shardAdded" : "shard0001", "ok" : 1 }

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("58bae7f3855893f71318d269")

}

shards:

{ "_id" : "shard0000", "host" : "192.168.0.202:27017" }

{ "_id" : "shard0001", "host" : "192.168.0.203:27017" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

mongos> sh.enableSharding("testdb") #enable sharding for the testdb database

{ "ok" : 1 }

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("58bae7f3855893f71318d269")

}

shards:

{ "_id" : "shard0000", "host" : "192.168.0.202:27017" }

{ "_id" : "shard0001", "host" : "192.168.0.203:27017" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "testdb", "primary" : "shard0000", "partitioned" : true }

mongos> sh.shardCollection("testdb.students",{"age":1}) #shard testdb.students on the range shard key {age: 1}

{ "collectionsharded" : "testdb.students", "ok" : 1 }

mongos> sh.status()

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("58bae7f3855893f71318d269")

}

shards:

{ "_id" : "shard0000", "host" : "192.168.0.202:27017" }

{ "_id" : "shard0001", "host" : "192.168.0.203:27017" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "testdb", "primary" : "shard0000", "partitioned" : true }

testdb.students

shard key: { "age" : 1 }

unique: false

balancing: true

chunks:

shard0000 1

{ "age" : { "$minKey" : 1 } } -->> { "age" : { "$maxKey" : 1 } } on : shard0000 Timestamp(1, 0)

mongos> use testdb

switched to db testdb

mongos> for (i=1;i<=100000;i++) db.students.insert({name:"student"+i,age:(i%6),school:"Num"+(i%5)+"School",address:"Beijing China"})

WriteResult({ "nInserted" : 1 })

mongos> sh.status() #after inserting data, the chunks and their distribution across the shards are visible

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("58bae7f3855893f71318d269")

}

shards:

{ "_id" : "shard0000", "host" : "192.168.0.202:27017" }

{ "_id" : "shard0001", "host" : "192.168.0.203:27017" }

active mongoses:

"3.2.8" : 1

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

3 : Success

databases:

{ "_id" : "testdb", "primary" : "shard0000", "partitioned" : true }

testdb.students

shard key: { "age" : 1 }

unique: false

balancing: true

chunks:

shard0000 3

shard0001 2

{ "age" : { "$minKey" : 1 } } -->> { "age" : 2 } on : shard0001 Timestamp(2, 0)

{ "age" : 2 } -->> { "age" : 10 } on : shard0001 Timestamp(3, 0)

{ "age" : 10 } -->> { "age" : 61 } on : shard0000 Timestamp(3, 1)

{ "age" : 61 } -->> { "age" : 119 } on : shard0000 Timestamp(2, 3)

{ "age" : 119 } -->> { "age" : { "$maxKey" : 1 } } on : shard0000 Timestamp(2, 4)

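mongos> sh.isBalancerRunning() #true only while a balancing round is in progress; false means no chunk migration is running right now

false

mongos> sh.getBalancerState() #true: the balancer is enabled, which is the default; since migrations cost I/O, it can be turned off with sh.setBalancerState(false)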

true
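The status above shows 3 chunks on shard0000 and 2 on shard0001, with no migration running. That is expected under the balancer's migration thresholds, which the MongoDB 3.2 documentation gives as a difference of 2 chunks for fewer than 20 chunks, 4 for 20-79 chunks and 8 for 80 or more; once a round starts, it continues until no two shards differ by 2 or more chunks. The Python sketch below models that rule for a single collection. It is a simplification (real rounds can fail or be constrained by tags), and the function names are hypothetical.

```python
from typing import Dict, List, Tuple


def migration_threshold(total_chunks: int) -> int:
    """Chunk-count difference that starts a balancing round (3.2-era documented values)."""
    if total_chunks < 20:
        return 2
    if total_chunks < 80:
        return 4
    return 8


def plan_migrations(chunk_counts: Dict[str, int]) -> List[Tuple[str, str]]:
    """Return (from_shard, to_shard) moves, one chunk each, for one collection."""
    counts = dict(chunk_counts)
    moves: List[Tuple[str, str]] = []

    def spread() -> int:
        return max(counts.values()) - min(counts.values())

    if spread() < migration_threshold(sum(counts.values())):
        return moves  # below the threshold: the balancer leaves the collection alone
    # Once started, keep moving chunks until no two shards differ by 2 or more.
    while spread() >= 2:
        src = max(counts, key=counts.get)
        dst = min(counts, key=counts.get)
        counts[src] -= 1
        counts[dst] += 1
        moves.append((src, dst))
    return moves
```

For the cluster above, plan_migrations({"shard0000": 3, "shard0001": 2}) returns no moves, which matches the balancer being enabled but idle.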