<Service>KVM

Contents:

KVM

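Introduction to KVM

KVM is a kernel-level virtualization technology. Starting with RHEL 6.0 (released around the end of 2010) it became the virtualization technology Red Hat officially supports; RHEL 5.0 shipped Xen.

Hardware, software and platform virtualization

KVM can be regarded as hardware virtualization, OpenVZ as software virtualization, and Docker containers as platform virtualization.

Full virtualization and paravirtualization

Paravirtualization usually performs better than full virtualization: a paravirtualized guest knows it is running on a hypervisor and calls it directly, instead of having its privileged operations trapped and emulated.

Virtualization technology

Virtualization is mainly used to raise hardware utilization and to make workloads easier to migrate; in an IDC data center, consolidating onto high-spec machines also saves a lot of rack space.

A system runs in two modes, kernel mode and user mode, and you can see how CPU time is split between them with a command such as mpstat: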

[root@why ~]# mpstat 1

Linux 2.6.32-573.22.1.el6.x86_64 (why) 02/25/2017 _x86_64_ (1 CPU)

11:21:12 PM CPU %usr %nice %sys %iowait %irq %soft %steal %guest %idle

11:21:13 PM all 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 100.00

11:21:14 PM all 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 100.00

11:21:15 PM all 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 100.00

11:21:16 PM all 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 100.00

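%usr is the share of CPU time spent in user mode and %sys the share spent in kernel mode. A process enters kernel mode whenever it makes a system call, and every system call costs a user/kernel mode switch, so a high rate of such switches (and of context switches in general) hurts performance.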
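mpstat derives these percentages from the cumulative CPU time counters in /proc/stat. The following is a minimal Python 3 sketch, assuming the proc(5) field order (user, nice, system, idle, iowait, irq, softirq, steal); it only approximates %usr and %sys from two samples and does not reproduce mpstat exactly (mpstat, for example, reports nice and guest time separately). The function names are illustrative.

```python
# Sketch: approximate mpstat's %usr / %sys / %idle from two samples of the
# aggregate "cpu" line in /proc/stat. Guest time is already included in
# "user", so only the first eight counters are summed.
FIELDS = ("user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal")


def parse_cpu_line(line):
    """Turn 'cpu  <user> <nice> <system> ...' into a dict of tick counters."""
    parts = line.split()
    if not parts or parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    values = [int(v) for v in parts[1:1 + len(FIELDS)]]
    values += [0] * (len(FIELDS) - len(values))  # pad columns missing on old kernels
    return dict(zip(FIELDS, values))


def mode_percentages(before, after):
    """Percent of the elapsed CPU time spent in user mode, kernel mode and idle."""
    delta = {k: after[k] - before[k] for k in FIELDS}
    total = sum(delta.values())
    if total == 0:
        return {"usr": 0.0, "sys": 0.0, "idle": 0.0}
    return {
        "usr": 100.0 * delta["user"] / total,
        "sys": 100.0 * delta["system"] / total,
        "idle": 100.0 * delta["idle"] / total,
    }


def read_cpu_line(path="/proc/stat"):
    """The first line of /proc/stat is the aggregate 'cpu' line."""
    with open(path) as f:
        return f.readline()
```

In practice you would call read_cpu_line() twice, about a second apart, and pass both parsed samples to mode_percentages(); a fully idle interval gives values close to the idle = 100.00 rows above.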

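First, a few concepts:

- KVM is only a kernel module that provides CPU and memory virtualization; everything else, such as networking, is provided by QEMU. A VM created with KVM is really just a qemu process.

- KVM consists of a device driver plus user-space components that emulate PC hardware.

- KVM requires hardware virtualization support in the CPU and only runs on CPUs that have it.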

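Enabling KVM

Prerequisites

The machine must support Intel VT-x or AMD-V to provide CPU virtualization; Intel VT-x with EPT or AMD-V with RVI adds memory virtualization on top of that. On servers these features are usually enabled in the BIOS. Next, install the EPEL repository: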

[root@why ~]# rpm -ivh http://mirrors.ustc.edu.cn/fedora/epel/6/x86_64/epel-release-6-8.noarch.rpm

Retrieving http://mirrors.ustc.edu.cn/fedora/epel/6/x86_64/epel-release-6-8.noarch.rpm

warning: /var/tmp/rpm-tmp.2ffY9i: Header V3 RSA/SHA256 Signature, key ID 0608b895: NOKEY

Preparing... ########################################### [100%]

1:epel-release ########################################### [100%]

Check whether the CPU supports virtualization (vmx is the Intel VT-x flag, svm the AMD-V flag):

[root@why ~]# egrep 'vmx|svm' /proc/cpuinfo --color

flags : fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts mmx fxsr sse sse2 ss syscall nx pdpe1gb rdtscp lm constant_tsc up arch_perfmon pebs bts xtopology tsc_reliable nonstop_tsc aperfmperf unfair_spinlock pni pclmulqdq vmx ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt aes xsave avx f16c rdrand hypervisor lahf_lm ida arat epb xsaveopt pln pts dts tpr_shadow vnmi ept vpid fsgsbase smep
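The same check can be scripted. A minimal Python 3 sketch, assuming the usual "flags : ..." lines of /proc/cpuinfo; cpu_virt_features and kvm_capable are hypothetical helper names. ept (Intel) and npt (AMD) are the flags for the second-level address translation the text calls EPT/RVI. Newer kernels may additionally list some VMX sub-features on a separate "vmx flags" line, which this sketch ignores.

```python
# Sketch: report hardware virtualization features advertised in /proc/cpuinfo.
def cpu_virt_features(cpuinfo_text):
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":  # only the plain "flags" line
            flags.update(value.split())
    return {
        "intel_vt_x": "vmx" in flags,  # Intel VT-x
        "amd_v": "svm" in flags,       # AMD-V
        "ept": "ept" in flags,         # Intel Extended Page Tables
        "npt": "npt" in flags,         # AMD Nested Page Tables (RVI)
    }


def kvm_capable(cpuinfo_text):
    """KVM needs either VT-x or AMD-V."""
    feats = cpu_virt_features(cpuinfo_text)
    return feats["intel_vt_x"] or feats["amd_v"]
```

Usage: read /proc/cpuinfo into a string and pass it to both functions; for the host above, vmx and ept are present, so kvm_capable() would be true.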

Check whether the KVM modules are loaded; nothing is listed until qemu-kvm is installed:

[root@why ~]# lsmod | grep kvm

[root@why ~]# yum install -y qemu-kvm

[root@why ~]# lsmod | grep kvm

kvm_intel 54285 0

kvm 333172 1 kvm_intel

Install the KVM management tools

[root@why ~]# yum install virt-manager python-virtinst qemu-kvm-tools libvirt libvirt-python

Creating a KVM virtual machine

Create the disk image

[root@why ~]# qemu-img --help

create [-f fmt] [-o options] filename [size]

[root@why ~]# qemu-img create -f raw /data/kvm.raw 20G # raw format, 20G in size

Formatting '/data/kvm.raw', fmt=raw size=21474836480

[root@why ~]# ll /data/kvm.raw

-rw-r--r--. 1 root root 21474836480 Feb 26 10:23 /data/kvm.raw

Inspect the image

[root@why ~]# qemu-img info /data/kvm.raw

image: /data/kvm.raw

file format: raw

virtual size: 20G (21474836480 bytes)

disk size: 0
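qemu-img treats size suffixes as binary multiples, which is why 20G appears as 21474836480 bytes (20 × 1024³). A small Python 3 sketch of that arithmetic, plus a parser for the "virtual size" line of qemu-img info; both helpers are hypothetical and only handle the K/M/G/T suffixes.

```python
# Sketch: qemu-img style size arithmetic (binary units) and parsing of
# the "virtual size: 20G (21474836480 bytes)" line printed by qemu-img info.
import re

_UNITS = {"": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4}


def size_to_bytes(size):
    """Convert a size such as '20G' or '512M' to bytes using binary units."""
    m = re.fullmatch(r"(\d+)([KMGT]?)", size.strip().upper())
    if not m:
        raise ValueError("unrecognised size: %r" % size)
    return int(m.group(1)) * _UNITS[m.group(2)]


def virtual_size_from_info(info_text):
    """Extract the exact byte count from qemu-img info's text output."""
    m = re.search(r"virtual size:.*\((\d+) bytes\)", info_text)
    if not m:
        raise ValueError("no 'virtual size' line found")
    return int(m.group(1))
```

For the image above, virtual_size_from_info() on the info output and size_to_bytes("20G") both give 21474836480.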

Start qemu-kvm by hand (rpm -ql qemu-kvm shows where the binary is installed):

[root@why ~]# /usr/libexec/qemu-kvm

VNC server running on `::1:5900'

From another terminal you can see the process and its listening port:

[root@why ~]# netstat -nlptu | grep 5900

tcp 0 0 ::1:5900 :::* LISTEN 1875/qemu-kvm

[root@why ~]# ps -ef | grep kvm

root 758 2 0 09:51 ? 00:00:00 [kvm-irqfd-clean]

root 1875 1533 47 10:27 pts/0 00:00:37 /usr/libexec/qemu-kvm

root 1877 2 0 10:27 ? 00:00:00 [kvm-pit-wq]

root 1926 1893 0 10:28 pts/1 00:00:00 grep kvm

Copy the installation DVD to an ISO image

[root@why ~]# dd if=/dev/cdrom of=/iso/redhat64.iso

7526400+0 records in

7526400+0 records out

3853516800 bytes (3.9 GB) copied, 339.841 s, 11.3 MB/s

Start the libvirtd service

[root@why ~]# /etc/init.d/libvirtd start

Starting libvirtd daemon: 2017-02-26 02:53:29.534+0000: 27087: info : libvirt version: 0.10.2, package: 29.el6 (Red Hat, Inc. <http://bugzilla.redhat.com/bugzilla>, 2013-10-09-06:25:35, x86-026.build.eng.bos.redhat.com)

2017-02-26 02:53:29.534+0000: 27087: warning : virGetHostname:2294 : getaddrinfo failed for 'why': Name or service not known

[ OK ]

Create the virtual machine

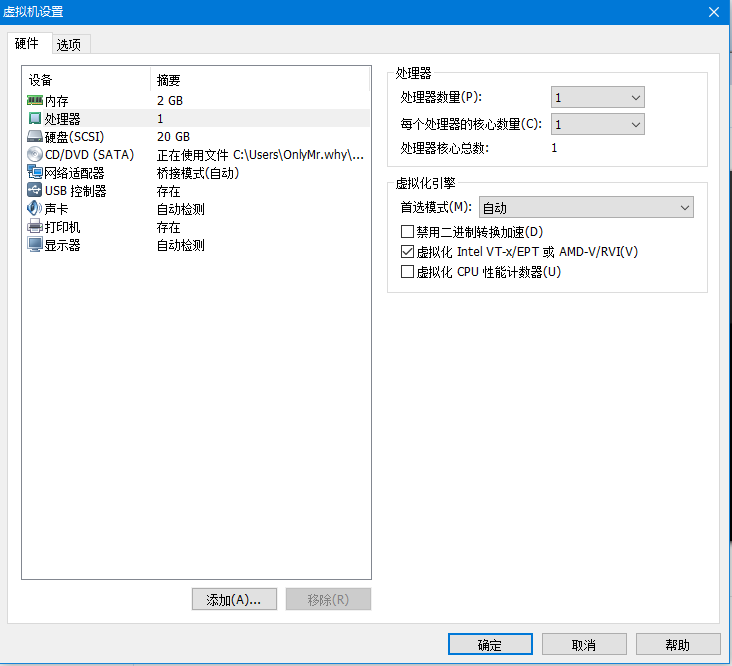

[root@why ~]# virt-install --virt-type kvm --name kvm-demo --ram 512 --disk path=/data/kvm.raw --cdrom=/iso/redhat64.iso --network network=default --graphics vnc,listen=0.0.0.0 --noautoconsole --os-type=linux --os-variant=rhel6

Starting install...

Creating domain... | 0 B 00:00

Domain installation still in progress. You can reconnect to

the console to complete the installation process.

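- --virt-type kvm # hypervisor type

- --name kvm-demo # name of the VM

- --ram 512 # memory, in MB

- --disk path=/data/kvm.raw # path of the disk image

- --cdrom=/iso/redhat64.iso # installation ISO image

- --network network=default # attach to the libvirt network named default

- --graphics vnc,listen=0.0.0.0 # VNC console listening on all addresses

- --noautoconsole # do not connect to the guest console automatically

- --os-type=linux --os-variant=rhel6 # guest OS type and version, used to choose suitable defaults

More options are listed by virt-install --help.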
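When several guests are created with the same options, it can help to build the virt-install command programmatically. A minimal Python 3 sketch; virt_install_args and run are hypothetical helper names, and only options already used in the command above are included. run() only prints the command unless dry_run=False is passed.

```python
# Sketch: assemble the virt-install command used above as an argument list.
import shlex
import subprocess


def virt_install_args(name, ram_mb, disk_path, iso_path,
                      network="default", os_variant="rhel6"):
    return [
        "virt-install",
        "--virt-type", "kvm",
        "--name", name,
        "--ram", str(ram_mb),               # MB
        "--disk", "path=%s" % disk_path,
        "--cdrom=%s" % iso_path,
        "--network", "network=%s" % network,
        "--graphics", "vnc,listen=0.0.0.0",
        "--noautoconsole",
        "--os-type=linux",
        "--os-variant=%s" % os_variant,
    ]


def run(args, dry_run=True):
    """Print the shell-quoted command, or execute it (raising on failure)."""
    if dry_run:
        print(" ".join(shlex.quote(a) for a in args))
        return None
    return subprocess.run(args, check=True)
```

Passing the list to subprocess avoids shell quoting problems with paths; the dry-run mode lets you compare the generated command with the one shown above before running it.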

To list the CPU models qemu-kvm supports, run /usr/libexec/qemu-kvm -cpu ?

[root@why ~]# ifconfig

eth0 Link encap:Ethernet HWaddr 00:0C:29:0F:27:B5

inet addr:192.168.0.206 Bcast:192.168.0.255 Mask:255.255.255.0

inet6 addr: fe80::20c:29ff:fe0f:27b5/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:7290 errors:0 dropped:0 overruns:0 frame:0

TX packets:2836 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:742028 (724.6 KiB) TX bytes:507635 (495.7 KiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 b) TX bytes:0 (0.0 b)

virbr0 Link encap:Ethernet HWaddr 52:54:00:58:85:A7

inet addr:192.168.122.1 Bcast:192.168.122.255 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:3 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:216 (216.0 b) TX bytes:0 (0.0 b)

vnet0 Link encap:Ethernet HWaddr FE:54:00:49:8A:4B

inet6 addr: fe80::fc54:ff:fe49:8a4b/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:3 errors:0 dropped:0 overruns:0 frame:0

TX packets:375 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:500

RX bytes:258 (258.0 b) TX bytes:19656 (19.1 KiB)

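After the VM starts, two new interfaces, virbr0 and vnet0, have appeared. The configuration of the network behind them lives under /etc/libvirt: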

[root@why ~]# cd /etc/libvirt

[root@why libvirt]# tree .

.

├── libvirt.conf

├── libvirtd.conf

├── lxc.conf

├── nwfilter

│ ├── allow-arp.xml

│ ├── allow-dhcp-server.xml

│ ├── allow-dhcp.xml

│ ├── allow-incoming-ipv4.xml

│ ├── allow-ipv4.xml

│ ├── clean-traffic.xml

│ ├── no-arp-ip-spoofing.xml

│ ├── no-arp-mac-spoofing.xml

│ ├── no-arp-spoofing.xml

│ ├── no-ip-multicast.xml

│ ├── no-ip-spoofing.xml

│ ├── no-mac-broadcast.xml

│ ├── no-mac-spoofing.xml

│ ├── no-other-l2-traffic.xml

│ ├── no-other-rarp-traffic.xml

│ ├── qemu-announce-self-rarp.xml

│ └── qemu-announce-self.xml

├── qemu

│ ├── kvm-demo.xml

│ └── networks

│ ├── autostart

│ │ └── default.xml -> ../default.xml

│ └── default.xml

└── qemu.conf

4 directories, 24 files

[root@why libvirt]# cat qemu/networks/default.xml

<network>

<name>default</name>

<uuid>9b4a9960-a888-4a28-b3b5-28a2be4136d1</uuid>

<bridge name="virbr0" />

<mac address='52:54:00:58:85:A7'/>

<forward/>

<ip address="192.168.122.1" netmask="255.255.255.0">

<dhcp>

<range start="192.168.122.2" end="192.168.122.254" />

</dhcp>

</ip>

</network>
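The network definition can also be read programmatically. A minimal Python 3 sketch using the standard library's ElementTree and ipaddress modules; summarize_network is a hypothetical helper and NETWORK_XML is a trimmed copy of default.xml above. A <forward/> element without a mode attribute means libvirt NATs the guests behind virbr0.

```python
# Sketch: summarize a libvirt network XML and size its DHCP range.
import ipaddress
import xml.etree.ElementTree as ET

NETWORK_XML = """<network>
  <name>default</name>
  <bridge name="virbr0" />
  <forward/>
  <ip address="192.168.122.1" netmask="255.255.255.0">
    <dhcp>
      <range start="192.168.122.2" end="192.168.122.254" />
    </dhcp>
  </ip>
</network>"""


def summarize_network(xml_text):
    root = ET.fromstring(xml_text)
    ip = root.find("ip")
    rng = ip.find("dhcp/range")
    start = ipaddress.IPv4Address(rng.get("start"))
    end = ipaddress.IPv4Address(rng.get("end"))
    # The host address plus netmask identifies the guest subnet.
    subnet = ipaddress.IPv4Network(
        "%s/%s" % (ip.get("address"), ip.get("netmask")), strict=False)
    return {
        "name": root.findtext("name"),
        "bridge": root.find("bridge").get("name"),
        "subnet": str(subnet),
        "dhcp_leases": int(end) - int(start) + 1,  # inclusive range
        "range_in_subnet": start in subnet and end in subnet,
    }
```

For the default network this gives the bridge virbr0, the subnet 192.168.122.0/24 and 253 assignable DHCP addresses.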

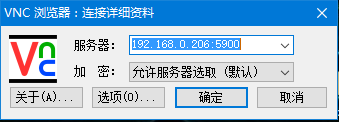

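Connecting to the KVM guest over VNC

Download a VNC client

I downloaded mine from CSDN: http://download.csdn.net/download/tutu_1127/5043242

Connecting to the guest

Point the VNC client directly at 192.168.0.206:5900. Note that this only works once the host firewall is turned off (or port 5900 is opened).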

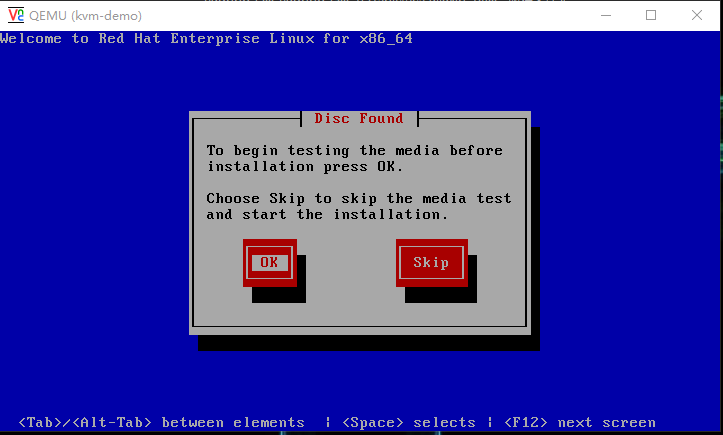

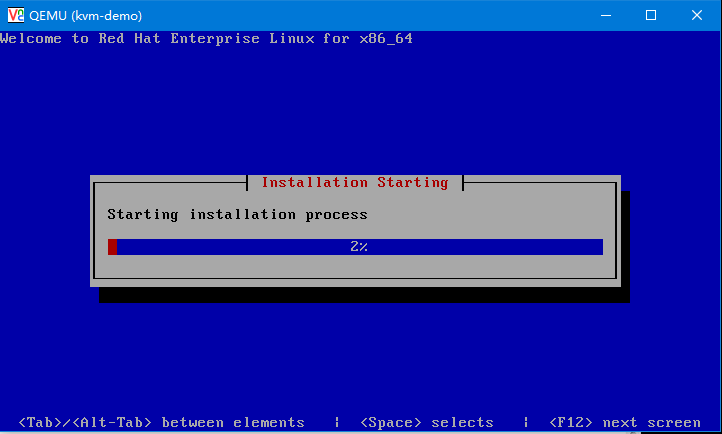

At the media check prompt, choose Skip.

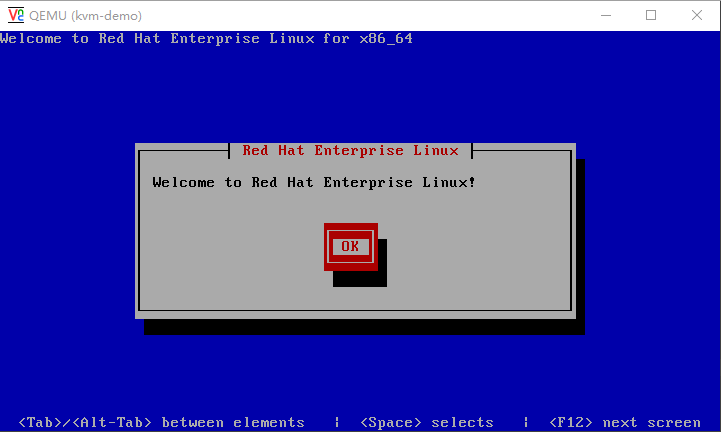

The welcome screen appears.

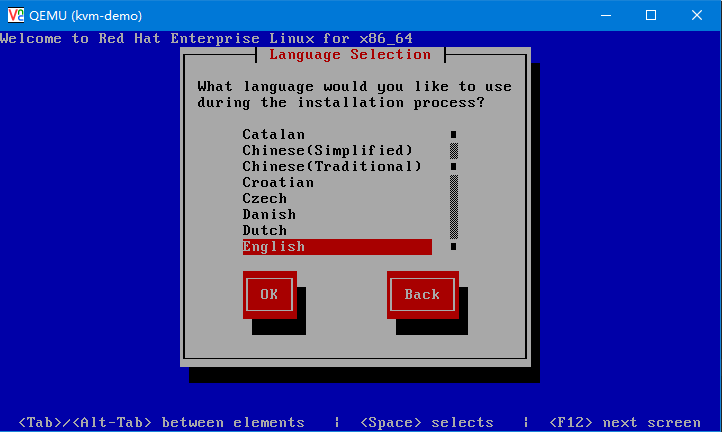

Choose the language.

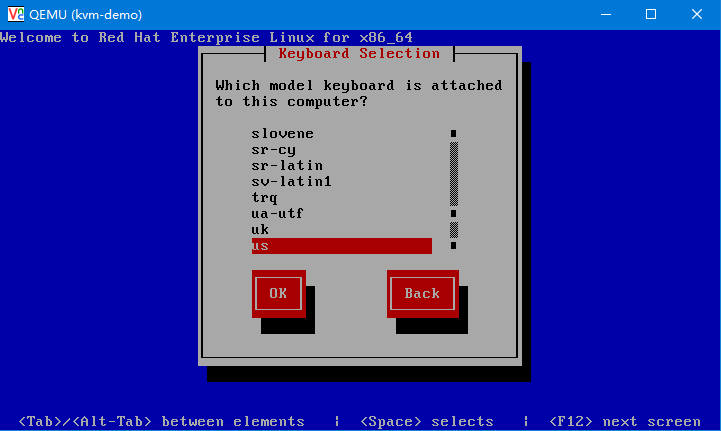

Choose the keyboard layout.

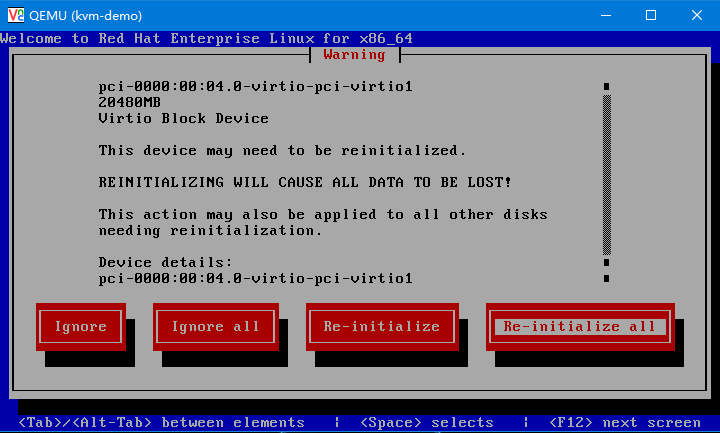

When asked to initialize the disk, choose Re-initialize all.

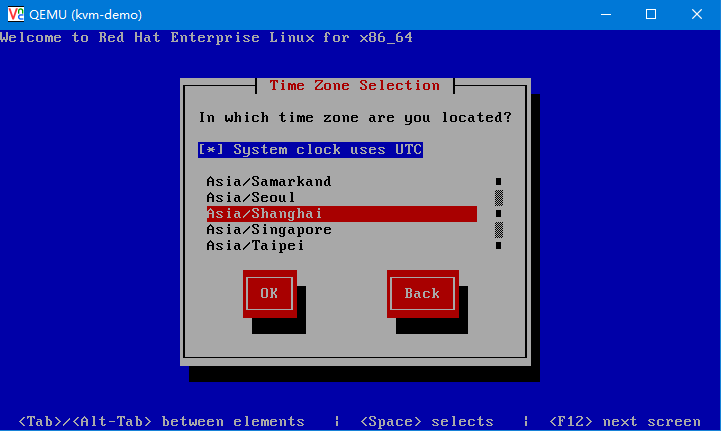

Choose the time zone: Shanghai.

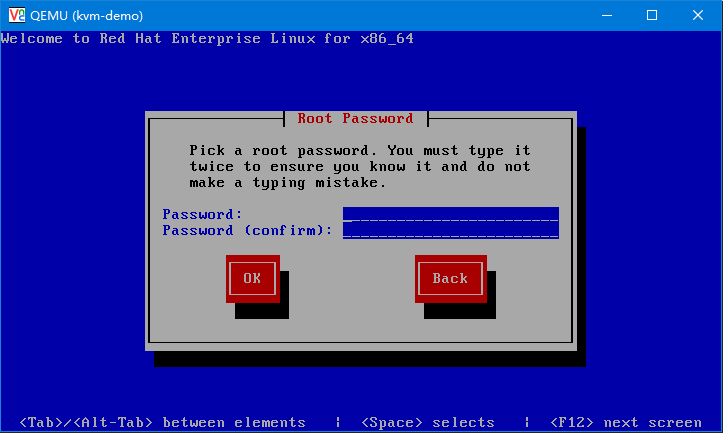

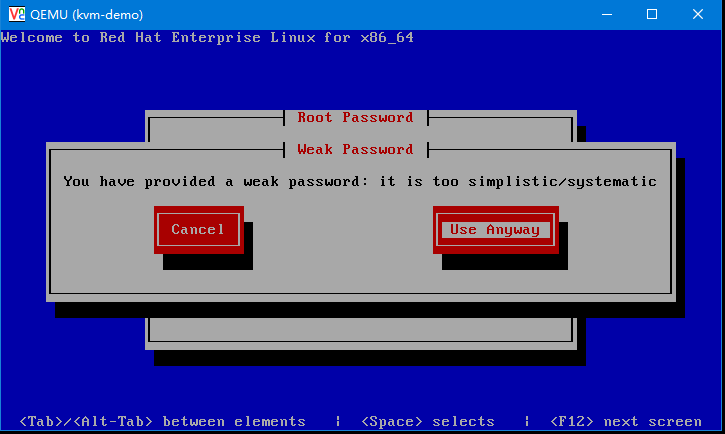

Enter the root password.

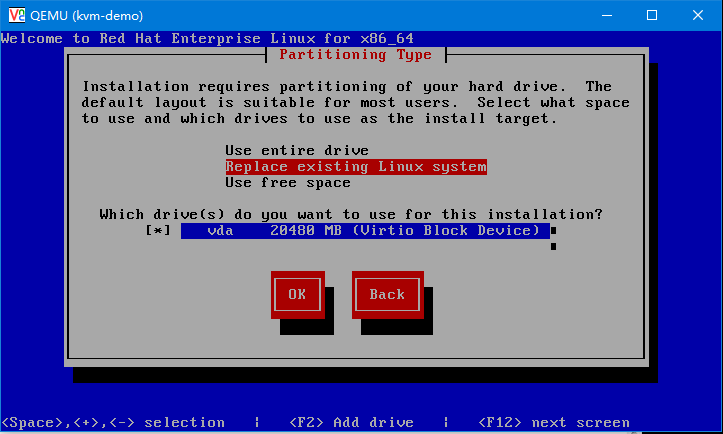

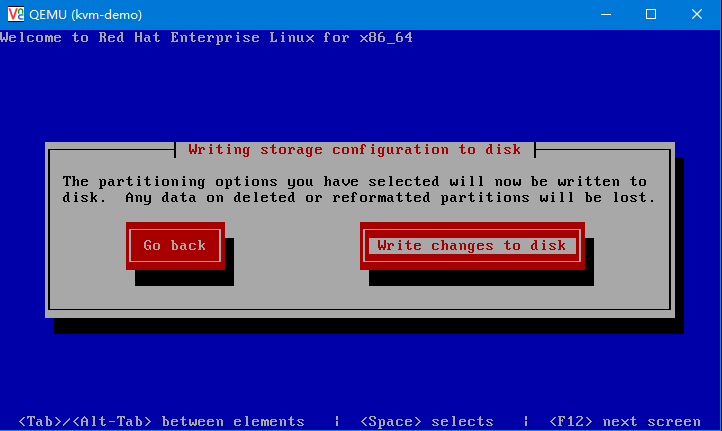

Choose the partitioning layout.

Install the base packages.

[root@why libvirt]# virsh list

Id Name State

----------------------------------------------------

1 kvm-demo running

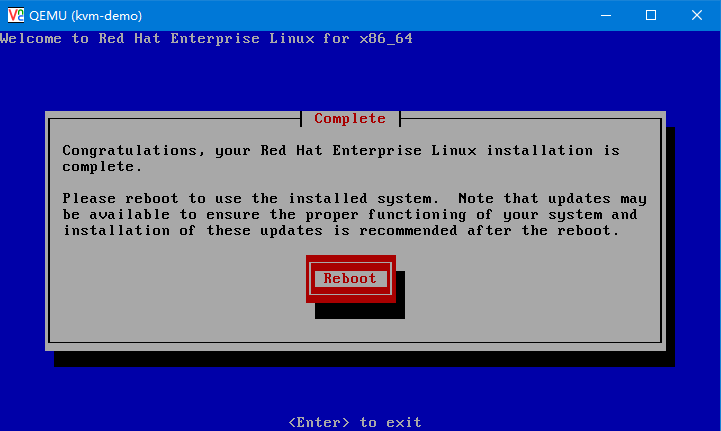

Installation complete.

KVM configuration

[root@why libvirt]# cat qemu/kvm-demo.xml

<!--

WARNING: THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE

OVERWRITTEN AND LOST. Changes to this xml configuration should be made using:

virsh edit kvm-demo

or other application using the libvirt API.

-->

<domain type='kvm'> <!-- domain type: kvm -->

<name>kvm-demo</name> <!-- name of the domain (VM): kvm-demo -->

<uuid>e4430cc0-999b-f3dc-12da-79b18bc422c3</uuid> <!-- UUID, unique on the host -->

<memory unit='KiB'>524288</memory> <!-- memory: 524288 KiB = 512 MiB -->

<currentMemory unit='KiB'>524288</currentMemory>

<vcpu placement='static'>1</vcpu> <!-- number of virtual CPUs -->

<os>

<type arch='x86_64' machine='rhel6.5.0'>hvm</type> <!-- architecture x86_64, machine type rhel6.5.0 -->

<boot dev='hd'/>

</os>

<features>

<acpi/>

<apic/>

<pae/>

</features>

<clock offset='utc'/>

<on_poweroff>destroy</on_poweroff>

<on_reboot>restart</on_reboot>

<on_crash>restart</on_crash>

<devices>

<emulator>/usr/libexec/qemu-kvm</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='raw' cache='none'/>

<source file='/data/kvm.raw'/> <!-- disk image -->

<target dev='vda' bus='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>

</disk>

<disk type='block' device='cdrom'> <!-- CD-ROM drive -->

<driver name='qemu' type='raw'/>

<target dev='hdc' bus='ide'/>

<readonly/>

<address type='drive' controller='0' bus='1' target='0' unit='0'/>

</disk>

<controller type='usb' index='0'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/>

</controller>

<controller type='ide' index='0'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>

</controller>

<interface type='network'>

<mac address='52:54:00:49:8a:4b'/>

<source network='default'/>

<model type='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</interface>

<serial type='pty'>

<target port='0'/>

</serial>

<console type='pty'>

<target type='serial' port='0'/>

</console>

<input type='tablet' bus='usb'/>

<input type='mouse' bus='ps2'/>

<graphics type='vnc' port='-1' autoport='yes' listen='0.0.0.0'>

<listen type='address' address='0.0.0.0'/>

</graphics>

<video>

<model type='cirrus' vram='9216' heads='1'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>

</video>

<memballoon model='virtio'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>

</memballoon>

</devices>

</domain>

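For more details see http://libvirt.org/formatsnapshot.html#SnapshotAttributes (the domain XML elements themselves are documented at http://libvirt.org/formatdomain.html).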
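To pull the key settings out of a domain definition without reading the whole file, a small Python 3 sketch with ElementTree can help. summarize_domain is a hypothetical helper and DOMAIN_XML is a trimmed copy of kvm-demo.xml; in practice you would feed it the output of virsh dumpxml kvm-demo. It assumes libvirt's convention that <memory> defaults to KiB when no unit is given.

```python
# Sketch: summarize name, memory, vCPUs and disks from a libvirt domain XML.
import xml.etree.ElementTree as ET

DOMAIN_XML = """<domain type='kvm'>
  <name>kvm-demo</name>
  <memory unit='KiB'>524288</memory>
  <vcpu placement='static'>1</vcpu>
  <devices>
    <disk type='file' device='disk'>
      <driver name='qemu' type='raw' cache='none'/>
      <source file='/data/kvm.raw'/>
      <target dev='vda' bus='virtio'/>
    </disk>
    <disk type='block' device='cdrom'>
      <driver name='qemu' type='raw'/>
      <target dev='hdc' bus='ide'/>
    </disk>
  </devices>
</domain>"""

_TO_KIB = {"KiB": 1, "MiB": 1024, "GiB": 1024 ** 2}


def summarize_domain(xml_text):
    root = ET.fromstring(xml_text)
    mem = root.find("memory")
    mem_kib = int(mem.text) * _TO_KIB[mem.get("unit", "KiB")]
    disks = []
    for disk in root.findall("devices/disk"):
        source = disk.find("source")
        target = disk.find("target")
        disks.append({
            "device": disk.get("device"),
            "target": target.get("dev"),
            "bus": target.get("bus"),
            "format": disk.find("driver").get("type"),
            # The empty CD-ROM drive has no <source> element.
            "file": source.get("file") if source is not None else None,
        })
    return {
        "name": root.findtext("name"),
        "memory_mib": mem_kib // 1024,
        "vcpus": int(root.findtext("vcpu")),
        "disks": disks,
    }
```

For kvm-demo this reports 512 MiB of memory, one vCPU, the raw virtio disk /data/kvm.raw and an empty IDE CD-ROM.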

The guest shuts down once installation completes; start it again:

[root@why libvirt]# virsh list --all

Id Name State

----------------------------------------------------

- kvm-demo shut off

[root@why libvirt]# virsh start kvm-demo

Domain kvm-demo started

Check the guest's state; the KVM guest is simply a process:

[root@why libvirt]# netstat -nlptu | grep kvm

tcp 0 0 0.0.0.0:5900 0.0.0.0:* LISTEN 28636/qemu-kvm

[root@why libvirt]# ps -ef | grep kvm

root 758 2 0 09:51 ? 00:00:00 [kvm-irqfd-clean]

qemu 28636 1 93 12:44 ? 00:01:39 /usr/libexec/qemu-kvm -name kvm-demo -S -M rhel6.5.0 -enable-kvm -m 512 -realtime mlock=off -smp 1,sockets=1,cores=1,threads=1 -uuid e4430cc0-999b-f3dc-12da-79b18bc422c3 -nodefconfig -nodefaults -chardev socket,id=charmonitor,path=/var/lib/libvirt/qemu/kvm-demo.monitor,server,nowait -mon chardev=charmonitor,id=monitor,mode=control -rtc base=utc -no-shutdown -device piix3-usb-uhci,id=usb,bus=pci.0,addr=0x1.0x2 -drive file=/data/kvm.raw,if=none,id=drive-virtio-disk0,format=raw,cache=none -device virtio-blk-pci,scsi=off,bus=pci.0,addr=0x4,drive=drive-virtio-disk0,id=virtio-disk0,bootindex=1 -drive if=none,media=cdrom,id=drive-ide0-1-0,readonly=on,format=raw -device ide-drive,bus=ide.1,unit=0,drive=drive-ide0-1-0,id=ide0-1-0 -netdev tap,fd=22,id=hostnet0,vhost=on,vhostfd=23 -device virtio-net-pci,netdev=hostnet0,id=net0,mac=52:54:00:49:8a:4b,bus=pci.0,addr=0x3 -chardev pty,id=charserial0 -device isa-serial,chardev=charserial0,id=serial0 -device usb-tablet,id=input0 -vnc 0.0.0.0:0 -vga cirrus -device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x5

root 28638 2 0 12:44 ? 00:00:00 [kvm-pit-wq]

root 28673 28481 0 12:46 pts/5 00:00:00 grep kvm

The guest listens on port 5900 as soon as it starts, and a qemu-kvm process is launched whose command-line options are generated from the XML file.

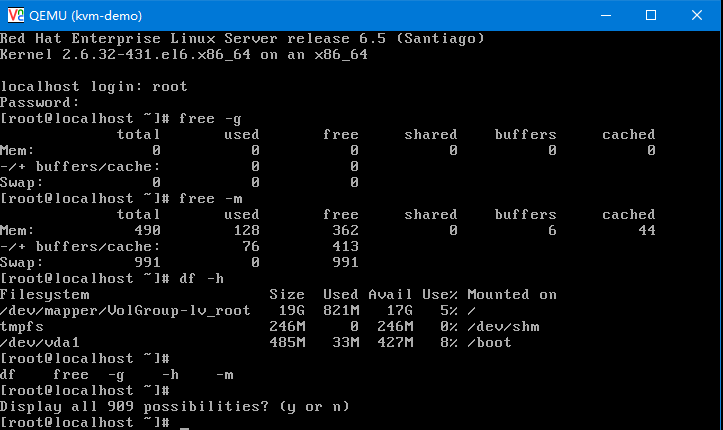

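If you cannot type in the VNC window, switch your input method to English. The memory and disk seen inside the guest match the definition.

To stop the guest you have to use virsh destroy; virsh shutdown and virsh reboot have no effect, because they send an ACPI event that the guest's acpid must handle, and the minimal install does not include acpid. Install acpid in the guest and enable it at boot (chkconfig acpid on).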

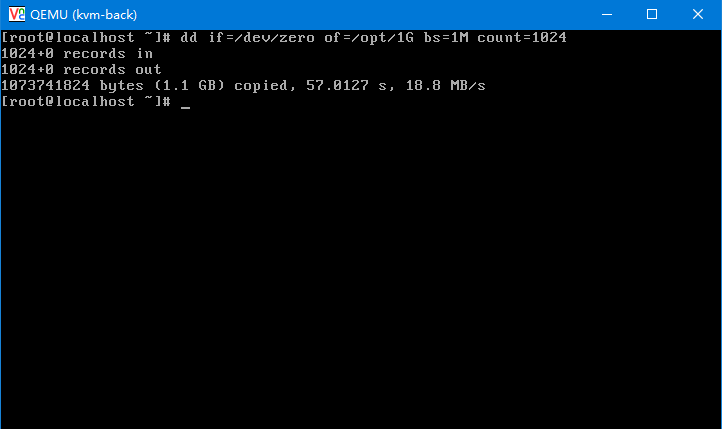

Cloning a virtual machine

[root@why ~]# virsh dumpxml kvm-demo > /etc/libvirt/qemu/kvm-backup.xml

[root@why ~]# cp /data/kvm.raw /data/kvm_back.raw

[root@why ~]# vi /etc/libvirt/qemu/kvm-backup.xml

In the copy, change name, uuid, the MAC address and the disk's source file:

[root@why ~]# virsh define /etc/libvirt/qemu/kvm-backup.xml

Domain kvm-back defined from /etc/libvirt/qemu/kvm-backup.xml

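Because the disk image was copied as-is, inside the guest you also have to update the NIC settings, such as HWADDR and UUID in /etc/sysconfig/network-scripts/ifcfg-eth0 and the udev persistent network rules (/etc/udev/rules.d/70-persistent-net.rules), exactly as when cloning any VM.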
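The XML edits for the clone can be scripted. A minimal Python 3 sketch; clone_domain_xml and random_kvm_mac are hypothetical helpers. It replaces the name, generates a fresh UUID, gives every interface a random MAC in the 52:54:00 prefix that libvirt uses for KVM guests, and points the first file-backed disk at the copied image. It does not check for MAC collisions with existing guests, and the changes inside the guest described above are still needed.

```python
# Sketch: apply the clone edits (name, uuid, MAC, disk source) to domain XML.
import random
import uuid
import xml.etree.ElementTree as ET


def random_kvm_mac():
    """Random MAC address in the 52:54:00 prefix used for KVM guests."""
    return "52:54:00:%02x:%02x:%02x" % tuple(random.randint(0, 255) for _ in range(3))


def clone_domain_xml(xml_text, new_name, new_disk):
    root = ET.fromstring(xml_text)
    root.find("name").text = new_name
    uuid_el = root.find("uuid")
    if uuid_el is not None:
        uuid_el.text = str(uuid.uuid4())
    for mac in root.findall("devices/interface/mac"):
        mac.set("address", random_kvm_mac())
    # Point the first file-backed hard disk at the copied image.
    for disk in root.findall("devices/disk"):
        source = disk.find("source")
        if disk.get("device") == "disk" and source is not None and source.get("file"):
            source.set("file", new_disk)
            break
    return ET.tostring(root, encoding="unicode")
```

Write the returned string to a file such as /etc/libvirt/qemu/kvm-backup.xml and register it with virsh define, as in the transcript above.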

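Changing a VM's memory

Changing the memory with virsh setmem does not take effect and can even crash the guest (setmem only adjusts the balloon within the maximum set by <memory>). Instead, shut the guest down and edit the memory values in its XML with virsh edit kvm-demo. To grow a disk image, use qemu-img resize new.raw +1G; the partitions and file system inside the guest then have to be grown separately.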

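VM disk formats

raw is the format most commonly used with KVM. Advantages of raw:

- Simple addressing and high access efficiency

- Can be converted to other formats

- Easy to mount on the host

Disadvantages of raw:

- No support for compression, snapshots, encryption, copy-on-write and similar features

- The file has its full specified size from the moment it is created. It is normally sparse (see disk size: 0 above), but any copy or transfer that does not preserve sparseness takes up the full size on the host

QCOW2 (QEMU Copy-On-Write version 2) is an image format supported by QEMU and an improvement on qcow. Its basic unit is the cluster, which consists of several 512-byte sectors (64 KiB per cluster by default). Locating a guest offset takes two table lookups, first an L1 table and then an L2 table, to find the cluster inside the image file; the offset within the cluster then gives the data. This resembles two-level page-table translation for main memory. QCOW2 supports encryption, compression, snapshots and copy-on-write.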
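The two-level lookup can be made concrete with a little bit arithmetic. A Python 3 sketch, assuming the qcow2 layout where L1 and L2 entries are 8 bytes each and an L2 table occupies exactly one cluster; the function names are hypothetical. With the default 64 KiB clusters an L2 table holds 8192 entries, so each L1 entry covers 64 KiB × 8192 = 512 MiB, and a 20G image needs 40 L1 entries. The sketch only computes indices; real L2 entries also carry flag bits (copied, compressed) that a reader must mask off.

```python
# Sketch: split a guest offset into qcow2 L1 index, L2 index and cluster offset.
def qcow2_lookup_indices(guest_offset, cluster_bits=16):
    """Return (l1_index, l2_index, offset_in_cluster) for a guest byte offset."""
    cluster_size = 1 << cluster_bits
    l2_bits = cluster_bits - 3          # log2(8-byte entries per cluster)
    l2_entries = 1 << l2_bits
    offset_in_cluster = guest_offset & (cluster_size - 1)
    l2_index = (guest_offset >> cluster_bits) & (l2_entries - 1)
    l1_index = guest_offset >> (cluster_bits + l2_bits)
    return l1_index, l2_index, offset_in_cluster


def l1_entries_needed(virtual_size, cluster_bits=16):
    """Number of L1 entries required to map virtual_size bytes (ceiling)."""
    bytes_per_l1_entry = 1 << (cluster_bits + cluster_bits - 3)
    return (virtual_size + bytes_per_l1_entry - 1) // bytes_per_l1_entry
```

For example, an offset of 512 MiB + 64 KiB + 100 bytes lands in L1 entry 1, L2 entry 1, at byte 100 of that cluster.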

Converting disk formats

[root@why data]# qemu-img convert -c -f raw -O qcow2 kvm_back.raw kvm_back.qcow2

[root@why data]# ll -h

total 4.0G

-rw-r--r--. 1 root root 584M Feb 26 17:13 kvm_back.qcow2

-rw-r--r--. 1 root root 20G Feb 26 17:03 kvm_back.raw

-rw-r--r--. 1 root root 20G Feb 26 16:25 kvm.raw

drwx------. 2 root root 16K Feb 26 08:08 lost+found

The compressed qcow2 image is only 584M. Then change the guest's configuration to use the qcow2 disk:

[root@why data]# virsh edit kvm-back

Domain kvm-back XML configuration edited.

Lines changed:

<driver name='qemu' type='qcow2' cache='none'/>

<source file='/data/kvm_back.qcow2'/>

[root@why data]# virsh start kvm-back

Domain kvm-back started

[root@why data]# qemu-img check /data/kvm_back.qcow2

No errors were found on the image.

Image end offset: 615055360

The guest still boots normally from the qcow2 image.

Managing KVM through the Python libvirt bindings

[root@why data]# vi demo.py

[root@why data]# chmod +x demo.py

[root@why data]# ./demo.py

ID = 5

Name = kvm-back

State = 1

[root@why data]# cat demo.py

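```python
#!/usr/bin/python
# List running domains with their ID, name and state via the libvirt bindings.
import libvirt

conn = libvirt.open("qemu:///system")  # system-wide QEMU/KVM connection

for dom_id in conn.listDomainsID():  # IDs of running domains only
    dom = conn.lookupByID(dom_id)
    infos = dom.info()  # [state, maxMem, memory, nrVirtCpu, cpuTime]
    print('')
    print('ID = %d' % dom_id)
    print('Name = %s' % dom.name())
    print('State = %d' % infos[0])
    print('')

conn.close()
```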
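dom.info() returns a list [state, maxMem, memory, nrVirtCpu, cpuTime], so State = 1 above is a numeric code. A small Python 3 sketch that maps it to a name; the table mirrors libvirt's virDomainState values (VIR_DOMAIN_NOSTATE = 0 through VIR_DOMAIN_PMSUSPENDED = 7), and with the bindings installed it would be safer to compare against the libvirt.VIR_DOMAIN_* constants directly. state_name is a hypothetical helper.

```python
# Sketch: map libvirt's numeric domain state (dom.info()[0]) to a readable name.
DOMAIN_STATES = {
    0: "no state",
    1: "running",
    2: "blocked",
    3: "paused",
    4: "shutting down",
    5: "shut off",
    6: "crashed",
    7: "pmsuspended",
}


def state_name(code):
    return DOMAIN_STATES.get(code, "unknown (%d)" % code)
```

In demo.py you could print state_name(infos[0]) instead of the raw number; for kvm-back it would show running.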

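Disk snapshots

qemu-img snapshot manages internal snapshots inside a qcow2 image: -l lists them, -c creates one, -a applies (reverts to) one and -d deletes one.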

[root@why data]# virsh list --all

Id Name State

----------------------------------------------------

5 kvm-back running

- kvm-demo shut off

[root@why data]# qemu-img snapshot -l !$

qemu-img snapshot -l /data/kvm_back.qcow2

Snapshot list:

ID TAG VM SIZE DATE VM CLOCK

1 backup 0 2017-02-26 22:20:12 00:00:00.000

[root@why data]# qemu-img snapshot -a kvm-back-snapshot-1 /data/kvm_back.qcow2

[root@why data]# qemu-img snapshot -c backup /data/kvm_back.qcow2

[root@why data]# qemu-img snapshot -l /data/kvm_back.qcow2

Snapshot list:

ID TAG VM SIZE DATE VM CLOCK

1 backup 0 2017-02-26 22:20:12 00:00:00.000

[root@why data]# qemu-img snapshot -a backup /data/kvm_back.qcow2

VM snapshots

[root@why data]# virsh snapshot-list kvm-back

Name Creation Time State

------------------------------------------------------------

[root@why data]# virsh snapshot-create-as kvm-back kvm-back-snapshot-1

Domain snapshot kvm-back-snapshot-1 created

[root@why data]# virsh snapshot-list kvm-back

Name Creation Time State

------------------------------------------------------------

kvm-back-snapshot-1 2017-02-26 22:56:24 +0800 running

[root@why data]# qemu-img snapshot -l /data/kvm_back.qcow2

Snapshot list:

ID TAG VM SIZE DATE VM CLOCK

1 kvm-back-snapshot-1 158M 2017-02-26 22:56:24 00:54:12.384

To test it, create a file in the guest, take a snapshot, delete the file and then revert to the snapshot:

[root@why data]# qemu-img snapshot -a kvm-back-snapshot-1 /data/kvm_back.qcow2

Reverting a snapshot this way only takes effect when the VM is shut down.

Monitoring VMs

[root@why data]# yum install -y virt-top

[root@why data]# virt-top

virt-top 22:49:24 - x86_64 1/1CPU 2394MHz 1869MB 1.7%

2 domains, 1 active, 1 running, 0 sleeping, 0 paused, 1 inactive D:0 O:0 X:0

CPU: 0.7% Mem: 609 MB (609 MB by guests)

ID S RDRQ WRRQ RXBY TXBY %CPU %MEM TIME NAME

5 R 0 0 104 0 0.7 32.0 4:41.30 kvm-back

- (kvm-demo)

You can also install Zabbix in the guests and monitor them that way, which is the approach I recommend.

KVM tuning

Areas covered: CPU, memory, address translation, storage I/O and network I/O.

CPU tuning

Intel VT-x or AMD-V

CPU cache optimization for VMs

[root@why data]# lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 1

On-line CPU(s) list: 0

Thread(s) per core: 1

Core(s) per socket: 1

Socket(s): 1

NUMA node(s): 1

Vendor ID: GenuineIntel

CPU family: 6

Model: 69

Stepping: 1

CPU MHz: 2394.454

BogoMIPS: 4788.90

Virtualization: VT-x

Hypervisor vendor: VMware

Virtualization type: full

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 3072K

NUMA node0 CPU(s): 0

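The L1 cache is split into an instruction cache (L1i) and a data cache (L1d), while the L2 and L3 caches are unified, holding both instructions and data.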

[root@why data]# lscpu -p

# The following is the parsable format, which can be fed to other

# programs. Each different item in every column has an unique ID

# starting from zero.

# CPU,Core,Socket,Node,,L1d,L1i,L2,L3

0,0,0,0,,0,0,0,0

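On current CPUs L1 and L2 are typically private to each core, while L3 is shared by the cores of a socket (the lscpu -p output above shows which caches each CPU uses). On a multi-CPU host the scheduler may move a process, such as a guest's qemu-kvm, between CPUs; after each move the process starts with a cold cache on the new CPU, which costs performance. To avoid this, pin the process to specific CPUs with taskset: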

[root@why data]# taskset --help

taskset (util-linux-ng 2.17.2)

usage: taskset [options] [mask | cpu-list] [pid | cmd [args...]]

set or get the affinity of a process

-p, --pid operate on existing given pid

-c, --cpu-list display and specify cpus in list format

-h, --help display this help

-V, --version output version information

The default behavior is to run a new command:

taskset 03 sshd -b 1024

You can retrieve the mask of an existing task:

taskset -p 700

Or set it:

taskset -p 03 700

List format uses a comma-separated list instead of a mask:

taskset -pc 0,3,7-11 700

Ranges in list format can take a stride argument:

e.g. 0-31:2 is equivalent to mask 0x55555555
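A Python 3 sketch of how taskset's list format maps to its mask format; cpu_list_to_mask and mask_to_cpu_list are hypothetical helpers. It reproduces the help text's example, where 0-31:2 equals 0x55555555, and the mask 03 corresponds to the list 0,1. On Linux, Python 3.3+ can also set affinity directly with os.sched_setaffinity(pid, cpus); for a guest you would pass the PID of its qemu-kvm process.

```python
# Sketch: convert between taskset's CPU list format and its hexadecimal mask.
def cpu_list_to_mask(cpu_list):
    """'0,3,7-11' or '0-31:2' (range with stride) -> integer affinity mask."""
    mask = 0
    for part in cpu_list.split(","):
        range_part, _, stride = part.strip().partition(":")
        start, _, end = range_part.partition("-")
        first = int(start)
        last = int(end) if end else first
        step = int(stride) if stride else 1
        for cpu in range(first, last + 1, step):
            mask |= 1 << cpu
    return mask


def mask_to_cpu_list(mask):
    """Integer affinity mask -> sorted list of CPU numbers."""
    return [cpu for cpu in range(mask.bit_length()) if (mask >> cpu) & 1]
```

For instance, hex(cpu_list_to_mask("0,3,7-11")) returns '0xf89', the mask equivalent of the taskset -pc example above.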

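Memory tuning

KSM (Kernel Samepage Merging)

Most current hardware is NUMA: each CPU manages a fixed portion of memory, and accessing its own local memory is faster than accessing memory attached to another CPU.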

[root@why data]# /etc/init.d/ksm start

Starting ksm: [ OK ]

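KSM periodically scans memory and merges pages whose contents are identical into a single shared copy-on-write page, reducing memory usage. Guests running the same OS and services contain many identical pages, so KSM works well on KVM hosts.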
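To see how much KSM is actually saving, you can read its counters under /sys/kernel/mm/ksm. A minimal Python 3 sketch; the helper names are hypothetical and a 4 KiB page size is assumed. Per the kernel's KSM documentation, pages_shared is the number of shared pages in use and pages_sharing is how many more sites share them, i.e. roughly how many pages were saved.

```python
# Sketch: estimate KSM memory savings from the sysfs counters.
import os


def read_ksm_counters(ksm_dir="/sys/kernel/mm/ksm"):
    """Read the KSM counters that exist; missing files are skipped."""
    counters = {}
    for name in ("run", "pages_shared", "pages_sharing", "pages_unshared"):
        path = os.path.join(ksm_dir, name)
        if os.path.exists(path):
            with open(path) as f:
                counters[name] = int(f.read().strip())
    return counters


def ksm_saved_bytes(counters, page_size=4096):
    """pages_sharing approximates the number of pages KSM has saved."""
    return counters.get("pages_sharing", 0) * page_size
```

After starting the ksm service, run ksm_saved_bytes(read_ksm_counters()) on the host a few times; the figure grows as KSM scans and merges guest memory.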

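Huge pages

Reduce swap usage by lowering /proc/sys/vm/swappiness. Setting it all the way to 0 is not recommended, because its effect under peak load is hard to predict.

KVM also supports memory overcommit on a host.

I/O tuning

Use virtio devices; the guest below already uses virtio-blk for its disk and virtio-net for its NIC: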

[root@why data]# ps -ef | grep kvm | grep virtio --color

qemu 2848 1 6 22:02 ? 00:05:20 /usr/libexec/qemu-kvm -name kvm-back -S -M rhel6.5.0 -enable-kvm -m 610 -realtime mlock=off -smp 1,sockets=1,cores=1,threads=1 -uuid e4430cc0-999b-f3dc-12da-79b18bc422c4 -nodefconfig -nodefaults -chardev socket,id=charmonitor,path=/var/lib/libvirt/qemu/kvm-back.monitor,server,nowait -mon chardev=charmonitor,id=monitor,mode=control -rtc base=utc -no-shutdown -device piix3-usb-uhci,id=usb,bus=pci.0,addr=0x1.0x2 -drive file=/data/kvm_back.qcow2,if=none,id=drive-virtio-disk0,format=qcow2,cache=none -device virtio-blk-pci,scsi=off,bus=pci.0,addr=0x4,drive=drive-virtio-disk0,id=virtio-disk0,bootindex=1 -drive if=none,media=cdrom,id=drive-ide0-1-0,readonly=on,format=raw -device ide-drive,bus=ide.1,unit=0,drive=drive-ide0-1-0,id=ide0-1-0 -netdev tap,fd=22,id=hostnet0,vhost=on,vhostfd=23 -device virtio-net-pci,netdev=hostnet0,id=net0,mac=52:54:00:49:8a:3b,bus=pci.0,addr=0x3 -chardev pty,id=charserial0 -device isa-serial,chardev=charserial0,id=serial0 -device usb-tablet,id=input0 -vnc 0.0.0.0:0 -k en-us -vga cirrus -device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x5

I/O scheduler

[root@why data]# cat /sys/block/sda/queue/scheduler

noop anticipatory deadline [cfq]

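The I/O scheduler is set per disk, and the name in brackets is the one in use. cfq (Completely Fair Queuing) keeps a separate I/O queue for each process; deadline is commonly used for databases; anticipatory pauses briefly after each read in anticipation of a nearby follow-up read, which favors sequential reads; noop is generally used for SSDs. As root you can switch schedulers at runtime by writing a name to the same file, e.g. echo deadline > /sys/block/sda/queue/scheduler.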
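A Python 3 sketch for reading the scheduler file programmatically; parse_scheduler and current_scheduler are hypothetical helpers. It relies only on the convention shown above that the active scheduler is the one in square brackets.

```python
# Sketch: parse /sys/block/<dev>/queue/scheduler into (active, available).
def parse_scheduler(text):
    available, active = [], None
    for token in text.split():
        if token.startswith("[") and token.endswith("]"):
            token = token[1:-1]
            active = token  # bracketed entry = scheduler in use
        available.append(token)
    return active, available


def current_scheduler(dev="sda"):
    with open("/sys/block/%s/queue/scheduler" % dev) as f:
        return parse_scheduler(f.read())[0]
```

For the output above, parse_scheduler returns cfq as the active scheduler and noop, anticipatory, deadline and cfq as the available ones.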

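Intel VT-x/EPT or AMD-V/RVI

KVM and OpenStack

OpenStack is a cloud management platform with no virtualization of its own: KVM provides the virtualization, and OpenStack simply manages KVM through the libvirt API. Changes to the XML file of a KVM guest created by OpenStack do not persist, because OpenStack regenerates the XML every time it starts the guest, much as Ambari manages configuration files. The files OpenStack creates live under /var/lib/nova/instances, and a copy of the XML also exists under /etc/libvirt/qemu.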